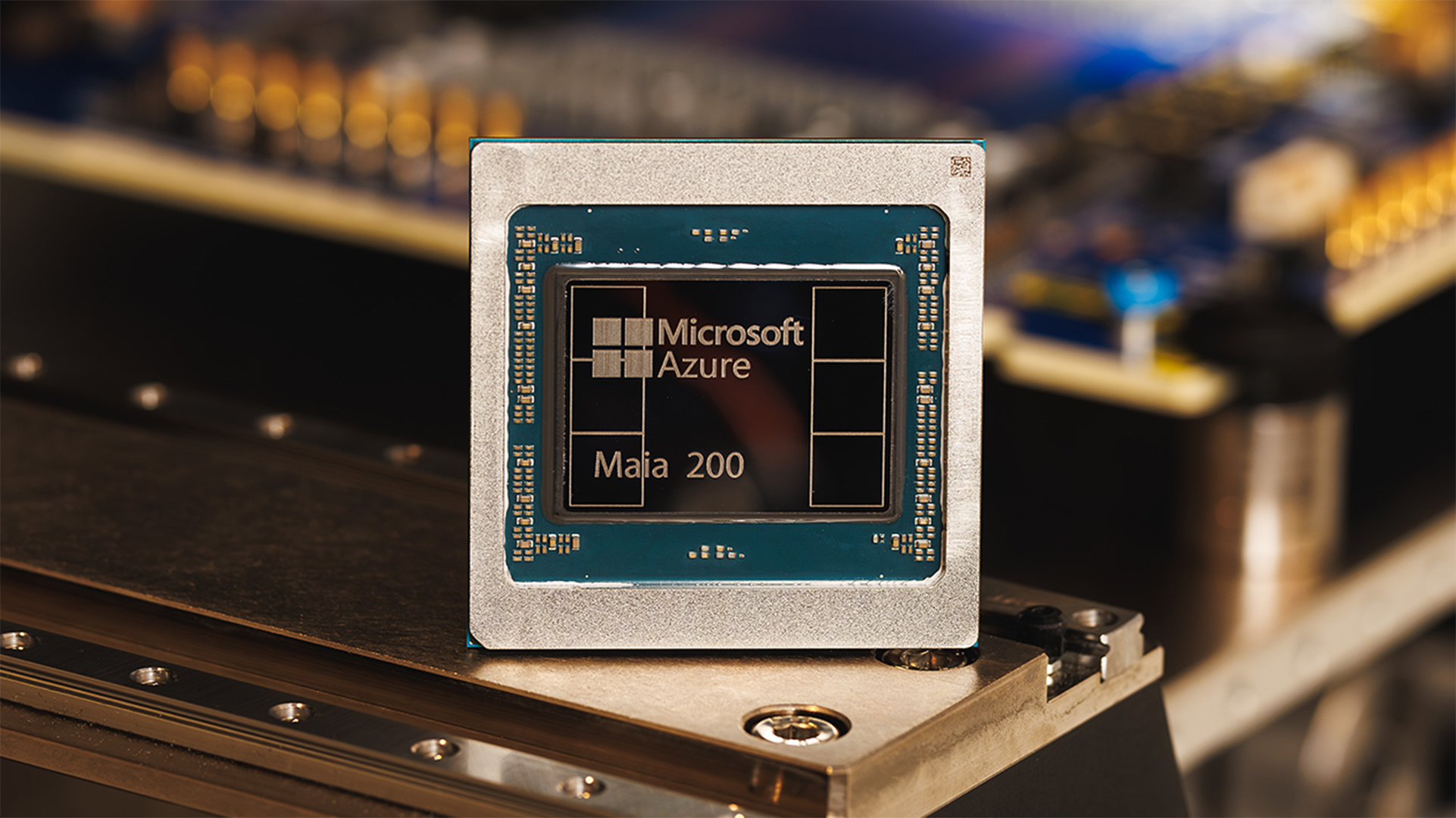

Microsoft unveils Maia 200 accelerator, claiming better performance per dollar than Amazon and Google

The launch of Microsoft’s second-generation silicon solidifies its mission to scale AI workloads and directly control more of its infrastructure

Microsoft has announced the launch of Maia 200, a next-generation AI accelerator intended to bolster its in-house inference capabilities.

Maia 200 is the latest AI accelerator in the tech giant’s Maia chip family, based on TSMC’s 3nm process, providing 10 petaflops at 4-bit precision (FP4) and approximately 5 petaflops at 8-bit precision (FP8).

This makes it a strong piece of hardware for AI inference. Microsoft claims it can run the largest frontier models with no issue and is ready for future model releases.

Microsoft compared the performance of Maia 200 favorably with competing hardware, claiming it delivers 3x the FP4 performance of Amazon’s Trainium3 and better FP8 performance than Google’s TPU v7, which clocks in at 4.61 petaflops.

As of today, Maia 200 is active in Microsoft’s US Central data center region, and the company revealed that its US West 3 region is next on the list.

How powerful is Maia 200?

Though raw computational performance is a strong benchmark for how well a chip can run AI models, data bandwidth is also a core concern.

It’s a particularly critical factor for enterprises seeking to run AI inference with as little latency as possible for critical workloads such as AI agents, as well as for delivering scalable AI services in the public cloud.

Maia 200 has 256GB of fifth-generation high-bandwidth memory (HBM3E), capable of 7TB/sec transfer speeds.

Microsoft engineers have also redesigned Maia 200’s memory subsystem to prioritize narrow-precision datatypes and retain core model weights and data to reduce overall data transfer between components in the inference process.

On a technical level, this meant implementing a new direct memory access (DMA) engine, a tailor-made network on chip (NoC) fabric, and 272MB of on-die static random access memory (SRAM) to enable high-bandwidth data transfer and keep weights next to processing units.
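To put the memory figures above in context, here is a rough back-of-envelope sketch of why narrow-precision datatypes matter for inference. It uses only the 256GB capacity and 7TB/sec HBM figures quoted in this article. The 400-billion-parameter model size, the function names, and the assumption of peak bandwidth are hypothetical and chosen for illustration; this is not Microsoft's methodology, and real-world throughput will depend on many other factors.

```python
# Back-of-envelope figures only. The chip numbers come from the article;
# the model size and helper names are hypothetical examples.
HBM_CAPACITY_GB = 256      # HBM3E capacity quoted in the article
HBM_BANDWIDTH_TBPS = 7.0   # HBM transfer speed quoted in the article (TB/sec)

BITS_PER_WEIGHT = {"FP16": 16, "FP8": 8, "FP4": 4}


def weight_footprint_gb(params_billion: float, precision: str) -> float:
    """Gigabytes needed to hold the model weights alone at a given precision."""
    bytes_per_weight = BITS_PER_WEIGHT[precision] / 8
    # (params_billion * 1e9 weights) * bytes_per_weight / 1e9 bytes per GB
    return params_billion * bytes_per_weight


def full_read_time_ms(footprint_gb: float) -> float:
    """Milliseconds to stream the weights once from HBM at peak bandwidth."""
    seconds = footprint_gb / (HBM_BANDWIDTH_TBPS * 1000)  # TB/sec -> GB/sec
    return seconds * 1000


if __name__ == "__main__":
    params_billion = 400  # hypothetical model size, not from the article
    for precision in BITS_PER_WEIGHT:
        gb = weight_footprint_gb(params_billion, precision)
        fits = gb <= HBM_CAPACITY_GB
        print(
            f"{precision}: {gb:.0f} GB of weights, "
            f"fits in HBM: {fits}, "
            f"one full read: {full_read_time_ms(gb):.1f} ms"
        )
```

Under these assumptions, only the FP4 version of the hypothetical model fits within a single accelerator's HBM, which illustrates why halving the bits per weight can matter as much as raw petaflops.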

Each Maia 200 accelerator is capable of 1.4TB/sec of scale-up bandwidth.

Hyperscalers expand custom silicon

Microsoft first announced the Maia 100 chip in November 2023, with the stated goal of powering services like Microsoft Copilot and Azure OpenAI Service in its datacenters, as well as running model training.

Microsoft is far from alone when it comes to reducing its reliance on third-party chip designs from AMD and Nvidia, as AWS leans heavily on its Trainium and Inferentia chips and the bulk of Google’s core AI workloads are completed using its tensor processing units (TPUs).

The Maia chip family is meant to supplement, rather than replace, Microsoft’s use of chips by AMD and Nvidia. But the inherent advantages of Maia 200 make it likely that in the near future, a greater percentage of Microsoft’s core workloads could run on its own silicon.

For example, the hyperscaler stressed that Maia 200 was painstakingly optimized for the Azure control plane and Microsoft’s proprietary cooling systems.

All this means Maia 200 can go from delivery to deployment at its data centers in just days, cutting the overall timeline on Microsoft’s internal AI infrastructure program in half.

On top of that, Maia 200’s improved energy efficiency is intended to lower the energy cost of running AI workloads across Azure.

To begin with, Microsoft’s Superintelligence team will be using Maia 200 to generate synthetic data and improve in-house AI models.

Starting today, developers, academics, open source contributors, and frontier AI labs can sign up for the Maia 200 software development kit (SDK) to optimize future workloads for the hardware.

Rory Bathgate is Features and Multimedia Editor at ITPro, overseeing all in-depth content and case studies. He can also be found co-hosting the ITPro Podcast with Jane McCallion, swapping a keyboard for a microphone to discuss the latest learnings with thought leaders from across the tech sector.

In his free time, Rory enjoys photography, video editing, and good science fiction. After graduating from the University of Kent with a BA in English and American Literature, Rory undertook an MA in Eighteenth-Century Studies at King’s College London. He joined ITPro in 2022 as a graduate, following four years in student journalism. You can contact Rory at rory.bathgate@futurenet.com or on LinkedIn.
