The tech industry reacts to the UK’s AI Growth Lab

The UK government is launching a sandbox for companies to test AI products. It could drive innovation, but if it’s not done right, then good ideas could stall

The UK is falling behind in the global artificial intelligence (AI) race and regulation could be largely to blame. Some 60% of businesses are reluctant to adopt AI because of policy and regulatory hurdles, according to the UK government’s Technology Adoption Review 2025. In October, the government set out its plan to establish an AI Growth Lab to drive AI adoption and make the UK more competitive.

The AI Growth Lab is effectively a sandbox in which UK businesses would be able to safely test their AI products under relaxed regulatory conditions and without the risk of incurring penalties and damaging their reputation and customer trust.

As technology secretary Liz Kendall explained at the launch of the initiative during the Times Tech Summit in October: “We want to remove the needless red tape that slows progress so we can drive growth and modernize the public services people rely on every day.”

Regulatory sandboxes aren’t revolutionary. The UK was a global pioneer of the sandbox model when the Financial Conduct Authority (FCA) set up a fintech sandbox in 2016. The most recent participant, AI fintech Eunice, was selected at the end of November to explore how to improve transparency in the UK’s crypto markets.

Last year, the Medicines and Healthcare products Regulatory Agency (MHRA) launched a sandbox for AI medical devices – the programme received £1m in funding in June this year. The Information Commissioner's Office also has a sandbox, past participants of which include age verification firm Yoti, which worked on how to keep young people safe online.

We asked members of the UK tech community for their reaction to the AI Growth Lab.

A cross-economy approach

The proposed AI sandbox will initially be used to cut bureaucracy and support innovative sectors that are regarded as critical to the UK’s modern industrial strategy, such as advanced manufacturing, financial services, life sciences, and professional and business services.


A cross-economy approach is "positive", Jane Smith, chief data and AI officer for EMEA at ThoughtSpot, tells ITPro, because “it means the government is listening to the complaints about [red tape] slowing things down, and trying to strike a balance between innovation and regulation.”

The government’s public call for views on how the AI Growth Lab should be structured asks whether it should be led by the government itself or by independent regulators. According to its blueprint, the former would be best suited for products that could be applied to different sectors, while the latter would be ideal for those designed for highly regulated sectors where greater insight is needed so that decisions don’t go unchecked.

Prioritizing the genuine innovators

Companies selected to participate in the AI Growth Lab could end up attracting 6.6 times the funding that they would otherwise, according to the blueprint. Participation will likely be open to all UK companies that can demonstrate innovation and have an AI-related product they want to bring to market but face regulatory barriers in doing so.

Jake Atkinson, head of growth at AI fintech MQube, agrees with the need to support “genuine innovation”, but also hopes that the government prioritizes applicants that can demonstrate “well-articulated compliance and strong potential for consumer benefit”.

There are some lessons that can be taken from the FCA’s fintech sandbox, Atkinson adds. For starters, testing opportunities should be open to companies of all sizes. “Allowing access to be dominated by large incumbents would be anti-competitive and risks stifling innovation by AI SMEs.”

Participants should also be given the “freedom to fail”. But “that freedom will become a liability without clear regulatory guidelines”. Any regulation needs to have “a strong ethical core to both the development and use of AI technology”, Atkinson warns.

Speeding up the approval process

Those companies eventually selected to be part of the AI Growth Lab will be able to test their solutions in live environments. For example, this could be a health startup exploring how AI can cut waiting times, or it could be an engineering firm deploying an AI inspection tool on a live site without being restricted by building regulations.

“These types of pilots would generate the kind of evidence regulators usually wait years for, so approvals move faster, and investors stop hesitating,” points out Camden Woollven, head of strategy and partnership marketing at GRC International Group, and previously group head of AI at compliance training provider GRC Solutions. “For most UK businesses, the appeal [of the AI Growth Lab] is simple: quicker clarity on what will pass scrutiny.”

Atkinson agrees that sandboxes are the perfect environment for experimenting and building guardrails. “It’s possible to test extensively and effectively using synthetic data, but nothing beats having real-world data that you can use to test, test, and test again,” he adds.

Making the UK more competitive

The government is hoping the AI Growth Lab will help put UK companies on a stronger footing to compete with their European counterparts.

This is good to hear, says Tom Lorimer, co-founder and CEO of AI research and development lab Passion Labs, which has worked with Aventur Wealth inside the FCA sandbox. “Done properly, it could finally give companies a direct, faster route to sign-off on AI deployments, especially in areas where UK regulation has a strong track record of slowing things down unnecessarily.”

But it also has to “be implemented with teeth”, with explicit timelines and transparent rules, “rather than turned into another Whitehall talking shop”, Lorimer stresses.

“The AI Growth Lab could easily become just another layer of administration dressed up as innovation. But the intent is right,” he adds. “We’re already seeing what meaningful support can unlock through the FCA sandbox. If the UK can replicate that model at scale, it will be a major step forward.”

The safety caveat

Once the AI Growth Lab is up and running, it should become a launchpad for businesses building AI products, helping them get to market more quickly.

However, just because a product appears safe when tested in a controlled environment such as a sandbox doesn’t mean it would be safe in the real world.

As Smith warns: “Making things safe in a sandbox is one thing, but how are we going to make things safe in the real world? Are we essentially beta testing in live production, where the live production environment is the real world? There's a risk that we could be seeing the appearance of safety over actual safety.”

Rich is a freelance journalist writing about business and technology for national, B2B and trade publications. While his specialist areas are digital transformation and leadership and workplace issues, he’s also covered everything from how AI can be used to manage inventory levels during stock shortages to how digital twins can transform healthcare. You can follow Rich on LinkedIn.

-

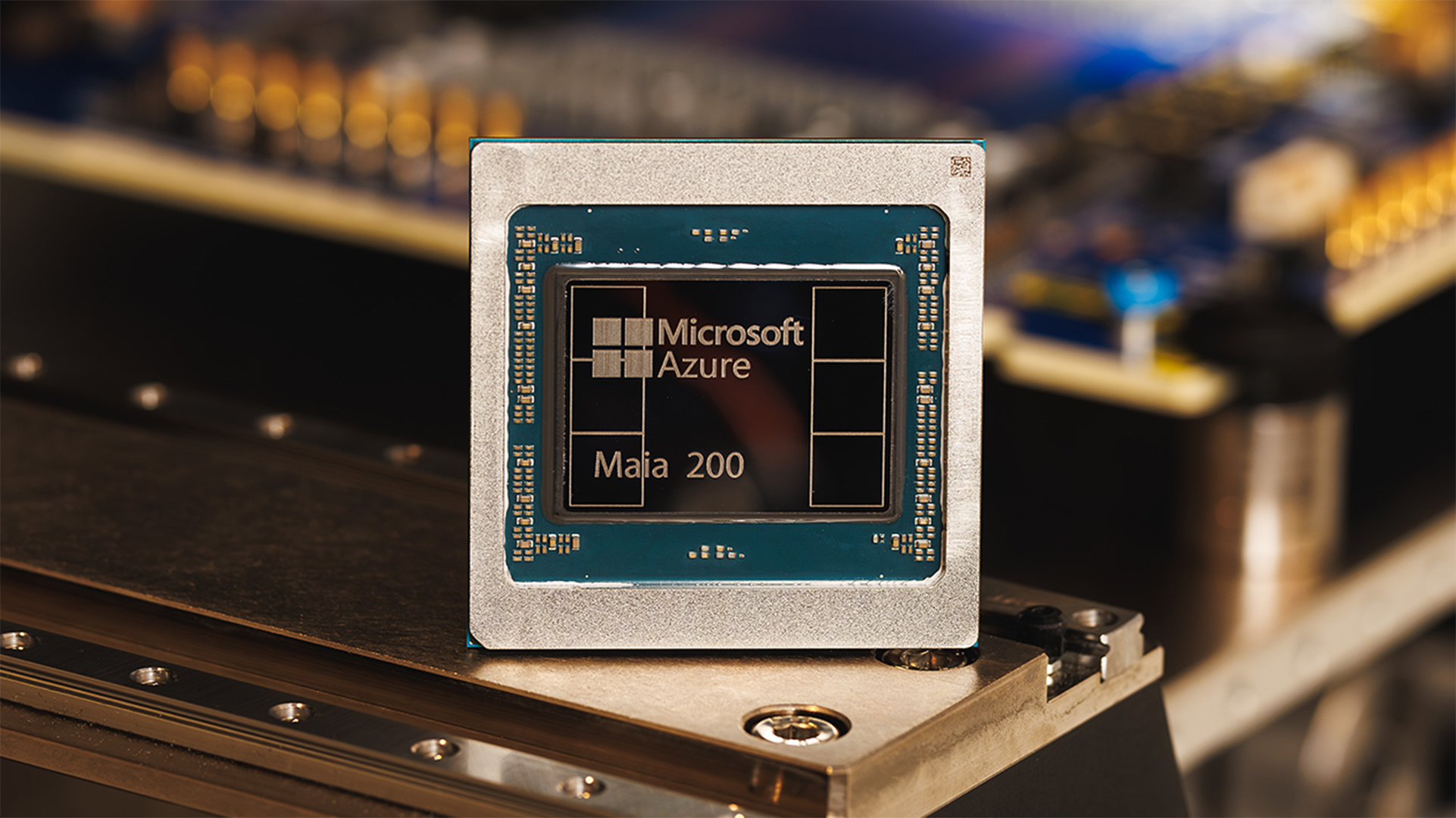

Microsoft unveils Maia 200 accelerator, claiming better performance per dollar than Amazon and Google

Microsoft unveils Maia 200 accelerator, claiming better performance per dollar than Amazon and GoogleNews The launch of Microsoft’s second-generation silicon solidifies its mission to scale AI workloads and directly control more of its infrastructure

-

Infosys expands Swiss footprint with new Zurich office

Infosys expands Swiss footprint with new Zurich officeNews The firm has relocated its Swiss headquarters to support partners delivering AI-led digital transformation