GoldenGate Transactional Data Management 9.0

Organisations have so much data today that they are struggling to make the best use of it. Data warehouses, business intelligence (BI), data mining, data consolidation - these are complex, time consuming and resource hungry tasks. The tools available are rarely off the shelf and until 2000, anyone braving this market would expect to pay substantial sums of money for software tools.

This is a series tool for serious database management. TDM9 is much easier to use than any other transformation tool on the market. It just lacks the sophistication in the toolset that is becoming commonplace even in commodity database products.

Organisations have so much data today that they are struggling to make the best use of it. Data warehouses, business intelligence (BI), data mining, data consolidation - these are complex, time consuming and resource hungry tasks. The tools available are rarely off the shelf and until 2000, anyone braving this market would expect to pay substantial sums of money for software tools.

When Microsoft released SQL Server 2000 with support for data warehouses and put BI at the heart of its data story, the market was rocked. Other database vendors, Oracle, Sybase and IBM soon followed suite and released more accessible tools at much lower prices. It seemed that the days of high cost tools were over.

The reality soon began to hit home. Manipulating gigabytes (GB), terrabytes (TB) even petabyes (PB) of data requires tools that are capable of doing complex data manipulation at very high speed. While the database vendors have continued to attack this market, the data tools vendors have continued to dominate the market.

GoldenGate is one such tool vendor whose Transactional Data Management v9 (TDM9) was recently released.

TDM9 is sold as part of a package which includes consultancy. Customers are provided with documentation and software on CDs but this is not something you can just buy off the shelf. There are several deployment models for TDM9 and the price you pay depends on the model that you adopt.

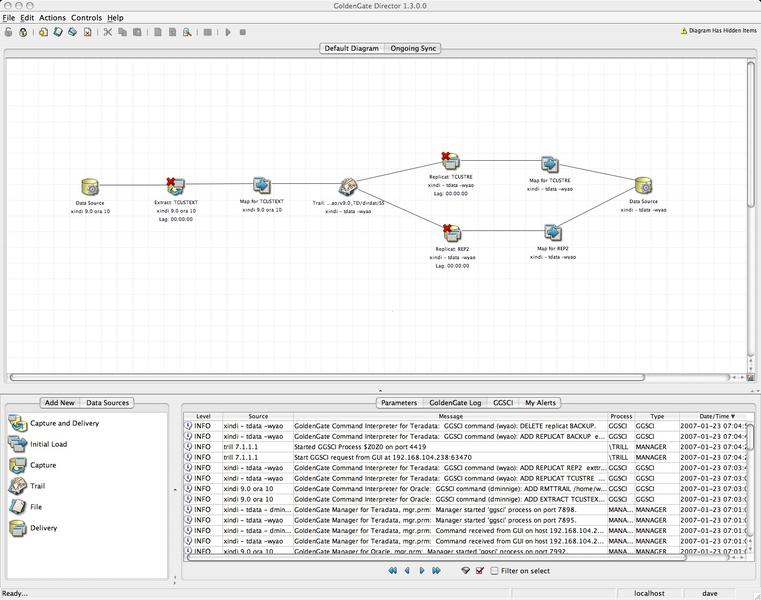

Installation of TDM9 is simple. Unzip the software into its directory, copy in the licence file and run the GoldenGate Command Line Interface (GGCLI) to configure and setup the software. For those who do not want to work with the CLI, there is a GUI tool - GoldenGate Director - that allows you to manage your deployments.

Director is a Java based tool and you will need to download the latest Java Virtual Machine from the Sun website in order to run Director. In order to use Director you need to login to it and users are assigned roles. Unfortunately, Director does not integrate to any Director Service or Domain logon database, instead using its own separate login and password database.

Sign up today and you will receive a free copy of our Future Focus 2025 report - the leading guidance on AI, cybersecurity and other IT challenges as per 700+ senior executives

Before installing GoldenGate you need to think through what you want to do with the data and spend time planning your architecture. The first run through of any GoldenGate solution has to run against the source database, after that it uses the transaction log. This significantly reduces the load on the database server.

In a nutshell, GoldenGate takes data from the transaction log, transforms it and sends it to a data pump. That pump transmits the data to a receiving data pump which then passes the data to a transformation engine. Further operations are carried out on the data, after which it is inserted into the target database.

It sounds simple and in essence it is. The complexity that often occurs depends on what you want to do with the data and which of the various GoldenGate models you choose to use.

Only when you look at the process in detail, do you get any real idea of the complexity of the software and what it is doing.

Capture: This is the first step. It takes the transaction log and selects the data you want. Only data that has been committed and is therefore a completed transaction will be used. You can then do a series of operations on that data to ensure that you are only selecting the data you really need rather than send the entire contents of the transaction log.

Route: There are two parts to the routing process, send and receive. The components used are called Data Pumps and Trails by GoldenGate. Selected data is placed into a Source Trail (queue) and sent using a Data Pump.

Enhance: The Data Pump knows where it is sending the data and can carry out additional transformations on the data such as encryption or translation from ASCII to EBCDIC. Data can be encrypted and for each destination you would define a separate Source Trail and Data Pump.

At the far end of the connection is a receiving Data Pump which takes the data, carries out any transformations at its end and passes it to the Target Trail.

Apply: The Target Trail passes the data to the Delivery Process. The data is then transformed to match the receiving database. The data is mapped to the database and then inserted.

All of this can take just a fraction of a second allowing for real-time delivery of data from several sources into a BI application.

Building this process requires careful planning and, if several sources are to be combined at the target or if the data is to be split into multiple targets after further transformation, significant testing.

GoldenGate Director provides a graphical interface through which the entire process can be planned. However it has some significant limitations. A lot of database tools allow you to transform a schema from one database to another. This is particular important where you are moving between databases from multiple vendors. Director does not compare schemas and identify where field types conflict.

There is no simulation tool inside Director. You cannot walk through a transaction in debug mode to see where it is going wrong. This is where significant testing is required for each transformation. This seems like a significant mistake. In a simple transformation where you are just extracting a subset of records and inserting them into a database for real-time reporting, there is little to worry about. However, if you are going to carry out any significant transformation of the data, then the ability to walk through the data, set check points and do real time debug is essential. If this can be done in simulation mode without impacting data then it at least provides a start point to speed up identification of problems.

If the source and target databases differ you need to build your own mapping rules. Should there be any changes to the target database then there is no validation process to show the changes. Instead, you need to rebuild all the mappings from scratch and then resync the source and target databases.

GoldenGate believes that in a properly managed environment, such changes will all be controlled through a change management process and so it should. However, to provide no way to carry out a validation seems to be a significant oversight. Software is coming under increasing scrutiny by regulators and IT departments have to show what has happened to data in order to meet compliance rules. This is where an automated validation process would be ideal.

GoldenGate expects customers to take consultancy as part of the evaluation process and use this to plan and develop their first application. With a typical sales cycle of around 6 months, it would appear that most customers are taking advantage of this.

However, the tools, particularly Director, do appear to be weak and compared to the level of functionality in many database tools today, it is easy to find fault with Director. Despite this, the target market for GoldenGate is experienced database administrators and database architects. This is who they sell to and these are the people who manage the database on a daily basis.

However, things change. The growth in the number and complexity of databases inside organisations shows no sign of abating and GoldenGate does need to take another look at the tools that are required to manage these environments.

Verdict

This is a series tool for serious database management. TDM9 is much easier to use than any other transformation tool on the market. It just lacks the sophistication in the toolset that is becoming commonplace even in commodity database products.

Supported database platforms: Oracle Microsoft SQL Server IBM DB2 OS/390 UDB Sybase Enscribe SQL/MP SQL/MX Teradata running on UNIX, Windows, and HP Nonstop platforms

-

Cybersecurity experts face 20 years in prison following ransomware campaign

Cybersecurity experts face 20 years in prison following ransomware campaignTwo men used their tech expertise to carry out ALPHV BlackCat ransomware attacks

By Emma Woollacott Published

-

UK firms aim for growth through AI investment but lack of talent is a concern

UK firms aim for growth through AI investment but lack of talent is a concernTech training and upskilling will be a major focus in 2026

By Emma Woollacott Published

-

Samsung unveils plan for AI transformation across all devices

Samsung unveils plan for AI transformation across all devicesBoth the DS and DX divisions of the business are profiting from the AI boom

By Jane McCallion Published