Presented by AMD

The role of the CPU in the AI era

The backbone of enterprise AI, CPUs are the unsung heroes of inference

In years to come, we will look back on this decade as the moment when AI became universal. Although artificial intelligence has been part of the IT industry for a long time, the rapid rise and adoption of generative AI is set to power organizations for the foreseeable future.

Much of this momentum comes from a change in focus. In recent years, the industry has moved from training large language models (LLMs) to inference: organizations are now deploying models at scale, building powerful infrastructure to meet demand, and innovating faster and more efficiently than ever before.

The shift is so significant that a recent Gartner survey found 91% of ‘high-maturity’ organizations had already appointed a dedicated AI leader or team. These businesses are looking to foster AI innovation, deliver AI infrastructure, and design AI architectures. Simply put, organizations are no longer just thinking about the potential of AI; they are actively testing it for themselves.

With AI now in the testing and deployment phase at most businesses, the main concern should be maximizing the value of existing infrastructure and services: essentially, getting the most out of what you have. By this point, most businesses will already have considered talent, training, risk assessments, and even most hardware requirements, so the next steps are about improvements and updates.

One area that should be a priority is inference, and particularly CPU-based AI inference. Server CPUs are the brains of the datacenter: a highly flexible form of compute that can handle AI processing while offering both energy and cost efficiency for high-volume workloads. They also let businesses get more out of their existing hardware for both general-purpose computing and AI inference, which saves costs across the board.

CPUs: The unsung heroes of AI

For AI workloads that require relatively few compute operations per inference, CPUs offer exceptional value. GPUs are an integral part of AI inference and, as such, rightly take most of the limelight. But CPUs should be seen as the unsung heroes of AI, especially for inference and lightweight applications. They power virtual assistants, enable real-time data analysis, and a whole lot more; put simply, the CPU is a versatile workhorse for AI.

CPUs are general-purpose computing engines and powerful, cost-effective solutions that integrate seamlessly with existing enterprise workloads. Beyond traditional machine learning, CPUs are also vital for generative AI: they can run language models efficiently and manage critical processing functions in AI pipelines.

Once a machine learning model is trained, it can be used to make predictions on new data; this is what’s meant by ‘inference’. For large volumes of data, organizations often use a method called ‘batch inference’ (also known as offline inference), an efficient way to generate predictions when immediate, real-time responses are not needed.

For this type of inference, CPUs are king. They offer cost-effective solutions for SMBs and edge deployments, and they excel in sectors like retail and manufacturing. They’re also used in healthcare, where they can power AI-enhanced applications such as medical transcription and drug discovery by extracting insights from medical texts.
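To make the batch (offline) inference pattern concrete, here is a minimal Python sketch under stated assumptions: the ‘trained model’ is a hypothetical linear scorer with made-up weights, and the names `predict_batch`, `batches` and `run_batch_inference` are illustrative, not any vendor’s API. What it shows is structural: because no user is waiting on an individual prediction, the job can walk through the whole dataset in fixed-size chunks and score each chunk in a single call.

```python
from typing import Iterable, Iterator, List, Sequence

# Hypothetical "trained model": a fixed linear scorer standing in for any
# model that has already been trained. Weights are illustrative only.
WEIGHTS = [0.5, -0.25, 1.0]
BIAS = 0.1


def predict_batch(batch: Sequence[Sequence[float]]) -> List[float]:
    """Score every record in one batch (the model is called once per batch)."""
    return [sum(w * x for w, x in zip(WEIGHTS, row)) + BIAS for row in batch]


def batches(records: Iterable[Sequence[float]], size: int) -> Iterator[List[Sequence[float]]]:
    """Yield fixed-size chunks of the input; the last chunk may be shorter."""
    batch: List[Sequence[float]] = []
    for row in records:
        batch.append(row)
        if len(batch) == size:
            yield batch
            batch = []
    if batch:
        yield batch


def run_batch_inference(records: Iterable[Sequence[float]], batch_size: int = 1024) -> List[float]:
    """Offline inference: nobody waits on a single prediction, so the whole
    dataset is processed chunk by chunk and all results are collected."""
    predictions: List[float] = []
    for batch in batches(records, batch_size):
        predictions.extend(predict_batch(batch))
    return predictions


if __name__ == "__main__":
    data = [[1.0, 2.0, 3.0], [0.0, 0.0, 0.0], [2.0, 4.0, -1.0]]
    print(run_batch_inference(data, batch_size=2))
```

In a real deployment the scorer would be a trained model loaded from storage, and the batch size would typically be tuned to the server’s cores and memory; both are left out of this sketch.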

As AMD senior product manager Priya Vasudevan writes in ‘Unlocking Enterprise AI: Why are CPUs the backbone!’, CPUs are extensively deployed across many industries.

“In cybersecurity, it powers spam detection (Naïve Bayes), intrusion detection, and malware classification (decision trees),” Vasudevan says. “In manufacturing, CPUs drive predictive maintenance and demand forecasting using decision trees and regression models. CPUs are also pivotal in retail, e-commerce, and streaming services, where they support recommendation systems using collaborative filtering, ranking, and other machine learning techniques.”
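Vasudevan’s spam-detection example uses Naïve Bayes, a model light enough to train and run comfortably on a CPU. The following is a minimal, illustrative sketch of a multinomial Naïve Bayes classifier with add-one (Laplace) smoothing in standard-library Python; the class name `NaiveBayesSpamFilter` and the four-message training set are hypothetical, and this is not AMD’s or any product’s implementation.

```python
import math
from collections import Counter
from typing import Dict, List, Tuple


class NaiveBayesSpamFilter:
    """Hypothetical multinomial Naive Bayes over word counts with add-one smoothing."""

    def fit(self, docs: List[Tuple[str, str]]) -> "NaiveBayesSpamFilter":
        # Count documents per label and word occurrences per label.
        self.doc_counts: Counter = Counter()
        self.word_counts: Dict[str, Counter] = {}
        for text, label in docs:
            self.doc_counts[label] += 1
            self.word_counts.setdefault(label, Counter()).update(text.lower().split())
        # Shared vocabulary across all labels, used for smoothing.
        self.vocab = set()
        for counts in self.word_counts.values():
            self.vocab.update(counts)
        self.total_docs = sum(self.doc_counts.values())
        return self

    def _log_score(self, words: List[str], label: str) -> float:
        counts = self.word_counts[label]
        total = sum(counts.values())
        # Log prior plus summed log likelihoods; add-one smoothing avoids log(0).
        score = math.log(self.doc_counts[label] / self.total_docs)
        for w in words:
            score += math.log((counts[w] + 1) / (total + len(self.vocab)))
        return score

    def predict(self, text: str) -> str:
        # Pick the label with the highest log posterior score.
        words = text.lower().split()
        return max(self.doc_counts, key=lambda label: self._log_score(words, label))


if __name__ == "__main__":
    train = [
        ("win cash prize now", "spam"),
        ("claim your free prize", "spam"),
        ("meeting moved to monday", "ham"),
        ("lunch on monday", "ham"),
    ]
    clf = NaiveBayesSpamFilter().fit(train)
    print(clf.predict("free cash prize"))  # spam
```

Working in log space avoids floating-point underflow when many small word probabilities are multiplied, and add-one smoothing stops a word never seen with a label from zeroing out that label’s score.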

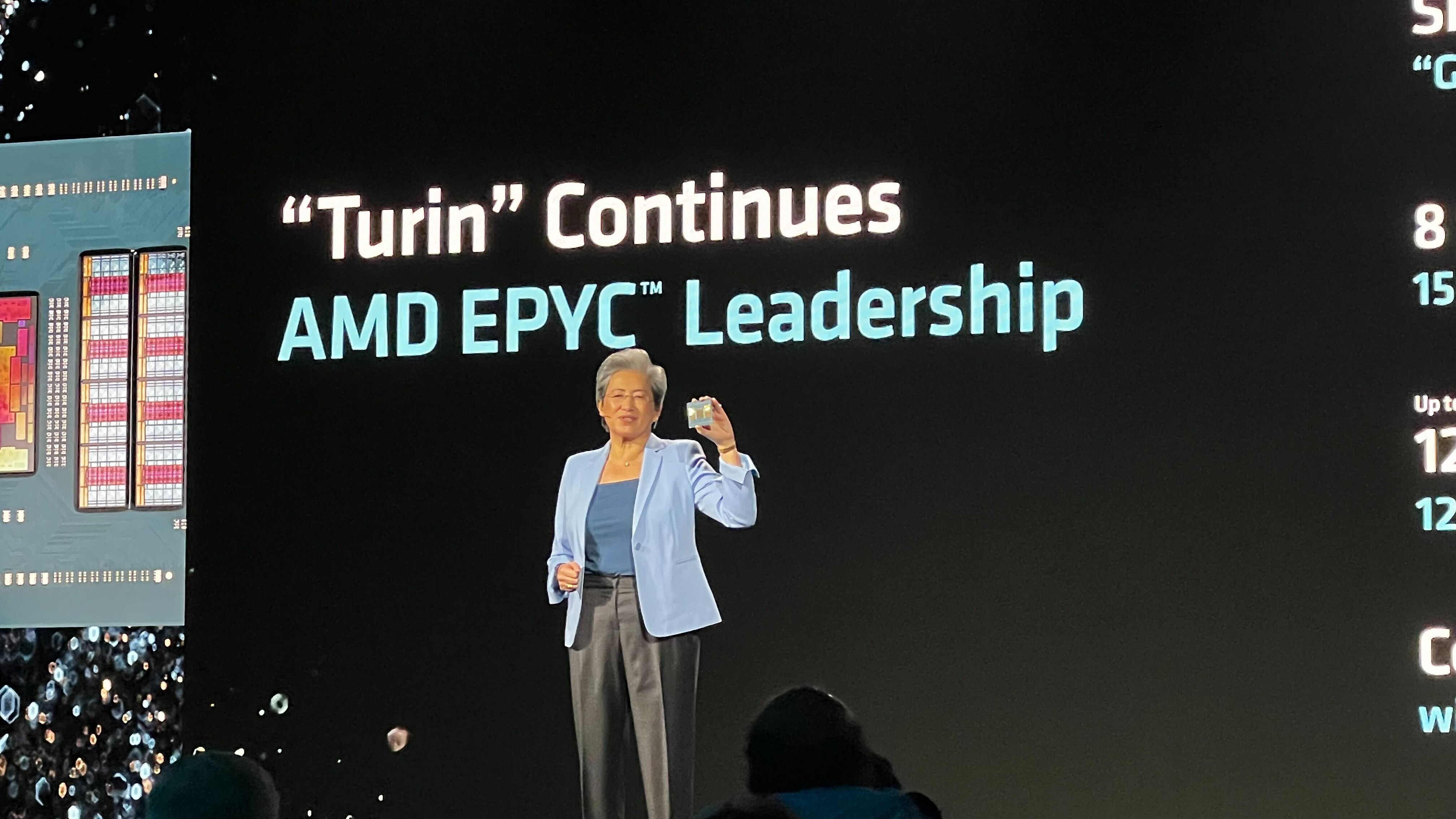

CPUs that will shine in our AI future

What organizations put in their datacenter servers matters, and the boom in AI has led to rapid advances in CPU architecture, with a number of high-profile chipmakers battling it out for market dominance.

Alvin Nguyen, a senior analyst at Forrester, noted that AMD is delivering a “comprehensive compute portfolio”, one designed to meet the evolving demands of enterprise AI. Nguyen suggests this is also reflected in the datacenter market share gains AMD has seen in both CPUs and GPUs.

“AMD offers a broad spectrum of data center CPUs, enabling organizations to align compute capabilities with specific AI model requirements,” Nguyen said. “AMD is aggressively expanding its footprint in the AI accelerator space, positioning its MI350 Series as a competitive alternative to NVIDIA’s latest offerings.”

Nguyen adds that AMD’s decision to publicly benchmark the MI350 Series against its previous generation, the MI300A/X, signals strong confidence in its own architecture and execution.

High-performance CPUs are key to unlocking the full potential of AI workloads. Upgrading to next-generation CPU systems with high core counts and large memory capacity will enable businesses to optimize AI performance and realize an AI-driven future.


Bobby Hellard is ITPro's Reviews Editor and has worked on CloudPro and ChannelPro since 2018. In his time at ITPro, Bobby has covered stories for all the major technology companies, such as Apple, Microsoft, Amazon and Facebook, and regularly attends industry-leading events such as AWS re:Invent and Google Cloud Next.

Bobby mainly covers hardware reviews, but you will also recognize him as the face of many of our video reviews of laptops and smartphones.
