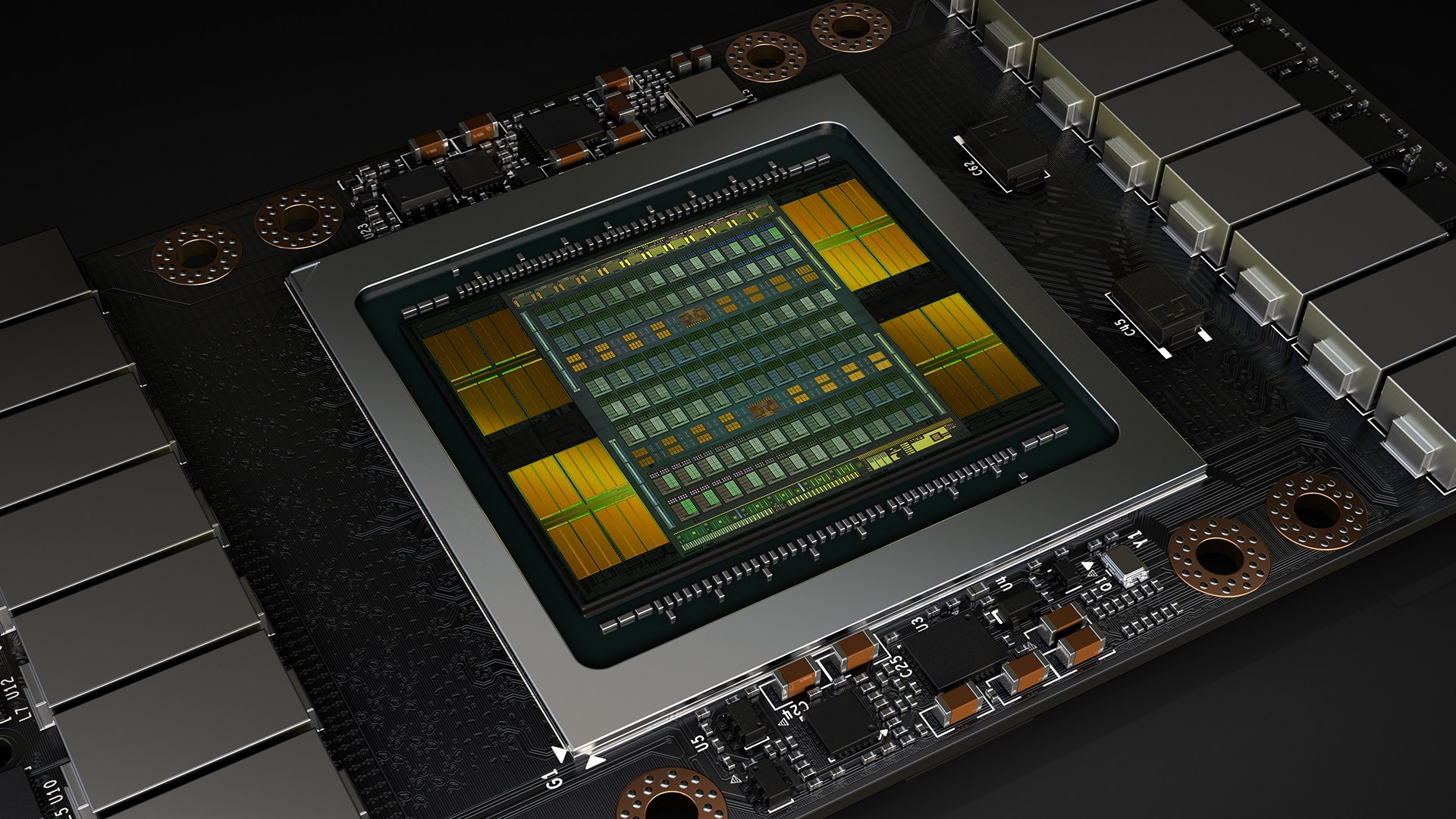

Nvidia unveils Volta GPUs designed to power next-gen AI

The company's new graphics units are said to be the equivalent of 100 CPUs

In an effort to compete against companies developing processing units optimised for use with AI, Nvidia has unveiled a new range of GPUs built on its next-generation architecture, known as Volta.

Nvidia, well regarded in the gaming industry for its market-leading graphics processors, has been overshadowed in the AI field by the likes of Google and its Tensor Processing Unit (TPU), a specialised chip for powering AI applications.

At its annual technology conference on Wednesday, Nvidia revealed its answer: the Tesla V100, built using its new graphical architecture, Volta.

"Deep learning, a groundbreaking AI approach that creates computer software that learns, has insatiable demand for processing power," said Jensen Huang, founder and CEO of Nvidia. "Thousands of NVIDIA engineers spent over three years crafting Volta to help meet this need, enabling the industry to realise AI's life-changing potential."

The chip boasts more than 21 billion transistors and 5,120 cores. Importantly for AI, it also features 640 Tensor Cores, which are designed to run the complex mathematical processes that power deep learning and neural networks. Together, these Tensor Cores can deliver 120 teraflops of performance, according to Nvidia.

This translates to a processing unit that is five times as powerful as Pascal, Nvidia's current graphical architecture. The Tesla V100 is capable of speeding up deep learning processing by as much as 12 times, and a single unit is the equivalent of 100 CPUs provided by the likes of Intel, the company said.

Nvidia still faces the problem that its GPUs, by their very nature, are built to power graphics, which demands considerably more computing power from the chip. This means that more specialised processing units will always be more efficient than GPUs. Google recently claimed its TPU chips were up to 30 times faster than modern GPUs and CPUs for deep learning inference, and up to 80 times more power efficient.

However, Nvidia could be on a winning streak with its GPUs, as demand from companies wanting to use Nvidia technology in datacentres has produced a 50% surge in revenue over the last quarter.

Nvidia also recently revealed that its Drive PX AI platform is to be integrated into Toyota's latest fleet of self-driving cars, which will be manufactured over the next few years. This is the latest in a string of deals with carmakers developing self-driving vehicles, with Nvidia having already partnered with Audi and Mercedes on their own autonomous vehicle ranges.

Picture courtesy of Nvidia

Dale Walker is a contributor specializing in cybersecurity, data protection, and IT regulations. He was formerly managing editor at ITPro, as well as its sibling sites CloudPro and ChannelPro. He spent a number of years reporting for ITPro from numerous domestic and international events, including those held by IBM, Red Hat, and Google, and has been a regular reporter at Microsoft's various yearly showcases, including Ignite.

-

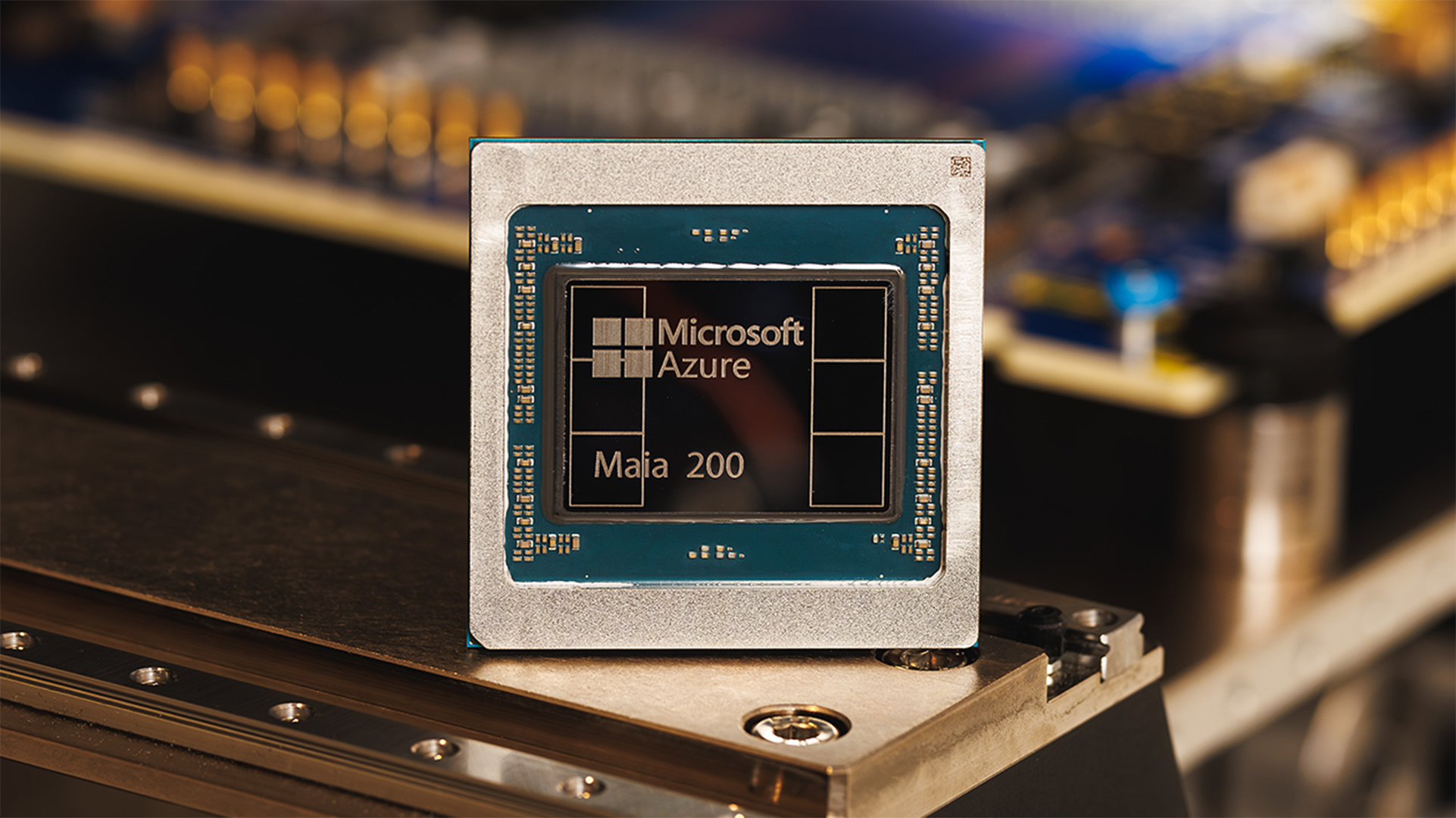

Microsoft unveils Maia 200 accelerator, claiming better performance per dollar than Amazon and Google

Microsoft unveils Maia 200 accelerator, claiming better performance per dollar than Amazon and GoogleNews The launch of Microsoft’s second-generation silicon solidifies its mission to scale AI workloads and directly control more of its infrastructure

-

Infosys expands Swiss footprint with new Zurich office

Infosys expands Swiss footprint with new Zurich officeNews The firm has relocated its Swiss headquarters to support partners delivering AI-led digital transformation