Welcome to ITPro's coverage of AMD Advancing AI 2025, coming to you live from the San Jose McEnery Convention Center. Hundreds of delegates are expected to fill the auditorium today to hear AMD CEO Lisa Su reveal the company's latest strategic plans for AI.

Su is expected to take to the stage at 9.30am PT and, with her keynote slated to last for two hours, we can undoubtedly expect guests from both AMD and its partners to grace the stage.

With a little under two hours to go before Lisa Su's keynote starts, it's worth reflecting on what happened at the company's last Advancing AI conference, which took place only eight months ago, in October 2024.

At that event, Su launched the enterprise-focused Ryzen AI Pro 300 Series CPU range, revealed the Pensando Selina data processing unit, and announced the arrival of Instinct MI325x accelerators. It's unlikely that the company has invited customers, partners, and press to hear updates on these products, but it could indicate the direction of travel.

At Computex in June 2024, Su announced the company was moving to an annual release cadence for its GPUs. With the Instinct MI325x accelerator series having launched at the last Advancing AI, we could see an update in this area.

The auditorium is starting to fill up at the San Jose McEnery Convention Center as we wait for Lisa Su to take to the stage for her keynote address this morning.

And we are underway with an opening video – apparently the key for the AI future is trust.

And here is the woman of the hour, Dr Lisa Su, CEO of AMD.

"I'm always incredibly proud to say that billions of people use AMD technology every day. Whether you're talking about services like Microsoft Office 365 or Facebook or Zoom or Netflix or Uber or Salesforce or SAP and many more, you're running on AMD infrastructure," says Su. "In AI, the biggest cloud and AI companies are using Instinct to power their latest models and new production workloads. And there's a ton of new innovation that's going on with the new AI startups."

As with many companies now – including partner Dell and rival Nvidia – AMD is talking up AI agents and their (positive) impact on hardware.

Su says that agentic AI represents "a new class of user".

"What we're actually seeing is we're adding the equivalent of billions of new virtual users to the global compute infrastructure. All of these agents are here to help us, and that requires lots of GPUs and lots of CPUs working together in an open ecosystem," she says.

AI isn't just a cloud or data center question now, it's also an endpoint question, and particularly the PC. "We expect to see AI deployed in every single device," she says.

Su hits on another theme that's becoming popular among many enterprise hardware providers: open source and openness.

"AMD is the only company committed to openness across hardware, software and solutions," Su claims. "The history of our industry shows us that time and time again, innovation truly takes off when things are open."

"Linux surpassed Unix as a data center operating system of choice when global collaboration was unlocked," she continues. "Android's open platform helped scale mobile computing to billions of users, and in each case, openness delivered more competition, faster innovation and eventually a better outcome for users. And that's why for us at AMD, and frankly, for us as an industry, openness shouldn't be just a buzzword. It's actually critical to how we accelerate the scale adoption and impact of AI over the coming years."

Su is now joined on stage by her first guest, Xia Sun of xAI, to talk about how the company behind Grok is working with AMD.

Sun focuses on how his small team is being bolstered by AMD hardware like MI300x and – of course – openness.

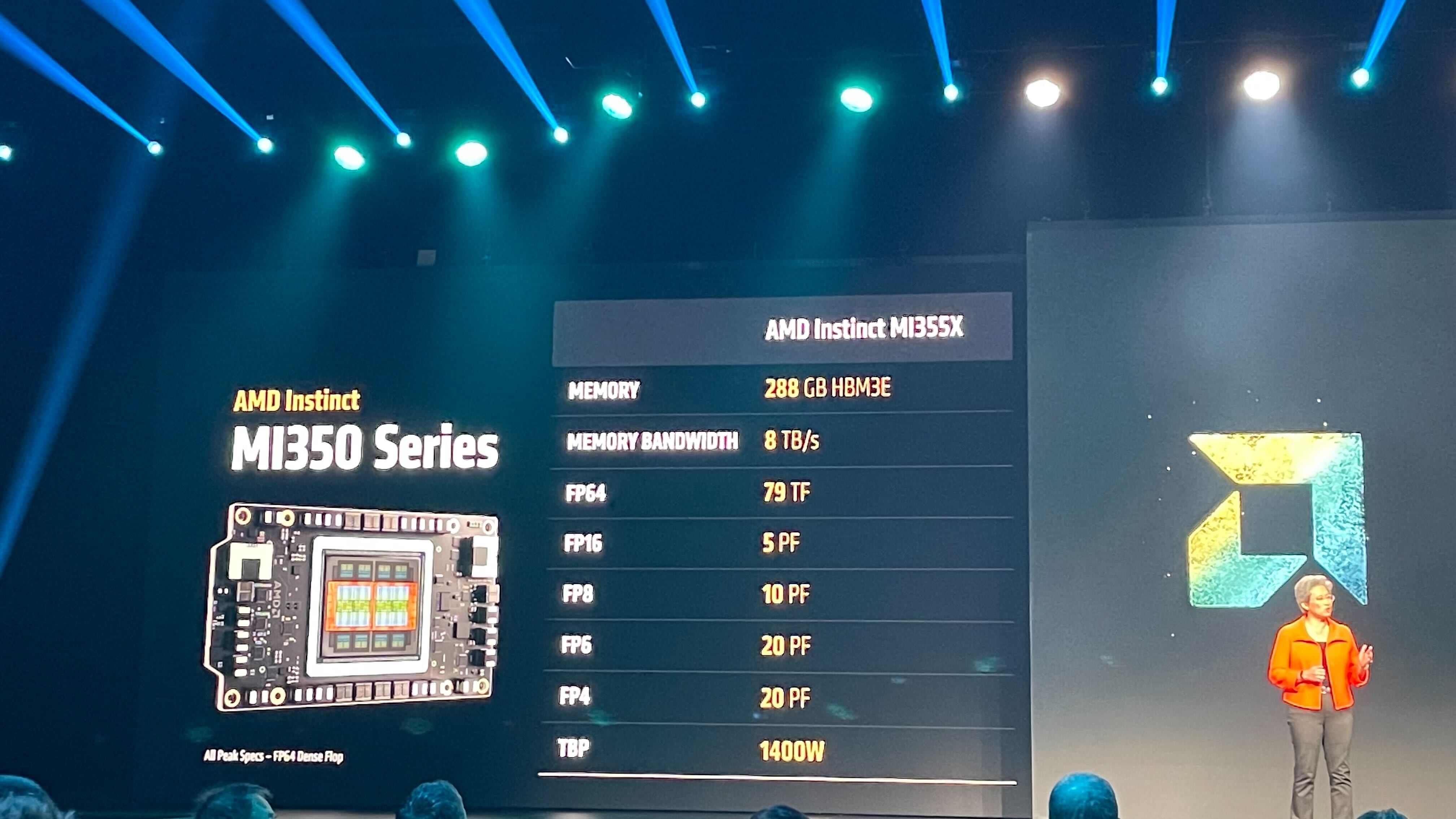

Sun leaves the stage and now we have our first product announcement, the AMD Instinct MI350 Series.

"With the MI350 series, we're delivering the largest generational performance leap in the history of Instinct, and we're already deep in development of MI400 for 2026," says Su.

"Today, I'm super excited to launch the MI350 series, our most advanced AI platform ever that delivers leadership performance across the most demanding models," she says, adding that while AMD will talk about the MI355 and the MI350 "they're actually the same silicon, but MI355 supports higher thermals and power envelopes so that we can even deliver more real world performance."

Su claims that the MI350 Series "delivers a massive 4x generation leap in AI compute to accelerate both training and inference". Some of the key claimed specs are:

- 288GB memory

- Running up to 20 billion parameters on a single GPU

- Double the FP throughput compared to competitors

- 1.6x more memory than competitors

(These are AMD claims that haven't been independently verified by ITPro)

And now another guest: Yee Jiun Song, Meta VP of engineering – Meta, of course, being the force behind the Llama LLM.

"We're seeing critical advancements that started with [inferencing] and [are] now extending to all of your AI offering," says Song. "I think AMD and Meta have always been strongly aligned on ... neural net solutions."

"We troubleshoot problems together, and we're able to deploy optimized systems at scale. So our team really sees AMD as a strategic and responsive partner and someone that we really like. Well, we love working with your engineering team. We know that we love the feedback, and you also hold the high standard. You are one of our earliest partners in AI," he adds.

Song explains that MI350 will become a key part of the company's AI infrastructure (it's already using MI300x accelerators).

"We're also quite excited about the capabilities of MI350x," he says. "It brings significantly more compute power and acceleration memory and support for FP4, FP6, all while maintaining the same form factor as MI300, so we can deploy quickly."

AMD lists some of the Instinct MI350 Series partners that will have products coming to market with the chips in Q3 2025, including Oracle, Dell Technologies, HPE, Cisco, and Asus.

We're going from hardware to software, now, with Su saying this is "really [what] unlocks the full potential of AI". She leaves the stage and Vamsi Boppana, SVP of AI at AMD, takes the metaphorical mic to talk about ROCm.

"We have been relentlessly focused on what matters most, making it easy for developers to build better out of the box capabilities, easy setup, more collateral, stepping up community engagements," he says.

"We've been running hackathons, contests, meetups and more, and our customers are deploying AI capabilities at unprecedented pace, and that's why we've significantly accelerated our release cadence," he continues. "New features and optimizations are now shipping every two weeks. Leading models like Llama and DeepSeek work on day zero."

He also points out AMD's partnerships with Hugging Face, PyTorch, OpenAI Triton, and JAX.

Now for his announcement: ROCm 7. This, he says, is "bringing exciting new capabilities to address ... emerging trends, and brings support for our MI350 series of GPUs".

"[It] introduces the latest algorithms, advanced features like distributed inference, support for large scale training and new capabilities that make it easy for enterprises to deploy AI effortlessly within ROCm 7," he says, adding that inference has been "the largest area of focus [and] we've innovated and invested at every layer of inference".

Boppana now invites his own guest to the stage: Eric Boyd, CVP of AI platforms at Microsoft.

The two are discussing Microsoft and AMD's longstanding partnership, with Boyd saying Microsoft has been using "several generations of Instinct".

"It's been a key part of our inferencing platform, and we've integrated ROCm to our inferencing stack, making it really easy for us to take and deploy the models on the platform," he says.

Boppana is now talking about what he describes as a new opportunity for driving down inference costs: distributed inference.

"In any LLM serving application, there are two phases. There's the prefill phase and there's the decode phase," explains Boppana. "These two phases of the model are typically handled on the same GPU, but now if you [use] the same GPU, it often becomes a bottleneck for large models, or when demand spikes happen, and you can get limited in performance or flexibility."

Disaggregating the prefill and decode phases, so that each runs on separate GPUs, can "significantly improve throughput, reduce cost, and boost responsiveness", he says.
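To make the idea concrete, here is a minimal toy sketch of disaggregated serving in Python. It is not ROCm code and does not reflect AMD's implementation: the `Request`, `PrefillWorker`, `DecodeWorker` and `serve` names are all hypothetical, and the "workers" only update counters rather than running a model. It simply illustrates prefill and decode being handled by separate pools with a hand-off in between.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Request:
    request_id: str
    prompt_tokens: int          # processed in one pass during prefill
    output_tokens: int          # generated one at a time during decode
    kv_cache_ready: bool = False
    generated: int = 0


class PrefillWorker:
    """Hypothetical worker that only runs the prefill phase."""

    def run(self, request: Request) -> Request:
        # A real system would process the prompt and build the KV cache here.
        request.kv_cache_ready = True
        return request


class DecodeWorker:
    """Hypothetical worker that only runs the token-by-token decode phase."""

    def step(self, request: Request) -> bool:
        if not request.kv_cache_ready:
            raise RuntimeError("decode requires a completed prefill")
        request.generated += 1
        # True once the request has produced all of its output tokens.
        return request.generated >= request.output_tokens


def serve(requests, prefill_worker, decode_worker):
    """Run prefill and decode as separate stages joined by a queue."""
    prefill_queue = deque(requests)
    decode_queue = deque()
    finished = []
    while prefill_queue or decode_queue:
        # Prefill stage: hand the request over to the decode pool when done.
        if prefill_queue:
            decode_queue.append(prefill_worker.run(prefill_queue.popleft()))
        # Decode stage: one token per turn, round-robin across requests.
        if decode_queue:
            request = decode_queue.popleft()
            if decode_worker.step(request):
                finished.append(request.request_id)
            else:
                decode_queue.append(request)
    return finished


order = serve(
    [Request("long", prompt_tokens=100, output_tokens=3),
     Request("short", prompt_tokens=10, output_tokens=1)],
    PrefillWorker(),
    DecodeWorker(),
)
print(order)
```

In this toy run the short request finishes before the long one, because decode turns are shared while prefill proceeds independently. In a real deployment the two pools would sit on different GPUs and the hand-off would involve transferring the KV cache between them.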

Next up: ROCm Enterprise AI. "ROCm Enterprise AI makes it easy to deploy AI solutions. With new cluster management software, it ensures reliable, scalable and efficient operation of AI clusters," says Boppana.

"Our MLOps platform allows fine tuning and distillation of models with your own data, and a growing catalog of models will come for specific industries," he adds.

Another announcement from Boppana: AMD Developer Cloud and Developer credits. All developers who are here will have a credit voucher in their email inbox right now, he says, which elicits cheers from the assembled crowd.

Boppana hands over to Anush Elangovan, CVP of software development, and Sharon Zhou, CVP of AI. Elangovan channels Steve Ballmer: "Developers! Developers! Developers!" to yet more cheers – although he stops after just three.

"We are serious about bringing ROCm everywhere and to everyone, from client to the cloud," he continues. "In AI, speed is your moat. Access to compute is paramount. We've been delivering on speed, so now let's get you access to compute."

"With the developer cloud, anyone with a GitHub ID or an email address can get access to an instant GPU with just a few clicks," he says, directing developers who want to give it a go to dev.amd.com.

"We've also included a lot of easy to use frameworks like vLLM, SGLang, PyTorch, etc," he says. "You just select one of those frameworks, add your SSH key and then create and you're set."

Zhou briefly encourages developers in the crowd to "come say hi" at hackathons, university labs and elsewhere, before handing back to Boppana, who reveals the next of today's announcements: the ability to access ROCm 7 on Windows or Linux, on device or on client.

Boppana hands over to Forrest Norrod, EVP and GM of data center solutions, who is underlining the importance of agentic AI for AMD, as well as the performance improvements of fifth-generation Epyc CPUs.

He also talks up what AMD says is the continued importance of CPUs.

"Some will naively tell you that CPUs are less important in the age of AI, but that's not correct," he says. "With agentic AI, we see an explosion of autonomous agents accessing data and enterprise applications. This increases the need for efficient, high performance x86 compute across the data center. Then, within the AI server itself, the CPU serves the demands of pre-processing and workload orchestration to keep the GPUs working efficiently."

"Let me show you a few examples of how the right CPU can make GPUs work better," he says. "Choosing poorly can create bottlenecks and strand valuable resources. As you can see across a range of models and use cases, our fifth generation Epyc CPUs can boost the inference performance of the entire system from 6 to 17%. That makes a huge impact on the overall TCO and performance of the AI deployment, and it's a critical element in designing the best possible AI system. So get much more out of your GPUs with the right CPU."

Lisa Su is back on stage to give the audience "a first look at the next big step for [AMD's] AI roadmap, the MI400 series". She quickly pivots from there to introduce Helios AI Rack.

"Think of Helios as really a rack that functions like a single, massive compute engine," Su says. "It connects up to 72 GPUs with 260 terabytes per second of scale up bandwidth. It enables 2.9 exaflops of FP4 performance, and that is a great number."

Naturally, Su also takes a moment to make comparisons with AMD's competition, saying Helios supports "50% more HBM ... memory bandwidth, and scale out bandwidth".
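For a rough sense of scale, the rack-level figures Su quotes can be divided across the 72 GPUs. The Python sketch below assumes the totals split evenly across every GPU, which is an illustrative simplification rather than a per-GPU specification published by AMD; the constant and function names are made up for this example.

```python
# Rack-level figures quoted in the keynote.
GPUS_PER_RACK = 72
SCALE_UP_TBPS = 260          # terabytes per second of scale-up bandwidth
FP4_EXAFLOPS = 2.9           # exaflops of FP4 performance


def per_gpu_share(rack_total: float, gpu_count: int = GPUS_PER_RACK) -> float:
    """Assumption: the rack total divides evenly across all GPUs."""
    return rack_total / gpu_count


bandwidth_per_gpu_tbps = per_gpu_share(SCALE_UP_TBPS)
fp4_per_gpu_petaflops = per_gpu_share(FP4_EXAFLOPS * 1000)  # 1 exaflop = 1,000 petaflops

print(f"Scale-up bandwidth per GPU: ~{bandwidth_per_gpu_tbps:.1f} TB/s")
print(f"FP4 performance per GPU: ~{fp4_per_gpu_petaflops:.0f} petaflops")
```

Under that even-split assumption, each GPU accounts for roughly 3.6 TB/s of scale-up bandwidth and about 40 petaflops of FP4 performance.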

And now, a surprise guest, "someone who's really an icon in AI", says Su: Sam Altman, CEO of OpenAI.

Altman talks about what his company is seeing in the market.

"I think the models have gotten good enough that people have been able to build really great products, text, images, voice, all kinds of reasoning capabilities," he says.

"We've seen extremely quick adoption in the enterprise now, coding has been one area people talk a lot about, but I think what we're hearing again and again in all these different ways, is that these tools have gone from things that were, you know, fun and curious to truly useful," he says.

Asked by Su how compute demands are changing, Altman says: "One of the biggest differences has been [that] we've moved to these reasoning models. So we have these very long rollouts where a model will often think and come back with a better answer.

"This really puts the pressure on model efficiency and long context. For all this, we need tons of compute, tons of memory, tons of CPUs as well. And our infrastructure ramp over the last year and what we're looking at over the next year has just been a crazy, crazy thing to watch."

Turning to the hardware used to support OpenAI's platforms like ChatGPT, Altman says the company is already running some work on the MI300x, "but we are extremely excited for the MI450, the memory architecture is great for inference. It can be an incredible option for training as well".

Su asks Altman what he sees in the future for AI. "How will the workloads evolve? What happens with – quote, unquote – AGI (artificial general intelligence)?" she asks.

"I think we're going to maintain the same rate of progress [in] models for the second half of the decade as we did for the first," Altman responds. "I wasn't so sure about that a couple of years ago [as there were] new research things to figure out, but now it looks like we'll be able to deliver on that."

"So if you think forward to 2030 ... these systems will be capable of remarkable new stuff, about scientific discovery, running extremely complex functions throughout society, and things that we just couldn't even imagine," he continues. "It's really going to take ... these are huge systems now, very complex engineering projects, very complex research, and to keep on this [path of] scaling, we've got to work together across research, engineering, hardware, how we're going to deliver these systems and products.

"This has gotten quite complex, but if we can deliver on that, [if we can] drive collaboration across the whole industry, we will keep this curve going," he concludes.

Altman leaves the stage and Su delivers some final thoughts to the audience.

"When I think about this past year, it's really redefined what progress in AI looks like. It's really moved at a pace unlike anything that we have seen in modern computing ... anything that we've seen in our careers, and, frankly, anything that we've seen in our lifetime," Su says.

"The future of AI is not going to be built by any one company or in a closed ecosystem. It's going to be shaped by open collaboration across the industry. It's going to be shaped because everyone is bringing their best ideas, and it's going to be shaped because we're innovating together. So on behalf of all of us at AMD, we look forward to changing the world with you together," Su concludes, and with that the keynote is over.