Network Monitoring: What every admin should be looking out for

We discuss the importance of knowing what's happening on your network and give some tips on how to do it effectively

If you have a business, it's almost certain that you have a network. Connected to it will be any number of boxes of tricks and not all of them will be perfectly known to you. These days, anything from a server to a doorbell, from an air-conditioning controller to a million-dollar MRI machine, could be going online and doing heaven knows what.

We've become accustomed to this. Much like "the cloud", the network has become an unremarkable part of the fabric of your building. At least, it ought to be unremarkable but, as regulators and insurers have realised, risks and limitations abound, and it's crucial to ensure that your network is safe, reliable and properly monitored.

Unfortunately, this is very much a case of easier said than done. If you are a new entrepreneur, outgrowing the limits of Wi-Fi or staring at upgrade quotes in disbelief, the situation can seem impossibly daunting. Then again, at the other end of the scale, I've encountered naive souls who assumed that all networks come with monitoring somehow built in.

The onus of keeping an eye on your network isn't new, but it's a more complicated issue than it used to be: compliance rules make no distinction between on-premise and in-cloud architectures, and many standards date from a few years ago, which can complicate things further.

What are you looking for?

From an administrator's perspective, there are two key concerns when it comes to network monitoring: space and time. This may seem grandiose, but when you think about it, a full network monitoring process is in effect a shadow network, containing details of all the files users create and store, plus all internet traffic. There's a good chance that the data repository is going to be the biggest, most demanding server in your business.

I said there were two key concerns but, as in general relativity, space and time can be strangely equivalent. Having more space means you can store logs for longer, and analyse them for a wider variety of trends and portents. This can be tremendously valuable, as monitoring isn't just about looking out for suspicious connections, such as HTTP packets going to somewhere in China. The present-day threats are more subtle and may only show their true colours over extended periods of time. If someone manages to install a remote-control app on a machine on your network, they might well exploit it only cautiously and slowly, over the course of days or weeks.
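To see why a longer window matters, here is a minimal sketch. It assumes your logs have already been boiled down to (date, source, destination, bytes) records, which is a made-up format for illustration rather than the output of any particular tool, and the thresholds are arbitrary. It flags pairs of machines that talk on many separate days while never moving much data on any one of them.

```python
from collections import defaultdict
from datetime import date

def slow_talkers(records, min_days=5, max_daily_bytes=50_000):
    """Return sorted (src, dst) pairs seen on at least min_days distinct days
    whose busiest single day stayed at or below max_daily_bytes."""
    daily = defaultdict(lambda: defaultdict(int))  # (src, dst) -> day -> bytes
    for day, src, dst, nbytes in records:
        daily[(src, dst)][day] += nbytes
    flagged = []
    for pair, per_day in daily.items():
        if len(per_day) >= min_days and max(per_day.values()) <= max_daily_bytes:
            flagged.append(pair)
    return sorted(flagged)

# Hypothetical sample data: one quiet daily connection, one big one-off transfer.
records = [(date(2025, 3, d), "10.0.0.12", "203.0.113.7", 4_000) for d in range(1, 8)]
records.append((date(2025, 3, 2), "10.0.0.15", "198.51.100.9", 900_000_000))

print(slow_talkers(records))       # a week of logs: the quiet pair is flagged
print(slow_talkers(records[:3]))   # only three days of logs: nothing to see
```

With a week of records the quiet pair stands out; with only three days of history the same traffic slips under the threshold, which is the argument for keeping logs longer.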

Most administrators, therefore, aspire to keep a couple of weeks of logs, or perhaps a month if they're masters of their own budget. Regulators and inspectors may demand multi-year archives, but the larger the repository, the longer it takes to ask even simple questions, so most pros try to strike a balance.
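The space side of that balance is easy to estimate on the back of an envelope. The sketch below assumes a steady average traffic rate and an optional fraction of it that you actually retain; the 100Mbit/s figure and the 1% metadata-only fraction are illustrative assumptions, not measurements from a real network.

```python
def retention_bytes(avg_mbit_per_s, days, capture_fraction=1.0):
    """Approximate storage needed to keep `days` of captured traffic.

    avg_mbit_per_s   -- assumed average traffic rate in megabits per second
    capture_fraction -- share of the raw traffic actually stored (1.0 = everything)
    """
    bytes_per_second = avg_mbit_per_s * 1_000_000 / 8
    seconds = 86_400 * days
    return bytes_per_second * seconds * capture_fraction

for days in (14, 30, 365):
    full = retention_bytes(100, days) / 1e12           # terabytes, full capture
    meta = retention_bytes(100, days, 0.01) / 1e12     # terabytes, ~1% metadata
    print(f"{days:>3} days: {full:7.2f} TB full, {meta:5.2f} TB metadata only")
```

At an assumed average of 100Mbit/s, full capture works out at a little over a terabyte a day, which shows how quickly a multi-year archive outgrows a fortnight's worth of logs.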

Cloud monitoring

Cloud administrators have, in some ways, a harder job than their counterparts who deal with local or hybrid networks. Even if a business uses only SaaS cloud services, and interacts strictly with web-based services, it will still have local devices that call for traditional monitoring. On top of that, the servers and VMs on which the cloud product runs are by definition out of the reach of the company's own infrastructure. So what can you do? You can try asking Google to insert a traffic monitor in front of your cloud mailboxes, but obviously you're not likely to get that type of access. It can be a bit of a tightrope, trying to find the right approach while also seeking to satisfy assertive bosses who understandably want to see best monitoring practice applied to your network security.

A transfinite problem

The word "transfinite" is a posh one, but it's not a bad fit for the challenges of network monitoring. The core of the issue is this: if you try to draw a continuum of human and natural constructs and their relative complexity, where does network monitoring fit in?

To address this knotty question, let's look at Alan Turing's theoretical computer, now known as the Turing machine. This consists simply of a machine that can stamp a mark on an endless reel of paper tape, read it back, rub it out and shuffle the tape. It sounds incredibly basic, but Turing showed that any problem that can be computed at all could be both encoded on and solved by such a machine.

What does that have to do with network monitoring? Well, look closely at the connections on your network: in isolation, each one is very simple. But IPv4 doesn't make just one "mark": each byte can take any of 256 values, and four of those bytes strung together give roughly 4.3 billion possible addresses, before you even count the actual data you send and receive across the line.
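The arithmetic behind those figures is quick to check. The sketch below also counts the possible pairings of two endpoints once 16-bit port numbers are included; that second figure is my own illustrative upper bound and ignores reserved and private address ranges.

```python
import ipaddress

VALUES_PER_BYTE = 2 ** 8   # one byte: 256 possible "marks"
PORTS = 2 ** 16            # TCP and UDP port numbers are 16 bits wide

def ipv4_address_count():
    """Four bytes per address: 256 ** 4 possible IPv4 addresses."""
    return VALUES_PER_BYTE ** 4

def endpoint_pair_count():
    """Upper bound on (address, port) to (address, port) pairings."""
    endpoints = ipv4_address_count() * PORTS
    return endpoints ** 2

# Cross-check against the standard library's view of the whole IPv4 space.
assert ipv4_address_count() == ipaddress.ip_network("0.0.0.0/0").num_addresses

print(f"{ipv4_address_count():,}")         # 4,294,967,296
print(endpoint_pair_count() == 2 ** 96)    # True
```

Even before any payload is considered, the number of distinct conversations a monitor might in principle have to distinguish runs to 2 to the power of 96.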

That's why the term "transfinite" comes up: it's an unusual term to hear in computing, and it brings people up short, forcing them to step back from the sales patter and get a grip. So when you look at network monitoring software and think it's a bit pricey, or has weighty system requirements, remember the context: some of the questions you might ask of it are inherently unanswerable. Not because the software is lacking, but because no answer can be given without tripping over an infinity or two in the results.

He said, she said

When it comes to monitoring what's happening on your network, one of the biggest concerns is snooping.

The truth is that it's relatively easy to splice into a network conversation without either party knowing they are being overheard. There's no shortage of sexy-sounding packet sniffers and optical taps available, and if you're using a managed Ethernet switch then you can even tweak the configuration so that all the traffic seen on one port is duplicated to another. Of course, this sort of espionage requires a man on the inside -- and your spy will also need to set up some machine or software that can receive those incoming packets and do something useful with them.

Perhaps the most important thing is to identify what's being snooped on. Dedicated loggers have existed ever since the inception of the network, but I doubt many people will want to sit through that history lesson, because the level of detail delivered by such things begins with an oscilloscope function. What we're interested in is about four levels higher up in the architecture: the contents of the data packets.
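To make those levels concrete, here is a minimal sketch that peels the Ethernet, IPv4 and TCP headers off a raw frame to reach the payload. It assumes untagged Ethernet II frames carrying IPv4 and TCP only, and it skips checksum validation; the sample frame is synthetic, built purely for illustration, and a real analyser handles far more cases than this.

```python
import socket
import struct

def peel(frame: bytes):
    """Return (src_ip, src_port, dst_ip, dst_port, payload) for an untagged
    Ethernet II / IPv4 / TCP frame, or None for anything else."""
    if len(frame) < 14:
        return None
    ethertype = struct.unpack("!H", frame[12:14])[0]
    if ethertype != 0x0800:                  # 0x0800 = IPv4
        return None
    ip = frame[14:]
    if len(ip) < 20 or ip[0] >> 4 != 4:      # top nibble is the IP version
        return None
    ihl = (ip[0] & 0x0F) * 4                 # IP header length in bytes
    total_len = struct.unpack("!H", ip[2:4])[0]
    if ip[9] != 6:                           # protocol 6 = TCP
        return None
    src_ip = socket.inet_ntoa(ip[12:16])
    dst_ip = socket.inet_ntoa(ip[16:20])
    tcp = ip[ihl:total_len]
    if len(tcp) < 20:
        return None
    src_port, dst_port = struct.unpack("!HH", tcp[:4])
    data_offset = (tcp[12] >> 4) * 4         # TCP header length in bytes
    return src_ip, src_port, dst_ip, dst_port, tcp[data_offset:]

def sample_frame(payload=b"GET / HTTP/1.1\r\n\r\n"):
    """Build a synthetic frame (zeroed MACs and checksums) for the demo."""
    eth = bytes(6) + bytes(6) + struct.pack("!H", 0x0800)
    tcp = struct.pack("!HHIIBBHHH", 49152, 80, 0, 0, 5 << 4, 0x18, 65535, 0, 0)
    total = 20 + len(tcp) + len(payload)
    ip = struct.pack("!BBHHHBBH4s4s", 0x45, 0, total, 0, 0, 64, 6, 0,
                     socket.inet_aton("192.0.2.10"),
                     socket.inet_aton("198.51.100.20"))
    return eth + ip + tcp + payload

print(peel(sample_frame()))
```

Each `if` in `peel` corresponds to stepping up one layer, which is the work a packet analyser does for you across hundreds of protocols.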

For that level of scrutiny, there is really only one place to start your investigation, and that's Wireshark.

This is where you can download, in the publisher's own words, the world's foremost analyser for Ethernet networks. The Wireshark software is free, cross-platform, and open source; it's also very old, with ongoing support for versions that run in MS-DOS, for those who even remember that.

Wireshark's antiquity is a strength, as it means that it's evolved to deal with every sort of networking situation you can imagine. As the Internet of Things spreads around us, and the cloud absorbs all your server functions, it might seem strange to think about DOS or BSD ports of an obscure network analyser, but it all comes down to the operating systems. On a Windows machine, Wireshark installs a little background capture utility (WinPcap historically, Npcap in current releases) that replicates network traffic to another device (your network monitor). It's a dated concept from the days of coaxial network leads and cantankerous green-screen PCs, but it's also a perfectly neat answer to the problem of cloud-resident servers. Running a background traffic copier on a cloud VM sweeps aside all those complicated considerations about hosting partners, resistance to scale-up, platform incompatibility and the like. Just don't forget that the software's running: most cloud services charge for traffic, and such a tool, by its nature, doubles the amount of traffic a cloud instance is handling.

The right tools

There is no one-size-fits-all approach to network monitoring. As we've mentioned, the big challenge today is software-as-a-service (SaaS), which gives you no ability to monitor what's happening behind the scenes. While that may seem to excuse you from a headache, it's a risky situation to be in, which is why the right to download all your data or move to a hybrid deployment is so important.

What we can say, however, is that all the various approaches to network monitoring are moving in one consistent direction. It might still sound like the preserve of nerds, but it's starting to align, bit by bit, with the more sober world of the underwriter, the insurer, the investigator and the banker. At the end of the day, companies can't function without clearly visible, easily comprehended views of what their computing assets have been doing while they were off the clock.

-

Redefining resilience: Why MSP security must evolve to stay ahead

Redefining resilience: Why MSP security must evolve to stay aheadIndustry Insights Basic endpoint protection is no more, but that leads to many opportunities for MSPs...

-

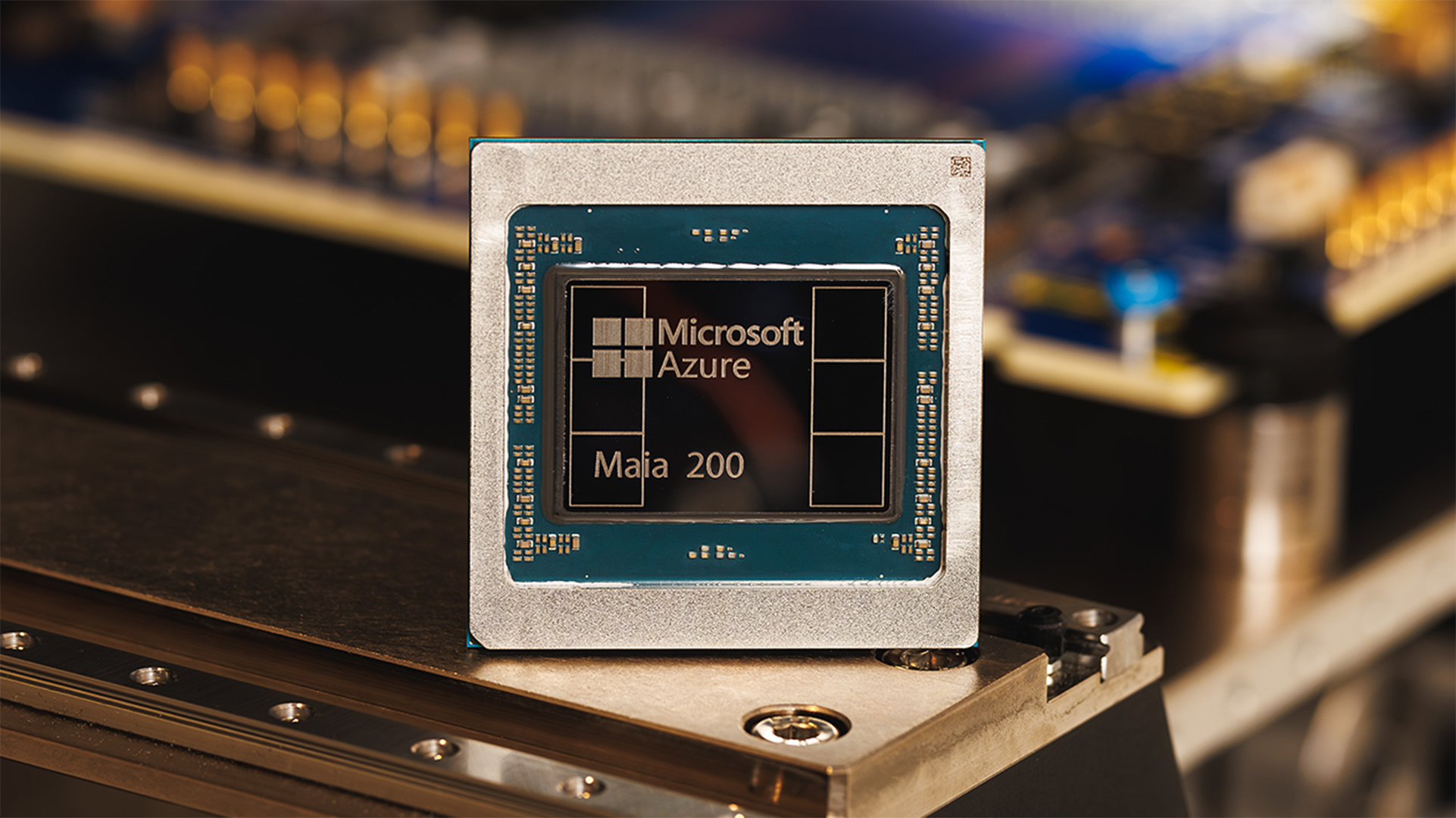

Microsoft unveils Maia 200 accelerator, claiming better performance per dollar than Amazon and Google

Microsoft unveils Maia 200 accelerator, claiming better performance per dollar than Amazon and GoogleNews The launch of Microsoft’s second-generation silicon solidifies its mission to scale AI workloads and directly control more of its infrastructure