AI doesn’t mean your developers are obsolete — if anything you’re probably going to need bigger teams

Software development will never be fully automated, so you’re going to need humans in the loop – and probably more of them

Software developers may be forgiven for worrying about their jobs in 2025, but GitLab Field CTO Marco Caronna believes the end result of AI adoption will be larger teams, not an onslaught of job cuts.

New research from GitLab shows a steep increase in the use of the technology across the profession, with 99% currently using – or planning to use – AI across the software development lifecycle.

Similarly, more than half (57%) of devs now use more than five tools for software development. This influx of AI tools is transforming the profession, with 75% saying the technology will “significantly change” their roles within the next five years.

However, as the study noted, this is creating a “paradox” for teams. Concerns over security, compliance, and skills are rising, prompting a rethink of traditional operating frameworks.

More than two-thirds (67%) of respondents said AI is making compliance management “more challenging” for their organization, for example.

Speaking to ITPro in the wake of the report’s publication, Caronna said the current pace of change in software development means many companies are “facing issues in keeping up”.

That’s not because AI is difficult to use, either. Indeed, it’s “quite the opposite”, he told ITPro.


“It's way too easy to use, which basically means that most enterprises who are conscious about compliance, security, costs, they find themselves in a situation where the adoption of these new tools becomes extremely complex,” he explained.

“They start having issues from a compliance standpoint and from a security standpoint, because the data path of information becomes unclear, and also from a cost perspective, it becomes a little bit more challenging.”

The rise of ‘AI platform engineering’

The solution here, Caronna suggested, will be an evolution of platform engineering practices that take into account AI, fusing the technology across various teams to fine-tune the development lifecycle.

Platform engineering has long been employed as a means to embed security and compliance considerations within the broader development lifecycle, creating closer synergy between developer, security, and operations teams.

But while AI may speed up development practices, it also creates new risk surfaces, for example through flaws in AI-generated code. More than three-quarters (78%) of respondents specifically highlighted this issue, noting they’d experienced problems with code created using “vibe coding practices”.

With this in mind, Caronna suggested that augmenting platform engineering to compensate for these potential issues could be the key to safer AI-infused development processes.

“I do expect that we will end up with an ‘AI platform engineering practice’, or it’s probably going to have a better name. I’m not good at marketing messages,” he said.

“It’s going to be a team of experts who understand how to correlate the different agents, how to make them work together, and then publish those capabilities to the consumers,” Caronna added.

“So just like platform engineering teams to actually publish the capabilities that can be used in cloud providers, on-premises and so on and so forth; there's going to be a similar pathway for AI adoption.”

Shifting left is critical

A key component of this transition will be a concerted focus on “shifting left” to tackle lingering issues with AI.

The technology has already influenced this shift, research shows. A May 2025 study from AI security firm Pynt, for example, found enterprises are ramping up efforts to shift left to bolster software security and tackle AI-related risks.

Placing a stronger emphasis on security will help break down long-standing barriers and bottlenecks for developer teams, Caronna said. Shifting left essentially helps weed out issues earlier in the lifecycle, preventing headaches further down the line.

“If you don’t start implementing a practice where you move security towards the very beginning of the coding [process], at that point what happens is that your developers are going to be chasing security issues in production,” he told ITPro.

“The closer you get to production, the more expensive it becomes to fix security issues, but also performance issues or features and capabilities issues,” Caronna added.

“So the reality is that when we talk about the prerequisites [for AI adoption], this shift left, potentially even shift left testing, that's a prerequisite that needs to happen. Once those prerequisites are in place, at that point we can really start talking about how to adopt AI so that we can write secure code by default.”

Devs aren’t going anywhere

This transitional period will ultimately result in larger teams, according to Caronna and GitLab. Three-quarters (75%) of respondents told the firm that more engineers will be needed as AI becomes more deeply embedded within development practices.

The thinking here is that software development is never going to be fully automated, and enterprises will be keen to keep humans in the loop across the lifecycle.

Indeed, only one-third of respondents said they’d trust AI to handle daily tasks without human review. This isn’t limited to developer teams, either. With more AI tooling, enterprises face greater security and compliance risks, meaning more staff in these respective domains will likely be required.

The outlook here runs counter to the prevailing sentiment among many developers and industry stakeholders over the last year. Across 2025, concerns about the impact of AI on developers have reached boiling point, spurred on by alarmist comments from leading industry figures.

Meta CEO Mark Zuckerberg and Salesforce chief Marc Benioff have both hinted at not needing developers due to the technology.

Caronna said businesses now face two paths looking ahead. They can lean into the benefits of AI, allowing it to fuel future growth and support the creation of better software, or fall into the trap of thinking it’s an excuse to cut staff.

A slew of businesses have opted for the latter approach, and ultimately, it could prove detrimental.

“AI is not fully replacing developers,” he said. “AI is going to give you a productivity increase, and at that point you can invest that productivity increase in cost cutting or improving revenues.”

“If, as a savvy company, what you’re looking for is to improve your top line instead of decreasing the bottom line, at that point you’re going to invest that in producing even more capabilities for your customers,” Caronna added.

“More capabilities, generally speaking, should mean more revenue and an increase in the top line. That productivity increase can really provide a huge competitive advantage to companies.”


Ross Kelly is ITPro's News & Analysis Editor, responsible for leading the brand's news output and in-depth reporting on the latest stories from across the business technology landscape. Ross was previously a Staff Writer, during which time he developed a keen interest in cyber security, business leadership, and emerging technologies.

He graduated from Edinburgh Napier University in 2016 with a BA (Hons) in Journalism, and joined ITPro in 2022 after four years working in technology conference research.


-

The modern workplace: Standardizing collaboration for the enterprise IT leader

The modern workplace: Standardizing collaboration for the enterprise IT leaderHow Barco ClickShare Hub is redefining the meeting room

-

CISA’s interim chief uploaded sensitive documents to a public version of ChatGPT – security experts explain why you should never do that

CISA’s interim chief uploaded sensitive documents to a public version of ChatGPT – security experts explain why you should never do thatNews The incident at CISA raises yet more concerns about the rise of ‘shadow AI’ and data protection risks

-

A torrent of AI slop submissions forced an open source project to scrap its bug bounty program – maintainer claims they’re removing the “incentive for people to submit crap”

A torrent of AI slop submissions forced an open source project to scrap its bug bounty program – maintainer claims they’re removing the “incentive for people to submit crap”News Curl isn’t the only open source project inundated with AI slop submissions

-

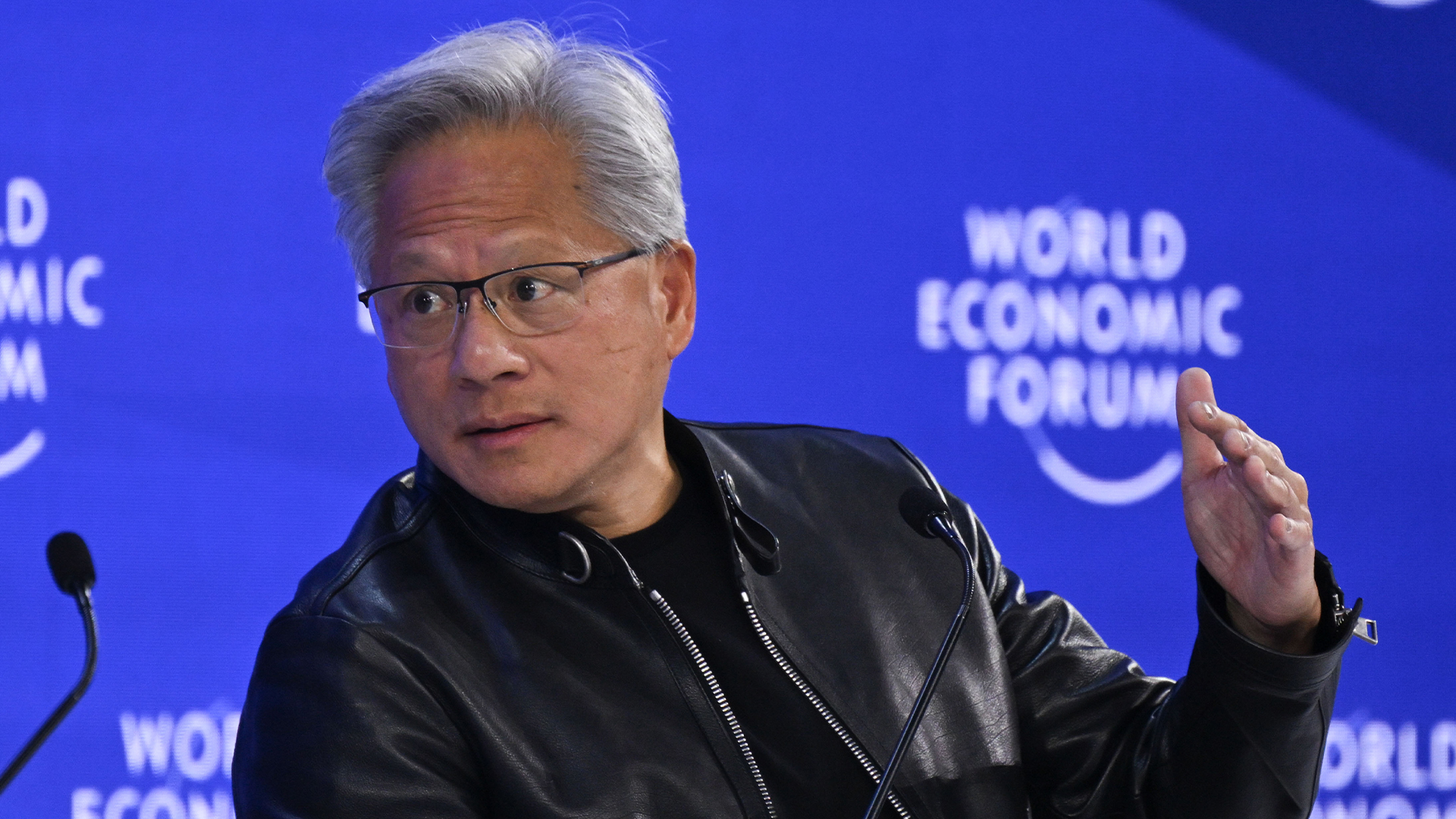

‘This is a platform shift’: Jensen Huang says the traditional computing stack will never look the same because of AI – ChatGPT and Claude will forge a new generation of applications

‘This is a platform shift’: Jensen Huang says the traditional computing stack will never look the same because of AI – ChatGPT and Claude will forge a new generation of applicationsNews The Nvidia chief says new applications will be built “on top of ChatGPT” as the technology redefines software

-

So much for ‘trust but verify’: Nearly half of software developers don’t check AI-generated code – and 38% say it's because it takes longer than reviewing code produced by colleagues

So much for ‘trust but verify’: Nearly half of software developers don’t check AI-generated code – and 38% say it's because it takes longer than reviewing code produced by colleaguesNews A concerning number of developers are failing to check AI-generated code, exposing enterprises to huge security threats

-

Microsoft is shaking up GitHub in preparation for a battle with AI coding rivals

Microsoft is shaking up GitHub in preparation for a battle with AI coding rivalsNews The tech giant is bracing itself for a looming battle in the AI coding space

-

AI could truly transform software development in 2026 – but developer teams still face big challenges with adoption, security, and productivity

AI could truly transform software development in 2026 – but developer teams still face big challenges with adoption, security, and productivityAnalysis AI adoption is expected to continue transforming software development processes, but there are big challenges ahead

-

OpenAI's 'Skills in Codex' service aims to supercharge agent efficiency for developers

OpenAI's 'Skills in Codex' service aims to supercharge agent efficiency for developersNews The Skills in Codex service will provide users with a package of handy instructions and scripts to tweak and fine-tune agents for specific tasks.

-

‘If software development were an F1 race, these inefficiencies are the pit stops that eat into lap time’: Why developers need to sharpen their focus on documentation

‘If software development were an F1 race, these inefficiencies are the pit stops that eat into lap time’: Why developers need to sharpen their focus on documentationNews Poor documentation is a leading frustration for developers, research shows, but many are shirking responsibilities – and it's having a huge impact on efficiency.

-

AI is creating more software flaws – and they're getting worse

AI is creating more software flaws – and they're getting worseNews A CodeRabbit study compared pull requests with AI and without, finding AI is fast but highly error prone