The supersized world of supercomputers

A look at how much bigger supercomputers can get, and the benefits on offer for healthcare and the environment

12th November 2018 was a big day in the upper echelons of computing. On this date, during the SC18 supercomputing conference in Dallas, Texas, the much-anticipated Top500 list was released.

Published twice a year since 1993, this is the list of the world's 500 fastest computers, and a place at the top of the list remains a matter of considerable national pride. On this occasion, the USA retained the top position and also gained second place, following several years in which China occupied one or both of the top two places.

Commonly referred to as supercomputers, although those in the know often prefer the term high-performance computing (HPC), these monsters bear little resemblance to the PCs that sit on our desks.

Speed demon

The world's fastest computer is called Summit and is located at the Oak Ridge National Laboratory in Tennessee. It has 2,397,824 processor cores, provided by 22-core IBM Power9 processors clocked at 3.07GHz and Nvidia Volta Tensor Core GPUs, 10 petabytes (PB) of memory (10 million GB) and 250PB of storage.

Summit - today's fastest supercomputer at the Oak Ridge National Laboratory in Tennessee - occupies a floor area equivalent to two tennis courts

While these sorts of figures include the statistics we expect to see when describing a computer, it's quite an eye-opener when we take a look at some of the less familiar figures. All this hardware is connected together by 300km of fibre-optic cable. Summit is housed in 318 large cabinets, which together weigh 310 tonnes, more than the take-off weight of many large jet airliners. It occupies a floor area of 520 square metres, the equivalent of two tennis courts. All of this consumes 13MW of electrical power, which is roughly the same as 32,000 homes in the UK. The heat generated by all this energy has to be removed by a cooling system that pumps 18,000 litres of water through Summit every minute.

Oak Ridge is keeping tight-lipped about how much all this costs; estimates vary from $200m to $600m.

So what does all this hardware, and all this money, buy you in terms of raw computing power? The headline peak performance figure quoted in the Top500 list is 201 petaflops (quadrillion floating point operations per second).

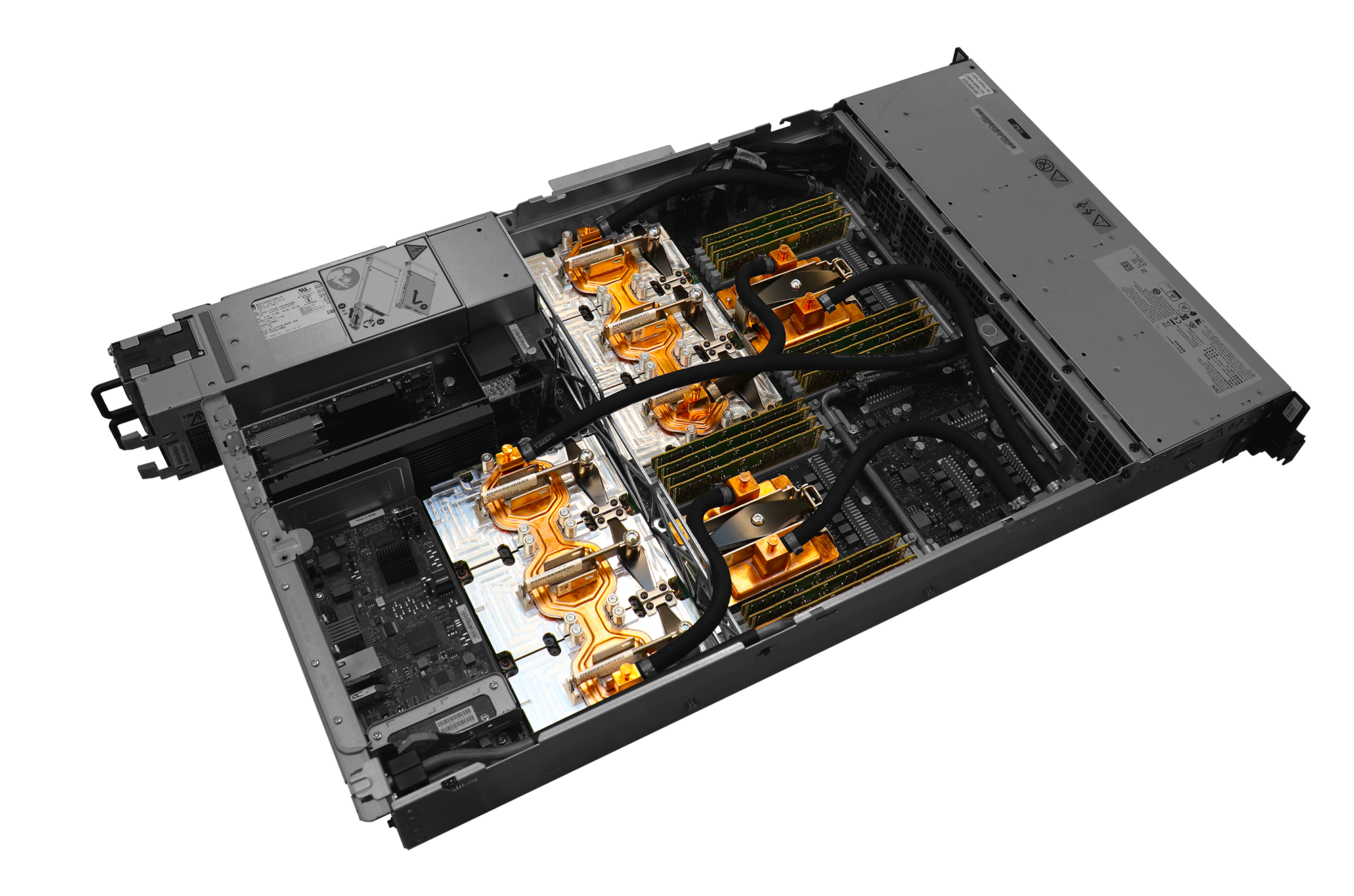

Each of Summit's 4,608 nodes contains six GPUs and two 22-core IBM Power9 CPUs, plus some serious plumbing to keep the hardware from frying

Given that Summit contains over two million processor cores, it will come as no surprise that it's several thousand times faster than the PCs we use on a day-to-day basis. Even so, a slightly more informed figure may be something of a revelation. An ordinary PC today will probably clock up 50-100 gigaflops, so the world's fastest computer will, in all likelihood, be a couple of million times faster than your pride and joy, perhaps more.
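The comparison above is simple division, and a minimal sketch makes the range explicit. It uses only the figures quoted in the text (201 petaflops for Summit, 50-100 gigaflops for an ordinary PC); the variable and function names are illustrative.

```python
# Back-of-envelope comparison using the figures quoted in the article.
PETA = 10**15
GIGA = 10**9

summit_peak_flops = 201 * PETA              # Top500 headline peak figure
pc_flops_range = (50 * GIGA, 100 * GIGA)    # "ordinary PC" estimate from the text


def speedup(fast_flops, slow_flops):
    """Return how many times faster the first machine is than the second."""
    return fast_flops / slow_flops


# One ratio for the slower PC estimate, one for the faster.
ratios = [speedup(summit_peak_flops, pc) for pc in pc_flops_range]

for pc, ratio in zip(pc_flops_range, ratios):
    print(f"PC at {pc // GIGA} gigaflops: Summit is about {ratio / 1e6:.2f} million times faster")
```

On these figures the ratio falls between roughly two million and four million, which is where the "couple of million times faster, perhaps more" estimate comes from.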

It doesn't stop there, either. Although the result didn't meet the stringent requirements of the Top500 list, Summit appears to have gone beyond its official figure, exceeding one exaflops on some calculations by using reduced precision. Oak Ridge says that this ground-breaking machine takes the USA a step closer to its goal of having an exascale computing capability - that is, performance in excess of one exaflops - by 2022.

Half century

The identity of the first supercomputer is a matter of some debate. It appears that the first machine that was referred to as such was the CDC 6600, which was introduced in 1964. The 'super' tag referred to the fact that it was 10 times faster than anything else that was available at the time.

The question of what processor it used was meaningless. Like all computers of the day, the CDC 6600 was defined by its CPU, and the 6600's CPU was designed by CDC and built from discrete components. The computer cabinet was a novel X-shape when viewed from above, with this geometry minimising the lengths of the signal paths between components to maximise speed. Even in these early days, however, an element of parallelism was employed. The main CPU was designed to do only basic arithmetic and logic instructions, thereby limiting its complexity and increasing its speed. Because of the CPU's reduced capabilities, however, it was supported by 10 peripheral processors that handled memory access and input/output.

Following the success of the CDC 6600 and its successor the CDC 7600, designer Seymour Cray left CDC to set up his own company, Cray Research. This name was synonymous with supercomputing for over two decades. Its first success was the Cray-1.

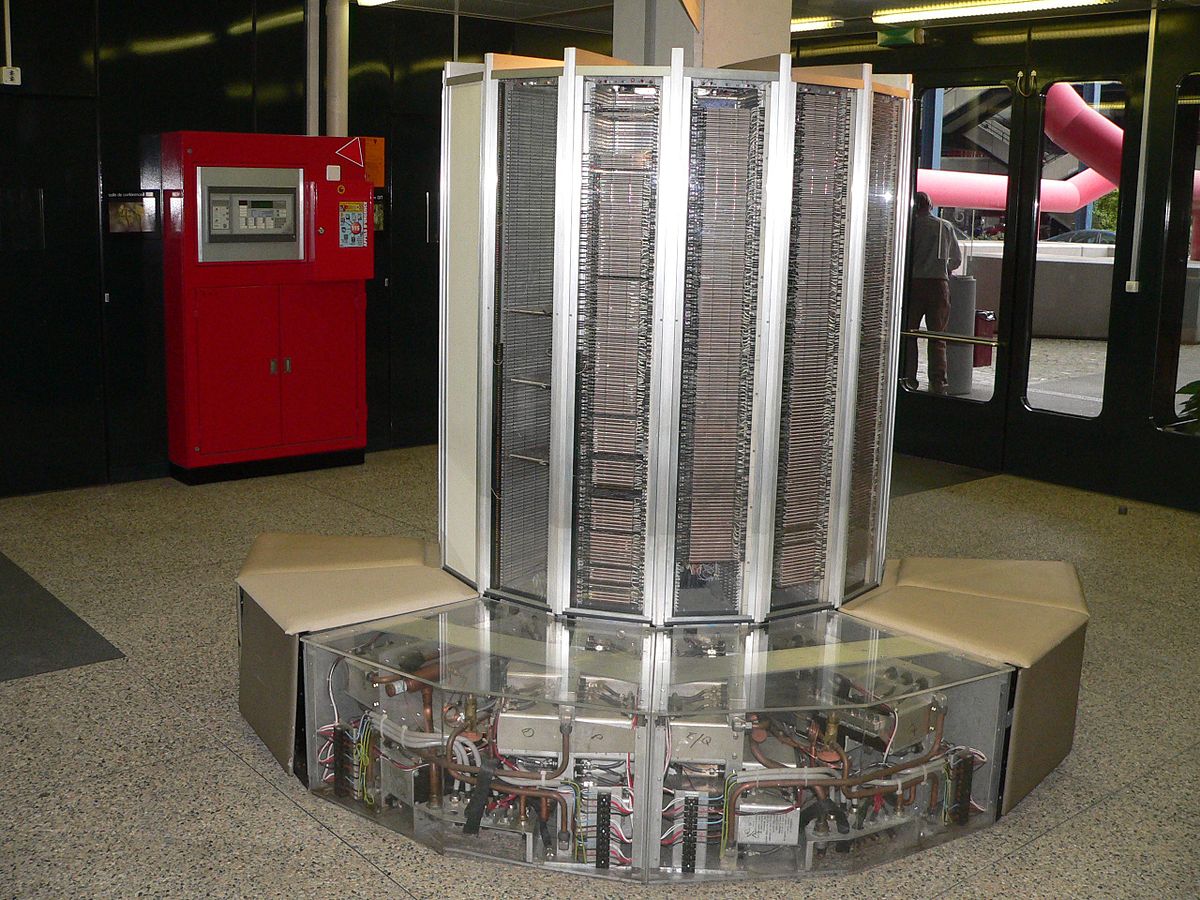

Designed, like the CDC 6600, to minimise wiring lengths, the Cray-1 had an iconic C-shaped cylindrical design with seats around its outside, covering the cooling system. Its major claim to fame was that it introduced the concept of the vector processor, a technology that dominated supercomputing for a substantial period, and still does today in the sense that ordinary CPUs and GPUs now include an element of vector processing.

The Cray-1 supercomputer broke new ground when it launched in 1975, thanks to its vector processor and iconic design

In this approach to parallelism, instructions are provided that work on several values in consecutive memory locations at once. Compared to the more conventional approach, this offers a significant speed boost by reducing the number of memory accesses, something that would otherwise represent a bottleneck, and increasing the number of results issued per clock cycle. Some vector processors also had multiple execution units and, in this way, were also able to offer performance gains in the actual computation.
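As a rough illustration of the idea, the toy model below counts how many instructions would be issued to add two arrays element by element, compared with a vector-style approach that handles a run of consecutive values per instruction. It is a sketch only: the function names are hypothetical, the vector length of 64 is an assumption chosen for illustration, and the model ignores memory latency and pipelining, which is where much of the real gain comes from.

```python
def scalar_add(a, b):
    """Element-at-a-time addition: one add instruction is issued per element."""
    result, instructions = [], 0
    for x, y in zip(a, b):
        result.append(x + y)
        instructions += 1
    return result, instructions


def vector_add(a, b, vector_length=64):
    """Chunked addition: one modelled vector instruction handles up to
    vector_length consecutive elements (vector_length is an assumed value)."""
    result, instructions = [], 0
    for start in range(0, len(a), vector_length):
        chunk_a = a[start:start + vector_length]
        chunk_b = b[start:start + vector_length]
        result.extend(x + y for x, y in zip(chunk_a, chunk_b))
        instructions += 1
    return result, instructions


a = list(range(1000))
b = [2 * x for x in a]
scalar_result, scalar_count = scalar_add(a, b)
vector_result, vector_count = vector_add(a, b)
print(f"scalar instructions: {scalar_count}, vector instructions: {vector_count}")
```

Both functions return the same sums; only the number of instructions issued differs, which is the effect the paragraph above describes.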

The next important milestone in HPC came with the Cray X-MP in 1982. Like the Cray-1, it had a vector architecture, but where it broke new ground was in the provision of not one, but two vector processors. Its successor, the Cray Y-MP, built on this foundation by offering two, four or eight processors. And where Cray led, others followed, with companies eager to claim the highest processor count. By 1995, for example, Fujitsu's VPP300 had a massive 256 vector processors.

Meanwhile, Thinking Machines had launched its own CM-1 a few years earlier. Its CPU was an in-house microprocessor that was much less powerful than the contemporary vector designs, but the machine was able to include many more of them - up to 65,536.

The CM-1 wasn't able to compete with parallel vector processor machines for pure speed, but it was ahead of its time in the sense that it heralded the next major innovation in supercomputing - the use of vast numbers of off-the-shelf processors. Initially, these were high-performance microprocessor families.

Launched in 1995, the Cray T3E was a notable machine of this type, using DEC's Alpha chips. And while supercomputers with more specialised processors such as the IBM Power and Sun SPARC are still with us today, by the late 1990s, x86 chips like those in desktop PCs were starting to show up. That this was the architecture of the future was underlined in 1997, when ASCI Red became the first x86-powered computer to head the Top500 list. Since then, the story has been one of an ever-growing core count - from ASCI Red's 9,152 to several millions today - plus the addition of GPUs to the mix.

Innovation at the top

A cursory glance at the latest Top500 list might suggest there's little innovation in today's top-end computers. A few statistics will illustrate this point. The precise processor family may differ, and the manufacturer may be Intel or AMD, but of the 500 computers, 480 use x86 architecture processors. The remaining 20 use IBM Power or Sun SPARC chips, or the Sunway architecture which is largely unknown outside China.

Operating systems are even less varied. Since November 2017, every one of the top 500 supercomputers has run some flavour of Linux; a far cry from the situation in November 2008, when no fewer than five families of operating system were represented, including four Windows-based machines.

The Isambard supercomputer is being jointly developed by the Met Office, four UK universities and Cray

So does a place at computing's top table increasingly depend on little more than how much money you have to spend on processor cores and, if so, will innovation again play a key role? To find out, we spoke to Andrew Jones, VP HPC Consulting & Services at NAG, an impartial provider of HPC expertise to buyers and users around the world.

Initially, Jones takes issue with our assertion that innovation no longer plays a role in supercomputer development. In particular, while acknowledging that the current Top500 list is dominated by Intel Xeon processors, and that x86 is likely to rule the roost for the foreseeable future, he stresses that there's more to a supercomputer than its processors.

"Performance for HPC applications is driven by floating point performance. This in turn needs memory bandwidth to get data to and from the maths engines. It also requires memory capacity to store the model during computation and it requires good storage performance to record state data and results during the simulation," he explains.

And another vital element is needed to ensure all those cores work together effectively.

"HPC is essentially about scale - bringing together many compute nodes to work on a single problem. This means that HPC performance depends on a good interconnect and on good distributed parallel programming to make effective use of that scale," Jones adds.

As an example of a high-performance interconnect technology, he points to the recently announced Cray Slingshot. It employs 64 x 200Gbit/s ports and 12.8Tbit/s of bi-directional bandwidth. Slingshot supports up to 250,000 endpoints and takes a maximum of three hops from end to end.
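The two bandwidth figures quoted for Slingshot are consistent with each other, as a quick check shows. This is only a sanity check on the numbers in the text; the variable names are illustrative.

```python
# Figures quoted for Cray Slingshot in the text.
ports = 64
port_speed_gbit_per_s = 200

# Sum the port speeds and convert gigabits to terabits (1 Tbit = 1,000 Gbit).
aggregate_tbit_per_s = ports * port_speed_gbit_per_s / 1000

print(f"{ports} ports x {port_speed_gbit_per_s}Gbit/s = {aggregate_tbit_per_s}Tbit/s")
```

In other words, 12.8Tbit/s is simply the sum of the 64 port speeds.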

Jones concedes that many HPC experts believe the pace and quality of innovation have slowed due to limited competition but, encouragingly, he expresses a positive view about future developments.

Serious supercomputers sometimes need serious displays, such as EVEREST at the Oak Ridge National Laboratory

"We have a fairly good view of the market directions over the next five years, some from public vendor statements, some from confidential information and some from our own research," he says.

"The core theme will continue to be parallelism at every level - within the processor, within the node, and across the system. This will be interwoven with the critical role of data movement around the system to meet application needs. This means finding ways to minimise data movement, and deliver better data movement performance where movement is needed."

However, this doesn't just mean more of the same, and there will be an increasing role for software.

"This is leading to a growth in the complexity of the memory hierarchy - cache levels, high bandwidth memory, system memory, non-volatile memory, SSD, remote node memory, storage - which in turn leads to challenges for programmers," Jones explains.

"All this is true, independent of whether we are talking about CPUs or GPUs. It is likely that GPUs will increase in relevance as more applications are adapted to take advantage of them, and as the GPU-for-HPC market broadens to include strong offerings from a resurgent AMD, as well as the established Nvidia."

Beyond five years, Jones admits, it's mostly guesswork, but he has some enticing suggestions nonetheless.

"Technologies such as quantum computing might have a role to play. But probably the biggest change will be how computational science is performed, with machine learning integrating with - not replacing - traditional simulations to deliver a step change in science and business results," he adds.

Society benefits

Much of what we've covered here sounds so esoteric that it might appear of little relevance to ordinary people. So has society at large actually benefitted from all the billions spent on supercomputers over the years?

A report by the HPC team at market analysis company IDC (now Hyperion Research) revealed some of the growing number of supercomputer applications that are already serving society. First up is a range of health benefits, as HPC is better able to simulate the workings of the human body and the influence of medications. Reference was made to research into hepatitis C, Alzheimer's disease, childhood cancer and heart diseases.

The Barcelona Supercomputing Centre, located in a 19th-century former church, houses MareNostrum, surely the world's most atmospheric supercomputer

Environmental research is also benefiting from these computing resources. Car manufacturers are bringing HPC to bear on the design of more efficient engines, and researchers are investigating less environmentally damaging methods of energy generation, including geothermal energy, and carbon capture and sequestration in which greenhouse gases are injected back into the earth.

Other applications include predicting severe weather events, generating hazard maps for those living on floodplains or in seismic regions, and detecting sophisticated cyber security breaches and electronic fraud.

Reading about levels of computing performance that most people can barely conceive might engender a feeling of inadequacy. If you crave the ultimate in computer power, however, don't give up hope.

That CDC 6600, the first ever supercomputer, boasted 3 megaflops, a figure that seems unimaginably slow compared to a run-of-the-mill PC today. And we'd have to come forward in time to 2004, when the BlueGene/L supercomputer topped the Top500 list, to find a machine that would match a current-day PC for pure processing speed.

This is partly the power of Moore's Law and, if this were to continue into the future - although this is no longer the certainty it once was - performance equal to that of today's supercomputers may be attainable sooner than you might have expected.
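To put a rough number on that, the sketch below estimates how long a steady doubling of performance would take to carry a PC to Summit's level. It rests on loud assumptions: the 100 gigaflops PC and 201 petaflops Summit figures come from earlier in the article, while the two-year doubling period is an assumed, commonly quoted reading of Moore's Law rather than anything the article states. The function name is hypothetical.

```python
import math


def years_to_reach(target_flops, current_flops, doubling_period_years):
    """Years needed to grow from current_flops to target_flops if
    performance doubles once every doubling_period_years."""
    doublings = math.log2(target_flops / current_flops)
    return doublings * doubling_period_years


summit_flops = 201e15        # peak figure quoted earlier in the article
pc_flops = 100e9             # upper end of the article's PC estimate
doubling_period = 2.0        # assumption: one doubling every two years

doublings_needed = math.log2(summit_flops / pc_flops)
years = years_to_reach(summit_flops, pc_flops, doubling_period)
print(f"about {doublings_needed:.0f} doublings, or roughly {years:.0f} years")
```

Under these assumptions the gap is about 21 doublings, so the answer is highly sensitive to the doubling period chosen, and to whether that pace can be sustained at all.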

Photo by Rama / CC BY 2.0

Other images courtesy of Oak Ridge National Laboratory
