NSA: Benefits of generative AI in cyber security will outweigh the bad

The use of generative AI in cyber security will enable practitioners to tackle threats more effectively, but attackers are also ramping up their use of the technology

A senior figure at the NSA has said that generative AI in cyber security will offer practitioners marked benefits in combating attacks and cracking down on global cyber criminal groups.

Rob Joyce, director of cyber security at the US agency, told attendees at an event at Fordham University in New York that generative AI is “absolutely making us better at finding malicious activity” and outlined key benefits of the technology for security personnel.

Joyce noted that, over the last year, the nefarious applications of generative AI have been a key talking point, particularly their use by cyber criminals.

Many threat actors and cyber criminal groups have been leveraging generative AI tools to “turbocharge fraud and scams”, he said.

Last year, researchers at Mandiant warned that generative AI will give threat actors the ability to launch a new wave of far more powerful and personalized social engineering attacks.

Rob Joyce speaking at the 2018 Aspen Cyber Summit in San Francisco.

Mandiant's claims were just one of a number of warnings from security experts on the matter over the course of 2023. Research from Darktrace raised concerns about the prospect of AI-supported phishing attacks, warning that hackers could use the technology to fine-tune techniques and dupe users.

However, while Joyce said fears over the use of generative AI in cyber crime were justified, he also made clear that national security bodies are harnessing these tools to great effect, and that cyber security experts were getting as much out of generative AI as criminals.


Citing examples and use cases, Joyce said AI can help combat threat actors who exploit vulnerabilities to get onto networks and then hide there by posing as legitimate accounts.

Because these accounts don't behave like legitimate users, cyber security teams can use AI and large language models (LLMs) to aggregate account activity and pick out the malicious behavior.
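To illustrate the underlying idea of comparing an account against its own normal behavior, here is a minimal sketch that uses a simple statistical baseline rather than an LLM. It assumes daily event counts per account are already available; the function name, input shapes and the three-standard-deviation threshold are all hypothetical choices, not a description of how the NSA or any vendor does this.

```python
from statistics import mean, stdev

def flag_anomalous_accounts(history, today, threshold=3.0):
    """Flag accounts whose activity today deviates sharply from their own baseline.

    history:   hypothetical dict of account name -> list of past daily event counts
    today:     hypothetical dict of account name -> today's event count
    threshold: assumed number of standard deviations treated as anomalous
    """
    flagged = []
    for account, counts in history.items():
        # Skip accounts without enough history to form a baseline,
        # or with no activity recorded today.
        if len(counts) < 2 or account not in today:
            continue
        mu = mean(counts)
        sigma = stdev(counts)
        if sigma == 0:
            # A perfectly flat history: any change at all counts as a deviation.
            if today[account] != mu:
                flagged.append(account)
            continue
        # z-score: how many standard deviations today's count sits from the mean.
        z = (today[account] - mu) / sigma
        if abs(z) >= threshold:
            flagged.append(account)
    return flagged
```

For example, with `history = {"alice": [10, 12, 11, 9, 10], "svc-backup": [3, 2, 3, 3, 2]}` and `today = {"alice": 11, "svc-backup": 40}`, only `"svc-backup"` is flagged, because its sudden burst of activity is far outside its usual range. Real systems aggregate many more signals than a single count, which is where machine learning models come in.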

Joyce warned, however, that generative AI won’t represent a ‘silver bullet’ for cyber security practitioners.

“[AI] isn’t the super tool that can make someone who’s incompetent actually capable, but it’s going to make those that use AI more effective and more dangerous,” he said.

Generative AI in cyber security will offer marked benefits

Speaking to ITPro, Spencer Starkey, VP for EMEA at SonicWall, echoed Joyce's comments, adding that the use of AI tools in cyber security will prove vital for practitioners in the coming years and enable them to stamp out attacks far more effectively.

“These technologies are perfect for spotting suspicious behavior and fending off cutting-edge threats because they can instantly analyze large volumes of data without requiring human oversight”, he said.


“Cyber security experts are already using AI and ML to identify cyber attacks in real-time, which emphasizes the significance of their work in maintaining a secure online environment”, he added.

As well as sifting through records of login activity and IP addresses to spot unusual behavior, AI can respond proactively if programmed to do so. Once a potential threat actor is identified, AI models can log accounts out or place restrictions on data deletion.
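The detect-then-respond loop described above can be sketched as a simple rule. This is a hypothetical illustration, not any product's logic: it assumes each account has a set of previously seen IP addresses, treats logins from more than one new IP as suspicious, and then applies the two responses mentioned, a forced sign-out and a block on data deletion. The class names, fields and the `max_new_ips` threshold are all assumptions.

```python
from dataclasses import dataclass, field

@dataclass
class Account:
    name: str
    known_ips: set = field(default_factory=set)  # IPs this account has used before
    logged_in: bool = True
    can_delete: bool = True

@dataclass
class LoginEvent:
    account: str
    ip: str

def review_logins(accounts, events, max_new_ips=1):
    """Hypothetical policy: too many distinct unseen IPs triggers an automated response."""
    new_ips = {}  # account name -> set of IPs not seen before
    for event in events:
        acct = accounts.get(event.account)
        if acct is None:
            continue  # ignore events for unknown accounts
        if event.ip not in acct.known_ips:
            new_ips.setdefault(acct.name, set()).add(event.ip)

    actions = []
    for name, ips in new_ips.items():
        if len(ips) > max_new_ips:
            acct = accounts[name]
            acct.logged_in = False   # force the session to end
            acct.can_delete = False  # restrict data deletion pending review
            actions.append((name, "logged_out_and_deletion_restricted"))
    return actions
```

With `accounts = {"alice": Account("alice", {"10.0.0.5"}), "bob": Account("bob", {"10.0.0.7"})}` and logins for Alice from `10.0.0.5` and for Bob from `203.0.113.9` and `198.51.100.4`, only Bob is signed out and barred from deleting data. A fixed rule like this is easy to audit; an ML model would replace the threshold with a learned judgement of what counts as unusual.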

AI might even be able to predict attacks before they happen, if trained correctly, according to Own Company’s Graham Russel.

“A notable trend is the strategic use of backup files”, he told ITPro. “Traditionally seen as a safety net for data recovery, backup files are now being leveraged as a valuable resource for training and refining AI and machine learning models”.

“Incorporating backup files into AI and machine learning models allows organizations to simulate diverse scenarios, ensuring that the algorithms are robust and adaptable to real-world complexities,” Russel added.

“This approach not only optimizes the performance of AI applications but also enhances the accuracy of predictions and decision-making processes.”

AI could eliminate face and voice recognition vulnerabilities

The use of generative AI in cyber security could also help crack down on voice and facial recognition scams, according to Nick France, CTO of Sectigo.

France told ITPro that AI's ability to analyze vast quantities of data and detect anomalies in speech patterns could uncover efforts to tamper with voice authentication and facial recognition processes.

“The machine learning aspect of AI means that, when paired with security solutions such as identity verification and biometric authentication (voice or fingerprint), it improves in its detection over time, increasing accuracy but also reducing the number of false positives”, he said.

“And rather than having to wait after the scam has happened, AI has real-time capabilities that can make a decisive judgment call in the moment”.

George Fitzmaurice is a former Staff Writer at ITPro and ChannelPro, with a particular interest in AI regulation, data legislation, and market development. After graduating from the University of Oxford with a degree in English Language and Literature, he undertook an internship at the New Statesman before starting at ITPro. Outside of the office, George is both an aspiring musician and an avid reader.

-

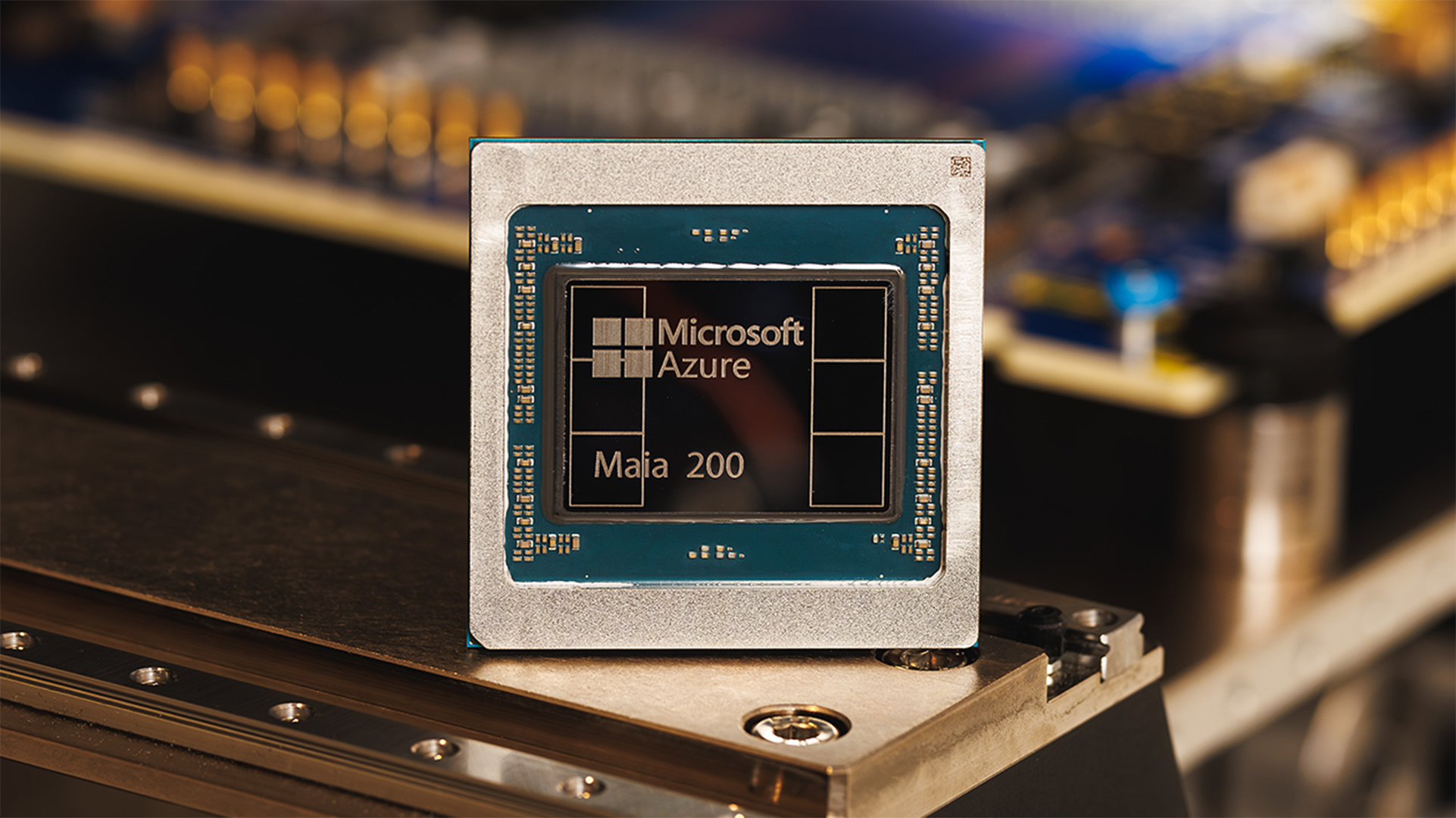

Microsoft unveils Maia 200 accelerator, claiming better performance per dollar than Amazon and Google

Microsoft unveils Maia 200 accelerator, claiming better performance per dollar than Amazon and GoogleNews The launch of Microsoft’s second-generation silicon solidifies its mission to scale AI workloads and directly control more of its infrastructure

-

Infosys expands Swiss footprint with new Zurich office

Infosys expands Swiss footprint with new Zurich officeNews The firm has relocated its Swiss headquarters to support partners delivering AI-led digital transformation