The UK needs to look inward on AI legislation – standing on the world stage isn't enough

A lack of clarity on legislation is frustrating businesses while the government looks further afield

Concrete AI legislation seems to have taken a back seat in the UK, as the country's regulators busy themselves with global projects across the Atlantic and at international summits.

Globally conscious moves from the UK government are certainly not without merit, though they do seem somewhat at odds with the needs of UK business.

While all manner of attention is being placed on overseas bridge-building, businesses in the UK are still unsure how their forays into generative AI and AI more broadly will stand the test of time as the government becomes more involved.

Earlier this month, technology secretary Michelle Donelan announced a "trailblazing" new San Francisco office for the UK's AI Safety Institute, a "pivotal step" in the UK's mission to tap into Bay Area tech talent and foster collaboration with the US on AI safety.

“Opening our doors overseas and building on our alliance with the US is central to my plan to set new, international standards on AI safety which we will discuss at the Seoul Summit this week,” Donelan proudly said.

Fast forward to South Korea and Donelan was even given the honor of opening the summit’s proceedings, where she waxed lyrical about laying the “foundations for sustained and productive collaboration”.

Impressive as this is, it does little to move the needle on homegrown policy. The UK AI bill has only just crept out of the House of Lords, and everyone else is left to wonder what the government's game plan is.


The UK has thus far taken an innovation-first strategy, which it championed at the first AI Safety Summit at Bletchley Park in November 2023. This isn’t without its advantages. Regulatory overreach can stifle development in burgeoning industries, particularly tech, so the UK’s attention to avoiding this could stand it in good stead.

But when it comes to specifics on the UK's approach to AI, businesses still have very little to go off. In the introduction to the government's most recent budget, for example, UK chancellor Jeremy Hunt wrote of the UK's future as the "next Silicon Valley".

Though he talked a good game, this was a goal without substance. The announcement itself offered only vague commitments to "public sector research and innovation infrastructure".

Similarly lacking in clarity have been the UK government’s various schemes and initiatives, like the ‘AI opportunity forum’ which has seen firms like Microsoft, Google, Barclays, and Vodafone sign up as part of wider efforts to drive AI adoption.

All of this has been slowly unfolding against a backdrop of a spiralling legal landscape for AI, greater acknowledgment of AI threats, and rising employee concerns over AI encroachment in their working lives. Even as the government has promoted its "innovation first" approach, the UK's Competition and Markets Authority (CMA) has warned that big tech could come to dominate AI, and that it could step in to block mergers that damage competition in the space.

Transparency is needed for long-term planning

Businesses need clarity. Regulators in the UK tech sector need to explain more transparently the UK's approach to AI, how they will regulate AI providers and models for enterprises, and whether the approach will be pinned to risk levels or to specific technologies.

By way of comparison, the EU has clearly laid out what its AI Act means for businesses. It will adopt a "risk-based approach", with regulators assessing AI systems and assigning them to risk categories ranging from minimal and limited risk up to high risk, with a further "unacceptable risk" category for practices that are banned outright.

The UK seems to be toying with such an approach, at least according to sources close to the matter who have claimed that companies “developing the most sophisticated models” may be required to share details with the government eventually.

Leaders know this but can’t act on it until they’re given long-term assurances. If the government is headed in this direction, every organization will need to take note as it would require businesses to closely assess AI models they’ve already implemented.


Almost all the information on the UK's AI approach still stems from the whitepaper "A pro-innovation approach to AI regulation", published in March 2023. The whitepaper proposes a sector-specific approach as opposed to a more comprehensive framework.

The UK's AI regulation bill also proposes creating a dedicated regulatory body, the "AI Authority", but, again, the legislation is far from being passed into law and seems to play second fiddle to the UK's commitments to various overseas AI hubs.

The government seems blissfully unaware of its opaque approach. Donelan, for example, has said the government’s mission is to “ensure regulation provides the clarity and the certainty that businesses in complex science and tech sectors need to navigate and go forward and grow”.

There’s no question that legislating around a field as broad, complex, and fast-changing as AI will be difficult. Achieving the goal of ethical AI will be harder still. But there is also little to suggest that the UK’s movement on regulation has been up to the challenge, or anything close to providing “clarity” or “certainty”.

Industry experts have even begun to call for stricter measures on AI, arguing for a more "stringent regulatory framework" rather than an avoidance of commitment or a cautious wait-and-see approach.

Even the biggest tech firms are beginning to argue that the UK still has work to do on AI. Microsoft has asked the UK to expand AI safety controls through public-private collaboration on ethical AI, suggesting that the UK government can move faster to become a global AI leader.

Taking all these factors into consideration, it’s clear that the UK will need to do more on legislation. Businesses are demanding a roadmap – the UK can only wait so long before it will be forced to step up to this challenge with more transparency and trust.

George Fitzmaurice is a former Staff Writer at ITPro and ChannelPro, with a particular interest in AI regulation, data legislation, and market development. After graduating from the University of Oxford with a degree in English Language and Literature, he undertook an internship at the New Statesman before starting at ITPro. Outside of the office, George is both an aspiring musician and an avid reader.

-

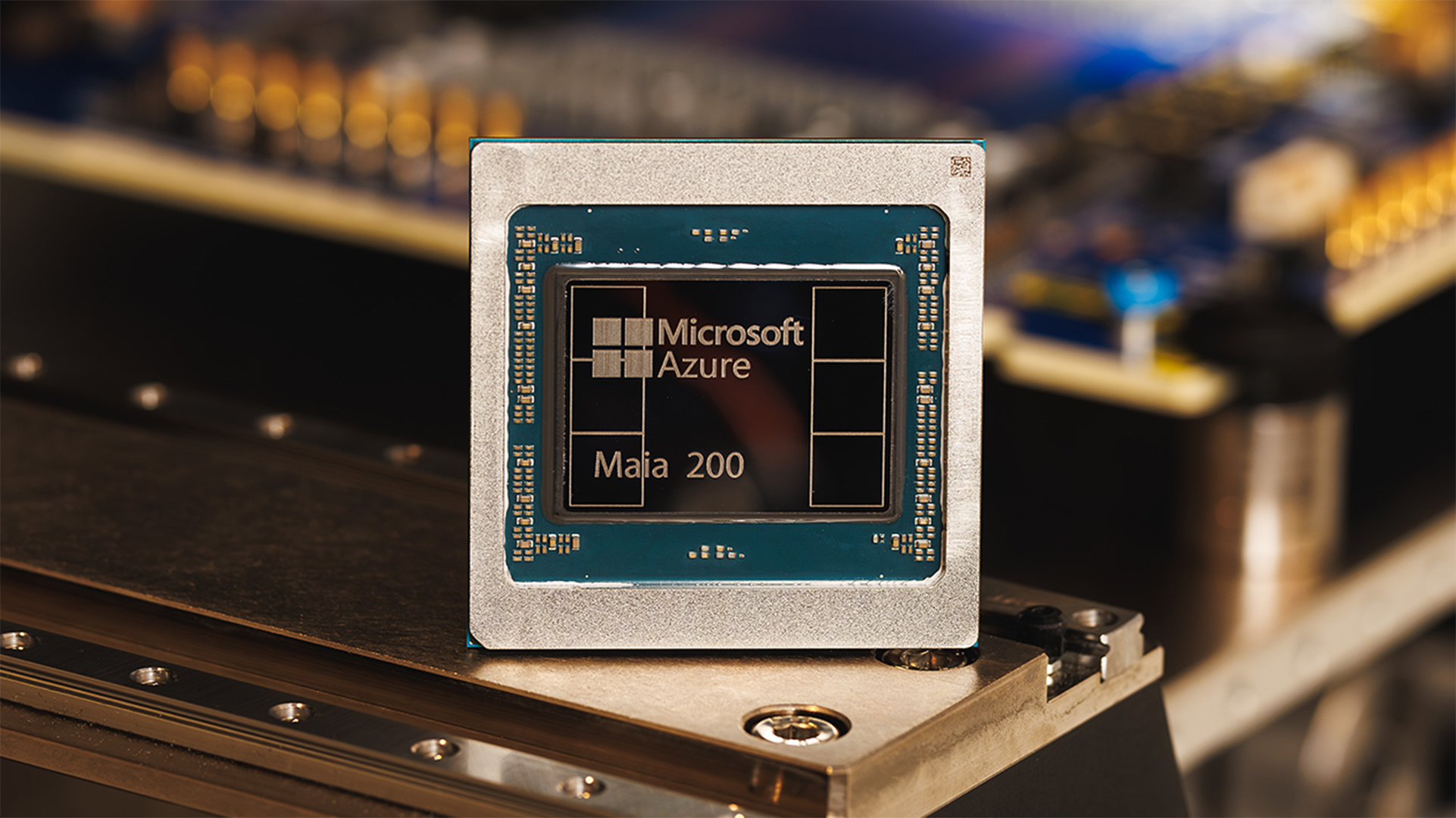

Microsoft unveils Maia 200 accelerator, claiming better performance per dollar than Amazon and Google

Microsoft unveils Maia 200 accelerator, claiming better performance per dollar than Amazon and GoogleNews The launch of Microsoft’s second-generation silicon solidifies its mission to scale AI workloads and directly control more of its infrastructure

-

Infosys expands Swiss footprint with new Zurich office

Infosys expands Swiss footprint with new Zurich officeNews The firm has relocated its Swiss headquarters to support partners delivering AI-led digital transformation