Online Safety Bill: Why is Ofcom being thrown under the bus?

The UK government has handed Ofcom an impossible mission, with the thinly spread regulator being set up to fail

Frequent readers will know I’m no great fan of our telecoms and media regulator, Ofcom. In much the same way I’m no great fan of conjunctivitis or being dangled by my ankles from the back of a moving lorry, in fact. So when I find myself feeling sorry for Ofcom, you know it’s being wronged.

Ofcom is being made the fall guy for everything that’s unworkable in the government’s Online Safety Bill, which is nearing the end of its years-long plod through parliament. Ofcom is the go-to answer when ministers are quizzed about how something incredibly difficult will be resolved; it is the arbiter of anything faintly controversial in the bill; it is being tasked with finding technical solutions that haven’t even been invented yet. And when all this goes horribly wrong – which it will – the politicians will shrug their shoulders and point their finger in Ofcom’s direction. This plot is more obvious than a Bond film’s.

If you open a PDF of the draft bill, which is still being amended, and search for “Ofcom”, you’ll find its name appears 645 times. I listened in on a Commons debate on the draft bill in early December, and MPs mentioned Ofcom hundreds of times more, with politicians from all sides urging the government to give the regulator even more responsibility than it’s already burdened with.

Here is just a flavour, a mere smattering, of some of the things that Ofcom is being tasked with in the legislation as it stands today.

- It has the power to require tech companies to remove images of child sexual exploitation using as yet unidentified “accredited technology”

- The same goes for content promoting terrorism

- It must ensure that social media platforms and adult websites put in place “robust age verification” using technologies once again accredited by the regulator

- It has the power to fine big tech companies 10% of their annual turnover for compliance failures

- It has the power to take action against “individual directors, managers and other officers” at regulated companies, which it must define itself

- It will also have to step up its current responsibility to improve the public’s media literacy

That is just a soupçon of all the Ofcom responsibilities that are already in draft legislation. Then there’s the stuff that MPs want to be added to the bill. Dame Margaret Hodge wants Ofcom to investigate whether individual executives or algorithms are to blame for harmful material not being removed from the internet. Damian Collins said Ofcom must “play a role” in ensuring social networks take down “Russian state-backed disinformation”. John McDonnell called for Ofcom to assess whether confidential journalist material is being exchanged before it issues a notice ordering a tech platform to monitor user-to-user content.

Again, these are only three examples plucked from a single hour of debate on the bill in early December.

It’s not merely the sheer workload being heaped onto Ofcom’s shoulders that makes this an impossible job; Ofcom is also being asked to implement technological solutions that simply don’t exist. David Davis MP made this point in a debate over the proposed Ofcom power to force social media companies to monitor encrypted conversations. “The Bill allows Ofcom to issue notices directing companies to use ‘accredited technology’, but it might as well say ‘magic’, because we do not know what is meant by ‘accredited technology’,” he said.

“Clause 104 will create a pressure to undermine the end-to-end encryption that is not only desirable but crucial to our telecommunications,” he added. “The clause sounds innocuous and legalistic, especially given that the notices will be issued to remove terrorist or child sexual exploitation content, which we all agree has no place online.”

Davis hits the crux of the issue right there. Every time the bill defines something we all want to get rid of – child abuse, terrorist materials, racist content – it punts responsibility to Ofcom to work out how to do it. Ministers say they don’t want to handcuff Ofcom by being prescriptive over how to remove this content, but the truth is they just don’t know how to do it. Nobody does.

And so, it will fall to Ofcom, chaired by a 79-year-old broadcasting veteran best known for being the agent for Morecambe & Wise, to work all this out. Not to mention the 644 other things it has to do, at the time of writing.

It’s an impossible job for a regulator that’s not exactly renowned for being nimble in the first place. I wouldn’t wish the job on my worst enemy. Unfortunately for both of us, it’s my worst enemy that’s got it.

Barry Collins is an experienced IT journalist who specialises in Windows, Mac, broadband and more. He's a former editor of PC Pro magazine, and has contributed to many national newspapers, magazines and websites in a career that has spanned over 20 years. You may have seen Barry as a tech pundit on television and radio, including BBC Newsnight, the Chris Evans Show and ITN News at Ten.

-

What is Microsoft Maia?

What is Microsoft Maia?Explainer Microsoft's in-house chip is planned to a core aspect of Microsoft Copilot and future Azure AI offerings

-

If Satya Nadella wants us to take AI seriously, let’s forget about mass adoption and start with a return on investment for those already using it

If Satya Nadella wants us to take AI seriously, let’s forget about mass adoption and start with a return on investment for those already using itOpinion If Satya Nadella wants us to take AI seriously, let's start with ROI for businesses

-

Three things you need to know about the EU Data Act ahead of this week's big compliance deadline

Three things you need to know about the EU Data Act ahead of this week's big compliance deadlineNews A host of key provisions in the EU Data Act will come into effect on 12 September, and there’s a lot for businesses to unpack.

-

UK financial services firms are scrambling to comply with DORA regulations

UK financial services firms are scrambling to comply with DORA regulationsNews Lack of prioritization and tight implementation schedules mean many aren’t compliant

-

What the US-China chip war means for the tech industry

What the US-China chip war means for the tech industryIn-depth With China and the West at loggerheads over semiconductors, how will this conflict reshape the tech supply chain?

-

Former TSB CIO fined £81,000 for botched IT migration

Former TSB CIO fined £81,000 for botched IT migrationNews It’s the first penalty imposed on an individual involved in the infamous migration project

-

Microsoft, AWS face CMA probe amid competition concerns

Microsoft, AWS face CMA probe amid competition concernsNews UK businesses could face higher fees and limited options due to hyperscaler dominance of the cloud market

-

Can regulation shape cryptocurrencies into useful business assets?

Can regulation shape cryptocurrencies into useful business assets?In-depth Although the likes of Bitcoin may never stabilise, legitimising the crypto market could, in turn, pave the way for more widespread blockchain adoption

-

UK gov urged to ease "tremendous" and 'unfair' costs placed on mobile network operators

UK gov urged to ease "tremendous" and 'unfair' costs placed on mobile network operatorsNews Annual licence fees, Huawei removal costs, and social media network usage were all highlighted as detrimental to telco success

-

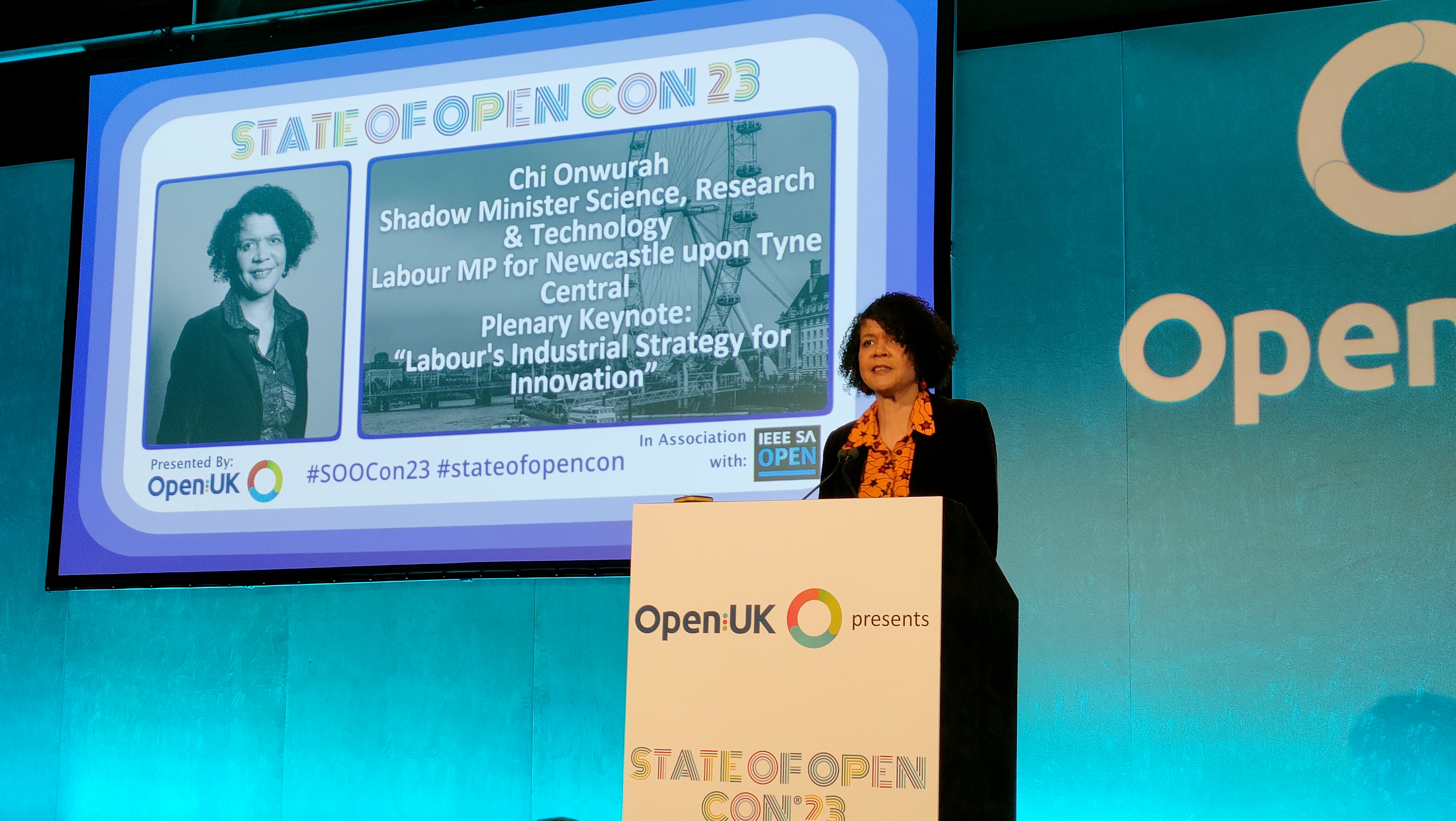

Labour plans overhaul of government's 'anti-innovation' approach to tech regulation

Labour plans overhaul of government's 'anti-innovation' approach to tech regulationNews Labour's shadow innovation minister blasts successive governments' "wholly inadequate" and "wrong-headed" approach to regulation