Qualcomm targets slimmer smart glasses with first dedicated AR SoC

Sleeker smart glasses could be on the horizon thanks to a 40% smaller PCB and almost half the wiring

Qualcomm has today announced its first dedicated platform designed for augmented reality (AR) smart glasses, promising greater power efficiency, improved AI support and smaller device footprints.

The chip manufacturer unveiled the new AR2 Gen 1 system on a chip (SoC) at its annual Snapdragon Summit event in Hawaii. Qualcomm said it's currently working with more than ten OEM partners on devices powered by the new platform, including Lenovo, Oppo, Xiaomi, and more.

The platform is built specifically to support premium AR smart glasses, with another platform catering to more affordable mid-range devices to follow in future.

“Snapdragon AR2 is an extension of our XR portfolio and the first of a series of platforms engineered to meet the specific needs of AR glasses,” said Hugo Swart, Qualcomm’s vice-president and general manager of XR and metaverse. “It delivers groundbreaking technology designed to revolutionise AR glasses, and shake the metaverse. With it, OEMs can create thinner, sleeker, more comfortable and more immersive glasses users will love.”

With the XR2 platform, Qualcomm’s previous foray into virtual and augmented reality technologies, it took around a year for the first OEM devices to start hitting the market, and Swart expects this newest offering to follow the same cadence.

“Let's look into history: we announced XR2 Gen 1 in this event, so November, I think it was 2019; the first devices start to come out at the end of 2020. And then more devices came in 2021 and even ‘22,” he told IT Pro. “Based on where we are in discussions, my feeling is that we can get the same rough timeline. So second half of next year, I think we can start seeing these devices.”

The platform will support up to nine simultaneously-running cameras for improved contextual understanding, a dedicated hardware acceleration engine for better motion and position tracking, and an AI accelerator to reduce latency for six degrees of freedom or hand-tracking controls.

There’s also a reprojection engine, which creates smoother transitions when images move between offscreen and onscreen.

One of the biggest problems affecting the adoption of smart glasses is that current technology means the glasses themselves typically have to be large and bulky to accommodate the necessary components.

Qualcomm has attempted to address this with the new AR2 by combining a physically smaller PCB - 40% reduced, compared to the reference design of its Snapdragon XR2 AR platform - with a 45% reduction in the amount of wiring required.

It also uses a distributed architecture, enabled by a multi-chip design and twin AR processors, which allows components to be spaced out more evenly across the arms and bridge of a pair of glasses without affecting latency.

The AR2 is designed for tethered solutions, meaning data which is sensitive to latency can be processed directly on the device, and more complex data processing requirements can be offloaded to a paired device such as a Snapdragon 8 Gen 2-powered smartphone or a Windows PC running a Snapdragon processor.
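The split described above can be pictured with a minimal Python sketch. It is an illustration only, not Qualcomm's scheduling logic: the `Task` type, the `place` function, the task names and every latency figure are hypothetical, and the sketch assumes that a task stays on the glasses whenever its latency budget is shorter than the round trip to the paired device.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    latency_budget_ms: float  # hypothetical: longest delay the task can tolerate


def place(task: Task, link_round_trip_ms: float) -> str:
    """Decide where a task runs in a tethered glasses-plus-host setup.

    Assumption: a task whose budget is shorter than the round trip to the
    paired device cannot be offloaded, so it stays on the glasses.
    """
    if task.latency_budget_ms < link_round_trip_ms:
        return "glasses"
    return "host"


# Illustrative numbers only; none of them come from Qualcomm.
tasks = [Task("head tracking", 2.0), Task("scene rendering", 30.0)]
placements = {t.name: place(t, link_round_trip_ms=4.0) for t in tasks}
print(placements)
```

In this toy example the tracking task stays on the glasses while the heavier rendering task goes to the phone or PC, which mirrors the division of labour the article describes.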

The system is built on a 4nm process and includes Qualcomm’s dedicated FastConnect 7800 connectivity chip featuring Wi-Fi 7 support and less than 2ms latency.

Power consumption has also been improved thanks to enhanced eye-tracking via the platform’s AI co-processor, which is used to prioritise workloads based on what the user is looking at, scaling back processing in other areas to save power.

Qualcomm claimed that the AR2 Gen 1 platform offers 2.5x better performance for AI operations while consuming 50% less power than the XR2 design, all with less than 1W power consumption.
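Taken together, those two figures imply a larger efficiency gain than either suggests alone. The short Python sketch below works through the arithmetic, assuming both numbers refer to the same AI workload, which Qualcomm's claim does not spell out; the function name is purely illustrative.

```python
def perf_per_watt_gain(perf_ratio: float, power_ratio: float) -> float:
    """Relative performance-per-watt of a new chip versus an old one.

    perf_ratio:  new performance divided by old performance
    power_ratio: new power draw divided by old power draw
    """
    return perf_ratio / power_ratio


# 2.5x the AI performance at 50% less power (0.5x the power draw),
# assuming both figures describe the same workload.
gain = perf_per_watt_gain(perf_ratio=2.5, power_ratio=0.5)
print(f"{gain:.1f}x performance per watt")
```

On that assumption, the AR2 Gen 1 would deliver roughly five times the AI performance per watt of the XR2 design.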

The AR2 Gen 1, along with the Snapdragon 8 Gen 2, is optimised for use with Snapdragon Spaces, Qualcomm’s extended reality (XR) development platform. Pokémon Go developer Niantic announced that it has partnered with Qualcomm to bring its Lightship Visual Positioning System technology to Snapdragon Spaces in 2023, and Adobe announced at the event that its 3D content creation tools, such as Adobe Aero, will begin using the Snapdragon Spaces APIs.

“We strongly believe that Snapdragon Spaces is the industry-leading platform for developers to offer incredible, amazing, immersive XR experiences,” said Govind Balakrishnan, SVP Creative Cloud products and services at Adobe.

“To this end, we are going to start by bringing Adobe's 3D and immersive authoring experiences to smartphones, mobile PCs, and XR devices. And we plan to bring the Universal Scene Description open source technology to Snapdragon hardware devices.”

Adam Shepherd has been a technology journalist since 2015, covering everything from cloud storage and security, to smartphones and servers. Over the course of his career, he’s seen the spread of 5G, the growing ubiquity of wireless devices, and the start of the connected revolution. He’s also been to more trade shows and technology conferences than he cares to count.

Adam is an avid follower of the latest hardware innovations, and he is never happier than when tinkering with complex network configurations, or exploring a new Linux distro. He was also previously a co-host on the ITPro Podcast, where he was often found ranting about his love of strange gadgets, his disdain for Windows Mobile, and everything in between.

You can find Adam tweeting about enterprise technology (or more often bad jokes) @AdamShepherUK.

-

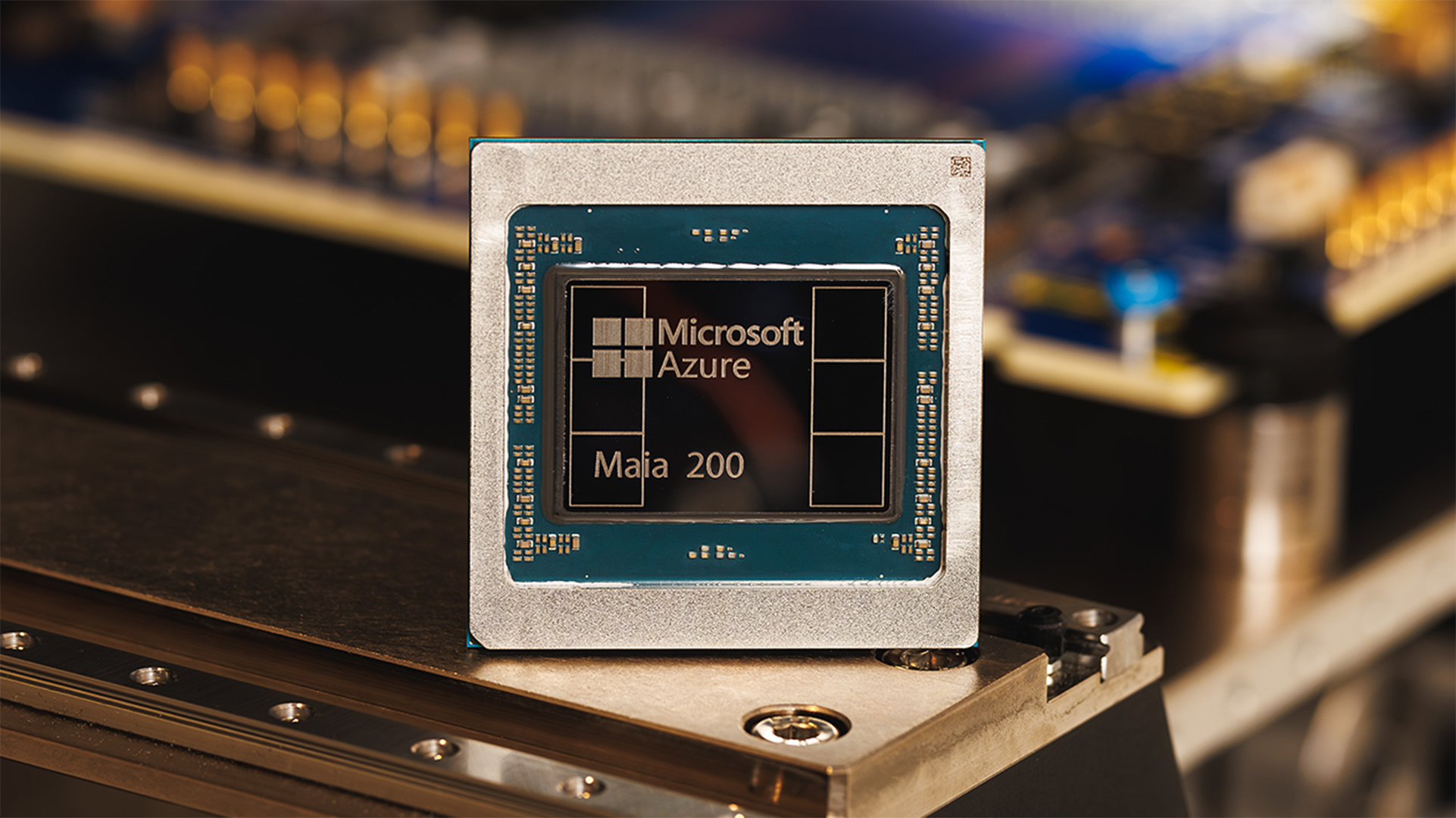

Microsoft unveils Maia 200 accelerator, claiming better performance per dollar than Amazon and Google

Microsoft unveils Maia 200 accelerator, claiming better performance per dollar than Amazon and GoogleNews The launch of Microsoft’s second-generation silicon solidifies its mission to scale AI workloads and directly control more of its infrastructure

-

Infosys expands Swiss footprint with new Zurich office

Infosys expands Swiss footprint with new Zurich officeNews The firm has relocated its Swiss headquarters to support partners delivering AI-led digital transformation