Samsung debuts 'industry-first' AI-powered memory

This architecture combines high-bandwidth memory with AI processing power to boost performance and lower power consumption

Samsung has developed a computing architecture that combines memory with artificial intelligence (AI) processing power which, according to the company, doubles the performance of data centre and high-performance computing (HPC) workloads while reducing power consumption.

Branded an 'industry first', this processor-in-memory (PIM) architecture brings AI computing capabilities to systems that use high-bandwidth memory (HBM), such as data centres and supercomputers. HBM is an existing technology developed by companies including AMD and SK Hynix.

The result, according to Samsung, is twice the performance in high-powered systems and a reduction in power consumption of more than 70%. The gains come largely from integrating the memory and processing components rather than keeping them separate, which sharply reduces the latency of moving data between them.

“Our groundbreaking HBM-PIM is the industry’s first programmable PIM solution tailored for diverse AI-driven workloads such as HPC, training and inference,” said Kwangil Park, Samsung’s vice president of memory product planning.

“We plan to build upon this breakthrough by further collaborating with AI solution providers for even more advanced PIM-powered applications.”

Most computing systems today are based on the von Neumann architecture, which uses separate memory and processor units to carry out data processing tasks.

This approach requires data to move constantly back and forth between the two components, which can create a bottleneck as data volumes keep growing, slowing system performance.
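To make the bottleneck concrete, here is a toy model that counts how many bytes must cross the memory bus when a processor computes a dot product of two vectors held in DRAM. It is an illustration under stated assumptions, not a description of any real memory controller: the 4-byte float32 element size and the function name `von_neumann_bus_bytes` are hypothetical choices for this sketch.

```python
"""Toy model of the von Neumann bottleneck (illustrative assumptions only).

Assumes a dot product of two float32 vectors stored in DRAM, where every
operand must cross the memory bus to the processor before it can be used.
"""

BYTES_PER_ELEMENT = 4  # assumption: float32 operands


def von_neumann_bus_bytes(n_elements: int) -> int:
    """Bytes crossing the memory bus for a dot product of two n-element vectors.

    Both input vectors are read in full by the processor, and the single
    scalar result is written back to memory.
    """
    reads = 2 * n_elements * BYTES_PER_ELEMENT
    write_back = BYTES_PER_ELEMENT
    return reads + write_back


if __name__ == "__main__":
    for n in (1_000, 1_000_000):
        print(n, von_neumann_bus_bytes(n))
```

Under these assumptions, bus traffic grows linearly with the amount of data processed, which is the scaling problem described in the paragraph above.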

HBM-PIM, developed by Samsung, places a DRAM-optimised AI engine within each memory bank, enabling parallel processing and minimising the movement of data.
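A rough sketch of the per-bank idea, assuming a simplified model in which a vector is split evenly across memory banks and each bank's compute engine reduces its own slice to a single partial sum before anything leaves the memory. The bank layout, the float32 element size and the function names are hypothetical; this is not Samsung's programming interface, and real HBM-PIM hardware would run the per-bank work in parallel rather than in a Python loop.

```python
from typing import List, Tuple

BYTES_PER_ELEMENT = 4  # assumption: float32 partial sums


def split_into_banks(values: List[float], n_banks: int) -> List[List[float]]:
    """Spread a vector across n_banks in contiguous slices (hypothetical layout)."""
    size = -(-len(values) // n_banks)  # ceiling division so every element is placed
    return [values[i * size:(i + 1) * size] for i in range(n_banks)]


def pim_dot_product(a: List[float], b: List[float], n_banks: int) -> Tuple[float, int]:
    """Dot product where each bank reduces its own slice before data leaves memory.

    Returns the result and the number of bytes that cross the bus to the host.
    """
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    a_banks = split_into_banks(a, n_banks)
    b_banks = split_into_banks(b, n_banks)
    # Each bank's engine multiplies and accumulates next to its own data.
    # Real hardware would do this for all banks at once; here it is a loop.
    partials = [
        sum(x * y for x, y in zip(a_slice, b_slice))
        for a_slice, b_slice in zip(a_banks, b_banks)
    ]
    # Only one partial sum per bank is sent to the host for the final add.
    bus_bytes = len(partials) * BYTES_PER_ELEMENT
    return sum(partials), bus_bytes


if __name__ == "__main__":
    result, bus_bytes = pim_dot_product([1.0] * 1000, [2.0] * 1000, n_banks=16)
    print(result, bus_bytes)
```

With 1,000-element vectors spread over 16 banks, only 16 partial sums (64 bytes) leave the memory in this model; the bus traffic depends on the number of banks rather than on the length of the vectors.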

“I’m delighted to see that Samsung is addressing the memory bandwidth/power challenges for HPC and AI computing,” said Rick Stevens, associate laboratory director for computing, environment and life sciences at Argonne National Laboratory, a US Department of Energy research centre.

“HBM-PIM design has demonstrated impressive performance and power gains on important classes of AI applications, so we look forward to working together to evaluate its performance on additional problems of interest to Argonne National Laboratory.”

Samsung’s innovation is being tested inside AI accelerators by third parties in the AI sector, with testing expected to be completed within the first half of 2021. Early tests with Samsung’s HBM2 Aquabolt memory system demonstrated the performance improvements and power consumption reduction cited above.

Keumars Afifi-Sabet is a writer and editor who specialises in public sector, cyber security, and cloud computing. He first joined ITPro as a staff writer in April 2018 and eventually became its Features Editor. Although a regular contributor to other tech sites in the past, these days you will find Keumars on LiveScience, where he runs its Technology section.

-

Sumo Logic expands European footprint with AWS Sovereign Cloud deal

Sumo Logic expands European footprint with AWS Sovereign Cloud dealNews The vendor is extending its AI-powered security platform to the AWS European Sovereign Cloud and Swiss Data Center

-

Going all-in on digital sovereignty

Going all-in on digital sovereigntyITPro Podcast Geopolitical uncertainty is intensifying public and private sector focus on true sovereign workloads

-

Google claims its AI chips are ‘faster, greener’ than Nvidia’s

Google claims its AI chips are ‘faster, greener’ than Nvidia’sNews Google's TPU has already been used to train AI and run data centres, but hasn't lined up against Nvidia's H100

-

£30 million IBM-linked supercomputer centre coming to North West England

£30 million IBM-linked supercomputer centre coming to North West EnglandNews Once operational, the Hartree supercomputer will be available to businesses “of all sizes”

-

How quantum computing can fight climate change

How quantum computing can fight climate changeIn-depth Quantum computers could help unpick the challenges of climate change and offer solutions with real impact – but we can’t wait for their arrival

-

“Botched government procurement” leads to £24 million Atos settlement

“Botched government procurement” leads to £24 million Atos settlementNews Labour has accused the Conservative government of using taxpayers’ money to pay for their own mistakes

-

Dell unveils four new PowerEdge servers with AMD EPYC processors

Dell unveils four new PowerEdge servers with AMD EPYC processorsNews The company claimed that customers can expect a 121% performance improvement

-

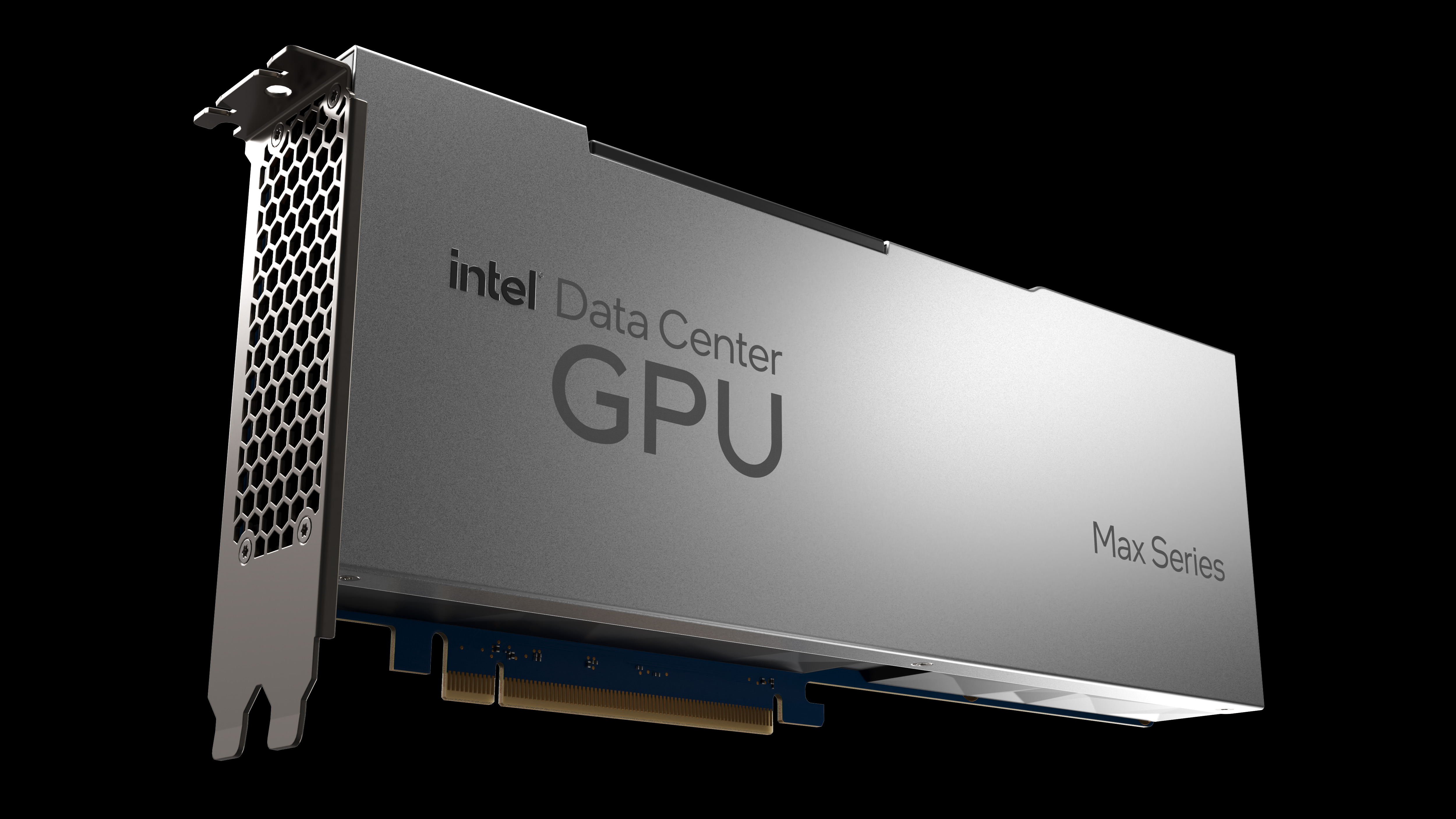

Intel unveils Max Series chip family designed for high performance computing

Intel unveils Max Series chip family designed for high performance computingNews The chip company claims its new CPU offers 4.8x better performance on HPC workloads

-

Lenovo unveils Infrastructure Solutions V3 portfolio for 30th anniversary

Lenovo unveils Infrastructure Solutions V3 portfolio for 30th anniversaryNews Chinese computing giant launches more than 50 new products for ThinkSystem server portfolio

-

Microchip scoops NASA's $50m contract for high-performance spaceflight computing processor

Microchip scoops NASA's $50m contract for high-performance spaceflight computing processorNews The new processor will cater to both space missions and Earth-based applications