Welcome to ITPro’s live coverage of Google Cloud Next 2024 at the Mandalay Bay hotel in Las Vegas.

It's day-two at the conference, and we’ll be bringing you all the latest news and updates from the developer keynote session.

Google Cloud describes this session as a “talk by developers, for developers”, so we’ll be diving into a lot of technical detail on all the latest goings on at the hyperscaler, including the new products, services, and tools being harnessed by customers.

While we’re waiting for the keynote session to begin this morning, why not catch up on all of our coverage from Google Cloud Next so far?

• Google Cloud doubles down on AI Hypercomputer amid sweeping compute upgrades

• Google Cloud targets ‘AI anywhere’ with Vertex AI Agents

"Five minutes until true live," we've just been told. Is it too much to hope that this will mean a properly live demo of Gemini? Live demos are often spurned at tech conferences as they present a risk, so if we get treated to this it would be a real vote of confidence by Google Cloud in its products.

"AI is a today problem"

We're off, starting with a stylized countdown to the official start of the conference and quickly moving into a video montage of some of Google Cloud's recent breakthroughs in AI.

At Google, we're told, "AI is a today problem" with the montage taking in the various business use cases for Google AI. "It can translate from code to code and is one step closer to speaking a thousand languages." We're also told it has transformational applications for analytics and security.

Here to tell us more is Thomas Kurian, CEO at Google Cloud. "It's been less than eight months since Next 2023," he begins, adding that in this time Google Cloud has introduced over 1,000 product advances and expanded its infrastructure, including unveiling six new subsea cables.

Kurian then welcomes Sundar Pichai, appearing in a pre-recorded video, to go into more detail on some of the biggest advances Google Cloud has made.

Nearly 90% of AI unicorns are Google Cloud customers, Pichai tells the audience. Focusing in on Gemini, he stresses the vast advances that have been made with Google's in-house model in the past year alone – Gemini 1.5 Pro, now being made generally available, can run one million tokens of information consistently and can process audio, video, code, text, and images through its multimodal form.

These could be used, Pichai says, by an insurance company to take in video and images of an incident and automate the claims process.

We’re told to expect testimonies from representatives at companies like Uber, Goldman Sachs, and Palo Alto to explore concrete ways in which Google AI is already changing business processes.

Passing the baton back to Kurian, we're being given an overview of some of today's news.

“Our biggest announcements today are focused on generative AI,” Kurian says, explaining that Google Cloud is focused on AI ‘agents’ that can be used to process multimodal information to automate complex tasks such as personal shopping or making employee handoffs for nurses more automated.

Teasing these leaps is one thing, but grounding them in tangible improvements to business processes is key.

Here (via video) to explain more is David Solomon, chairman and CEO at Goldman Sachs.

“At Goldman Sachs specifically, our work with generative AI has been focused on three key pillars: enabling business growth, enhancing client experience, and improving developer productivity.”

Solomon says the evidence already shows that generative AI can be used to improve developer efficiency by up to 40%. The company is also exploring ways to use AI to summarize public filings and gather earnings information.

Infrastructure is crucial in the age of AI

Kurian notes that infrastructure is all-important to support AI development in the cloud and introduces Amin Vahdat, VP Systems and Infrastructure at Google Cloud, to unpack today's slew of infrastructure announcements.

Vahdat runs through a string of new announcements across Google's AI Hypercomputer, including the general availability of its new v5p TPU AI accelerator and the upcoming rollout of Nvidia's new Blackwell chip family to Google Cloud.

The firm has also targeted load-time improvements through Hyperdisk ML, a block storage solution that can offer up to 11.9x improvements over comparable alternatives.

To read more about today's AI Hypercomputer and infrastructure news, check out our in-depth coverage.

Google Axion is here

Thomas Kurian has emerged from a spinning wall to unveil Google Axion, Google’s first custom, Arm-based CPU for the data center. It is capable of providing 60% better energy efficiency than comparable x86 instances and 50% performance improvements over competing chips.

"That's why we've already started deploying Axion on our base instances, including BigQuery, Google Earth Engine, and YouTube Ads Cloud," Kurian says.

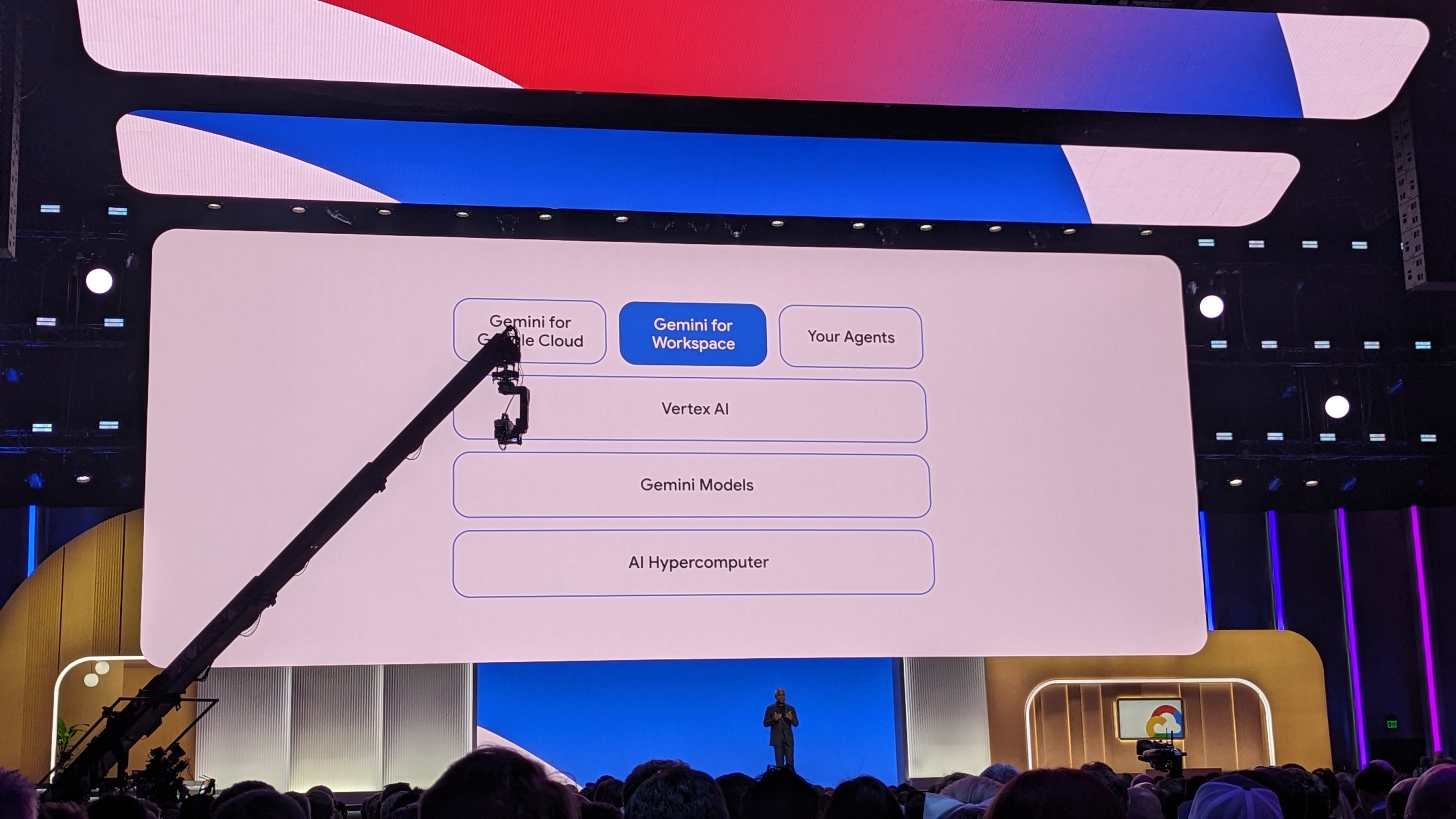

Google Cloud is doubling down on Vertex AI

Kurian moves smoothly onto Vertex AI, which he says is the only solution that offers a “single platform for model tuning and infrastructure”.

More than 130 models are available through Vertex AI, including Google’s own Gemini family of models. Kurian stresses that with Gemini 1.5 Pro, Vertex just became more seamless. Putting its one million token context window in perspective, he says it can handle the equivalent of:

• One hour of video

• 11 hours of audio

• Over 30,000 lines of code

• 700,000 words
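To put those equivalences in more concrete terms, here is a rough Python sketch that converts the figures quoted on stage into per-unit token estimates. The ratios are simply the one million token window divided by each quoted amount, so they are back-of-the-envelope assumptions rather than official tokenizer rates, and the function names are hypothetical. Since the code figure was quoted as "over 30,000 lines", the per-line estimate is an upper bound.

```python
CONTEXT_WINDOW_TOKENS = 1_000_000

# Amounts quoted in the keynote as roughly filling the window.
# Treating each as an exact capacity is an assumption for illustration.
QUOTED_CAPACITY = {
    "video_seconds": 1 * 3600,   # one hour of video
    "audio_seconds": 11 * 3600,  # 11 hours of audio
    "code_lines": 30_000,        # "over 30,000" lines, so a lower bound
    "words": 700_000,
}


def tokens_per_unit(kind: str) -> float:
    """Implied tokens consumed by one unit (second, line, or word)."""
    return CONTEXT_WINDOW_TOKENS / QUOTED_CAPACITY[kind]


def estimated_tokens(kind: str, amount: float) -> int:
    """Estimate how many tokens a given amount of content would use."""
    return round(tokens_per_unit(kind) * amount)


def fits_in_window(kind: str, amount: float) -> bool:
    """Check whether the estimate stays within the one million token window."""
    return estimated_tokens(kind, amount) <= CONTEXT_WINDOW_TOKENS


if __name__ == "__main__":
    for kind in QUOTED_CAPACITY:
        print(f"{kind}: ~{tokens_per_unit(kind):.2f} tokens per unit")
    print("Two hours of video fits:", fits_in_window("video_seconds", 2 * 3600))
```

By this estimate a word costs a little under 1.5 tokens, while a second of video costs a few hundred, which is why video fills the window so much faster than text.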

Kurian adds that third-party and open models are an equally important part of Google Cloud's AI offering. He notes that today, Google Cloud has announced Anthropic's Claude 3 will come to Vertex AI, alongside the lightweight open coding model Code Gemma.

‘Supervised Tuning for Gemini Models’ will help businesses augment models in a simple way. Models can be linked to existing data via extensions in Vertex and data can also be used for retrieval-augmented generation (RAG).

To address hallucinations, Kurian emphasizes that AI models can now be grounded in Google Search, which he says “significantly” reduces hallucinations and improves responsiveness. This could make AI agents through Google Cloud more of a feasible option for public bodies and protected industries that need accurate information grounded in truth.

"The opportunity of customer agents is tremendous," Kurian says, which is why Google Cloud has put a lot of work into making the process of using the new Vertex AI Agent Builder as simple as possible.

Powered by Gemini Pro, agents can hold free-flowing conversations, shape conversations, and return information that is grounded in enterprise data.

Agents can be integrated with data from BigQuery or SaaS applications for more context and to help them generate as detailed results as possible. To explain more, we're being shown a demo by Amanda Lewis, developer relations engineer at Google Cloud.

An agent can use Gemini’s multimodal reasoning to identify and find a product based on a descriptive prompt of a video. The prompt “Find me a checkered shirt like the keyboard player is wearing. I’d like to see prices, where to buy it, and how soon I can be wearing it” returns the product quickly and with all the relevant information.

This can then be followed up with a phone conversation with the agent, in which speech is processed to answer questions about the product, action the purchase, and give tips on similar products and active promotions.

That’s the customer side of the experience, but what about developer and employee productivity? Uber is using Gemini for Google Workspace and we’re now being told more by Dara Khosrowshahi, CEO at Uber, via video insert.

Uber is already using Google Cloud to summarize user communications and provide context on previous conversations.

To expand on this, Aparna Pappu, GM and VP of Workspace at Google Cloud has taken to the stage.

Shots fired at rivals

Gemini for Workspace is capable of securely processing data from across an organization’s ecosystem to provide context and answer questions to improve employee productivity.

We’re starting with some shots fired at Webex, Zoom, and Microsoft Teams, with Pappu noting that Meet has been shown to outperform these platforms on audio and video quality. But to press this lead further, Google Cloud is bringing its Gemini assistant across all its workplace apps.

The AI meetings and messaging add-on brings AI translation and note-taking for $10 per user, per month. To explain more, we’re being treated to another live demo showing how Gemini can summarize and explain files within Docs.

Using Gemini’s side panel, a user can enter the prompt “Does this offer comply with the following” and attach a Google Doc of their company’s supplier compliance rulebook to see if an 80-page proposal has any compliance issues. In seconds, the model is able to identify a security certificate issue within the document.

Beyond the off-the-shelf version of Gemini, Google Cloud is emphasizing the power of training your own employee agents within Vertex AI. Companies can train and ground their agents in their enterprise data, with new multimodal capabilities allowing video and audio to be fed in directly.

Pappu adds that Gemini is also capable of translation inherently, which could be ideal for companies looking to draw in context from across their global offices.

In yet another demo, we're shown an employee using a custom agent to compare 2025 benefits plans. Using its large context window, Gemini can draw in data from across multiple company PDFs and present it in a digestible format so the employee can make an informed decision on their healthcare plan.

Via API, the agent can even connect to Google Maps and the employee's orthodontic plan to suggest nearby clinics that fall under their plan, with each option summarized with annotations. All of this helps the employee to quickly research their options – at the end of the process, the agent can even suggest a time for a call with the clinic that doesn't clash with the employee's schedule.

With its in-built language support, Gemini can also handle this across multiple languages. Google Cloud says that this will help empower employees wherever they work – in the demo, the employee can be shown the medical plan comparison in English and Japanese simply by asking.

Generative AI has applications in the creative space and to capitalize on this fully, Google Cloud has today launched a brand new app in Workspace: Google Vids.

"Google Vids is an AI-powered video creation app for work," Pappu says, explaining that it can be used to easily put together event montage videos and slideshows. It will also produce scripts based on the videos and can be tweaked using videos in Drive and files from across a user's business.

Based on an outline provided by users, Vids can generate edited videos scored with royalty-free music and containing Google-licensed stock footage.

Rooted within Workspace, Vids comes with the same collaboration capabilities as Docs, Sheets, and other Google apps.

Some customers have already had access to Vids, with Pappu promising more details in tomorrow's keynote. Other creative applications for generative AI announced today include 'text-to-live-image' within Imagen 2, which turns text into GIF-like images and Digital Watermarking so that AI-generated images can be easily identified.

Sifting through enterprise data is laborious, but Vertex can help

Moving away from creative applications, Vertex AI Agents can also be used to comb through enterprise data, with ‘data agents’ able to use natural language queries for powerful data analytics and search functionality.

In a video clip, we're told that Walmart is already using Google Cloud's Vertex AI data agents for data analysis purposes, while News Corp is using data agents to search through 2.5 million news articles from across 30,000 sources. Vodafone also uses data agents to empower its 800 operators to quickly search around 10,000 contracts.

A major announcement from today is that Vertex AI can be seamlessly integrated with BigQuery for data searches. We're told that by combining Vertex AI and BigQuery, businesses can expect up to four times faster performance.

To dig into this in more detail, we've got another live demo (we were promised "real live", after all). Using a custom data agent within Looker, analysts can interrogate data using natural language.

As an example, we're shown an agent using prompts such as "Show me projected supply levels compared to demand" and given a graph in return. Following on from this, the agent can be asked to find products that look similar to an image uploaded by the user (in this case a stylish Google Cloud shoe), with summaries of the price range and delivery schedule for the top three results.

Users can ask the agent to draw correlations between supply and sales, or other data points based on their enterprise data. To round this off, the analyst can ask for the information to be compiled and shared with the relevant team.

Google's answer to GitHub Copilot is here

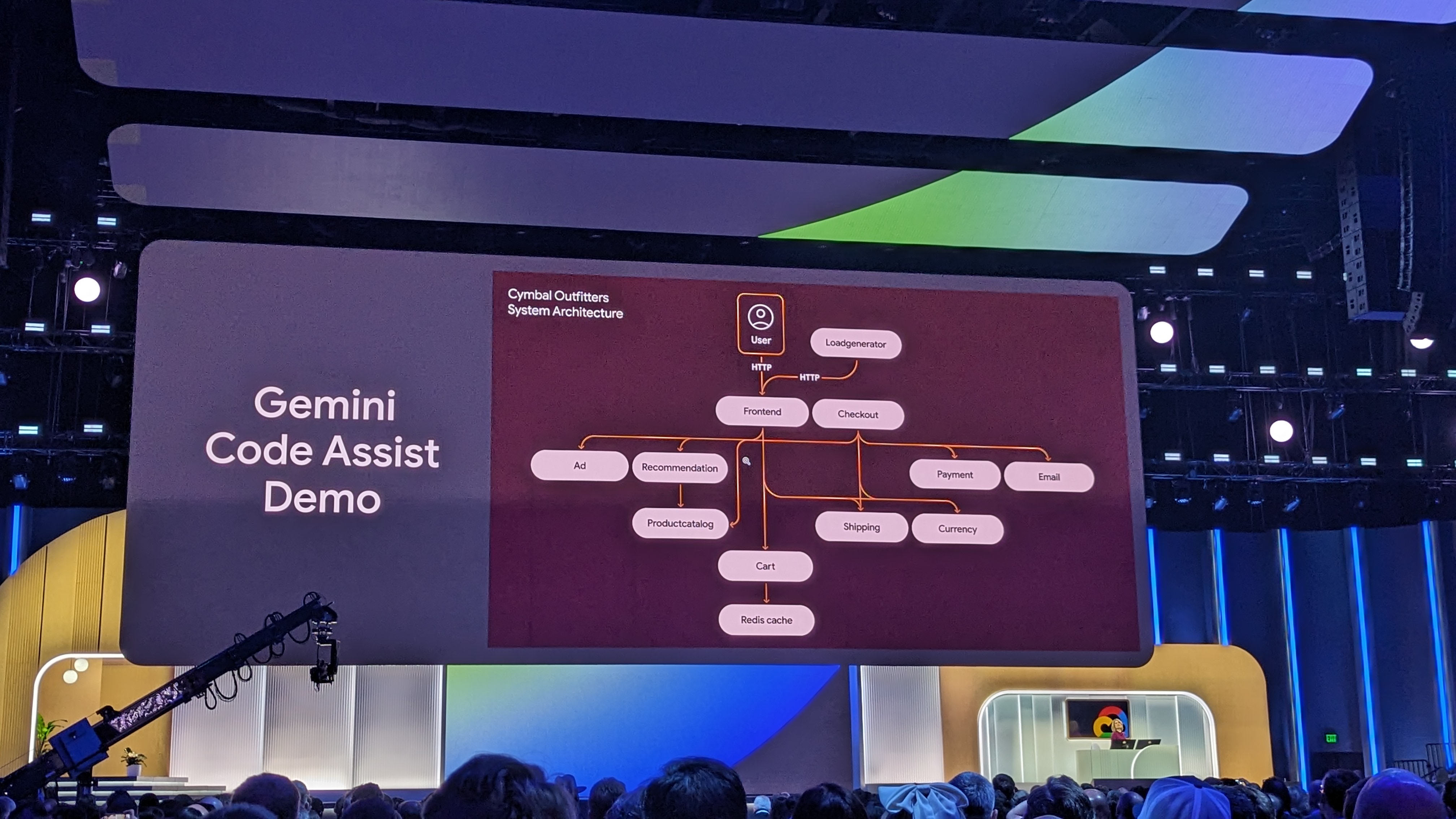

Gemini Code Assist is a major announcement at today's conference. It's Google Cloud's new built-in AI pair programmer, capable of generating vast amounts of detailed code and producing tailored outputs based on a company's own codebase.

We're now hearing from Fiona Tan, CTO at Wayfair, which has already used Gemini Code Assist to improve developer productivity.

"We chose Gemini Code Assist after running a number of pilots and evaluations," Tan says. "The results against control showed significant improvements across the spectrum."

At Wayfair, developers were able to set up environments 55% faster with Gemini Code Assist, with 60% of developers stating they were able to focus on more satisfying work as a result.

Gemini Code Assist supports code bases anywhere, we're told – not just GitHub. With Gemini 1.5 Pro in Code Assist, entire codebases can be transformed at once thanks to the model's one million token context window.

Another key announcement made today is Gemini Cloud Assist, a tool that helps teams design and manage their application lifecycle. The tool can answer questions about user cloud issues or even suggest architectural configurations based on a natural-language description of a business' needs.

In a demo, we're shown Gemini Code Assist being used to complete, in just minutes, an HTML task that we're told would normally take days. In the scenario, a fictional company's marketing team has asked a developer to move a custom recommendation service, normally shown only to customers after they've made a purchase, to the homepage for all to view.

This will be based on a Figma mockup produced by the company's design team.

"For the developers out there, you know that this means we're going to need to add padding on the homepage, modify views, and make sure that the configs have changed for our microservices," says Paige Bailey, product manager for generative AI at Google Cloud.

"Typically it would take me a week or two just to become familiarized with our company's codebase which has over 100,000 lines of code across 11 services."

With Gemini Code Assist, Paige shows us that she can simply ask Gemini to produce a "for you recommendation section on the homepage" and provide images of the approved design. In response, Gemini Code Assist finds the function used for recommendation, identifies the calls needed, and highlights which files need changes using codebase context. These changes can then be accepted by a developer, for ease of use while also ensuring a human is involved.

Using behind-the-scenes analysis of the user's codebase, Gemini can suggest functions while keeping the developer in the loop throughout the whole process. This is something that not all AI models could handle, as many have context windows that couldn't handle an entire codebase at once.

Security in the age of AI

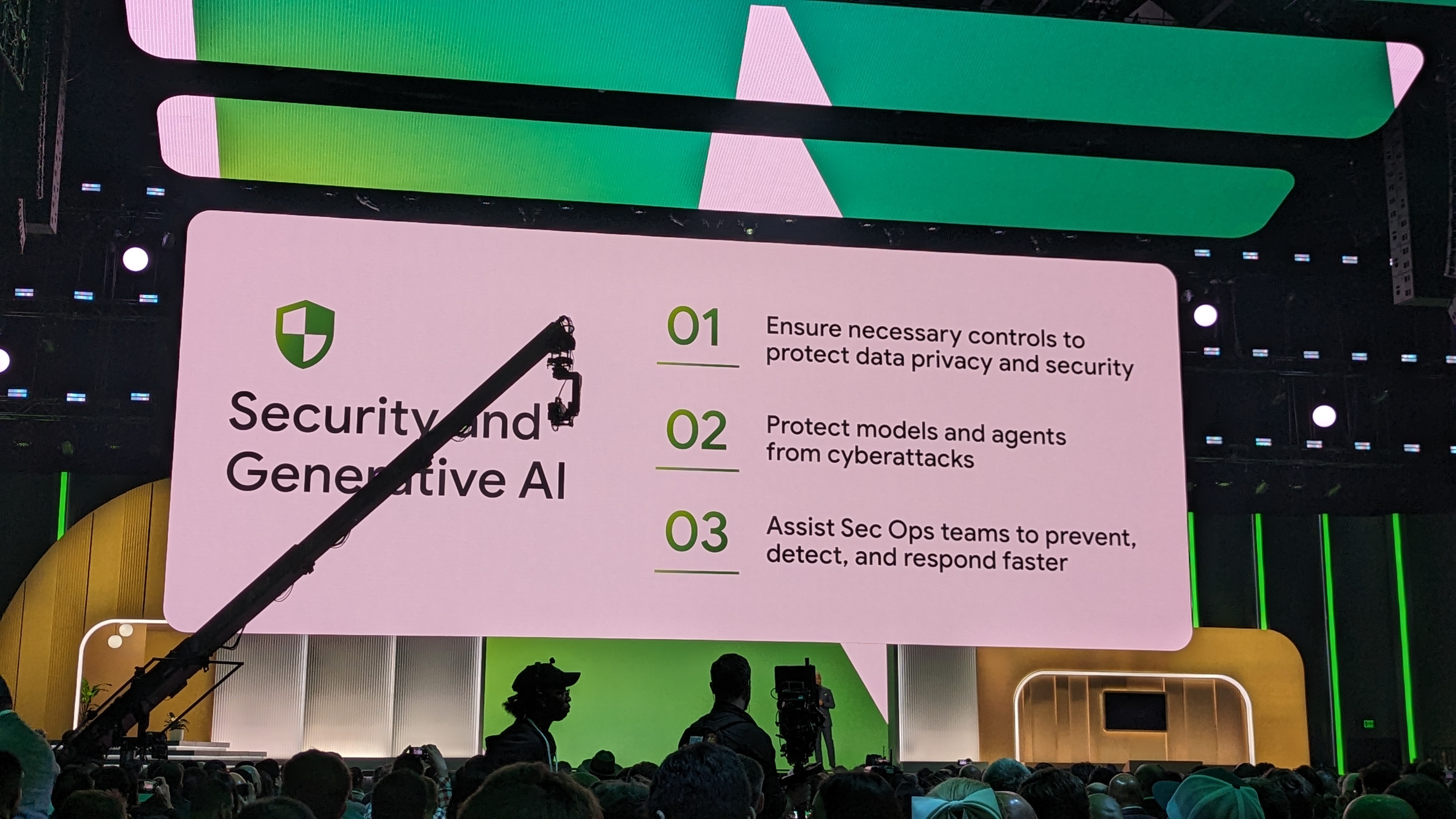

Kurian is back on stage to emphasize the importance of security when it comes to AI. He explains that Google Cloud is committed not only to mitigating AI risk, but also to using AI to actively fight back against threats.

Combining Google's threat intelligence with Mandiant data, Kurian says Gemini in Threat Intelligence will surface threats using natural language prompts. This is another area where Gemini 1.5 Pro's long context window comes in handy, he adds, as the model is capable of analyzing large amounts of potentially malicious code.

To summarize, Kurian outlines three areas Google Cloud is intensely focused on when it comes to security and AI:

"First, to ensure our delivery platform protects the privacy and security with data we provide sovereignty and compliance controls, including data location, encryption, and access controls.

"Second, we continue to enhance our AI platform to protect your models and agents from cyber attacks that malicious prompts can bring.

"Third, we're applying generative AI to create security agents that assist security operations teams by radically increasing the speed of investigations."

Palo Alto is aligned with Google Cloud on these goals, Kurian says. To expand on this we're now being shown a video of Nikesh Arora, CEO at Palo Alto Networks.

"Very excited about all the capabilities that Gemini brings to the fore," he says. "This large language model has allowed us to do things a little faster, get more precise, we're hitting numbers north of 90% in terms of accuracy. Effectively, what we're seeing in terms of early trials is it makes our usability better, our explainability better."

Gemini in Security Command Center is another area in which AI is being used to respond to threats. The service lets businesses evaluate their security posture and summarize attack patterns more quickly.

"Together, we're creating a new era of generative AI agents built on a truly open platform for AI. And we're reinventing infrastructure to support it," Kurian says, noting that all of today's announcements feed into one another to form an end-to-end platform for AI.

And with that, today's keynote is officially over. It's been a whirlwind of announcements, with barely any pausing for breath over the past 90 minutes. Stay tuned for more from the conference via ITPro throughout the week.

What's happened so far at Google Cloud Next?

Day-one at Google Cloud Next was a very busy affair. In the opening keynote, CEO Thomas Kurian took us through all the developments at the cloud giant over the last year.

It's hard to believe it’s only been eight months since the last event when you consider the rapid pace of change both at the company and in the cloud computing and AI space.

Naturally, we saw a lot of product announcements, with a sharp focus on Google’s exciting Gemini large language model range. Gemini appears very much woven into the fabric of Google Cloud offerings now, and the firm is keen to get customers innovating with its array of features.

We also had big updates for Google Workspace, with more Gemini-powered tools being rolled out for the productivity software suite.

It’s going to be another hectic day here at the conference, so be sure to keep up to date with all the announcements here in our rolling live blog.


It's the second morning of Google Cloud Next and the energy hasn't dropped a bit. ITPro has been told around 30,000 people are in attendance this year, bringing the crowds back to pre-pandemic figures.

We're just over an hour away from the second keynote, which will cover the event's AI announcements in more detail. Google Cloud has gone for the title 'Fast. Simple. Cutting edge. Pick three.', a show of confidence in its ability to deliver real business value with AI. It's going to be a lively talk with testimony from sector experts, so stay tuned for the latest.

The Michelob Ultra Arena is slowly filling up, with 45 minutes yet to go until the second keynote begins. To give readers perspective on the crowd size at this year's event, this is a photo of the exodus from the arena following the day-one keynote.

Just five minutes to go now and the crowds are pouring in. After the fast-paced general keynote, this keynote is sure to pack in more on the technical details of the event's major announcements. We've also been assured more demos are on the way, which always help put the actual value of products in perspective.

And we're off, starting with a dramatic drumroll. Our hosts for the event are Richard Seroter, chief evangelist at Google Cloud, and Chloe Condon, senior developer relations engineer at Google Cloud.

To kick things off, we're hearing that Google Cloud used Gemini 1.5 Pro to extract some information from last year's developer keynote. This meant pasting in 75 minutes of HD video, which Gemini was able to summarize in just over a minute.

This keynote is promised to be "by developers, for developers". We're told we'll learn how Google Cloud is committed to giving developers better coding, better platforms, and better ops.

Improving developer productivity using Gemini

The first guest onstage is Brad Calder, VP and GM at Google Cloud, who is here to explain more about how Gemini 1.5 Pro can improve developer workflows. He starts by acknowledging the leap forward with Gemini Code Assist, with its new one million token context window allowing developers to use AI for comprehensive reviews and upgrades.

Calder says that Gemini Cloud Assist uses context from an enterprise's cloud environment to manage application lifecycles and "solve problems in minutes instead of hours".

Here to show us more is Jason Davenport, developer advocate at Google Cloud. He's running us through a demo in which the website of an online retailer called 'Cymbal Shop' is down, hurting sales. He can prompt Gemini to show the recent alerts for 'cymbal-shop app' and receive a list of incidents in response.

He can then click on individual incidents to see logs and discover the issue is a number of 503 errors. Gemini can go further, summarizing JSON to show that there is a load balancing issue and then explaining how to troubleshoot the issue via a simple user request. Advice is specific to the user's own cloud environment, not just general information trained from search results.
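The log triage step in that demo can be sketched by hand in a few lines of Python. This is a minimal illustration only: the log lines, field names (`status`, `backend`, `path`), and backend names are invented for the example and are not the Cloud Logging schema or anything shown on stage.

```python
import json
from collections import Counter

# Hypothetical newline-delimited JSON log entries (invented for illustration).
SAMPLE_LOGS = """
{"status": 200, "backend": "frontend-a", "path": "/"}
{"status": 503, "backend": "frontend-b", "path": "/cart"}
{"status": 503, "backend": "frontend-b", "path": "/checkout"}
{"status": 503, "backend": "frontend-a", "path": "/cart"}
{"status": 503, "backend": "frontend-b", "path": "/"}
{"status": 200, "backend": "frontend-a", "path": "/product/1"}
"""


def summarize_errors(raw_logs: str, status: int = 503) -> Counter:
    """Count log entries with the given HTTP status, grouped by backend."""
    counts = Counter()
    for line in raw_logs.strip().splitlines():
        entry = json.loads(line)
        if entry["status"] == status:
            counts[entry["backend"]] += 1
    return counts


if __name__ == "__main__":
    for backend, count in summarize_errors(SAMPLE_LOGS).most_common():
        print(f"{backend}: {count} x 503")
```

If most of the 503s cluster on one backend, as they do in this made-up sample, that points toward an uneven load distribution rather than a site-wide outage, which is the kind of conclusion the assistant is described as drawing automatically.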

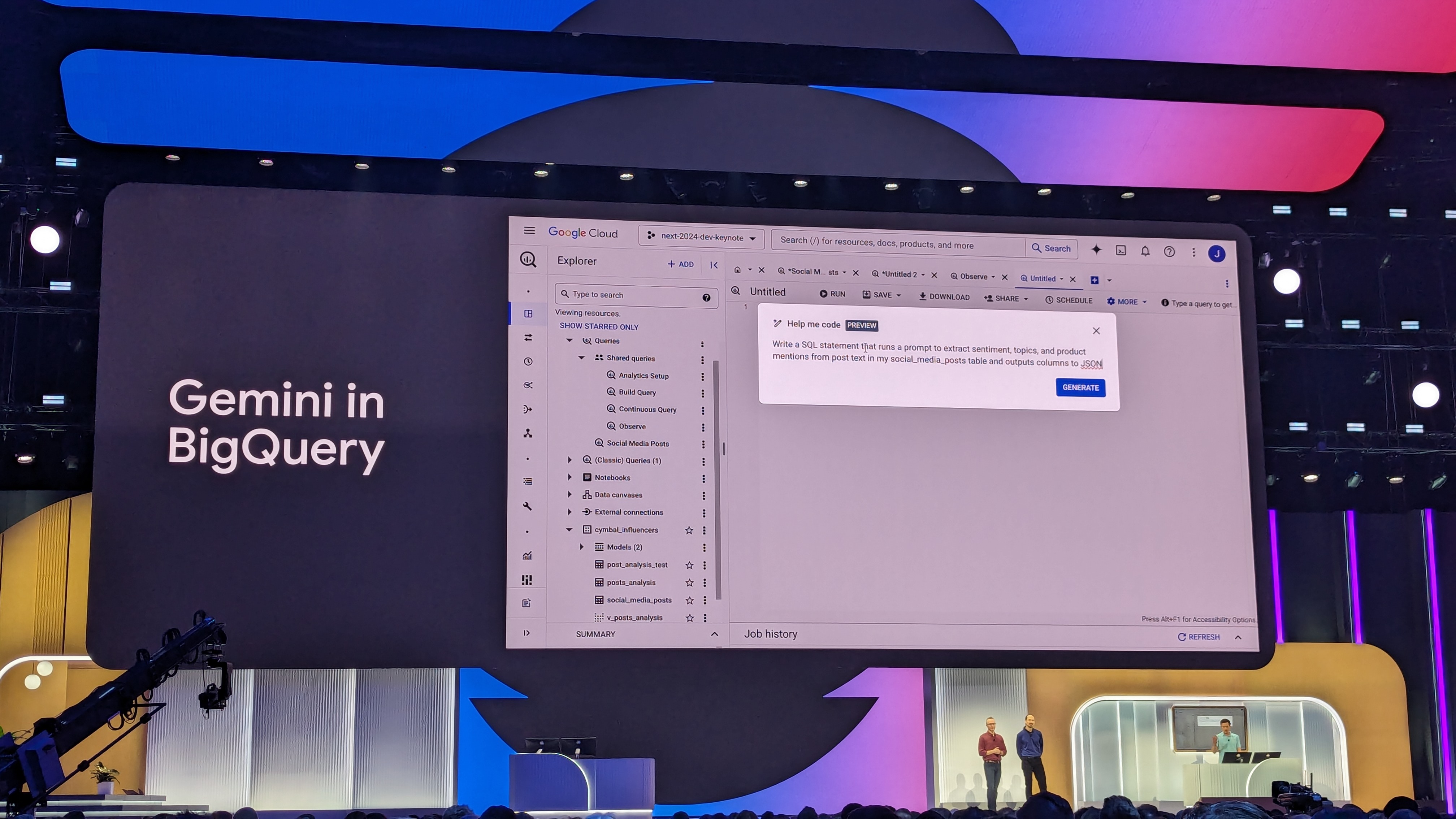

The power of Gemini in BigQuery

Gemini in BigQuery, another big announcement at Google Cloud Next, promises to make data analysis and searching your business' data easier than ever.

We're being shown a demo in which a developer queries some records from social media platforms.

The user can ask Gemini to write a SQL query that extracts sentiment, topics, and product mentions in their social media feed and outputs the results as JSON columns.

In one step, the user can then enable 'continuous queries', a real-time data pipeline managed by BigQuery. They can then send this to their event handler, Pub/Sub, so that the applications and data teams can easily access the information by hitting 'run'.

Gemini for Google Cloud casts a wide net over business ecosystems

Condon is back on stage, to run us through how to build using Gemini for Google Cloud. Here to tell us more is Femi Akinde, product manager at Google Cloud.

We're being shown a demonstration of Gemini's multimodal power, with another example of it deriving context from a video stream. In this case, it's a video of Chloe's bookshelf.

"You and I both know LLMs are trained on general information – so can we get Gemini to recommend fantastic places to travel based on your books?" asks Akinde. Gemini does indeed produce recommendations based on the video, but Akinde and Condon say they want to go further and turn this into an app.

This means turning to Cloud Workstations for Google Cloud, an application framework with an enterprise focus. We're now welcoming Guillermo Rauch, CEO at cloud PaaS firm Vercel, to explain how AI can factor into easy app development.

Rauch acknowledges how far AI development has come in just the past year: "With retrieval augmented generation, as well as tools and functions, we made applications more powerful and reliable."

But Rauch also notes that text-based models were limited and AI models still need to be more interactive and integrated. Vercel's answer to this issue is what it calls "generative UI".

In this application development example, Gemini connects with React components to bring the data together and streams information on-demand. This reduces the need for bulky client-side code and helps the app be more dynamic based on the user requirements. For example, Rauch shows us a flight selection within the app, with the user able to select their seat from a diagram that Gemini natively interprets to recognize the desired seat.

Production-grade generative AI

We're now moving from 'Build' to 'Run' on the pipeline for Gemini. Joining Seroter onstage to explain more is Kaslin Fields, developer advocate at Google Cloud and co-host of the Kubernetes Podcast.

In another demo, we're shown how Gemini's multimodal capabilities allow a user to send an image of their dining room to receive furniture recommendations. Going into technical detail, Fields breaks this process down into three parts: data injection, which combines prompts or media with retrieval-augmented generation (RAG); evaluation, where data is processed; and a connecting vector database, in this case AlloyDB.

AlloyDB has a direct connection to Vertex AI, so the vectors stored within – which can be representative of combined text and images – can be quickly compared and interpreted as queries. This is how images can be quickly interrogated for their context and visual information, such as in the example above.
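For readers unfamiliar with vector comparison, the core idea can be sketched in plain Python using cosine similarity, a common way to compare embeddings. This is an illustration of the general technique, not AlloyDB's or Vertex AI's API: the three-dimensional vectors and catalog names are invented, and real embeddings would come from an embedding model and have hundreds of dimensions.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


# Toy catalog of item embeddings (values are made up for the example).
CATALOG = {
    "oak dining table": [0.9, 0.1, 0.0],
    "velvet armchair": [0.2, 0.8, 0.1],
    "desk lamp": [0.0, 0.2, 0.9],
}


def nearest_items(query: list[float], catalog: dict, k: int = 2) -> list[str]:
    """Return the k catalog items whose vectors are most similar to the query."""
    ranked = sorted(
        catalog,
        key=lambda name: cosine_similarity(query, catalog[name]),
        reverse=True,
    )
    return ranked[:k]


if __name__ == "__main__":
    # Pretend this vector is the embedding of the user's dining room photo.
    room_photo_embedding = [0.8, 0.3, 0.0]
    print(nearest_items(room_photo_embedding, CATALOG))
```

A vector database performs this kind of ranking inside the database, typically with indexes that avoid comparing the query against every stored vector.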

Running apps better with Gemini

Seroter restates the claim that Gemini makes running apps better, assisting users across the platform and runtime stage.

Here to run us through these capabilities in more detail is Chen Goldberg, VP & GM cloud runtime at Google Cloud.

In a demo, we're shown how a user can put a description of their desired app architecture into Cloud Run. Once the components are generated, the user hits 'Deploy' for the application to be created across their platforms. Google Cloud has just released multi-region deployment for Cloud Run, configurable from within the platform.

Moving onto Google Kubernetes Engine (GKE), Goldberg says GKE "has you covered" if you're seeking flexibility and control. Google Cloud has announced new support for TPU optimization and can now tie Vertex AI to your cluster within GKE to unlock AI benefits.

Gemini Cloud Assist also has a role to play here, Goldberg adds. Businesses can use the tool to surface insights into their clusters, with the model containing contextual information on their configuration and regions. In this way, it can produce detailed stats on GKE clusters and also provide detailed recommendations for improving container management.

"Our AI assistant container management platform, with both GKE and Cloud Run offers you the best option to boost productivity in the cloud," Goldberg adds.

It's all about prompts

Deploying LLMs can be easier said than done, Condon notes. Prompts can make or break model usability and Google Cloud recognizes that some developers may struggle with this side of AI deployment more than others – here to unpack what the firm is doing to address this is Steve McGhee, reliability advocate at Google Cloud.

"You've heard the term prompt engineering, and we can iteratively use prompt engineering to improve the reliability of our apps," says McGhee, describing a feedback loop for improving prompts that builds on CI/CD principles (see image).

To make things easier for developers, Google Cloud has released Prompt Management and Prompt Evaluation within Vertex AI. Using natural language, developers can view the outputs from a model and manually indicate whether they are correct or not and label them as 'ground truths'. The system will then be able to compare outputs down the line and assign them a color-coded score for truthfulness.
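The general idea of scoring outputs against labeled ground truths can be sketched in Python as follows. This is not Vertex AI's API: the similarity measure (the standard library's `difflib.SequenceMatcher`), the color thresholds, and all names here are assumptions chosen for illustration, and a production evaluator would likely use a more semantic comparison.

```python
from difflib import SequenceMatcher


def similarity(output: str, truth: str) -> float:
    """Character-level similarity between 0.0 and 1.0, ignoring case."""
    return SequenceMatcher(None, output.lower(), truth.lower()).ratio()


def color_for(score: float) -> str:
    """Map a score to a traffic-light color (thresholds are arbitrary)."""
    if score >= 0.8:
        return "green"
    if score >= 0.5:
        return "yellow"
    return "red"


def evaluate(outputs: dict, ground_truths: dict) -> dict:
    """Score each model output against its human-labeled ground truth."""
    results = {}
    for prompt_id, output in outputs.items():
        score = similarity(output, ground_truths[prompt_id])
        results[prompt_id] = (round(score, 2), color_for(score))
    return results


if __name__ == "__main__":
    ground_truths = {"capital": "Paris", "greeting": "Hello there"}
    outputs = {"capital": "paris", "greeting": "zzz"}
    for prompt_id, (score, color) in evaluate(outputs, ground_truths).items():
        print(f"{prompt_id}: {score} ({color})")
```

Running a check like this on every prompt change is what makes the loop resemble CI/CD: a prompt edit that turns previously green outputs red can be caught before it ships.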

Improved AI observability

Deploying and running AI models in the cloud also requires a great deal of observability. It's not enough to know when things are going wrong – you have to know how and whether they're likely to go wrong again.

We're now welcoming Charity Majors, co-founder & CTO at observability platform Honeycomb.

When generative AI doesn't work as intended, Majors notes, users often don't know where to begin to look. As the root cause is a mystery, observability can become difficult to achieve.

To figure out what happened, Majors shows us a demo in which queries are working 95.6% of the time. Within Honeycomb, users can open a granular view called 'BubbleUp' to view anomalies across important data.

We're nearing the end of the keynote now, with just one guest left to welcome to the stage: Philipp Schmid, engineering manager at Hugging Face.

One of Hugging Face's most popular models is Gemma 7B and Schmid says models such as this can now be launched directly from Google Cloud or within Hugging Face itself.

To round out the event, Condon and Seroter have said the video from the keynote will be run through Gemini to produce a summary.

We're told this meant moving the 27,000 token long video from the casino floor to the cloud, to be processed. It's a success, with Gemini able to provide detailed product descriptions and answers based on the keynote.

And with that, it's a wrap for the second keynote at Google Cloud Next 2024. Keep an eye on ITPro for the latest news, features, and multimedia from the conference.