AI-generated code is fast becoming the biggest enterprise security risk as teams struggle with the ‘illusion of correctness’

Security teams are scrambling to catch AI-generated flaws that appear correct before disaster strikes

AI has overtaken all other factors in reshaping security priorities, with teams now forced to deal with AI-generated code that appears correct, professional, and production-ready – but that quietly introduces security risks.

That’s according to a new survey from Black Duck, which recorded a 12% rise last year in teams actively risk-ranking where LLM-generated code can and can’t be deployed.

Meanwhile, there was a 10% increase in custom security rules designed specifically to catch AI-generated flaws.
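
To give a sense of what a custom rule of this kind could look like, here is a minimal Python sketch. It is an illustration only, not taken from Black Duck's research: the function name `find_risky_calls` and the choice of patterns (calls to `eval()` or `exec()`, and any call passing `shell=True`) are assumptions picked because they are well-known ways for plausible-looking code to hide an injection risk.

```python
import ast

# Hypothetical rule set: builtins that execute arbitrary strings as code.
RISKY_BUILTINS = {"eval", "exec"}


def find_risky_calls(source: str) -> list:
    """Return sorted (line number, message) pairs for risky calls in source."""
    findings = []
    # ast.parse only builds a syntax tree; the scanned code is never executed.
    for node in ast.walk(ast.parse(source)):
        if not isinstance(node, ast.Call):
            continue
        # Direct calls such as eval(...) or exec(...).
        if isinstance(node.func, ast.Name) and node.func.id in RISKY_BUILTINS:
            findings.append((node.lineno, f"call to {node.func.id}()"))
        # Any call that passes the literal keyword argument shell=True.
        for keyword in node.keywords:
            if (
                keyword.arg == "shell"
                and isinstance(keyword.value, ast.Constant)
                and keyword.value.value is True
            ):
                findings.append((node.lineno, "call with shell=True"))
    return sorted(findings)
```

A check like this would typically run in code review or CI alongside existing scanners. It catches only the exact patterns listed, so it complements human review rather than replacing it.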

“The real risk of AI-generated code isn’t obvious breakage; it’s the illusion of correctness. Code that looks polished can still conceal serious security flaws, and developers are increasingly trusting it,” said Black Duck CEO Jason Schmitt.

“We’re witnessing a dangerous paradox: developers increasingly trust AI-produced code that lacks the security instincts of seasoned experts.”

Regulation is driving most security investment, researchers found, with the use of Software Bills of Materials (SBOMs) up nearly 30% and automated infrastructure verification surging by more than 50%.

Similarly, respondents reported an increase of more than 40% in efforts to streamline responsible vulnerability disclosure, driven by the EU Cyber Resilience Act (CRA) and evolving US government demands.

“The surge in SBOM adoption reported in BSIMM16 is so critical, since it gives organizations the transparency to understand exactly what’s in their software – whether written by humans, AI, or third parties – and the visibility to respond quickly when vulnerabilities surface,” said Schmitt.

“As regulatory mandates expand, SBOMs are moving beyond compliance – they’re becoming foundational infrastructure for managing risk in an AI-driven development landscape.”
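
The "respond quickly" point can be made concrete with a short Python sketch. It assumes an SBOM in CycloneDX-style JSON, where a top-level `components` array lists each component's `name` and `version`. The function `affected_components`, the `advisories` lookup table, and the example package and advisory names are all hypothetical, and a real workflow would query a vulnerability database rather than a hand-written dictionary.

```python
import json


def affected_components(sbom_json: str, advisories: dict) -> list:
    """List (name, version, advisory id) for SBOM components with an advisory.

    `advisories` is a hypothetical lookup keyed by (name, version).
    """
    sbom = json.loads(sbom_json)
    hits = []
    for component in sbom.get("components", []):
        key = (component.get("name"), component.get("version"))
        if key in advisories:
            hits.append((key[0], key[1], advisories[key]))
    return hits


# Illustrative data only: neither the packages nor the advisory are real.
EXAMPLE_SBOM = json.dumps({
    "bomFormat": "CycloneDX",
    "components": [
        {"type": "library", "name": "example-parser", "version": "1.2.0"},
        {"type": "library", "name": "example-http", "version": "4.0.1"},
    ],
})
EXAMPLE_ADVISORIES = {("example-http", "4.0.1"): "EXAMPLE-ADVISORY-0001"}

if __name__ == "__main__":
    for name, version, advisory in affected_components(
        EXAMPLE_SBOM, EXAMPLE_ADVISORIES
    ):
        print(f"{name} {version} is affected by {advisory}")
```

Because the inventory already exists, answering "are we exposed?" becomes a lookup rather than an audit, regardless of whether a human, an AI tool, or a third party introduced the dependency.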

A sharp focus on first- and third-party code

Organizations are rapidly standardizing tech stacks, the survey noted. Black Duck found teams are now expanding visibility beyond first-party code as third-party and AI-assisted development explodes.

Security training is also being reinvented, researchers revealed, with multi-day courses now being replaced by just-in-time, on-demand guidance embedded directly into developer workflows in bite-sized chunks.

The use of open collaboration channels increased 29% year over year, giving teams instant access to security guidance on the fly.

AI-generated code is in vogue

Recent research from Aikido found that AI coding tools now write 24% of production code globally as enterprises across Europe and the US ramp up adoption.

The trend has been gaining momentum for several years now, with a host of major tech providers such as Google and Microsoft revealing that significant portions of their source code are now written using the technology.

However, while this is speeding up production, research shows it also carries huge risks.

Aikido found that AI-generated code is now the cause of one in five breaches, with 69% of security leaders, engineers, and developers on both sides of the Atlantic having found serious vulnerabilities in it.

These risk factors are further exacerbated by the fact that many developers are placing too much faith in the technology when coding. A separate survey from Sonar found nearly half of developers fail to check AI-generated code, placing their organizations at huge risk.

Emma Woollacott is a freelance journalist writing for publications including the BBC, Private Eye, Forbes, Raconteur and specialist technology titles.

-

Sundar Pichai hails AI gains as Google Cloud, Gemini growth surges

Sundar Pichai hails AI gains as Google Cloud, Gemini growth surgesNews The company’s cloud unit beat Wall Street expectations as it continues to play a key role in driving AI adoption

-

Why Anthropic sent software stocks into freefall

Why Anthropic sent software stocks into freefallNews Anthropic's sector-specific plugins for Claude Cowork have investors worried about disruption to software and services companies

-

‘Not a shortcut to competence’: Anthropic researchers say AI tools are improving developer productivity – but the technology could ‘inhibit skills formation’

‘Not a shortcut to competence’: Anthropic researchers say AI tools are improving developer productivity – but the technology could ‘inhibit skills formation’News A research paper from Anthropic suggests we need to be careful deploying AI to avoid losing critical skills

-

The open source ecosystem is booming thanks to AI, but hackers are taking advantage

The open source ecosystem is booming thanks to AI, but hackers are taking advantageNews Analysis by Sonatype found that AI is giving attackers new opportunities to target victims

-

A torrent of AI slop submissions forced an open source project to scrap its bug bounty program – maintainer claims they’re removing the “incentive for people to submit crap”

A torrent of AI slop submissions forced an open source project to scrap its bug bounty program – maintainer claims they’re removing the “incentive for people to submit crap”News Curl isn’t the only open source project inundated with AI slop submissions

-

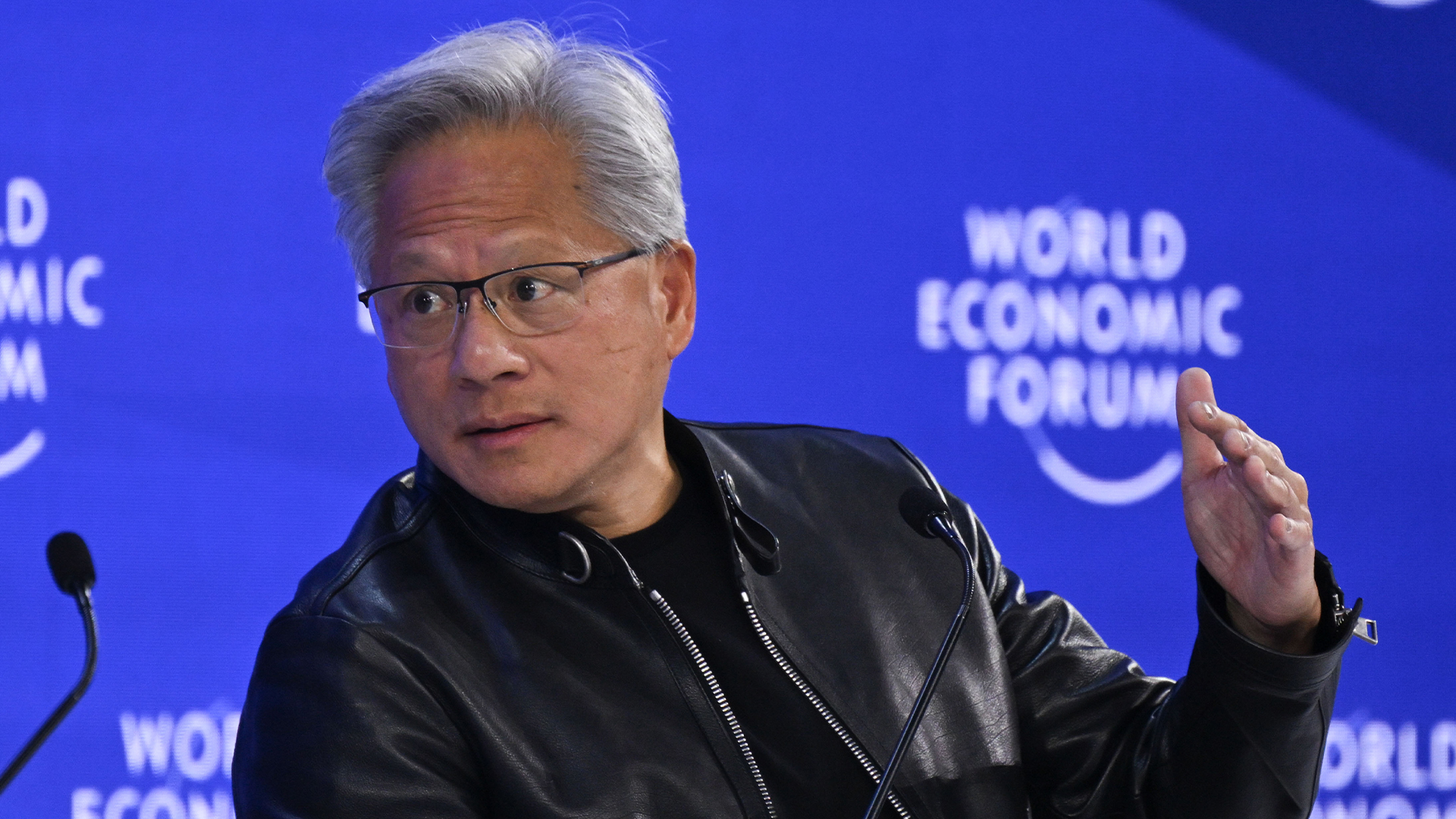

‘This is a platform shift’: Jensen Huang says the traditional computing stack will never look the same because of AI – ChatGPT and Claude will forge a new generation of applications

‘This is a platform shift’: Jensen Huang says the traditional computing stack will never look the same because of AI – ChatGPT and Claude will forge a new generation of applicationsNews The Nvidia chief says new applications will be built “on top of ChatGPT” as the technology redefines software

-

UK government launches industry 'ambassadors' scheme to champion software security improvements

UK government launches industry 'ambassadors' scheme to champion software security improvementsNews The Software Security Ambassadors scheme aims to boost software supply chains by helping organizations implement the Software Security Code of Practice.

-

So much for ‘trust but verify’: Nearly half of software developers don’t check AI-generated code – and 38% say it's because it takes longer than reviewing code produced by colleagues

So much for ‘trust but verify’: Nearly half of software developers don’t check AI-generated code – and 38% say it's because it takes longer than reviewing code produced by colleaguesNews A concerning number of developers are failing to check AI-generated code, exposing enterprises to huge security threats

-

Microsoft is shaking up GitHub in preparation for a battle with AI coding rivals

Microsoft is shaking up GitHub in preparation for a battle with AI coding rivalsNews The tech giant is bracing itself for a looming battle in the AI coding space

-

AI could truly transform software development in 2026 – but developer teams still face big challenges with adoption, security, and productivity

AI could truly transform software development in 2026 – but developer teams still face big challenges with adoption, security, and productivityAnalysis AI adoption is expected to continue transforming software development processes, but there are big challenges ahead