So much for ‘trust but verify’: Nearly half of software developers don’t check AI-generated code – and 38% say it's because it takes longer than reviewing code produced by colleagues

A concerning number of developers are failing to check AI-generated code, exposing enterprises to huge security threats

A majority of developers are using AI to create code, but even though most don't trust the output, many aren't taking steps to verify it.

That's according to a survey from code review company Sonar, which found that 72% of developers use AI tools every day, with the technology helping to write up to 42% of committed code.

Notably, 96% of developers surveyed said they don't fully trust that AI-generated code is functionally correct – but fewer than half say they review it before committing.

Sonar said this leads to "verification debt", a term used by AWS CTO Werner Vogels while discussing the use of AI in software development at the company's annual re:Invent conference in December.

Tariq Shaukat, CEO of Sonar, said the research highlights a "fundamental shift" in software development, whereby value is no longer defined simply by the speed at which code can be written, but by the "confidence in deploying it".

"While AI has made code generation nearly effortless, it has created a critical trust gap between output and deployment," he said. "To realize the full potential of AI, we must close this gap."

Why devs are slacking on AI-generated code

There may be a reason for this failure to check AI-generated code, the study noted: for many developers, reviewing it takes more effort than reviewing human-written code.

"While AI is supposed to save time, developers are spending a significant portion of that saved time on review," the Sonar report said, adding: "In fact, 38% of developers say reviewing AI-generated code requires more effort than reviewing code written by their human colleagues."

One reason is that AI often produces code that looks correct but isn't reliable, a statement 61% of respondents agreed with.

"That's a critical finding — it means AI code can introduce subtle bugs that are harder to spot than typical human errors," the report noted. "The same percentage (61%) agree that it 'requires a lot of effort to get good code from AI' through prompting and fixing."

How developers are using AI

The survey found developers most commonly used AI for proofs of concept and prototypes (88%), followed by production software for internal, non-critical workflows (83%), customer-facing applications (73%), and business-critical internal software (58%).

Those surveyed said AI was most effective at writing documentation, explaining existing code, and vibe coding. Just 55% of those polled said such tools were effective at assisting with the development of new code, even though that task had the highest adoption rate, at 90%.

"Developers have embraced AI as a daily partner, but they're finding it's a much better 'explainer' and 'prototyper' than it is a 'maintainer' or 'refactorer' — at least for now," the report states.

"It's highly effective at generating new things (docs, tests, new projects) but struggles more with the complex, nuanced work of modifying and optimizing existing, mission-critical code."

Too much trust in AI tools

The Sonar report is the latest in a string of studies highlighting the benefits of AI for developers, alongside a persistent lack of trust in the tools' output.

Research also shows that best practices have slipped for many developers since these tools flooded into the profession. In a survey from Cloudsmith last year, for example, 42% of developers said their codebases are now largely AI-generated.

Respondents specifically highlighted productivity and efficiency gains from using the technology, yet only 67% said they actively review code before deployment.

Cloudsmith warned this lax approach to code testing and reviews could have dire consequences for enterprises, leaving them open to an array of security risks and vulnerabilities.

Freelance journalist Nicole Kobie started writing for ITPro in 2007 and has bylines in New Scientist, Wired, PC Pro and many more.

Nicole is the author of a book about the history of technology, The Long History of the Future.

-

Mistral CEO Arthur Mensch thinks 50% of SaaS solutions could be supplanted by AI

Mistral CEO Arthur Mensch thinks 50% of SaaS solutions could be supplanted by AINews Mensch’s comments come amidst rising concerns about the impact of AI on traditional software

-

Westcon-Comstor and UiPath forge closer ties in EU growth drive

Westcon-Comstor and UiPath forge closer ties in EU growth driveNews The duo have announced a new pan-European distribution deal to drive services-led AI automation growth

-

‘AI is making us able to develop software at the speed of light’: Mistral CEO Arthur Mensch thinks 50% of SaaS solutions could be supplanted by AI

‘AI is making us able to develop software at the speed of light’: Mistral CEO Arthur Mensch thinks 50% of SaaS solutions could be supplanted by AINews Mensch’s comments come amidst rising concerns about the impact of AI on traditional software

-

Automated code reviews are coming to Google's Gemini CLI Conductor extension – here's what users need to know

Automated code reviews are coming to Google's Gemini CLI Conductor extension – here's what users need to knowNews A new feature in the Gemini CLI extension looks to improve code quality through verification

-

Claude Code creator Boris Cherny says software engineers are 'more important than ever’ as AI transforms the profession – but Anthropic CEO Dario Amodei still thinks full automation is coming

Claude Code creator Boris Cherny says software engineers are 'more important than ever’ as AI transforms the profession – but Anthropic CEO Dario Amodei still thinks full automation is comingNews There’s still plenty of room for software engineers in the age of AI, at least for now

-

Anthropic Labs chief Mike Krieger claims Claude is essentially writing itself – and it validates a bold prediction by CEO Dario Amodei

Anthropic Labs chief Mike Krieger claims Claude is essentially writing itself – and it validates a bold prediction by CEO Dario AmodeiNews Internal teams at Anthropic are supercharging production and shoring up code security with Claude, claims executive

-

AI-generated code is fast becoming the biggest enterprise security risk as teams struggle with the ‘illusion of correctness’

AI-generated code is fast becoming the biggest enterprise security risk as teams struggle with the ‘illusion of correctness’News Security teams are scrambling to catch AI-generated flaws that appear correct before disaster strikes

-

‘Not a shortcut to competence’: Anthropic researchers say AI tools are improving developer productivity – but the technology could ‘inhibit skills formation’

‘Not a shortcut to competence’: Anthropic researchers say AI tools are improving developer productivity – but the technology could ‘inhibit skills formation’News A research paper from Anthropic suggests we need to be careful deploying AI to avoid losing critical skills

-

A torrent of AI slop submissions forced an open source project to scrap its bug bounty program – maintainer claims they’re removing the “incentive for people to submit crap”

A torrent of AI slop submissions forced an open source project to scrap its bug bounty program – maintainer claims they’re removing the “incentive for people to submit crap”News Curl isn’t the only open source project inundated with AI slop submissions

-

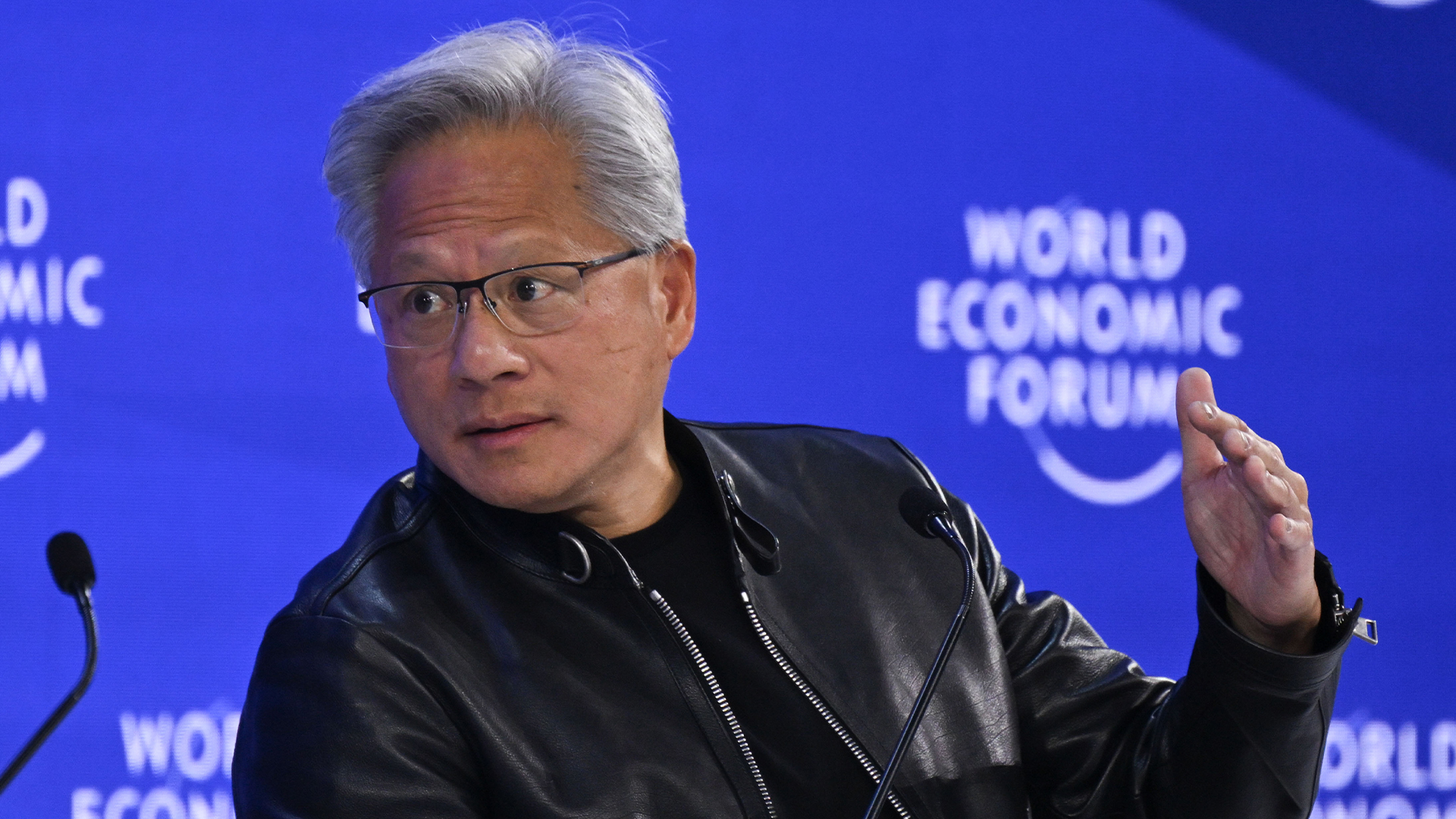

‘This is a platform shift’: Jensen Huang says the traditional computing stack will never look the same because of AI – ChatGPT and Claude will forge a new generation of applications

‘This is a platform shift’: Jensen Huang says the traditional computing stack will never look the same because of AI – ChatGPT and Claude will forge a new generation of applicationsNews The Nvidia chief says new applications will be built “on top of ChatGPT” as the technology redefines software