A torrent of AI slop submissions forced an open source project to scrap its bug bounty program – maintainer claims they’re removing the “incentive for people to submit crap”

Curl isn’t the only open source project inundated with AI slop submissions

A bug bounty program run by a popular open source data transfer tool has been shut down amid an onslaught of AI-generated ‘slop’ contributions.

Daniel Stenberg, lead maintainer of Curl, a command line interface (CLI) tool which allows developers to transfer data, confirmed the decision to shut down the bug bounty scheme in a GitHub commit last week.

In a subsequent email outlining the move, Stenberg revealed that seven bug bounty submissions were recorded within a sixteen-hour period, and that 20 had been logged since the beginning of the year.

Although some of these submissions identified genuine bugs, not a single one described an actual vulnerability.

“Some of them were true and proper bugs, and taking care of this lot took a good while,” he said. “Eventually we concluded that none of them identified a vulnerability and we now count twenty submissions done already in 2026.”

Stenberg added that the current volume of submissions is placing a “high load” on the security team, and the decision to shut down the program aims to “reduce the noise” and number of AI-generated reports.

“The main goal with shutting down the bounty is to remove the incentive for people to submit crap and non-well researched reports to us,” he wrote.


“We believe, hope really, that we still will get actual security vulnerabilities reported to us even if we do not pay for them. The future will tell.”

Curl maintainer names and shames bug hunter

Stenberg revealed that, after publicly ridiculing the author of one of the AI-generated vulnerability reports on the social media site Mastodon, he had a “lengthy discussion” with them.

“It was useful for me to make me remember that oftentimes these people are just ordinary mislead humans and they might actually learn from this and perhaps even change,” he said.

The individual in question, however, insists their professional life has been “ruined”.

Even so, Stenberg believes naming and shaming remains one of the better ways to tackle this growing issue.

“This is a balance of course, but I also continue to believe that exposing, discussing, and ridiculing the ones who waste our time is one of the better ways to get the message through: you should NEVER report a bug or vulnerability unless you understand it - and can reproduce it.”

AI slop reports are a major headache

This isn’t Stenberg’s first run-in with the problem. In January 2024, he voiced concerns over the growing volume of poor-quality reports. A key point at the time was that these reports appeared legitimate, so maintainers wasted time dissecting slop.

"When reports are made to look better and to appear to have a point, it takes a longer time for us to research and eventually discard it. Every security report has to have a human spend time to look at it and assess what it means," he said.

Curl isn’t alone in contending with AI-generated ‘slop’ security reports, either. As ITPro reported in December 2024, another open source maintainer lamented the strain this places on open source developers and maintainers.

Seth Larson, who triages security reports for a handful of open source projects, revealed that these projects were facing an “uptick in extremely low-quality, spammy, and LLM-hallucinated security reports”.

This torrent of AI-generated slop was having a huge impact on open source maintainers, wasting time and effort, and leading to higher levels of burnout. As with Stenberg, Larson noted that these seemingly legitimate reports were becoming a major headache.

“The issue is in the age of LLMs, these reports appear at first glance to be potentially legitimate and thus require time to refute,” he wrote in a blog post.


Ross Kelly is ITPro's News & Analysis Editor, responsible for leading the brand's news output and in-depth reporting on the latest stories from across the business technology landscape. Ross was previously a Staff Writer, during which time he developed a keen interest in cyber security, business leadership, and emerging technologies.

He graduated from Edinburgh Napier University in 2016 with a BA (Hons) in Journalism, and joined ITPro in 2022 after four years working in technology conference research.

For news pitches, you can contact Ross at ross.kelly@futurenet.com, or on Twitter and LinkedIn.

-

90% of companies are woefully unprepared for quantum security threats

90% of companies are woefully unprepared for quantum security threatsNews Quantum security threats are coming, but a Bain & Company survey shows systems aren't yet in place to prevent widespread chaos

-

Trade groups hit out at EU's proposed Digital Networks Act

Trade groups hit out at EU's proposed Digital Networks ActNews The European Commission has set out its proposals to boost the EU's connectivity, to some criticism from industry associations

-

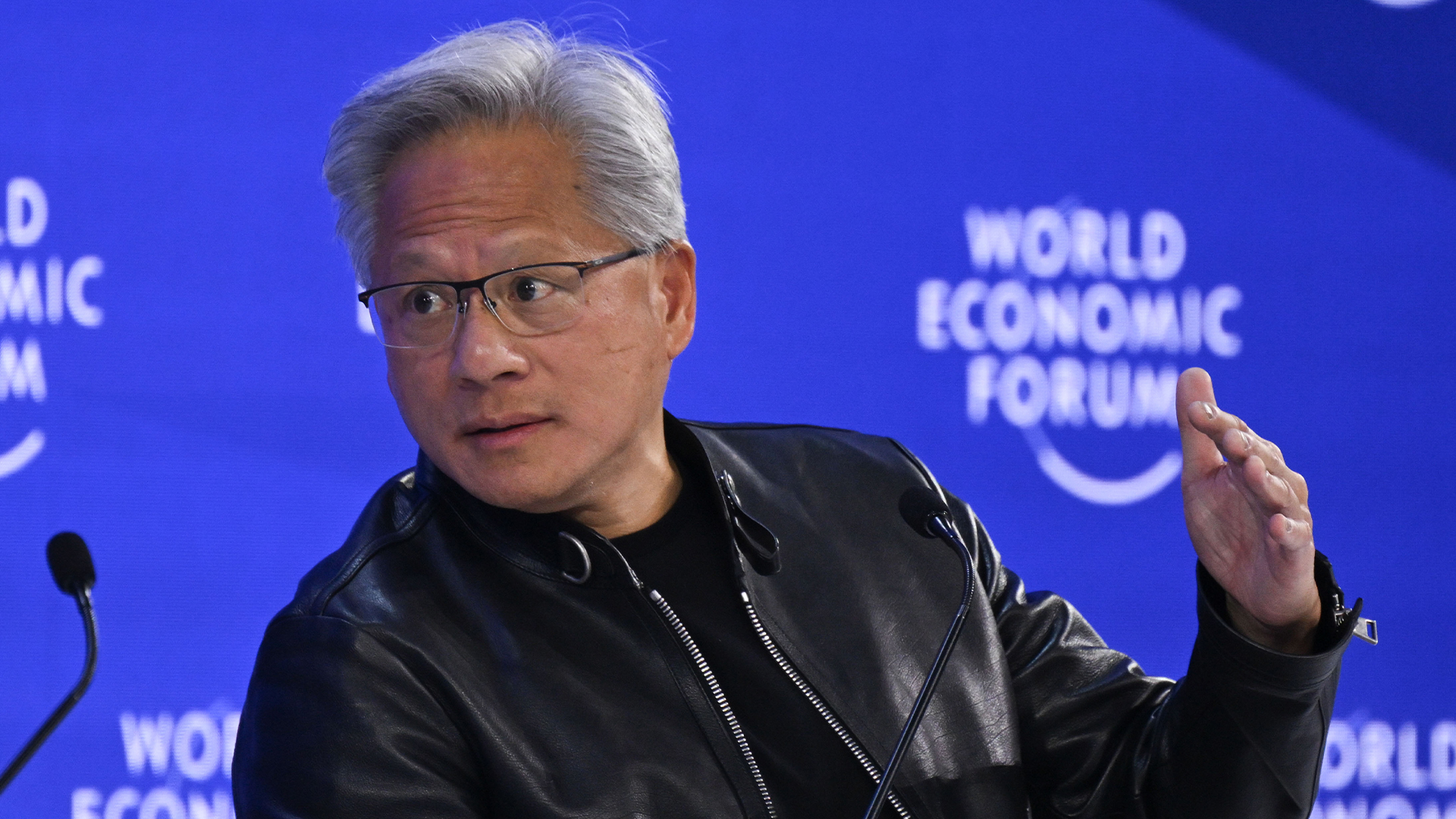

‘This is a platform shift’: Jensen Huang says the traditional computing stack will never look the same because of AI – ChatGPT and Claude will forge a new generation of applications

‘This is a platform shift’: Jensen Huang says the traditional computing stack will never look the same because of AI – ChatGPT and Claude will forge a new generation of applicationsNews The Nvidia chief says new applications will be built “on top of ChatGPT” as the technology redefines software

-

So much for ‘trust but verify’: Nearly half of software developers don’t check AI-generated code – and 38% say it's because it takes longer than reviewing code produced by colleagues

So much for ‘trust but verify’: Nearly half of software developers don’t check AI-generated code – and 38% say it's because it takes longer than reviewing code produced by colleaguesNews A concerning number of developers are failing to check AI-generated code, exposing enterprises to huge security threats

-

AI could truly transform software development in 2026 – but developer teams still face big challenges with adoption, security, and productivity

AI could truly transform software development in 2026 – but developer teams still face big challenges with adoption, security, and productivityAnalysis AI adoption is expected to continue transforming software development processes, but there are big challenges ahead

-

AI is creating more software flaws – and they're getting worse

AI is creating more software flaws – and they're getting worseNews A CodeRabbit study compared pull requests with AI and without, finding AI is fast but highly error prone

-

AI doesn’t mean your developers are obsolete — if anything you’re probably going to need bigger teams

AI doesn’t mean your developers are obsolete — if anything you’re probably going to need bigger teamsAnalysis Software developers may be forgiven for worrying about their jobs in 2025, but the end result of AI adoption will probably be larger teams, not an onslaught of job cuts.

-

Anthropic says MCP will stay 'open, neutral, and community-driven' after donating project to Linux Foundation

Anthropic says MCP will stay 'open, neutral, and community-driven' after donating project to Linux FoundationNews The AAIF aims to standardize agentic AI development and create an open ecosystem for developers

-

Atlassian just launched a new ChatGPT connector feature for Jira and Confluence — here's what users can expect

Atlassian just launched a new ChatGPT connector feature for Jira and Confluence — here's what users can expectNews The company says the new features will make it easier to summarize updates, surface insights, and act on information in Jira and Confluence

-

AWS says ‘frontier agents’ are here – and they’re going to transform software development

AWS says ‘frontier agents’ are here – and they’re going to transform software developmentNews A new class of AI agents promises days of autonomous work and added safety checks