AI 'slop security reports' are driving open source maintainers mad

Low-quality, LLM-generated reports should be treated as if they are malicious, according to one expert

Open source project maintainers are drowning in a sea of AI-generated 'slop security reports', according to security report triage worker Seth Larson.

Larson said he’s witnessed an increase in poor-quality reports that are wasting maintainers' time and contributing to burnout.

"Recently I've noticed an uptick in extremely low-quality, spammy, and LLM-hallucinated security reports to open source projects. The issue is in the age of LLMs, these reports appear at first-glance to be potentially legitimate and thus require time to refute," he wrote in a blog post.

"This issue is tough to tackle because it's distributed across thousands of open source projects, and due to the security-sensitive nature of reports open source maintainers are discouraged from sharing their experiences or asking for help."

Larson wants platforms to add systems that prevent the automated or abusive creation of security reports, and to allow reports to be made public without publishing a vulnerability record, essentially letting maintainers name and shame offenders.

Platforms should also remove the public attribution of reporters who abuse the system, take away any positive incentive for reporting security issues, and limit the ability of newly registered users to report security issues, he said.

Meanwhile, Larson called on reporters to stop using LLM systems for detecting vulnerabilities, and to only submit reports that have been reviewed by a human being. Don't spam projects, he said, and show up with patches, not just reports.

As for maintainers, he said low-quality reports should be treated as if they are malicious.

"Put the same amount of effort into responding as the reporter put into submitting a sloppy report: ie, near zero," he suggested.

"If you receive a report that you suspect is AI or LLM generated, reply with a short response and close the report: 'I suspect this report is AI-generated/incorrect/spam. Please respond with more justification for this report'."

Larson isn't the only maintainer to raise the issue of low-quality AI-generated security reports.

Earlier this month, Daniel Stenberg complained that, while the Curl project had always received a certain number of poor reports, AI was now making them look more plausible - and thus taking more time to check out.

"When reports are made to look better and to appear to have a point, it takes a longer time for us to research and eventually discard it. Every security report has to have a human spend time to look at it and assess what it means," he said.

"The better the crap, the longer time and the more energy we have to spend on the report until we close it. A crap report does not help the project at all. It instead takes away developer time and energy from something productive."

Emma Woollacott is a freelance journalist writing for publications including the BBC, Private Eye, Forbes, Raconteur and specialist technology titles.

-

AWS CEO Matt Garman isn’t convinced AI spells the end of the software industry

AWS CEO Matt Garman isn’t convinced AI spells the end of the software industryNews Software stocks have taken a beating in recent weeks, but AWS CEO Matt Garman has joined Nvidia's Jensen Huang and Databricks CEO Ali Ghodsi in pouring cold water on the AI-fueled hysteria.

-

Deepfake business risks are growing

Deepfake business risks are growingIn-depth As the risk of being targeted by deepfakes increases, what should businesses be looking out for?

-

The open source ecosystem is booming thanks to AI, but hackers are taking advantage

The open source ecosystem is booming thanks to AI, but hackers are taking advantageNews Analysis by Sonatype found that AI is giving attackers new opportunities to target victims

-

A torrent of AI slop submissions forced an open source project to scrap its bug bounty program – maintainer claims they’re removing the “incentive for people to submit crap”

A torrent of AI slop submissions forced an open source project to scrap its bug bounty program – maintainer claims they’re removing the “incentive for people to submit crap”News Curl isn’t the only open source project inundated with AI slop submissions

-

Anthropic says MCP will stay 'open, neutral, and community-driven' after donating project to Linux Foundation

Anthropic says MCP will stay 'open, neutral, and community-driven' after donating project to Linux FoundationNews The AAIF aims to standardize agentic AI development and create an open ecosystem for developers

-

Open source AI models are cheaper than closed source competitors and perform on par, so why aren’t enterprises flocking to them?

Open source AI models are cheaper than closed source competitors and perform on par, so why aren’t enterprises flocking to them?Analysis Open source AI models often perform on-par with closed source options and could save enterprises billions in cost savings, new research suggests, yet uptake remains limited.

-

AI-generated code is in vogue: Developers are now packing codebases with automated code – but they’re overlooking security and leaving enterprises open to huge risks

AI-generated code is in vogue: Developers are now packing codebases with automated code – but they’re overlooking security and leaving enterprises open to huge risksNews While AI-generated code is helping to streamline operations for developer teams, many are overlooking crucial security considerations.

-

Redis unveils new tools for developers working on AI applications

Redis unveils new tools for developers working on AI applicationsNews Redis has announced new tools aimed at making it easier for AI developers to build applications and optimize large language model (LLM) outputs.

-

AI was a harbinger of doom for low-code solutions, but peaceful coexistence is possible – developers still love the time savings and simplicity despite the allure of popular AI coding tools

AI was a harbinger of doom for low-code solutions, but peaceful coexistence is possible – developers still love the time savings and simplicity despite the allure of popular AI coding toolsNews The impact of AI coding tools on the low-code market hasn't been quite as disastrous as predicted

-

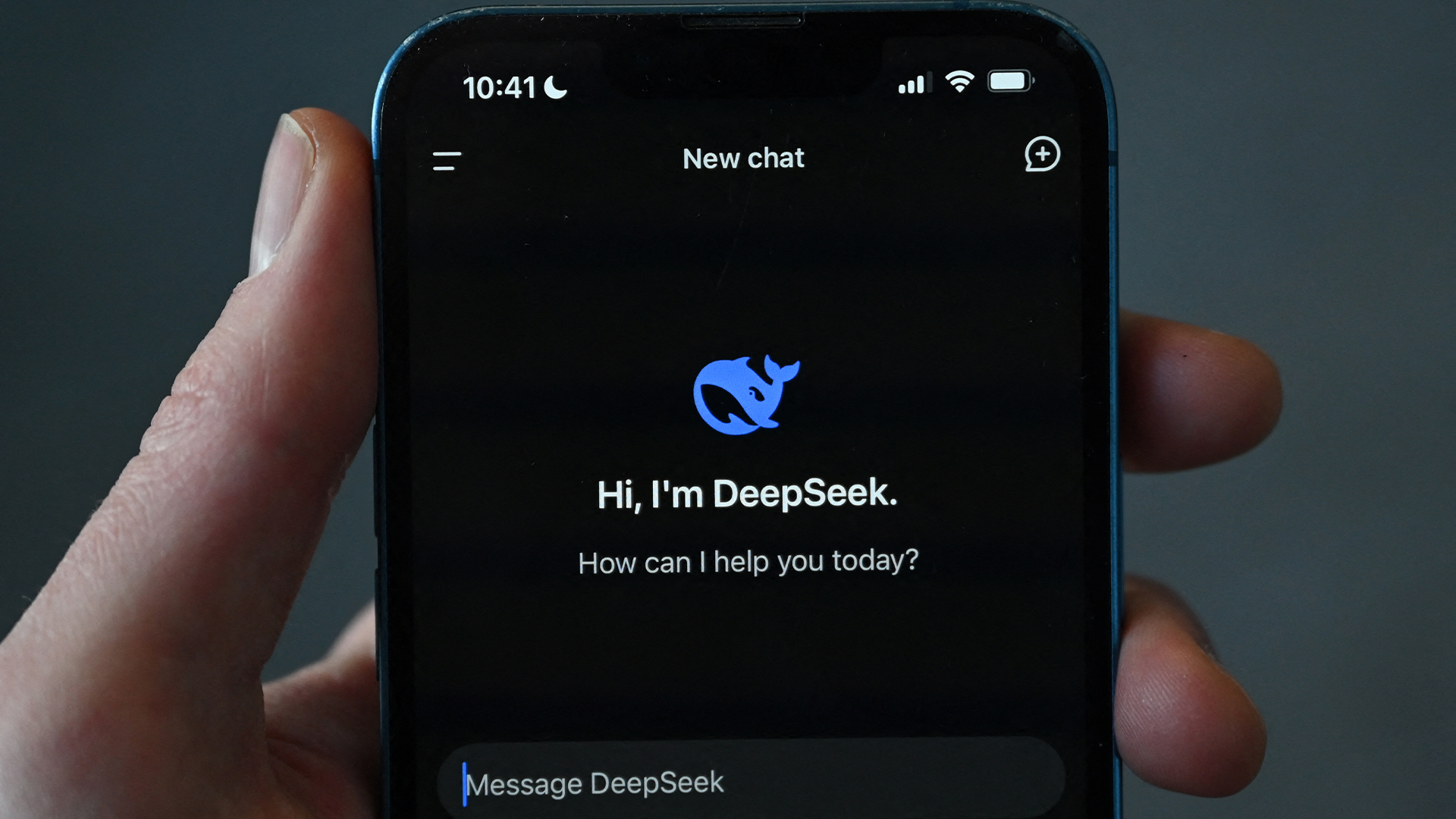

‘Awesome for the community’: DeepSeek open sourced its code repositories, and experts think it could give competitors a scare

‘Awesome for the community’: DeepSeek open sourced its code repositories, and experts think it could give competitors a scareNews Challenger AI startup DeepSeek has open-sourced some of its code repositories in a move that experts told ITPro puts the firm ahead of the competition on model transparency.