‘Awesome for the community’: DeepSeek open-sourced its code repositories, and experts think it could give competitors a scare

The Chinese AI firm has gone one further than its competitors, though there are limits to what it’s revealed

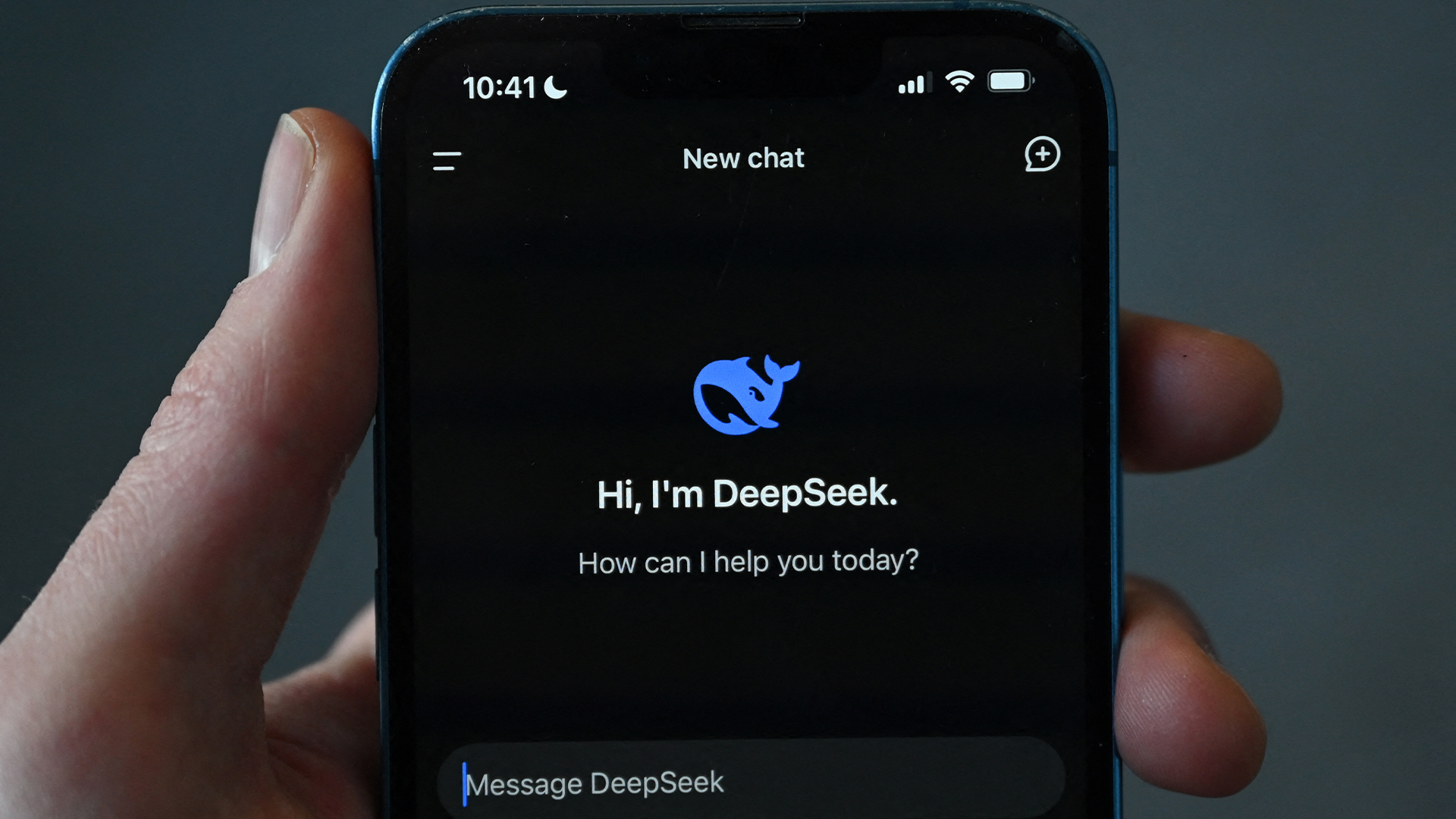

Challenger AI startup DeepSeek has open-sourced some of its code repositories in a move that experts told ITPro puts the firm ahead of the competition on model transparency.

In a post to X late last month, DeepSeek said it would be open-sourcing five of its code repositories in a bid to share what it called its “small but sincere progress with full transparency.”

“These humble building blocks in our online service have been documented, deployed, and battle-tested in production,” DeepSeek wrote.

“As part of the open-source community, we believe that every line shared becomes collective momentum that accelerates the journey,” the firm added.

DeepSeek caused ripples of panic earlier this year when the sudden release of its R1 model raised questions about the value of previously unchallenged US competitors. DeepSeek’s models are competitive with those from OpenAI despite costing only a fraction as much to build.

Its openness also stood in stark contrast to some large proprietary US models, industry analysts noted at the time. Now, DeepSeek has gone even further by promising to open-source some of the code behind its models. Its closest competition in this regard is the likes of Meta’s Llama, which has only open-sourced the weights of its models.

“To be clear, Llama has open weights, not open code: you can't see the training code or the actual training datasets. DeepSeek has gone a step further by open-sourcing a lot of the code they use, which is awesome for the community,” Alistair Pullen, co-founder and CEO of Cosine, told ITPro.

“I think DeepSeek can probably feel comfortable giving their competitors a scare by doing stuff others won't do; it does diminish their edge, but they're not wholly a model company,” Pullen added.

How open is DeepSeek?

DeepSeek is out ahead when it comes to open source AI, though that doesn’t mean it’s fully open in the traditional sense of the term. The firm hasn’t open-sourced the entirety of its code, nor key aspects of its model development such as training datasets.

The firm could gain an edge if it decides to share more of its code, according to Peter Schneider, senior product manager at Qt Group.

Code transparency is a major sticking point where security is concerned, and greater transparency could drive more community engagement, Schneider told ITPro.

“If they wanted to go the extra mile differentiating themselves, releasing their full training data and methodologies would certainly set a new standard for transparency in the AI race,” Schneider added.

Industry positive about open source move

Experts have been largely positive about DeepSeek’s decision, with Pullen saying the move gives users a greater level of control and access. He pointed to the ‘reinforcement learning algorithm’ that DeepSeek released, which is far less memory-intensive than other approaches.

“Open-source AI models appeal to users because they offer greater flexibility, fine-tuning capabilities, and fewer vendor restrictions. But beyond that, the real advantage comes from the collective intelligence of the global open-source community,” Dirk Alshuth, cloud evangelist at emma, told ITPro.

“The number of contributors can grow to thousands, which ultimately leads to more robust models, innovative use cases, and applications built on top,” Alshuth said.

Continued transparency on this front will boost community engagement and give DeepSeek a unique selling point when compared to its largely closed-source competitors, Alshuth added.

“DeepSeek’s decision to share some of its AI model code is a welcome step toward greater openness in AI development,” Schneider said.

Open source AI is a tough nut to crack

DeepSeek has pushed the definition of open source AI further, though this is just the latest chapter in an ongoing debate about how to define open source in the AI arena, where the technology is so fundamentally different from what’s gone before.

Speaking to ITPro at the time of Llama 3’s release in 2024, Open UK CEO Amanda Brock said that current conversations around open source AI are stretching historic definitions of open source to their limits.

While some elements of an AI application may be released under a typical open source license, Brock said, others may not lend themselves as easily to this definition.

Gradients, or “shades of openness,” could be the solution, Brock added, whereby different elements of an AI application are assigned different licenses.

George Fitzmaurice is a former Staff Writer at ITPro and ChannelPro, with a particular interest in AI regulation, data legislation, and market development. After graduating from the University of Oxford with a degree in English Language and Literature, he undertook an internship at the New Statesman before starting at ITPro. Outside of the office, George is both an aspiring musician and an avid reader.

-

What is Microsoft Maia?

What is Microsoft Maia?Explainer Microsoft's in-house chip is planned to a core aspect of Microsoft Copilot and future Azure AI offerings

-

If Satya Nadella wants us to take AI seriously, let’s forget about mass adoption and start with a return on investment for those already using it

If Satya Nadella wants us to take AI seriously, let’s forget about mass adoption and start with a return on investment for those already using itOpinion If Satya Nadella wants us to take AI seriously, let's start with ROI for businesses

-

A torrent of AI slop submissions forced an open source project to scrap its bug bounty program – maintainer claims they’re removing the “incentive for people to submit crap”

A torrent of AI slop submissions forced an open source project to scrap its bug bounty program – maintainer claims they’re removing the “incentive for people to submit crap”News Curl isn’t the only open source project inundated with AI slop submissions

-

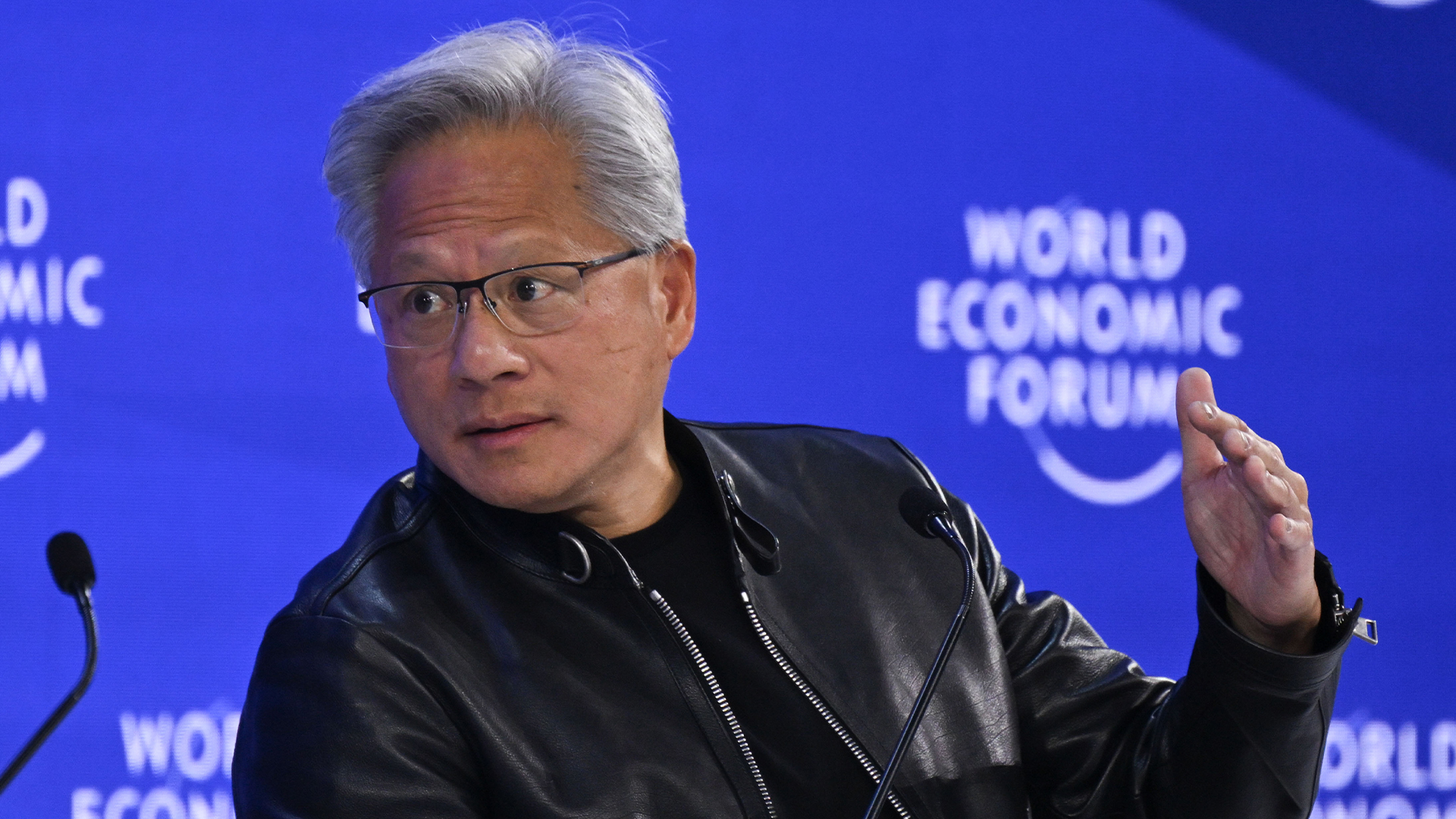

‘This is a platform shift’: Jensen Huang says the traditional computing stack will never look the same because of AI – ChatGPT and Claude will forge a new generation of applications

‘This is a platform shift’: Jensen Huang says the traditional computing stack will never look the same because of AI – ChatGPT and Claude will forge a new generation of applicationsNews The Nvidia chief says new applications will be built “on top of ChatGPT” as the technology redefines software

-

So much for ‘trust but verify’: Nearly half of software developers don’t check AI-generated code – and 38% say it's because it takes longer than reviewing code produced by colleagues

So much for ‘trust but verify’: Nearly half of software developers don’t check AI-generated code – and 38% say it's because it takes longer than reviewing code produced by colleaguesNews A concerning number of developers are failing to check AI-generated code, exposing enterprises to huge security threats

-

AI could truly transform software development in 2026 – but developer teams still face big challenges with adoption, security, and productivity

AI could truly transform software development in 2026 – but developer teams still face big challenges with adoption, security, and productivityAnalysis AI adoption is expected to continue transforming software development processes, but there are big challenges ahead

-

AI is creating more software flaws – and they're getting worse

AI is creating more software flaws – and they're getting worseNews A CodeRabbit study compared pull requests with AI and without, finding AI is fast but highly error prone

-

AI doesn’t mean your developers are obsolete — if anything you’re probably going to need bigger teams

AI doesn’t mean your developers are obsolete — if anything you’re probably going to need bigger teamsAnalysis Software developers may be forgiven for worrying about their jobs in 2025, but the end result of AI adoption will probably be larger teams, not an onslaught of job cuts.

-

Anthropic says MCP will stay 'open, neutral, and community-driven' after donating project to Linux Foundation

Anthropic says MCP will stay 'open, neutral, and community-driven' after donating project to Linux FoundationNews The AAIF aims to standardize agentic AI development and create an open ecosystem for developers

-

Atlassian just launched a new ChatGPT connector feature for Jira and Confluence — here's what users can expect

Atlassian just launched a new ChatGPT connector feature for Jira and Confluence — here's what users can expectNews The company says the new features will make it easier to summarize updates, surface insights, and act on information in Jira and Confluence