Why risk analysts think AI now poses a serious threat to us all

Serious minds are starting to buy into the notion that AI is a bigger threat to humanity than asteroids, pandemics and nuclear war combined

On September 26 last year, without most of us noticing, humanity’s long-term odds of survival became ever so slightly better. Some 11,000,000 km away, a NASA spacecraft was deliberately smashed into the minor planet-moon, Dimorphos, and successfully changed its direction of travel. It was an important proof of concept that showed that if we’re ever in danger of being wiped out by an asteroid, we might be able to stop it from hitting the Earth.

But what if the existential threat we need to worry about isn’t Deep Impact but Terminator? Despite years of efforts from professionals and researchers to quash any and all comparisons with apocalyptic science fiction and real-world artificial intelligence (AI), the threat of this technology going rogue and posing a serious threat to survival isn’t just for Hollywood movies. As crazy as it sounds, this is increasingly a threat that serious thinkers worry about.

AI development is akin to survival of the fittest

“AI could pose a threat to humanity's future if it has certain ingredients, which would be superhuman intelligence, extensive autonomy, some resources and novel technology,” says Ryan Carey, a research fellow in AI safety at Oxford University’s Future of Humanity Institute. “If you placed a handful of modern humans, with some resources and some modern technology into a world with eight billion members of some non-human primate species, that could pose a threat to the future of that species.”

The idea of treating AI as its own species, potentially replacing another, is consistent with evolution by natural selection. “There's no reason why AI couldn't be smarter than us and generally more capable and more fit in an evolutionary sense,” adds David Krueger, an assistant professor in Cambridge University’s Computational and Biological Learning Lab.

“Look at how humans have reshaped the Earth and changed the environment leading to the extinction of many other species,” he explains. “If [AI] becomes the more significant re-shaper of the environment than humans have been, then it could make the environment of the world something that's very difficult or impossible for humans to survive on.”

Today’s AI might not be ready to take on its human masters – it’s too busy identifying faces in our photos or transcribing our Alexa commands. But what makes the existential risk hypothesis scary is there is a compelling logic to it.

The three ingredients to AI dominance

1. The logic of AI

The first ingredient is to do with how machine learning systems “optimise” for specific objectives, but lack the “common sense” that (most) humans possess. “No matter how you specify what you want from the system, there's always loopholes in the specification,” says Krueger. “And when you just create a system that optimises for that specification as hard as it can, without any common sense interpretation, then you're going to get usually really weird and undesirable behaviour.”

Sign up today and you will receive a free copy of our Future Focus 2025 report - the leading guidance on AI, cybersecurity and other IT challenges as per 700+ senior executives

In other words, an AI tasked with something seemingly simple could have unintended consequences that we can’t predict, because of the way AI ‘thinks’ about solving problems. If, for example, it was told that the number of books in a child’s home correlates with university admissions, it wouldn’t read to the child more – it would start piling up boxes of books instead. Even if we think we’re designing AI that operates only within very strict rules, unusual behaviour can still occur.

2. Over-optimisation

RELATED RESOURCE

The newest approach: Stopping bots without CAPTCHAs

Reducing friction for improved online customer experiences

The second ingredient is how an AI with one specific task will naturally engage in “power seeking” behaviour in order to achieve it. “Let's say you have an algorithm where you can, by hand, compute the next digit of Pi, and that takes you a few seconds for every digit,” says Krueger. “It would probably be better to do this with a computer, and probably be better to do this with the most powerful computer that you can find. So, then you might say, ‘actually, maybe what I need to do is make a ton of money, so that I can build a custom computer that can do this more quickly, efficiently and reliably’.”

An AI might think the same way if it has such a singular goal. An AI optimised for a specific task will use every tool at its disposal to achieve that goal. “There's no natural end to that cycle of reasoning,” says Krueger. “If you're doing that kind of goal-directed reasoning, you naturally end up seeking more and more power and resources, especially when you have a long-term goal.”

3. Handing over power

Krueger’s third ingredient answers the obvious question: why would we humans ever give an AI system the ability to engage in this maniacal ‘power seeking’ behaviour? Ultimately, even if we have a clever AI, won’t it be stuck inside a computer?

“The reason why you might expect people to build these kinds of systems is because there will be some sort of trade-off between how safe the system is and how effective it is,” says Krueger. “If you give the system more autonomy, more power, or the ability to reshape the world in more ways, then it'll be more capable.”

It’s not like this isn’t already happening – we let AI software trade stocks or navigate traffic without any human intervention, because AI is much more useful when it is directly in charge of things.

“You can build a really safe system if it basically doesn't do anything,” Krueger continues. “But if you give it more and more ability to directly affect the world… if you let it directly take actions that affect the real world or that affect the digital world, that gives it more power and will make it more able to accomplish your goals more effectively.”

Cooking up an AI-powered disaster

Putting these ingredients together leads to one possible outcome: that your goals may not be the same as the AI’s goals. Because when we give an AI a goal, it may not be our actual goal. Theorists call this the “alignment” problem.

This was memorably captured in a thought experiment by the philosopher Nick Bostrom, who imagined an AI tasked with creating paperclips. With sufficient control of real-world systems, it might engage in power-seeking behaviour to maximise the number of paperclips it can manufacture.

The problem is, we don’t want to turn everything into paperclips – even though we told the machine to make paperclips, what we really wanted was merely to organise our papers. The machine isn’t blessed with that sort of common sense, though. It remains maniacally focused on one goal, and works to make itself more powerful to achieve it. Now substitute something benign such as paperclips for something scarier, such as designing drugs or weapons systems, and it becomes easier to imagine nightmare scenarios.

How worried should we be?

The risk of a paperclip-hungry AI wiping out human existence might seem fantastically low, but it does paint a picture of how a threatening AI could emerge by following the remorseless logic that underpins AI technologies today.

RELATED RESOURCE

“If you have a very intelligent system, it could be used for social engineering of anyone using the internet, or you could develop extraordinary technologies like bioweapons, nanotechnology or smarter AI,” says Carey.

If we’re lucky, though, we might see the problem coming. “It could be more of a gradual escalation of resources, as you're giving an AI the keys to more and more important corporate or military institutions as it gets more capable,” he continues, perhaps thinking of how over the past several decades we’ve put more of our trust in AI and computer systems, because it makes things more useful.

But, of course, we might not be so lucky. Other theorists argue that if you have an AI that is capable of redesigning and improving itself, then the sophistication of AI could scale up very quickly indeed. “If that's the case, then we're in some trouble,” Carey adds.

So just how worried should we be? Scarily, Carey points to the work of philosopher Toby Ord, who wrote The Precipice in 2020, attempting to weigh the risks of various existential risks, such as asteroids, pandemics and nuclear war. In the book, he estimates a 10% chance of an AI-linked catastrophe in the next century, compared to only a 6% chance of all of the other risks combined.

“The logic for that is that these natural catastrophes would have gotten us over the past million years already, if it was going to be a volcano or something,” says Carey. “He thinks AI, if anything, is most likely to be the big one.”

-

Future focus 2025: Technologies, trends, and transformation

Future focus 2025: Technologies, trends, and transformationWhitepaper Actionable insight for IT decision-makers to drive business success today and tomorrow

-

Choosing the Best RMM Solution for Your MSP Business

Choosing the Best RMM Solution for Your MSP BusinessWhitepaper Discover a powerful technology platform that empowers Managed Services Providers (MSPs)

-

Datto SIRIS business continuity and disaster recovery

Datto SIRIS business continuity and disaster recoveryWhitepaper Save time without cutting corners

-

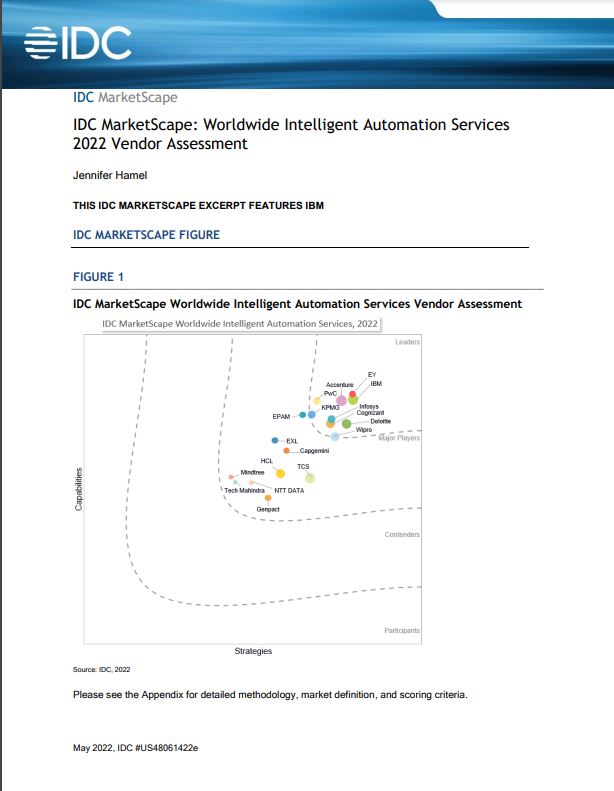

IDC spotlight paper: AI coding assistants for application modernization and IT automation

IDC spotlight paper: AI coding assistants for application modernization and IT automationwhitepaper Improve productivity, enable flexibility, and foster innovation

-

IBM watsonx code assistant for Z brings generative AI to mainframe application modernization

IBM watsonx code assistant for Z brings generative AI to mainframe application modernizationwhitepaper Modernize mainframe applications and adopt a hybrid cloud strategy

-

IBM watsonx: A differentiated approach to AI foundation models

IBM watsonx: A differentiated approach to AI foundation modelswhitepaper Modernize mainframe applications and adopt a hybrid cloud strategy

-

The revolutionary content supply chain

The revolutionary content supply chainwhitepaper How generative AI supercharges creativity and productivity

-

IDC spotlight paper: The truth about successful generative AI

IDC spotlight paper: The truth about successful generative AIwhitepaper Improve productivity, enable flexibility, and foster innovation