Arm CEO worried humans will inevitably lose control of AI

AI systems need robust safeguards and override capabilities, according to Arm CEO Rene Haas

Arm CEO Rene Haas has raised concerns that humans could eventually lose control over artificial intelligence (AI) systems if there are not sufficient safeguards or override capabilities put in place.

Speaking to Bloomberg, Haas admitted he worries about humans "losing capability" over the machines at some point in the future.

"The thing I worry about most is humans losing capability," he told the publication.

"You need some override, some backdoor, some way that the system can be shut down."

The rapid acceleration of the generative AI space has prompted concerns among portions of the global technology sector in recent months.

In March, a host of industry executives, including Apple co-founder Steve Wozniak and Tesla CEO Elon Musk, signed an open letter calling for a six-month pause on training AI systems more powerful than OpenAI’s GPT-4.

The letter claimed “human-competitive intelligence can pose profound risks to society and humanity”, and outlined a list of the “minimum” level of safeguarding precautions that should be implemented.

These precautionary measures include setting up new regulatory authorities dedicated to AI, oversight over the most advanced AI systems, liabilities for harm caused by AI, and more public funding for technical AI safety research.

A second open letter, issued by the Center for AI Safety with a far more streamlined message, was signed by notable names such as OpenAI CEO Sam Altman, Google DeepMind CEO Demis Hassabis, Anthropic CEO Dario Amodei, and Microsoft co-founder Bill Gates.

“Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war,” the letter read.

Both letters drew criticism from those who argue that focusing on abstract, long-term consequences distracts from the near-term harms AI systems already pose to society.

The rush to apply generative AI models across society has prompted concerns that they could exacerbate pre-existing biases and amplify social inequalities, and that the use of certain generative AI tools could infringe intellectual property (IP) and copyright laws.

The European Union (EU) recently announced it has reached a provisional agreement on its own regulatory framework for AI systems, with measures in place to guarantee the transparency and safety of ‘high impact’ foundation models and restrictions around using AI in biometric surveillance systems.

The agreement came after a protracted period of negotiations, slowed down by concerns from industry leaders that innovation may be inhibited by cumbersome regulatory red tape.

Solomon Klappholz is a former staff writer for ITPro and ChannelPro. He has experience writing about the technologies that facilitate industrial manufacturing, which led to him developing a particular interest in cybersecurity, IT regulation, industrial infrastructure applications, and machine learning.

-

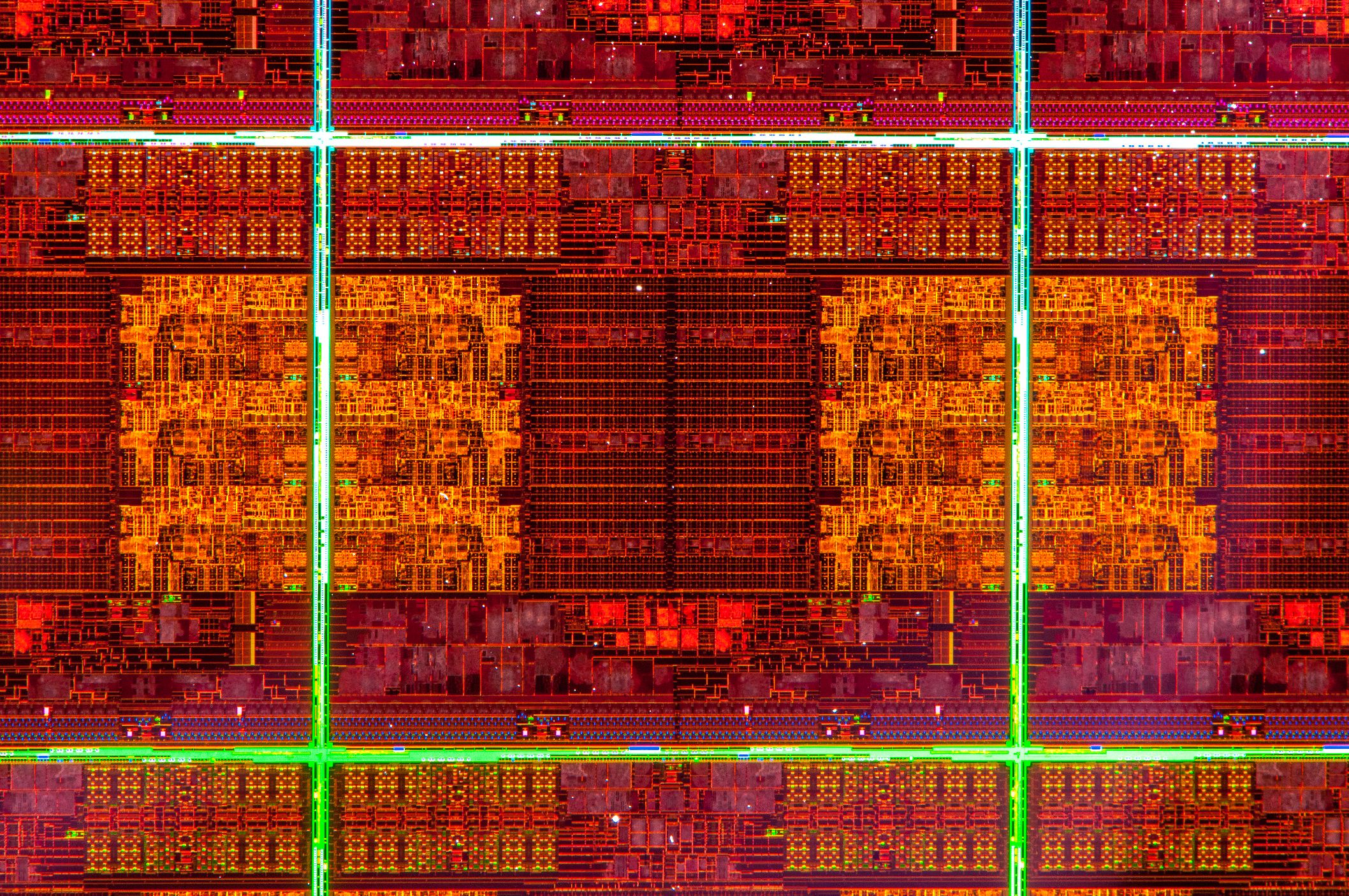

Intel foundry to manufacture ARM chip

Intel foundry to manufacture ARM chipNews Chip giant downplays the move, claiming it's part of pre-existing agreement.

-

Raspberry Pi vs Intel NUC: Need to Know

Raspberry Pi vs Intel NUC: Need to KnowVs Does the handheld Intel Core i3 NUC completely outshine it in this David vs. Goliath battle?

-

Open source champion labels Clover Trial processor a technology "dead-end"

Open source champion labels Clover Trial processor a technology "dead-end"News No one wants Intel's Windows-only chip, claims Bruce Perens.

-

Top 10 tech winners and losers of 2011

Top 10 tech winners and losers of 2011In-depth Many companies and products have done well in 2011 and many others haven't. The winners are as glorious as the losers are dejected and desperate...

-

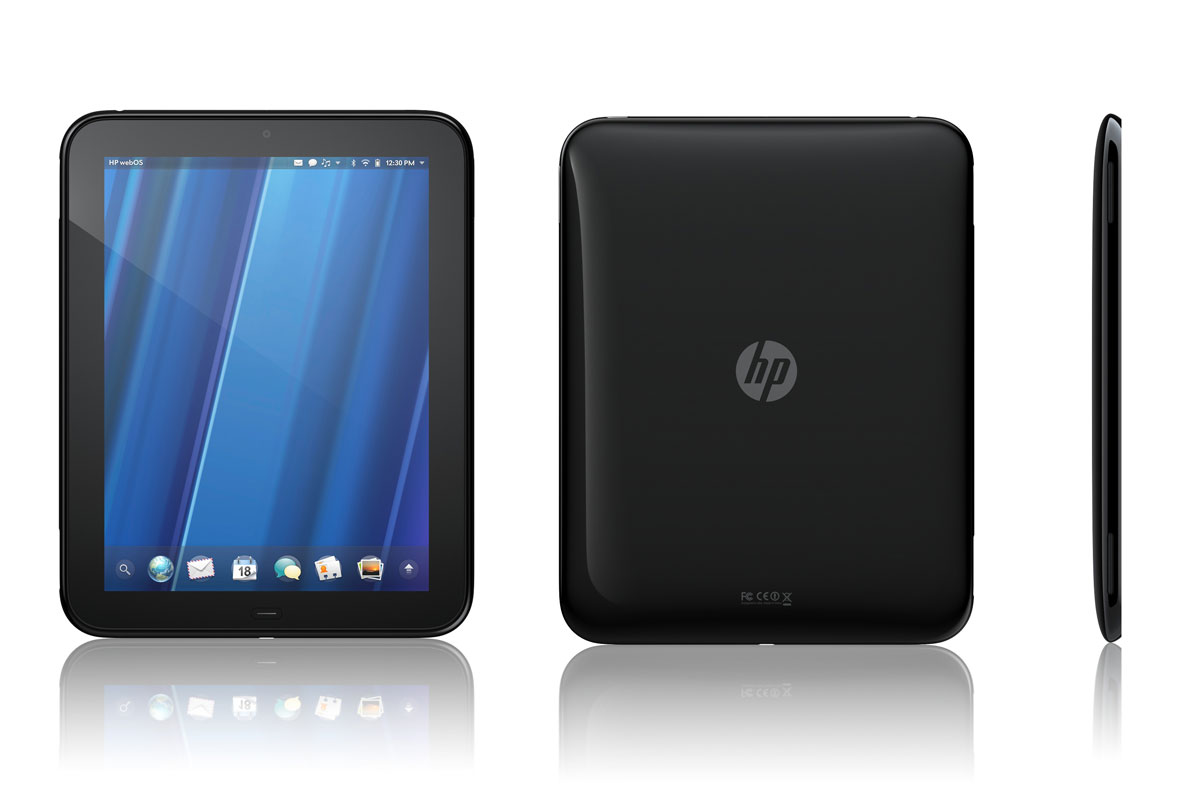

IBM extends ARM chip collaboration

IBM extends ARM chip collaborationNews IBM and ARM's new deal will see the pair expand their chip making work in the mobile sphere.

-

Smartphone Show 2008: Developers urged to ‘handshake’ with Symbian code

Smartphone Show 2008: Developers urged to ‘handshake’ with Symbian codeNews Code decays so Symbian wants to work with developers to help keep it looking good.

-

ARM teams with IBM on chips

ARM teams with IBM on chipsNews Joins group of chipmakers looking to take on Intel with 32 and 28 nanometer systems.