Organizations face ticking timebomb over AI governance

While the vast majority of firms now use AI, more than half have either very limited governance or no governance at all

Organizations are overlooking the risks of AI during their development processes, according to a new report by Trustmarque.

Business leaders are still building and deploying AI models using processes designed for traditional software, despite the new risks AI poses, the researchers said.

While 93% of organizations use AI in some capacity, they aren't updating their legacy software development processes to account for AI-specific risks such as model bias or gaps in explainability.

Only 7% of respondents were found to have fully embedded governance and more than half have either no governance at all, or governance that's very limited in scope.

Further, just 8% have fully integrated AI governance into their software development lifecycle, with most organizations describing their approach as either ad hoc or fragmented across teams.

AI models come with inherent risks such as bias and anomalous answers, known as hallucinations. While many organizations carry out security reviews or anomaly monitoring, under a third (28%) apply bias detection during testing and just 22% test for model interpretability.

Barriers to governance include infrastructure and tooling, with only 4% of organizations saying their data and infrastructure environments are fully prepared to support AI at scale. Registries, audit trails, and version control for AI models are often manual or missing entirely, the respondents said.

“Our report shows that AI adoption is outpacing governance. It’s a clear gap - 93% of organizations are using AI but only 7% have fully embedded governance frameworks,” said Seb Burrell, head of AI at Trustmarque.

“Right now, systems and processes haven’t kept up with the speed of innovation. Development teams lack proper tooling and infrastructure, and the issues are compounded by the lack of management buy-in for building robust governance systems.”

Meanwhile, there's a lack of central ownership when it comes to accountability for AI oversight, with only 9% reporting alignment between IT leadership and governance and 19% saying there's no clear owner for governance activity.

Most AI governance is still driven at the departmental level rather than through strategic leadership, the report found, with little monitoring of AI ROI or potential missteps. Under one-fifth (18%) of surveyed organizations have implemented continuous monitoring with KPIs to track progress.

There's also little cross-functional collaboration, with only 40% occasionally involving legal, ethics or HR in AI-related decisions and only one in five having a formal governance group that spans functions.

This gap between intent and execution, said the researchers, is the biggest challenge facing enterprise AI today. The authors urged businesses to align AI strategies with business priorities, embed governance into development workflows, and invest in infrastructure and skills.

Leaders should also make accountability a shared, cross-functional commitment, the report concluded.

"Organizations are deploying generative AI faster than they can govern it, and the consequences are real," said Burrell.

"Privacy breaches, operational instability, ethical missteps and loss of stakeholder trust are all risks that grow in the absence of effective oversight. These aren’t just compliance issues. They are long-term risks to business resilience and trust."

Emma Woollacott is a freelance journalist writing for publications including the BBC, Private Eye, Forbes, Raconteur and specialist technology titles.

-

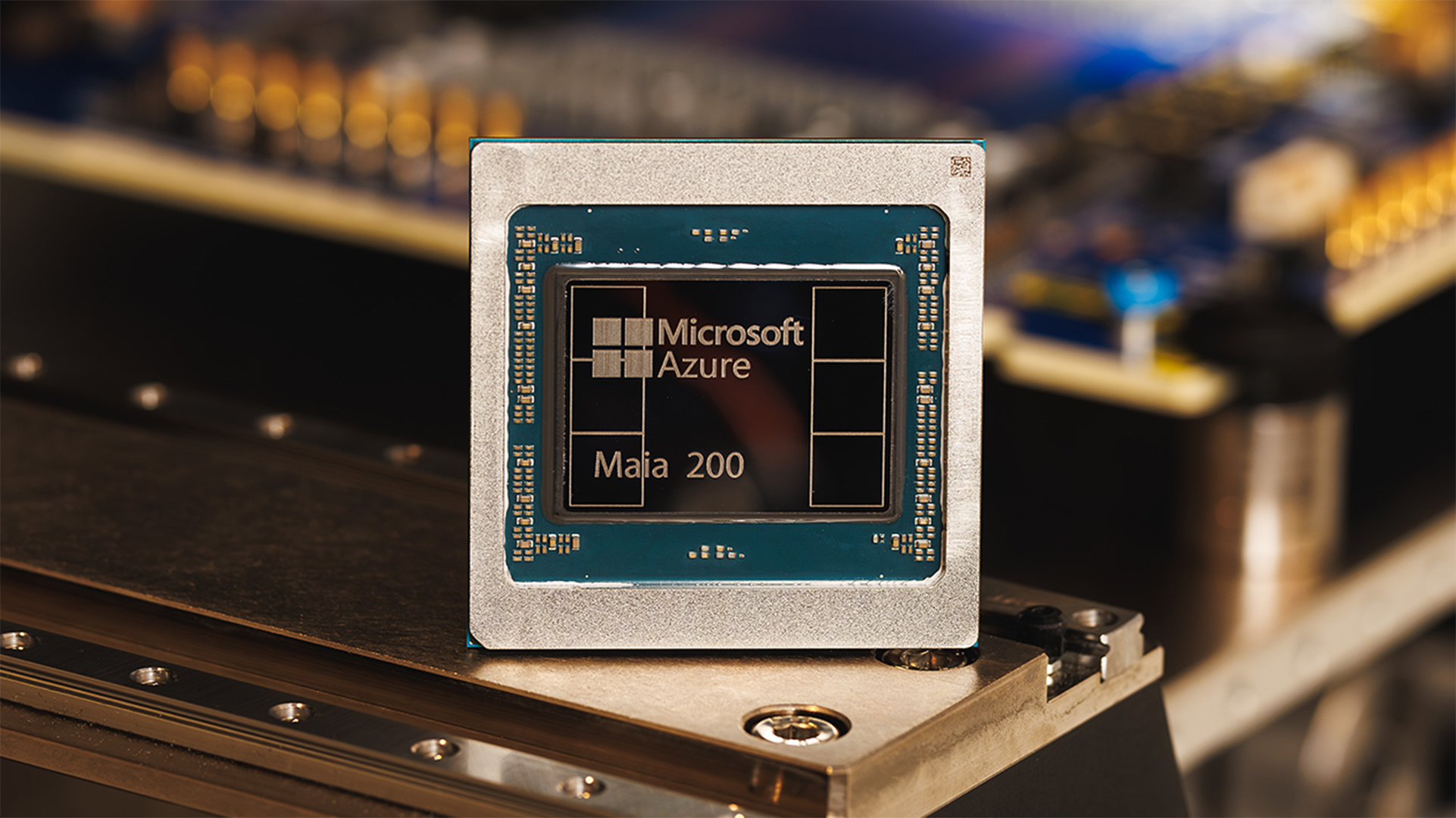

Microsoft unveils Maia 200 accelerator, claiming better performance per dollar than Amazon and Google

Microsoft unveils Maia 200 accelerator, claiming better performance per dollar than Amazon and GoogleNews The launch of Microsoft’s second-generation silicon solidifies its mission to scale AI workloads and directly control more of its infrastructure

-

Infosys expands Swiss footprint with new Zurich office

Infosys expands Swiss footprint with new Zurich officeNews The firm has relocated its Swiss headquarters to support partners delivering AI-led digital transformation