“Public trust has become the new currency for AI innovation”: Why SAS is ringing the alarm bell on AI governance for enterprises

Demonstrating responsible stewardship of AI could be the key differentiator for success with the technology, rather than simply speed of adoption

Leaders looking to implement AI should make AI governance and risk management their primary focus if they are to succeed in their adoption plans, according to a SAS executive.

Reggie Townsend, VP, Data Ethics at SAS, told assembled media at SAS Innovate 2025 that tech leaders need to consider the risks AI brings now, whether they’re ready for it or not.

To illustrate his point, Townsend said he had recently received two requests for AI use within SAS: one to use ChatGPT for account planning processes and another to use DeepSeek for internal workflow activities.

“Two separate requests, mind you, within two hours of one another, from two separate employees altogether,” Townsend noted.

“This is increasingly becoming a normal day for myself and for leaders like me around the world. So, if you just extrapolated that day over the course of a month, you’d have 40 different use cases for AI and those would only be the ones that we know about.”

While Townsend was quick to stress he has “zero desire” to know the details of every single use of AI around the company, he used the example to underline the clear need for every organization to set out internal AI policies.

To further illustrate his point, Townsend cited the IAPP’s AI Governance Profession Report 2025, which found 42% of organizations already rank AI governance as a top-five strategic priority and 77% of organizations overall are working on AI governance measures.


While the report found 90% of organizations that said they have adopted AI are pursuing AI governance, it also found 30% of those without AI are already laying the groundwork for their eventual adoption by working on an AI governance strategy.

“[This] would suggest that they’re taking a governance first approach to this thing, which I actually applaud,” Townsend said. “I like to say that trustworthy AI begins before the first line of code.”

Townsend added that every large organization in the US is either buying AI or adopting it without knowing, via AI updates to products they already use. Employees, he added, are already using AI and leaders will need to consider this demand now, not later, to ensure they don’t expose themselves to risks or undermine their AI adoption roadmap.

“The organizations that thrive won't simply be those that deploy AI first but it'll be those that deploy AI most responsibly,” he said, adding that leading organizations will recognize the strategic imperative of governance and embrace its potential benefits for innovation.

Describing governance as a “catalyst” for technological innovation, Townsend also warned that companies pushing for AI without considering safe adoption will be hurt in the long term.

“Organizations without AI governance face not just potential regulatory penalties, depending on the markets they’re in, they face a potential competitive disadvantage because public trust has become the new currency for AI innovation.”

Clear roadmaps for AI governance

SAS is targeting reliable AI, having just announced new AI models for industry-specific use cases. Townsend explained that when it comes to AI that delivers predictable results, leaders should first establish a framework for measuring actual outcomes versus the expected outcomes, to which compliance and oversight teams can refer back.

“Now, this is a matter of oversight, for sure, this is a matter of operations, and this is a matter of organizational culture,” he said.

“All of these things combined are what represent this new world of AI governance, where there’s a duality, I like to say, that exists between these conveniently accessible productivity boosters that the team has been talking about this morning, intersecting with inaccuracies and inconsistency and potential intellectual property leakage.”

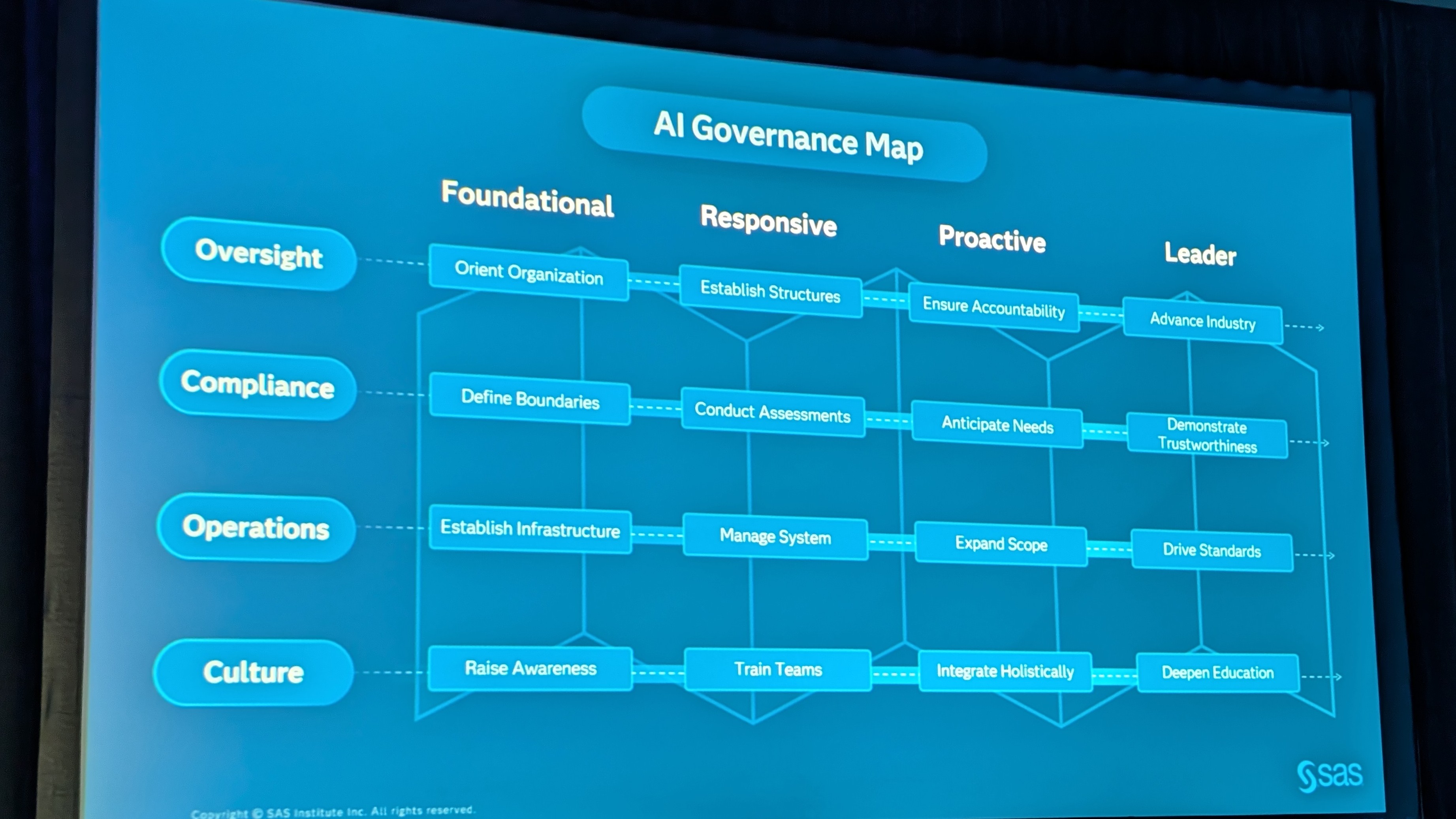

To address these issues, SAS has created the AI Governance Map, a resource for customers looking to weigh up their AI maturity across oversight, compliance, operations, and culture.

This helps organizations to assess their AI governance no matter where they are with AI implementation, rather than mandating they replace current AI systems with those specified by SAS.

In the near future, SAS has committed to releasing a new holistic AI governance solution for monitoring models and agents, as well as handling AI orchestration and aggregation. No further details on the tool have been revealed, but SAS has invited interested parties to sign up for a private preview later in 2025.

Townsend’s comments echo those of Vasu Jakkal, corporate vice president at Microsoft Security, who last week told RSAC Conference 2025 attendees that governance plays an irreplaceable role and will be critical as organizations adopt more AI agents.

Just as SAS is stressing governance as a key concern, Jakkal took the position that human-led governance will stay relevant as organizations look to keep tabs on the decisions that AI agents make. Both positions are a pared-back version of the future predicted by the likes of Marc Benioff, who in February claimed that today’s CEOs will be the last to manage a fully human workforce.

Rory Bathgate is Features and Multimedia Editor at ITPro, overseeing all in-depth content and case studies. He can also be found co-hosting the ITPro Podcast with Jane McCallion, swapping a keyboard for a microphone to discuss the latest learnings with thought leaders from across the tech sector.

In his free time, Rory enjoys photography, video editing, and good science fiction. After graduating from the University of Kent with a BA in English and American Literature, Rory undertook an MA in Eighteenth-Century Studies at King’s College London. He joined ITPro in 2022 as a graduate, following four years in student journalism. You can contact Rory at rory.bathgate@futurenet.com or on LinkedIn.
