Who is Ian Hogarth, the UK’s new leader for AI safety?

The startup and AI expert will head up research into AI safety


Tech investor Ian Hogarth has been announced as the chair of the UK government’s Foundation Model Taskforce, which will carry out research into AI safety.

The Taskforce will work with industry partners and sector experts to establish public consensus on the safety of machine learning (ML) models, amidst widespread adoption of new AI systems.

Hogarth co-founded the concert service Songkick, as well as the investment firm Plural Platform, which organizes venture capital for “the unemployables”, its term for experienced founders looking to strike out on their own.

Through the firm, Hogarth has reportedly invested in over 40 startups, including many that specialize in ML and AI.


He studied engineering at Cambridge University, and followed this with an MA specializing in machine learning. Hogarth is now a visiting professor at University College London, working at its Institute for Innovation and Public Purpose (IIPP).

Since 2018, Hogarth has been a co-author of the State of AI report, an annual analysis of the development of AI.

The 2022 report stated that the UK is “taking the lead” on AI risk and that 69% of surveyed ML experts believed more work on AI safety was needed.


One of nine predictions made at the end of the report was that over $100 million would be invested in organizations dedicated to “AI alignment” in 2023, in acknowledgment of how AI development has outpaced safety.

In order to assess the risks posed by AI, the Taskforce will bring together experts from academia, government, and industry.

It will seek to establish guardrails that can be implemented internationally, cement the UK’s place as a leader in AI research, and improve the usage of the technology worldwide.

This will be an important aspect of the UK’s approach to AI regulation, which has lagged behind that of the EU to date.

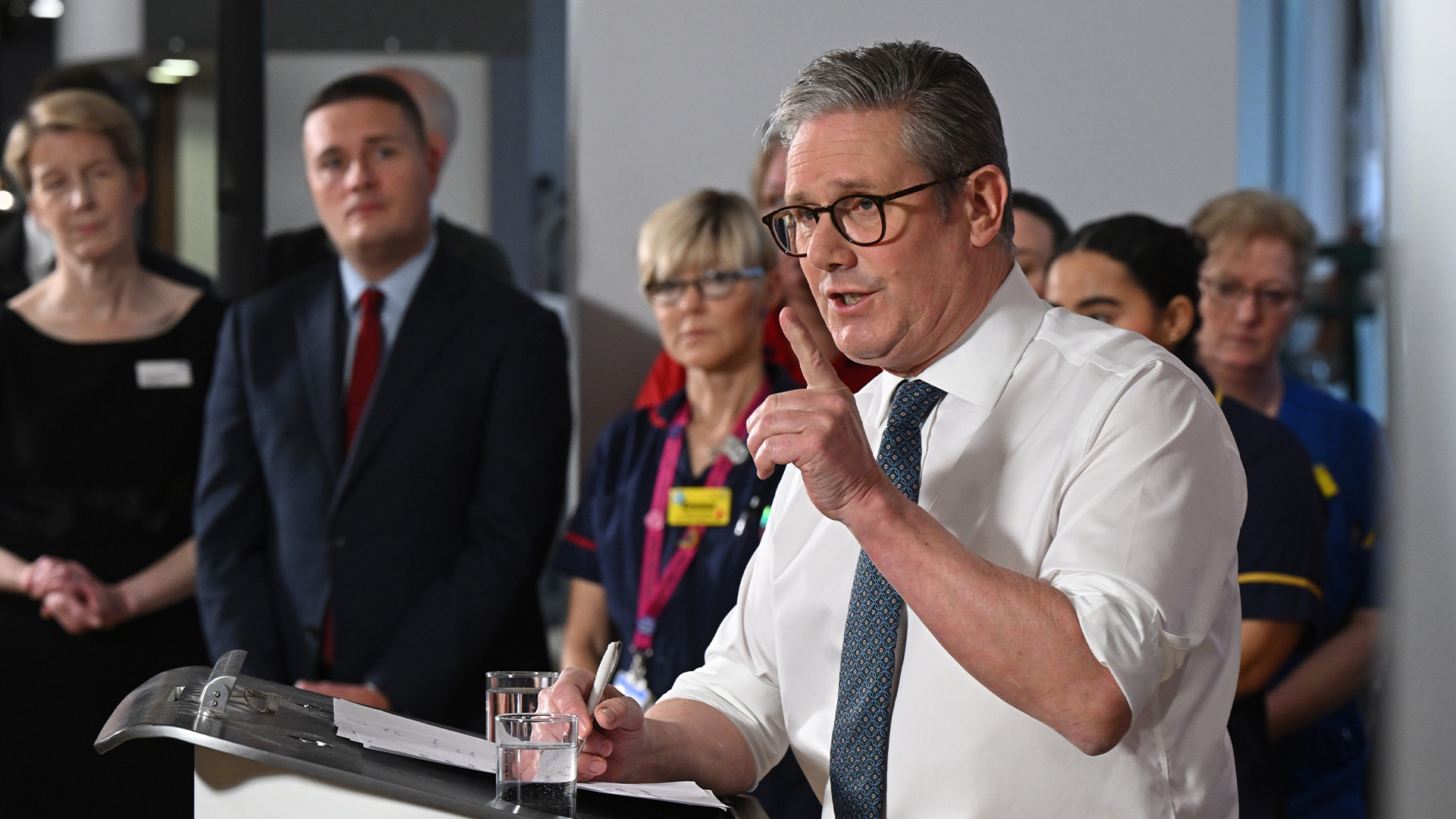

The government has compared the Taskforce to the successful Vaccine Taskforce, which helped expedite the UK’s vaccine rollout in the early days of the pandemic.

“Our Foundation Model Taskforce will steer the responsible and ethical development of cutting-edge AI solutions, and ensure that the UK is right at the forefront when it comes to using this transformative technology to deliver growth and future-proof our economy,” said technology secretary Chloe Smith.

“With Ian on board, the Taskforce will be perfectly placed to strengthen the UK’s leadership on AI, and ensure that British people and businesses have access to the trustworthy tools they need to benefit from the many opportunities artificial intelligence has to offer.”

Hogarth’s role in the UK’s AI Taskforce

One of Hogarth’s first tasks in the role will be to lay the groundwork for the UK’s upcoming AI summit.

The Taskforce will initially receive £100 million of government funding and is expected to play a key role in the government’s AI ambitions going forward.

6/ The field of AI safety has been significantly under-resourced even as funding for AGI companies has now crossed a cumulative $20b+. This is a remarkable commitment by a single nation to expand the funding for research in such an important area. pic.twitter.com/Vfv6QBLqFO (June 18, 2023)

Foundation models, which employ vast training data to produce a wide range of outputs, are a core facet of generative AI.

Generative AI has become a hot topic in almost all industries, with headlines focused on tools such as ChatGPT and Bard driving a rise in public interest in the technology.

But foundation models could power a wide range of tools beyond chatbots, and serve valuable purposes in high-risk sectors such as pharmaceuticals or energy.

The Taskforce will work to improve public perception of foundation models by clearly assessing the risks posed by different systems.

Through its research and at the summit hosted later this year, it will aim to establish shared approaches to regulation, ethics, and security standards for AI.

Private AI companies have already agreed to give the Taskforce early access to certain models to allow for safety evaluation.

Rory Bathgate is Features and Multimedia Editor at ITPro, overseeing all in-depth content and case studies. He can also be found co-hosting the ITPro Podcast with Jane McCallion, swapping a keyboard for a microphone to discuss the latest learnings with thought leaders from across the tech sector.

In his free time, Rory enjoys photography, video editing, and good science fiction. After graduating from the University of Kent with a BA in English and American Literature, Rory undertook an MA in Eighteenth-Century Studies at King’s College London. He joined ITPro in 2022 as a graduate, following four years in student journalism. You can contact Rory at rory.bathgate@futurenet.com or on LinkedIn.
