Rishi Sunak’s stance on AI goes against the demands of businesses

Execs demanding transparency and consistency could find themselves disappointed with the government’s hands-off approach

The UK government’s pro-innovation approach to AI under Prime Minister Rishi Sunak appears to fly in the face of demands for clarity and oversight from firms throughout the sector.

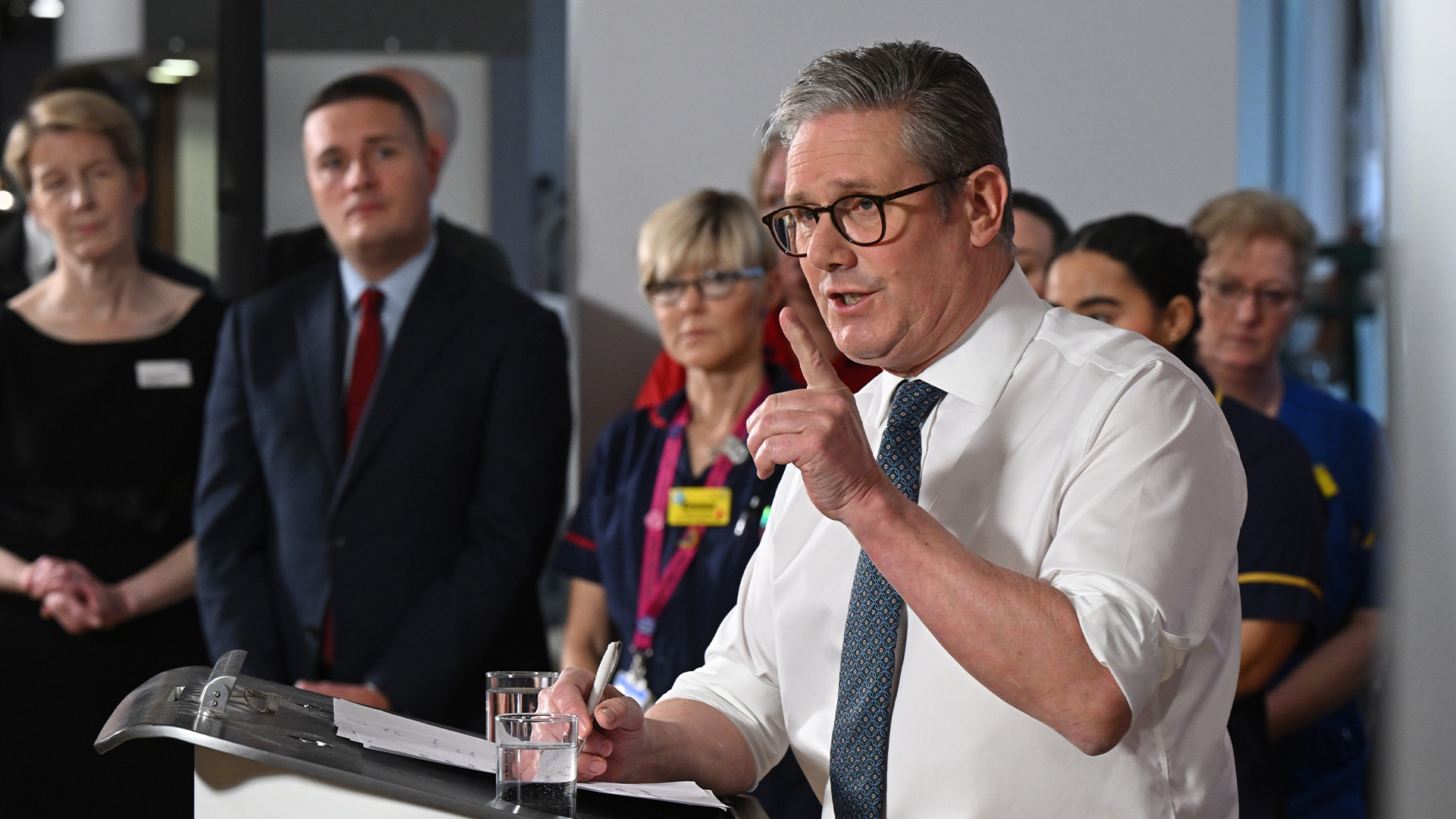

In a speech on 26 October, Sunak stated that the UK government would not “rush to regulate” AI due to its belief in innovation, and questioned how it could be expected to “write laws that make sense for something we don’t yet fully understand”.

Sunak qualified his statement by citing a new government report on AI risks, produced with input from the UK’s intelligence community, and said he wanted to be honest about the threats the technology poses.

The report suggested that threat actors and terrorists could use generative AI to create self-replicating malware, spread disinformation, run social engineering campaigns, or even gather instructions on building chemical or biological weapons.

A survey by ISACA, the association of IT professionals, found 99% of surveyed European business and IT professionals were worried about the exploitation of AI by threat actors.

Separate research from Kaspersky found 59% of European C-suite executives were seriously concerned about the risks of implementing AI, including the potential for data leaks. Many firms are supplementing their AI investment with additional spending on application security tools to mitigate these risks.

Business leaders are not alone in considering these risks. The report referenced by Sunak, Safety and Security Risks of Generative Artificial Intelligence to 2025, found that AI will “increase sharply the speed and scale of some threats”.

UK employees are also calling for greater transparency on how AI is implemented, and clear government regulation could play a key role in giving the workforce a consistent picture of the approaches to, and requirements for, AI.

In his speech, Sunak said the government would play a role in shaping the field of AI because the private sector cannot be relied upon to keep itself entirely in check.

“Right now, the only people testing the safety of AI are the very organizations developing it,” Sunak said.

“Even they don’t always fully understand what their models could become capable of. And there are incentives in part, to compete to build the best models, quickest. So, we should not rely on them marking their own homework, as many of those working on this would agree.

“Not least because only governments can properly assess the risks to national security. And only nation-states have the power and legitimacy to keep their people safe.”

No rush to over-regulate

While Sunak has been vocal on AI developments, the government has been slow to implement regulation of the technology. The UK has notably lagged behind the EU in this regard, with the EU AI Act set to come into force sometime in 2025 or 2026.

This is partly due to the UK’s current focus on fostering AI innovation and courting international partners. Sunak has been eager to position the UK as a contender to become a “leading AI nation”.

Core to this aim is the government’s AI Safety Summit, which will be held at Bletchley Park, a site best known for its connection to Alan Turing, often called the father of artificial intelligence.

The government also appointed Ian Hogarth as head of the UK’s Foundation Model Taskforce. Hogarth has co-authored the annual State of AI report since 2018 and will oversee an initial £100 million of government funding to guide the development of safe AI.

The government has also awarded £8 million in funding to support 800 AI scholarships across the country, and has announced more than £2.5 billion in joint funding for projects on AI and quantum computing.

“In today’s speech ahead of the AI Safety Summit, Prime Minister Rishi Sunak said that the UK is not in a rush to regulate,” said Pamela Maynard, CEO at IT consulting and services firm Avanade.

“Regulation or not, we all have a responsibility to balance the immense economic opportunities that the AI revolution could provide with its inherent risks. That’s why we urge companies and governments to put the right responsible AI frameworks in place before they use it and commit to evolving their guidelines as the technology evolves.”

Some companies, including Microsoft, have already set out plans to tackle AI problems such as hallucinations and potential security threats.

Measures like these will be needed in the short term to head off potential AI-related legal nightmares down the road, for both companies and governments.

Slow and steady wins the race

Amanda Brock, CEO of OpenUK, said the government’s decision not to “rush” AI regulation should be welcomed. Brock added that the move could pay dividends further down the line and differentiate the UK from the EU’s aggressive legislative approach.

“The PM has got it right not to rush to regulate as a point of principle,” said Brock.

“Recognizing that ‘we can’t write laws for something that we don’t yet fully understand’ is a critical and positive acknowledgment from the PM, which may allow the UK to avoid the mistakes we see in Europe’s rush to control via the AI Act.

“But if he wants the trust so evident in his speech, we must see his ‘honest approach’ looking to understand potential risk over time including principles-based regulation.”

Brock also called on the Prime Minister to build bridges with the open source community and to embrace the potential of open innovation and open data in AI.

She argued that a closer collaborative relationship with the open source community could help ensure the country can “support the most forward-thinking risk management of our AI future”.

If the government did pursue more open AI development, it could further distance itself from the EU. Representatives from the open-source community have called on the EU to remove “impractical barriers” to AI development, such as the classification of AI research and testing as “commercial activity”.

Was the door to heavier regulation left open?

The UK government has previously stated that it seeks to avoid heavy-handed regulation of AI out of fear that it might stifle innovation across the sector.

Sunak made clear in his speech that the government does not intend to deviate drastically from this position as it pursues its long-term goals.

But in the months and years to come, it is likely that all governments will be forced to revisit AI legislation in some capacity to ensure it serves the needs of citizens and provides the right balance of competition and regulation for the private sector.

Workers' rights are already a concern as the reality of mass AI-linked job cuts sets in. The Trades Union Congress (TUC) has called for new legislation and legal frameworks to be established in order to protect workers in the face of AI.

“We urgently need new employment legislation, so workers and employers know where they stand,” said Kate Bell, TUC assistant general secretary.

“Without proper regulation of AI, our labor market risks turning into a wild west. We all have a shared interest in getting this right.”

The government’s own white paper on AI, A pro-innovation approach to AI regulation, was more equivocal about a statutory approach. While Sunak rejected heavy-handed regulation in his speech, the white paper implies the government can only guarantee it will not pursue a statutory approach “initially”.

“Following this initial period of implementation, and when parliamentary time allows, we anticipate introducing a statutory duty on regulators requiring them to have due regard to the principles,” the white paper states.

The AI Safety Institute, announced during Sunak’s speech, may also help meet a growing need for dedicated regulators and independent bodies to oversee the safe development of AI.

Government bodies such as the UK’s Frontier AI Taskforce may prove instrumental in meaningfully shepherding AI development in the future.

Similar efforts in the private sector, such as the Frontier Model Forum set up by Microsoft, Google, OpenAI, and Anthropic, have been described as having “almost zero” chance of success due to competition.

Rory Bathgate is Features and Multimedia Editor at ITPro, overseeing all in-depth content and case studies. He can also be found co-hosting the ITPro Podcast with Jane McCallion, swapping a keyboard for a microphone to discuss the latest learnings with thought leaders from across the tech sector.

In his free time, Rory enjoys photography, video editing, and good science fiction. After graduating from the University of Kent with a BA in English and American Literature, Rory undertook an MA in Eighteenth-Century Studies at King’s College London. He joined ITPro in 2022 as a graduate, following four years in student journalism. You can contact Rory at rory.bathgate@futurenet.com or on LinkedIn.
