UK aims to be an AI leader with November safety summit

Bletchley Park will play host to the guests who will collaborate on the future of AI

Sign up today and you will receive a free copy of our Future Focus 2025 report - the leading guidance on AI, cybersecurity and other IT challenges as per 700+ senior executives

You are now subscribed

Your newsletter sign-up was successful

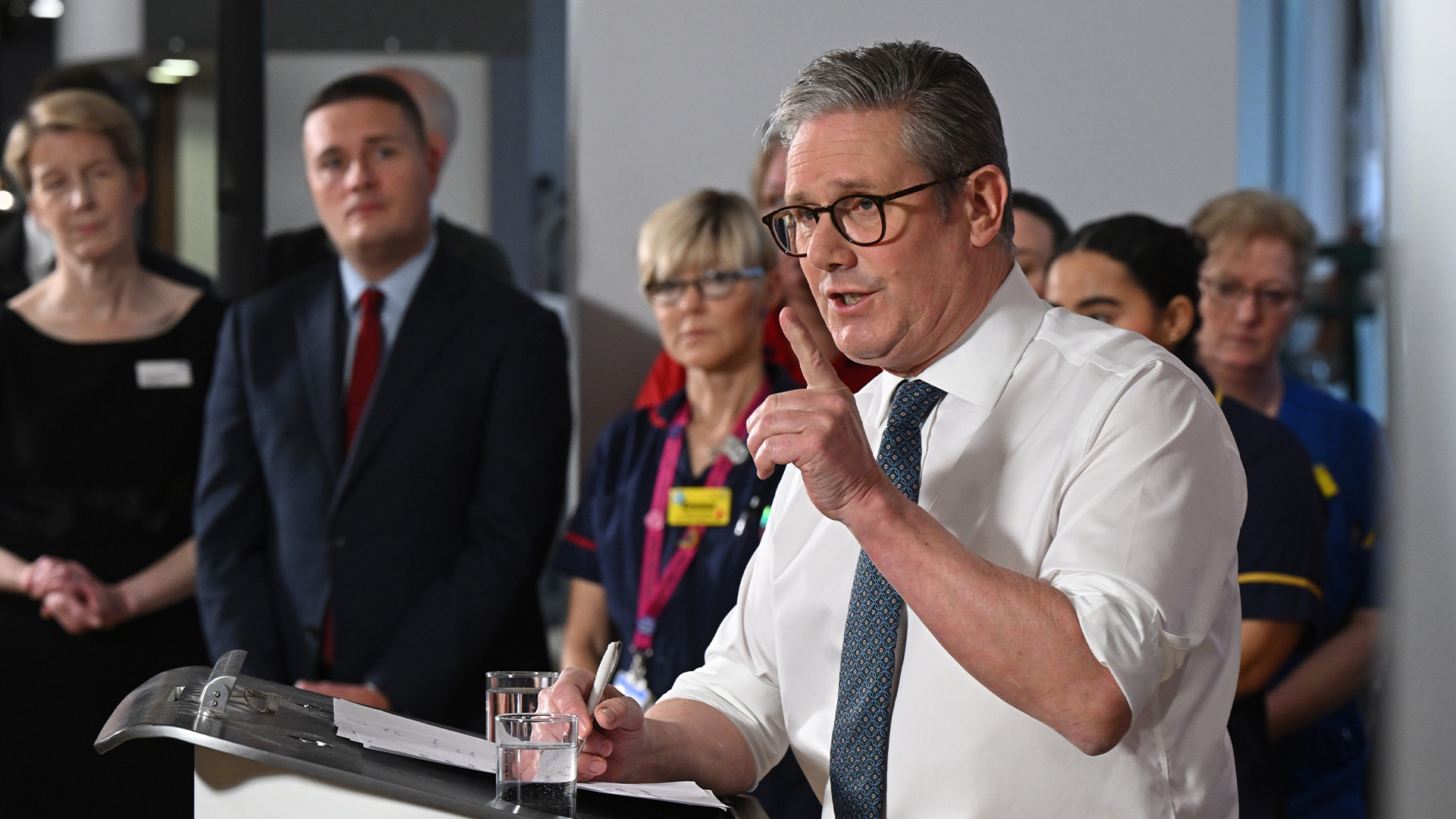

The UK has set the dates for its AI Safety Summit, which will bring together government representatives, academics, and industry experts to plan for the future of artificial intelligence (AI) development.

A key focus of the event will be ‘frontier models’, the next generation of AI systems on the horizon, which hold immense potential for productivity and economic development but could also pose serious risks.

The summit will be held on 1 and 2 November at Bletchley Park.

To date, AI innovation and regulation have progressed in a largely fragmented way, with some collaboration through the G7 and OECD but no concrete international approach to safe AI implementation.

In August, the government announced that Matt Clifford, CEO of Entrepreneur First and chair of the Advanced Research and Invention Agency (ARIA), and Jonathan Black, former deputy national security adviser, would lead preparation for the summit.

Bletchley Park was famously home to Alan Turing and the rest of the Government Code and Cypher School (GC&CS) during the Second World War. Turing is recognized as one of the earliest pioneers of AI and a giant of computer science.

“To fully embrace the extraordinary opportunities of artificial intelligence, we must grip and tackle the risks to ensure it develops safely in the years ahead,” said Rishi Sunak, prime minister of the UK.


Specific attendees have not yet been announced, but it’s hoped that agreements made at the summit will sit alongside the OECD’s Recommendation on Artificial Intelligence, Global Partnership on AI (GPAI), and the G7 Hiroshima AI Process.

The UK government has made several moves in recent months to center AI as an important technology for the country’s future. In the Spring Budget, chancellor Jeremy Hunt set aside £900 million ($1.1 billion) for AI research and the creation of a new exascale supercomputer.

It has also allocated £3.75 million ($4.76 million) for AI-driven net zero projects intended to help decarbonize the UK economy.

Tech investor Ian Hogarth, co-author of the annual State of AI report, has become chair of the UK’s AI Foundation Model Taskforce, a new group that will liaise with experts across the sector to define international standards for AI safety.

The taskforce is directly involved in the AI Safety Summit and has an additional mission to identify how the public can be better informed about AI models.

Why is AI facing greater regulation?

There is huge interest in AI across the private sector, and a wide range of products and services that use the technology is already available. But risk analysts have also warned that AI misuse poses a serious threat.

Amid this AI gold rush, some have called for more caution, arguing that the speed of AI development is outpacing safety measures. In March 2023, figures such as Steve Wozniak and Elon Musk signed an open letter calling for a six-month pause in the development of advanced AI systems to curb potential risks.

The pace of development has undeniably quickened in the past year, with companies such as Microsoft, Meta, Google, and Amazon all grabbing AI with both hands and previously niche firms such as OpenAI and Anthropic storming into the headlines.

In order to create ethical AI, developers will need to follow strong internal guidelines or be compelled to uphold certain standards by legislation.

Demis Hassabis, CEO of Google DeepMind, has stated that the safe and responsible creation of AI is paramount. In an interview with Time, former Google SVP Alan Eustace alleged that Hassabis only agreed to Google’s acquisition of DeepMind on the condition that ethics would come first in its development.

Microsoft, OpenAI, Google, and Anthropic have also formed the Frontier Model Forum, with the aim of identifying the safest ways to develop AI as increasingly advanced models come to fruition.

Avivah Litan, distinguished VP analyst at Gartner, told ITPro, however, that the Frontier Model Forum has “practically zero” chance of developing universal solutions for safe AI due to the competition between its members.

Many governments have also come to the conclusion that the private sector cannot be trusted to develop, train, or implement AI systems ethically and in line with individual rights without heavy intervention.


The EU’s AI Act lays out a range of new responsibilities for AI developers including a requirement for them to disclose the data they used to train models.

Individual AI systems will be subject to strict risk assessments under the legislation, with ‘high risk’ systems required to be registered in a public database and subject to stringent checks on their use. Firms that do not comply with the legislation will face fines of up to €20 million or 4% of worldwide annual turnover.

Systems that use AI for live facial recognition or to subliminally influence users will be deemed unacceptable and banned under the law. The act is expected to come into effect in 2025, having passed a series of key votes in the European Parliament.

The UK’s own approach to AI regulation is progressing more slowly than the EU’s and explicitly prioritizes innovation over risk. To this end, the UK’s white paper on AI development does not propose a statutory approach to AI regulation.

It’s hoped that the UK’s AI Safety Summit will set out a clearer approach to AI for UK tech firms. The summit will likely align the UK more closely with US policy on AI, as the two countries seek closer collaboration under the recently announced Atlantic Declaration.

Rory Bathgate is Features and Multimedia Editor at ITPro, overseeing all in-depth content and case studies. He can also be found co-hosting the ITPro Podcast with Jane McCallion, swapping a keyboard for a microphone to discuss the latest learnings with thought leaders from across the tech sector.

In his free time, Rory enjoys photography, video editing, and good science fiction. After graduating from the University of Kent with a BA in English and American Literature, Rory undertook an MA in Eighteenth-Century Studies at King’s College London. He joined ITPro in 2022 as a graduate, following four years in student journalism. You can contact Rory at rory.bathgate@futurenet.com or on LinkedIn.
