Who is Mustafa Suleyman?

From Oxford dropout to ethical AI pioneer, Mustafa Suleyman is one of the biggest players in AI

The AI space is dominated by big personalities and bold claims about the future of the technology. It’s hard to go a week in 2025 without a mention of ‘artificial general intelligence’ and the promise (or doom) that it will herald.

With the global tech industry’s razor-sharp focus on the roll-out of the technology in recent years, calm, conservative voices have been welcomed, and Mustafa Suleyman certainly ranks among them.

Suleyman commands a gleaming reputation in the AI field and has been a highly vocal figure in the space for well over a decade now. Having dropped out of a degree in philosophy and theology at the University of Oxford, he turned his hand to entrepreneurship and eventually co-founded DeepMind in 2010. Four years later, the company was sold to search and cloud giant Google.

Suleyman stayed at what is now Google DeepMind until 2019, when he joined the parent company in a policy role, before departing in 2022 to found Inflection AI.

His involvement with Google DeepMind is what made his most recent career move a bombshell: in March 2024, Suleyman was unveiled as head of Microsoft’s consumer AI division, charged with driving the tech giant’s push in the generative AI race.

Serving as CEO of Microsoft AI, Suleyman holds a place on the senior leadership team, reporting directly to chief executive Satya Nadella, and steers the research and development of AI products including Microsoft’s flagship Copilot service, Bing, and Edge.

Suleyman’s appointment was a major coup for Microsoft, given his foundational role in the development of Google’s AI capabilities.

Ethical AI development

As well as being a leading light of the AI industry and UK tech royalty, Suleyman – perhaps drawing on his background in philosophy – has become a well-known contributor to the discussions around AI ethics.

Suleyman’s stance has often erred on the side of caution, emphasizing the responsibility placed on enterprises developing these technologies and the need for ethical development practices, oversight, and governance.

In his 2023 book, The Coming Wave: AI, Power, and our Future, Suleyman noted that technologies such as AI will “usher in a new dawn for humanity, creating wealth and surplus unlike anything ever seen”. At the same time, he warned that the rapid proliferation of the technology could “empower a diverse array of bad actors to unleash disruption, instability, and even catastrophe”.

Suleyman has consistently backed up this stance on the technology throughout his time in the big tech limelight. While still at DeepMind, he played a key role in steering the company’s Ethics & Society unit, established to help “explore and understand the real-world impacts of AI”.

More recently, in a December 2024 TED Talk, Suleyman explained how his approach to thinking and talking about AI had changed with the arrival of generative AI.

“For years we in the AI community – and I specifically – have had a tendency to refer to this as just tools but that doesn’t really capture what’s actually happening here. AIs are clearly more dynamic, more ambiguous, more integrated, and more emergent than mere tools, which are entirely subject to human control. So to contain this wave, to put human agency at its center, and to mitigate the inevitable unintended consequences that are likely to arise, we should start to think about them as we might a new kind of digital species.”

“Just pause for a moment and think about what they really do,” he continued. “They communicate in our languages, they see what we see, they consume unimaginably large amounts of information. They have memory, they have creativity, they can even reason to some extent and formulate rudimentary plans. They can act autonomously if we allow them. And they do all this at levels of sophistication that is far beyond anything that we’ve ever known from a mere tool.”

“I think this frame helps sharpen our focus on the critical issues: What are the risks? What are the boundaries that we need to impose? What kind of AI do we want to build or allow to be built? This is a story that’s still unfolding.”

Ross Kelly is ITPro's News & Analysis Editor, responsible for leading the brand's news output and in-depth reporting on the latest stories from across the business technology landscape. Ross was previously a Staff Writer, during which time he developed a keen interest in cyber security, business leadership, and emerging technologies.

He graduated from Edinburgh Napier University in 2016 with a BA (Hons) in Journalism, and joined ITPro in 2022 after four years working in technology conference research.

For news pitches, you can contact Ross at ross.kelly@futurenet.com, or on Twitter and LinkedIn.

-

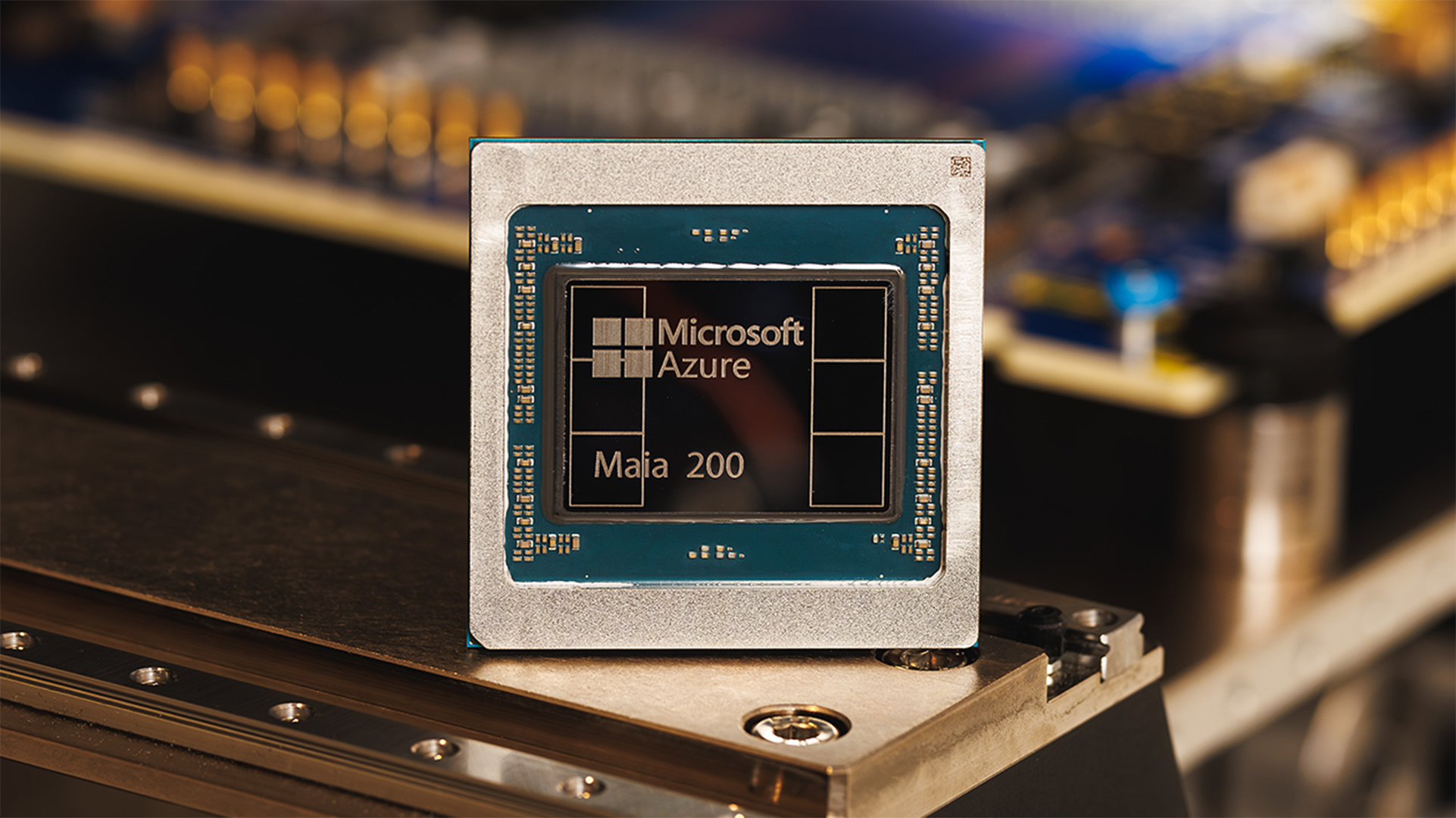

Microsoft unveils Maia 200 accelerator, claiming better performance per dollar than Amazon and Google

Microsoft unveils Maia 200 accelerator, claiming better performance per dollar than Amazon and GoogleNews The launch of Microsoft’s second-generation silicon solidifies its mission to scale AI workloads and directly control more of its infrastructure

-

Infosys expands Swiss footprint with new Zurich office

Infosys expands Swiss footprint with new Zurich officeNews The firm has relocated its Swiss headquarters to support partners delivering AI-led digital transformation

-

Lloyds Banking Group wants to train every employee in AI by the end of this year – here's how it plans to do it

Lloyds Banking Group wants to train every employee in AI by the end of this year – here's how it plans to do itNews The new AI Academy from Lloyds Banking Group looks to upskill staff, drive AI use, and improve customer service

-

CEOs are fed up with poor returns on investment from AI: Enterprises are struggling to even 'move beyond pilots' and 56% say the technology has delivered zero cost or revenue improvements

CEOs are fed up with poor returns on investment from AI: Enterprises are struggling to even 'move beyond pilots' and 56% say the technology has delivered zero cost or revenue improvementsNews Most CEOs say they're struggling to turn AI investment into tangible returns and failing to move beyond exploratory projects

-

Companies continue to splash out on AI, despite disillusionment with the technology

Companies continue to splash out on AI, despite disillusionment with the technologyNews Worldwide spending on AI will hit $2.5 trillion in 2026, according to Gartner, despite IT leaders wallowing in the "Trough of Disillusionment" – and spending will surge again next year.

-

A new study claims AI will destroy 10.4 million roles in the US by 2030, more than the number of jobs lost in the Great Recession – but analysts still insist there won’t be a ‘jobs apocalypse’

A new study claims AI will destroy 10.4 million roles in the US by 2030, more than the number of jobs lost in the Great Recession – but analysts still insist there won’t be a ‘jobs apocalypse’News A frantic push to automate roles with AI could come back to haunt many enterprises, according to Forrester

-

Businesses aren't laying off staff because of AI, they're using it as an excuse to distract from 'weak demand or excessive hiring'

Businesses aren't laying off staff because of AI, they're using it as an excuse to distract from 'weak demand or excessive hiring'News It's sexier to say AI caused redundancies than it is to admit the economy is bad or overhiring has happened

-

Lisa Su says AI is changing AMD’s hiring strategy – but not for the reason you might think

Lisa Su says AI is changing AMD’s hiring strategy – but not for the reason you might thinkNews AMD CEO Lisa Su has revealed AI is directly influencing recruitment practices at the chip maker but, unlike some tech firms, it’s led to increased headcount.

-

Accenture acquires Faculty, poaches CEO in bid to drive client AI adoption

Accenture acquires Faculty, poaches CEO in bid to drive client AI adoptionNews The Faculty acquisition will help Accenture streamline AI adoption processes

-

Productivity gains on the menu as CFOs target bullish tech spending in 2026

Productivity gains on the menu as CFOs target bullish tech spending in 2026News Findings from Deloitte’s Q4 CFO Survey show 59% of firms have now changed their tune on the potential performance improvements unlocked by AI.