California has finally adopted its AI safety law – here's what it means

The new legislation covering AI safety and innovation directly counters federal efforts to ban state-level AI regulation


California has officially adopted a law requiring AI companies to disclose how they plan to avoid serious risks stemming from their models and to report any critical safety incidents.

The Transparency in Frontier Artificial Intelligence Act (TFAIA), previously known as Senate Bill 53 (SB 53), is focused on AI safety.

It includes whistleblower protections, requirements to meet international standards, and a mechanism to report safety incidents. It will also establish a consortium that will work on a new computing cluster accessible in the public cloud to develop safe, sustainable, and ethical AI.

California is home to many of the companies developing these technologies, so state-level rules could have an outsized impact on the industry. Anthropic has publicly supported this version of the bill, though it has also called for a federal law.

In adopting the new law, California becomes the first US state to legislate on AI safety, ahead of New York, which has its own plan in the works. The move comes amid a broader failure to legislate on AI at the national level, with no federal AI law yet in place.

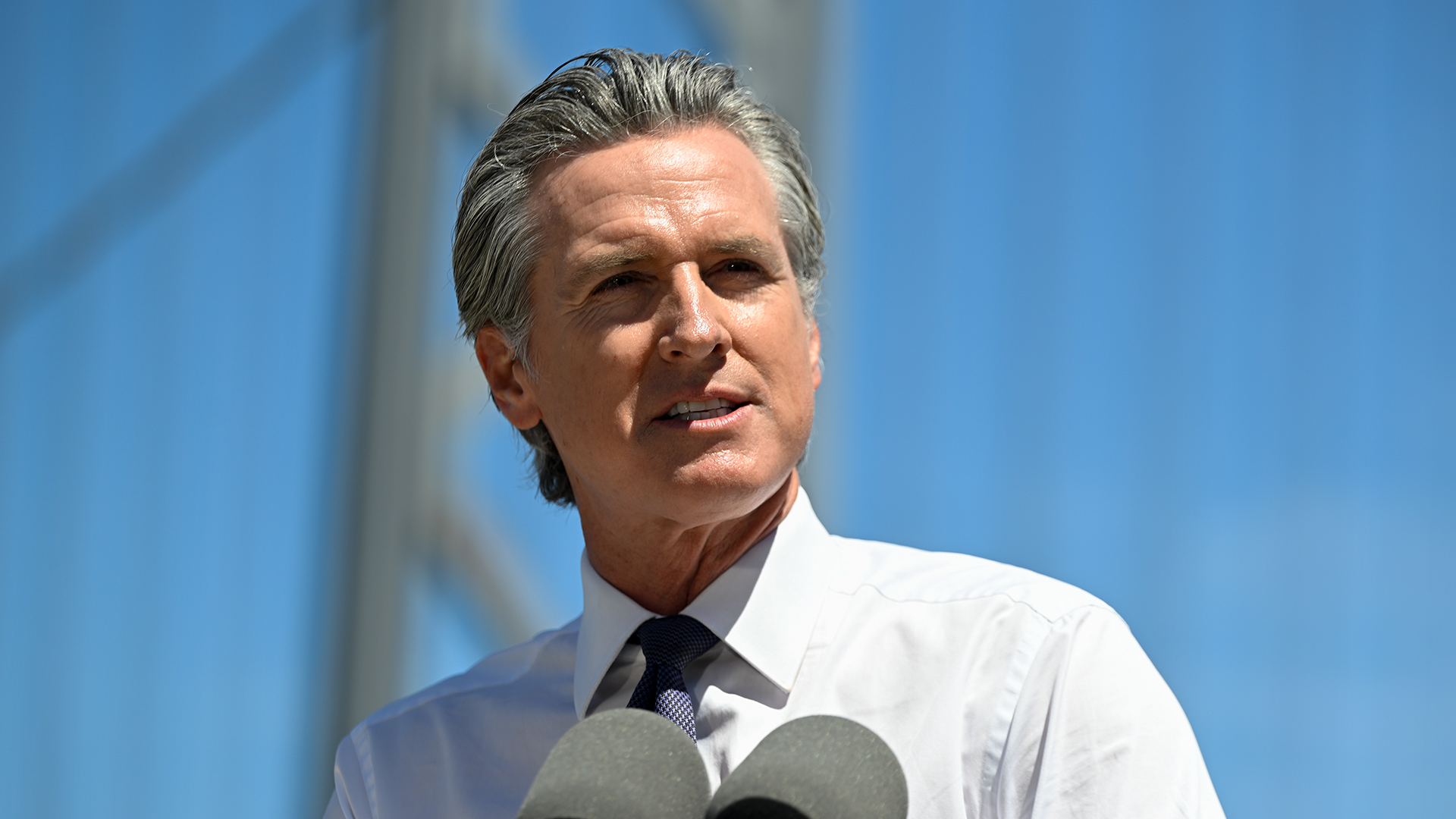

California governor Gavin Newsom signed the bill into law a year after vetoing an earlier, widely opposed AI bill that would have required safety testing for large models and a kill switch in AI systems.

"California has proven that we can establish regulations to protect our communities while also ensuring that the growing AI industry continues to thrive," Governor Newsom said in a statement. "This legislation strikes that balance."


Newsom added: "AI is the new frontier in innovation, and California is not only here for it – but stands strong as a national leader by enacting the first-in-the-nation frontier AI safety legislation that builds public trust as this emerging technology rapidly evolves."

What the law includes

The TFAIA was written by Democratic state Senator Scott Wiener, who also wrote last year's vetoed bill.

To encourage transparency, the law requires large frontier developers to publish a framework describing how they incorporate national and international standards, in effect sharing their safety plans.

The law also sets up a new mechanism for companies and the public to report critical safety incidents to California's Office of Emergency Services. It creates protections for whistleblowers who disclose significant health and safety risks posed by frontier models, and imposes civil penalties for noncompliance.

However, the law only requires incidents to be reported when they involve physical harm. According to the San Francisco Public Press, that is a watered-down version of last year's bill, which required all incidents to be reported. New York's planned law, by contrast, would require companies to report any potential risk of serious harm, even before an incident occurs. Under the TFAIA, California will fine companies that injure or "contribute to the death of" more than 50 people, or cause $1bn in damage.

But the fine has been slashed from $10m to $1m for a company's first violation, with the larger amount now reserved only for subsequent infractions. New York plans to fine up to $30m for repeated offenses, the San Francisco Public Press report added.

The new law also commits California to supporting the development of safe AI. It establishes a consortium called CalCompute within the state's Government Operations Agency, which will develop a framework for creating a public computing cluster to "advance the development and deployment" of AI that is safe, ethical, equitable and sustainable, according to the statement from the governor's office.

To help the law keep pace with innovation, the California Department of Technology can recommend updates each year based on stakeholder feedback, changes to international standards, or technological developments.

"As artificial intelligence continues its long journey of development, more frontier breakthroughs will occur," said a trio of AI leaders who helped lead a report into AI for Newsom, in a statement released alongside the governor’s.

The trio consists of Fei-Fei Li, co-director at the Stanford Institute for Human-Centered Artificial Intelligence; Jennifer Tour Chayes, dean of the College of Computing, Data Science, and Society at UC Berkeley; and Mariano-Florentino Cuéllar, former California Supreme Court justice and former member of the National Academy of Sciences Committee on the Social and Ethical Implications of Computing Research.

"AI policy should continue emphasizing thoughtful scientific review and keeping America at the forefront of technology," the trio added.

California counters Trump administration moves on AI

The move puts Newsom further at odds with President Trump, who broadly favors largely unfettered development of the technology.

Federal lawmakers attempted to insert a provision banning state-level AI legislation for ten years into an unrelated bill, though the Senate removed it by amendment in July. In January, President Trump issued an executive order on "removing barriers to American leadership in AI" that revoked a Biden-era order on developing safe and secure AI. He followed this in July with the US AI Action Plan, which aims to heavily deregulate AI.

A statement released from the governor's office notes: "This legislation is particularly important given the failure of the federal government to enact comprehensive, sensible AI policy. SB 53 fills this gap and presents a model for the nation to follow."

Freelance journalist Nicole Kobie has written for ITPro since 2007, and has bylines in New Scientist, Wired, PC Pro and many more.

Nicole is the author of The Long History of the Future, a book about the history of technology.
