UK tech firms face billion-pound fines for failing to curb harmful content

The government plans to hit social media platforms with GDPR-like penalties worth up to 10% of annual revenue

The government is proposing a set of punitive measures, including hefty GDPR-like fines, designed to force tech firms and social media platforms into limiting the spread of harmful content that circulates online.

Under the proposals, which build on the Online Harms white paper published in April 2019, social media firms and tech giants that fail to comply will face financial penalties of up to £18 million or 10% of their annual global revenue, whichever is higher.

Executives of major firms will also face criminal sanctions if their businesses fail to uphold a duty of care towards their users, which requires them to limit the spread of harmful content, according to Reuters.

The largest platforms, such as Facebook and Twitter, will need to assess what may constitute legal but harmful content, such as disinformation, and prevent such posts from spreading where appropriate.

“Today Britain is setting the global standard for safety online with the most comprehensive approach yet to online regulation,” said Oliver Dowden, the secretary of state for Digital, Culture, Media and Sport (DCMS). “We are entering a new age of accountability for tech to protect children and vulnerable users, to restore trust in this industry, and to enshrine in law safeguards for free speech.”

The measures are due to be outlined by Dowden in the House of Commons today, and are expected to be formalised and drafted into a bill next year. There may not be any new laws until at least 2022, however.

The government has been keen to introduce a mechanism to keep tech giants in check over the harmful material that propagates on their platforms, in light of the widening consensus that self-regulation has failed.

The white paper, published last year, contained even harsher measures, including a provision that would have allowed internet service providers (ISPs) to block some services entirely if the respective companies were guilty of repeated non-compliance.

The EU, too, has been grappling with this problem for years, suggesting various measures at different stages to address harmful content circulating on social media. Some iterations of these plans have even included granting companies a one-hour window in which to remove qualifying content or face sanctions. This is despite the EU recognising last year that these companies have become more effective at detecting and acting against hate speech.

In recent days, the European Commission has been putting the finishing touches to its Digital Services Act, according to Politico. Similar to the UK’s plans, these draft proposals will require large online platforms to limit the capacity for illegal material to spread on their networks.

Regulators and outside groups must also be granted more access to internal data, and auditors will be charged with determining whether these platforms are complying with the proposals.

“In recent years, there has been a recognition from governments, society and the tech firms themselves that there is a need to take coordinated action to tackle harmful content online,” said Ben Packer, partner at global law firm Linklaters. “Today’s proposals from the UK and the EU are the most ambitious measures announced anywhere.”

“Though the regimes differ in many ways, both impose new and potentially onerous duties on online platforms to protect their users from harmful content. They also both propose heavy sanctions for those who fail to comply, including fines of up to 10% of a company’s global turnover.”

The UK’s new measures come just days after the government revealed plans to establish a special unit within the Competition and Markets Authority (CMA) to regulate the industry and market practices of tech companies. The Digital Markets Unit will work to tackle the rising concentration of market power among companies such as Facebook and Google by enforcing an industry code of conduct to govern their behaviour.

“Given our leadership in the application of ethics and integrity in IT, it should be no surprise that the UK is moving decisively to tackle online harms, one of the biggest and most complex digital challenges of our time,” said Stephen Kelly, chair of industry body Tech Nation.

“Equally, it offers the UK the opportunity to lead a new category of tech, such as ‘safetech’, building on our heritage of regtech and compliance, which already assure global markets and economies.”

Keumars Afifi-Sabet is a writer and editor who specialises in public sector, cyber security, and cloud computing. He first joined ITPro as a staff writer in April 2018 and eventually became its Features Editor. Although a regular contributor to other tech sites in the past, these days you will find Keumars on LiveScience, where he runs its Technology section.

-

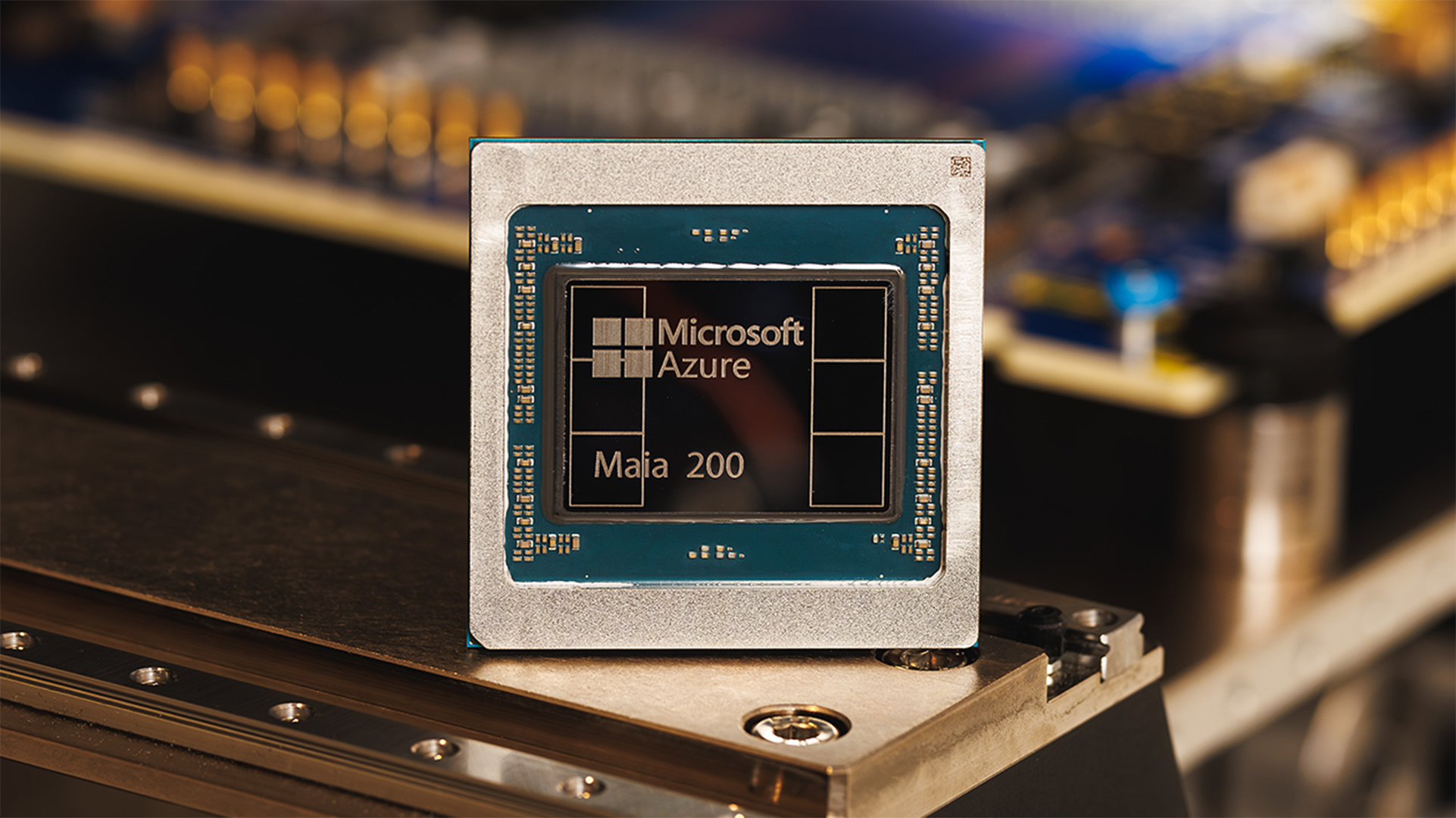

Microsoft unveils Maia 200 accelerator, claiming better performance per dollar than Amazon and Google

Microsoft unveils Maia 200 accelerator, claiming better performance per dollar than Amazon and GoogleNews The launch of Microsoft’s second-generation silicon solidifies its mission to scale AI workloads and directly control more of its infrastructure

-

Infosys expands Swiss footprint with new Zurich office

Infosys expands Swiss footprint with new Zurich officeNews The firm has relocated its Swiss headquarters to support partners delivering AI-led digital transformation