AI is creating more software flaws – and they're getting worse

A CodeRabbit study compared pull requests with and without AI input, finding AI is fast but highly error-prone

AI is helping developers write code faster, but it's also leading to more problems, according to the latest study to highlight adoption concerns among software developers.

In a study from CodeRabbit, researchers found AI makes 1.7 times as many mistakes as human programmers.

To come to that conclusion, CodeRabbit looked at 470 open source GitHub pull requests, of which 320 had AI input and 150 were human only.

"The results? Clear, measurable, and consistent with what many developers have been feeling intuitively: AI accelerates output, but it also amplifies certain categories of mistakes," wrote CodeRabbit's director of AI David Loker in a blog post.

He admitted the study wasn't perfect, in part because it was difficult to double-check authorship, but said the findings matched previous research. Loker pointed to a report by Cortex that found pull requests per author increased by 20%, but incidents per pull request also went up, by about 23%.

That also aligns with research suggesting AI-generated code is now the cause of one in five breaches, and with another report noting coders' concerns around AI introducing errors to their work.

More problems and more critical flaws

The CodeRabbit study found an average of 10.83 issues per AI pull request versus 6.45 for human-only ones, adding that AI pull requests were far more likely to have critical or major issues.
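As a quick sanity check, the headline "1.7 times" figure appears to follow directly from these two averages. A minimal sketch of the arithmetic, using only the numbers reported above (the variable names are illustrative):

```python
# Average issues per pull request, as reported in the CodeRabbit study.
ai_issues_per_pr = 10.83
human_issues_per_pr = 6.45

# Ratio of the AI issue rate to the human-only issue rate.
ratio = ai_issues_per_pr / human_issues_per_pr

print(f"AI pull requests had {ratio:.2f}x as many issues per PR")
```

The ratio comes out just under 1.7, which is consistent with the rounded figure quoted in the study.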


"Even more striking: high-issue outliers were much more common in AI PRs, creating heavy review workloads," Loker said.

Logic and correctness was the worst area for AI code, followed by code quality and maintainability, and then security. Because of that, CodeRabbit advised reviewers to watch out for those types of errors in AI code.

"These include business logic mistakes, incorrect dependencies, flawed control flow, and misconfigurations," Loker wrote. "Logic errors are among the most expensive to fix and most likely to cause downstream incidents."

AI code was also spotted omitting null checks, guardrails, and other error checking, which Loker noted are issues that can lead to outages in the real world.
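To illustrate the kind of omission described here, the following is a minimal sketch, not taken from the study. The `USERS` store and all function names are hypothetical; the point is only to contrast code that "looks right" with a version that adds a null check and a basic guardrail.

```python
from typing import Optional

# Hypothetical in-memory store; real code would query a database or service.
USERS = {1: {"name": "Ada", "email": "ada@example.com"}}


def find_user(user_id: int) -> Optional[dict]:
    """Return the user record, or None if no such user exists."""
    return USERS.get(user_id)


def email_domain_unguarded(user_id: int) -> str:
    # Looks correct, but raises TypeError when find_user() returns None.
    return find_user(user_id)["email"].split("@")[1]


def email_domain_guarded(user_id: int) -> Optional[str]:
    user = find_user(user_id)
    if user is None:  # null check: the user may not exist
        return None
    email = user.get("email")
    if not email or "@" not in email:  # guardrail: missing or malformed data
        return None
    return email.split("@", 1)[1]
```

Both versions behave identically for a valid user, which is why the unguarded one can pass a casual review; they only diverge when the lookup fails.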

When it came to security, the most common mistakes by AI were improper password handling and insecure object references, Loker noted, with security issues 2.74 times more common in AI code than that written by humans.
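For readers unfamiliar with these two categories, here is a minimal sketch of what safer handling might look like, using only the Python standard library. It is an illustration under stated assumptions rather than anything from the study: the function names, the `owner_id` field, and the iteration count are all hypothetical choices.

```python
import hashlib
import hmac
import os
from typing import Tuple

ITERATIONS = 200_000  # assumed work factor; tune for your own hardware and policy


def hash_password(password: str) -> Tuple[bytes, bytes]:
    """Return (salt, digest) instead of storing the password itself."""
    salt = os.urandom(16)  # fresh random salt for every password
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, digest


def verify_password(password: str, salt: bytes, expected: bytes) -> bool:
    candidate = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    # Constant-time comparison avoids leaking information through timing.
    return hmac.compare_digest(candidate, expected)


def can_access(requesting_user_id: int, document: dict) -> bool:
    # Guard against insecure object references: knowing a document's ID is
    # not enough; the requester must also own it (hypothetical owner_id field).
    return document.get("owner_id") == requesting_user_id
```

The first two functions address password handling by salting, key stretching, and comparing in constant time; the last shows the ownership check that is missing when code looks up an object by ID and returns it to whoever asked.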

Another major difference between AI code and human-written code was readability. "AI-produced code often looks consistent but violates local patterns around naming, clarity, and structure," Loker added.

Beyond those issues, Loker noted flaws in concurrency and formatting, as well as naming inconsistencies, all of which could not only cause issues with software or apps, but also make it harder for human reviewers to spot problems.

What's happening, and how to fix it

Some of the faults are down to a lack of context, Loker suggested, and in other cases AI is sticking to "generic defaults" rather than company rules, such as with naming patterns or architectural norms.

Security problems may be down to models recreating "legacy patterns or outdated practices" learned from older code in their training data.

"AI generates surface-level correctness: It produces code that looks right but may skip control-flow protections or misuse dependency ordering," Loker added.

None of this means AI shouldn't be used in coding, he stressed. Instead, companies should ensure AI has the context of business rules, policies, and style guides, and is given guidelines for basic security.

Such efforts would wipe out many of the faults spotted, he said. Similarly, code reviewers should be given a checklist that takes this research into account, so they know what types of errors to watch out for.
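One way a team might put such a checklist into practice is to keep it as data and render it into a pull request template. The sketch below is an assumption about how that could look, not CodeRabbit's own checklist: the categories come from the findings above, while the wording of each item and the function name are illustrative.

```python
# Categories follow the problem areas named in the study; the individual
# prompts are illustrative wording, not CodeRabbit's published checklist.
AI_PR_CHECKLIST = {
    "Logic and correctness": [
        "Business rules match the ticket or spec",
        "Control flow handles every branch, including failures",
        "Dependencies are correct and used in the right order",
    ],
    "Error handling": [
        "Null checks and guardrails are present on external data",
    ],
    "Security": [
        "Passwords are never stored or logged in plain text",
        "Object access is checked against the requesting user",
    ],
    "Readability": [
        "Naming and structure follow local conventions",
    ],
}


def render_checklist(checklist: dict) -> str:
    """Render the checklist as plain text with one tick box per item."""
    lines = []
    for category, items in checklist.items():
        lines.append(category.upper())
        lines.extend(f"  [ ] {item}" for item in items)
    return "\n".join(lines)


print(render_checklist(AI_PR_CHECKLIST))
```

Keeping the checklist in one place makes it easy to revise as a team learns which categories of AI-introduced error it actually sees most often.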

"The future of AI-assisted development isn’t about replacing developers," he added. "It’s about building systems, workflows, and safety layers that amplify what AI does well while compensating for what it tends to miss."


Freelance journalist Nicole Kobie first started writing for ITPro in 2007, with bylines in New Scientist, Wired, PC Pro and many more.

Nicole is the author of a book about the history of technology, The Long History of the Future.

-

The modern workplace: Standardizing collaboration for the enterprise IT leader

The modern workplace: Standardizing collaboration for the enterprise IT leaderHow Barco ClickShare Hub is redefining the meeting room

-

Interim CISA chief uploaded sensitive documents to a public version of ChatGPT

Interim CISA chief uploaded sensitive documents to a public version of ChatGPTNews The incident at CISA raises yet more concerns about the rise of ‘shadow AI’ and data protection risks

-

A torrent of AI slop submissions forced an open source project to scrap its bug bounty program – maintainer claims they’re removing the “incentive for people to submit crap”

A torrent of AI slop submissions forced an open source project to scrap its bug bounty program – maintainer claims they’re removing the “incentive for people to submit crap”News Curl isn’t the only open source project inundated with AI slop submissions

-

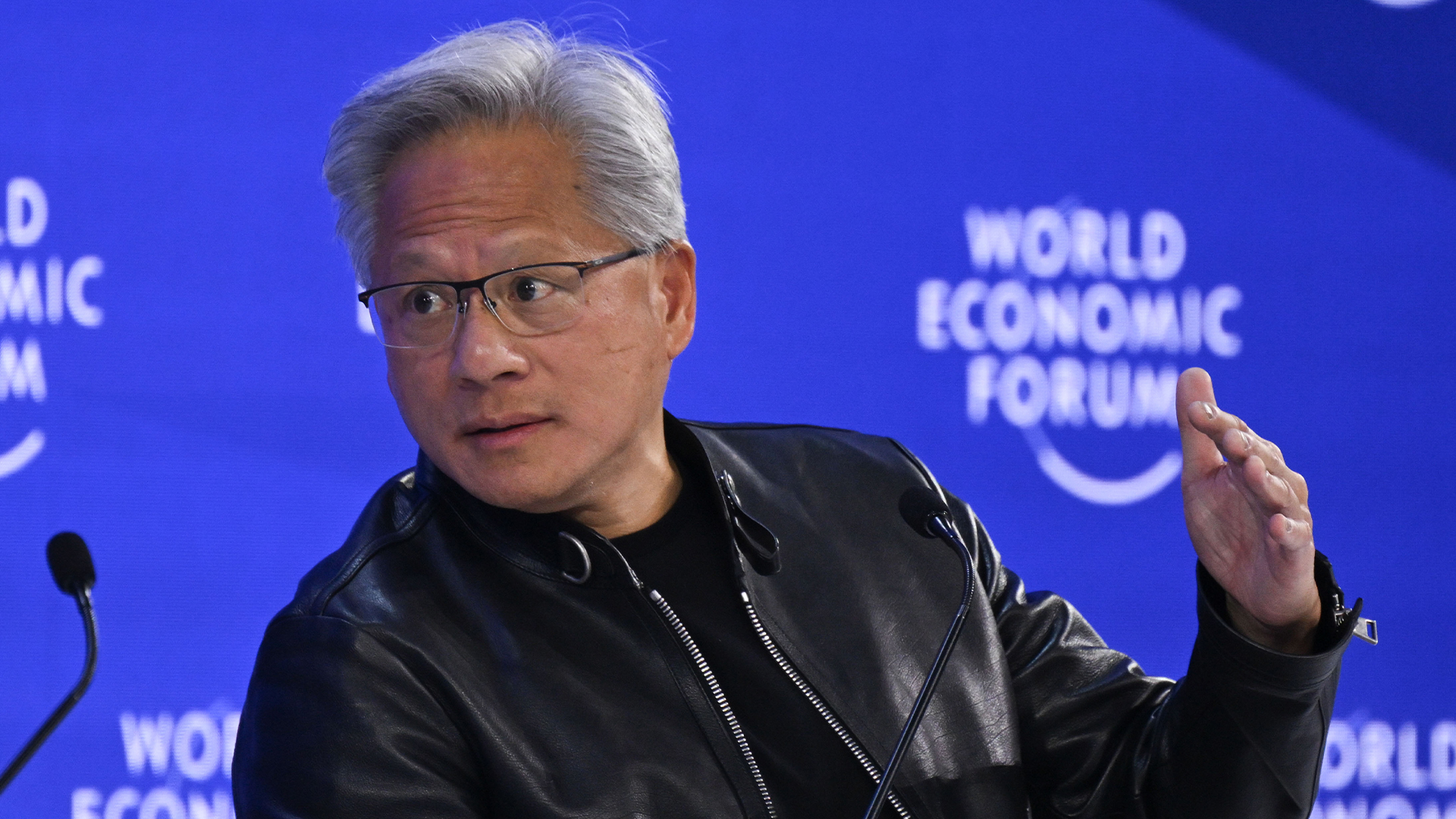

‘This is a platform shift’: Jensen Huang says the traditional computing stack will never look the same because of AI – ChatGPT and Claude will forge a new generation of applications

‘This is a platform shift’: Jensen Huang says the traditional computing stack will never look the same because of AI – ChatGPT and Claude will forge a new generation of applicationsNews The Nvidia chief says new applications will be built “on top of ChatGPT” as the technology redefines software

-

So much for ‘trust but verify’: Nearly half of software developers don’t check AI-generated code – and 38% say it's because it takes longer than reviewing code produced by colleagues

So much for ‘trust but verify’: Nearly half of software developers don’t check AI-generated code – and 38% say it's because it takes longer than reviewing code produced by colleaguesNews A concerning number of developers are failing to check AI-generated code, exposing enterprises to huge security threats

-

Microsoft is shaking up GitHub in preparation for a battle with AI coding rivals

Microsoft is shaking up GitHub in preparation for a battle with AI coding rivalsNews The tech giant is bracing itself for a looming battle in the AI coding space

-

AI could truly transform software development in 2026 – but developer teams still face big challenges with adoption, security, and productivity

AI could truly transform software development in 2026 – but developer teams still face big challenges with adoption, security, and productivityAnalysis AI adoption is expected to continue transforming software development processes, but there are big challenges ahead

-

AI doesn’t mean your developers are obsolete — if anything you’re probably going to need bigger teams

AI doesn’t mean your developers are obsolete — if anything you’re probably going to need bigger teamsAnalysis Software developers may be forgiven for worrying about their jobs in 2025, but the end result of AI adoption will probably be larger teams, not an onslaught of job cuts.

-

Anthropic says MCP will stay 'open, neutral, and community-driven' after donating project to Linux Foundation

Anthropic says MCP will stay 'open, neutral, and community-driven' after donating project to Linux FoundationNews The AAIF aims to standardize agentic AI development and create an open ecosystem for developers

-

Developer accidentally spends company’s entire Cursor budget in one sitting — and discovers worrying flaw that let them extend it by over $1 million

Developer accidentally spends company’s entire Cursor budget in one sitting — and discovers worrying flaw that let them extend it by over $1 millionNews A developer accidentally spent their company's entire Cursor budget in a matter of hours, and discovered a serious flaw that could allow attackers to max out spend limits.