Developers say AI can code better than most humans – but there's a catch

A new survey suggests AI coding tools are catching up with human capabilities

AI is better at coding than most humans, according to software developers, but there are several glaring issues holding the technology back.

A survey by Clutch revealed that more than half (53%) of senior software developers believe large language models (LLMs) can already code better than most humans.

The study comes as AI tools such as GitHub Copilot, Cursor, and Windsurf continue to push into software development. OpenAI tried to snap up Windsurf for $3bn in May before losing out to Cognition.

Given that, it's no surprise that three-quarters of those asked by Clutch said they expect AI to "significantly reshape" how software is developed over the next five years.

"That shift is already underway," the company said in a blog post detailing its findings.

"From the rise of prompt engineering to debates about AI-generated code quality, software leaders are grappling with rapidly evolving development models and updating their hiring, upskilling, and automation strategies to keep up."

According to the survey, 78% of those asked said they already use AI several times a week or more. That is broadly in line with a recent Stack Overflow survey, which suggested 84% of developers were using, or planned to use, AI in their daily workflows.

That use of AI doesn't necessarily bother developers, with 42% reporting positive feelings about AI and another 23% saying they were "excited" by the technology.

Concerns about AI

But it's not all good news, the survey noted. One in ten reported concerns about how AI is used in software development, and 8% said they were skeptical about the technology.

The top concern was data privacy (24%), followed by job displacement (14%), the risk of errors in work (14%), and the loss of creativity (13%).

A further 7% raised the issue of a lack of entry-level roles to help junior developers join the industry. The impact on developers entering the workforce has become a key talking point in recent months, with industry stakeholders suggesting the technology could seriously hamper opportunities for graduates.

"When asked how AI might affect entry into the profession, opinions were mixed," Clutch noted in the blog post.

"45% of respondents said AI might actually lower the barrier for junior developers by giving them better tools and faster ways to learn. But 37% said it would do the opposite, making it harder for newcomers to compete or even get noticed. AI's ability to automate junior-level work is reshaping hiring criteria."

Beyond that, 79% believe that AI skills will soon become a must-have to get hired.

How developers are using AI

How AI is being used varies, according to Clutch. The survey found that 48% of developers use AI primarily for code generation, with 36% using it in testing phases and a further 36% during code review.

A minority of developers also use AI earlier in the development cycle for requirement gathering and system design, or even post-launch for debugging.

"These stages demand speed, consistency, and pattern recognition, which makes them a natural fit for automation," the post noted.

But Clutch argues it's not just about automation - the technology is enabling development teams to overhaul traditional processes and find new ways of working.

"It's not just about doing the same tasks faster," Clutch noted. "It's about doing them differently, with new methods for debugging, testing, and prototyping that weren't practical before."

One area of concern highlighted in the survey centered around AI-generated code. A host of major tech companies, including Microsoft and Google, have touted their own gains on this front in recent months.

More than half (59%) of respondents said they used AI-generated code without fully understanding it, for example. This, the study noted, could create new security risks for organizations.

In a study last year, researchers warned that generative AI could replicate insecure code. A more recent study from Cloudsmith also warned that development teams are walking into a trap with AI-generated code, with many placing too much faith in the technology.

"That gap between speed and understanding is something teams will need to work on," Clutch noted.

Freelance journalist Nicole Kobie first started writing for ITPro in 2007, with bylines in New Scientist, Wired, PC Pro and many more.

Nicole is the author of a book about the history of technology, The Long History of the Future.

-

Agile methodology might be turning 25, but it’s withstood the test of time

Agile methodology might be turning 25, but it’s withstood the test of timeNews While Agile development practices are 25 years old, the longevity of the approach is testament to its impact – and it's once again in the spotlight in the age of generative AI.

-

Will a generative engine optimization manager be your next big hire?

Will a generative engine optimization manager be your next big hire?In-depth Generative AI is transforming online search and companies are recruiting to improve how they appear in chatbot answers

-

Anthropic Labs chief Mike Krieger claims Claude is essentially writing itself – and it validates a bold prediction by CEO Dario Amodei

Anthropic Labs chief Mike Krieger claims Claude is essentially writing itself – and it validates a bold prediction by CEO Dario AmodeiNews Internal teams at Anthropic are supercharging production and shoring up code security with Claude, claims executive

-

AI-generated code is fast becoming the biggest enterprise security risk as teams struggle with the ‘illusion of correctness’

AI-generated code is fast becoming the biggest enterprise security risk as teams struggle with the ‘illusion of correctness’News Security teams are scrambling to catch AI-generated flaws that appear correct before disaster strikes

-

‘Not a shortcut to competence’: Anthropic researchers say AI tools are improving developer productivity – but the technology could ‘inhibit skills formation’

‘Not a shortcut to competence’: Anthropic researchers say AI tools are improving developer productivity – but the technology could ‘inhibit skills formation’News A research paper from Anthropic suggests we need to be careful deploying AI to avoid losing critical skills

-

A torrent of AI slop submissions forced an open source project to scrap its bug bounty program – maintainer claims they’re removing the “incentive for people to submit crap”

A torrent of AI slop submissions forced an open source project to scrap its bug bounty program – maintainer claims they’re removing the “incentive for people to submit crap”News Curl isn’t the only open source project inundated with AI slop submissions

-

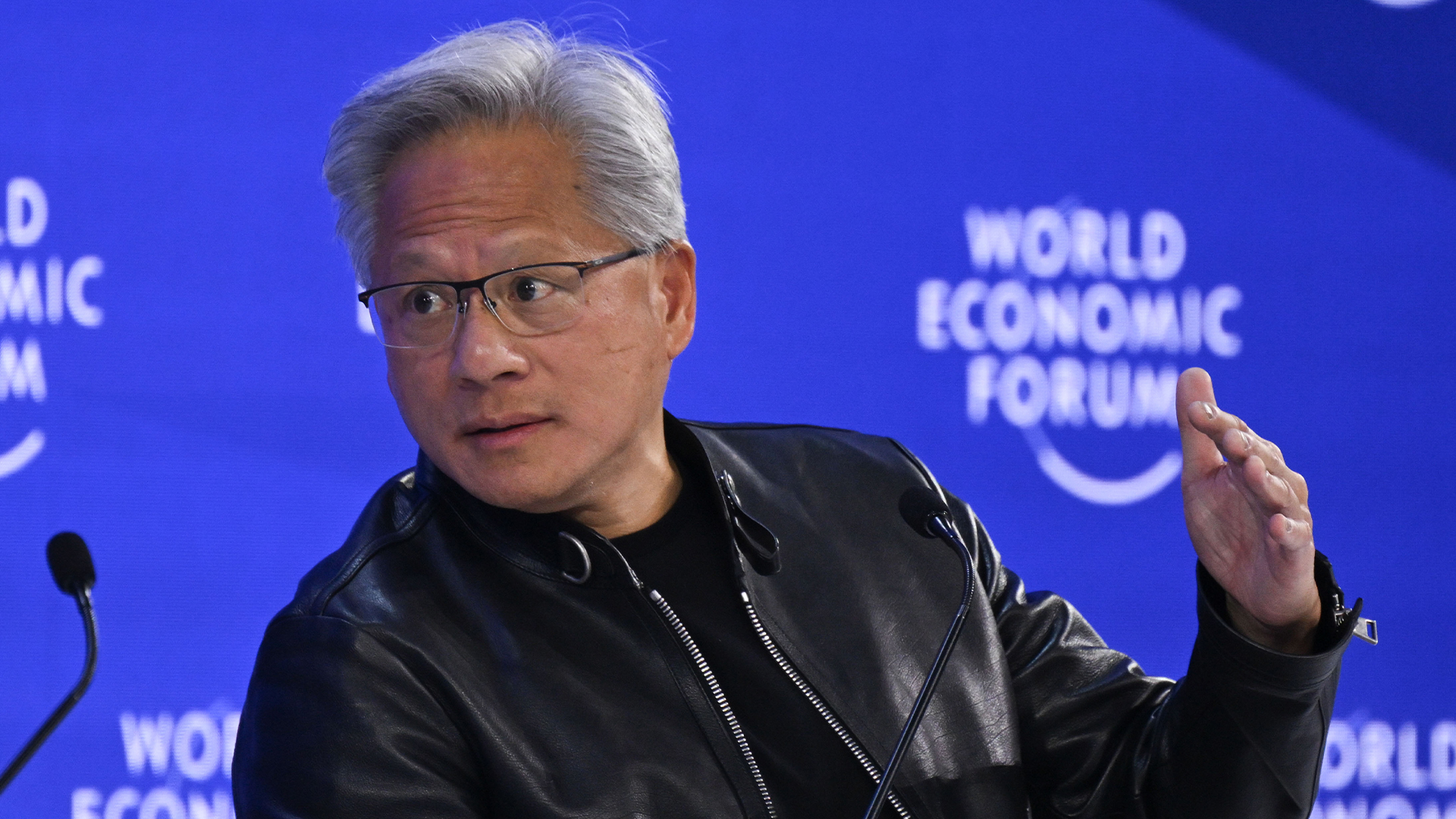

‘This is a platform shift’: Jensen Huang says the traditional computing stack will never look the same because of AI – ChatGPT and Claude will forge a new generation of applications

‘This is a platform shift’: Jensen Huang says the traditional computing stack will never look the same because of AI – ChatGPT and Claude will forge a new generation of applicationsNews The Nvidia chief says new applications will be built “on top of ChatGPT” as the technology redefines software

-

So much for ‘trust but verify’: Nearly half of software developers don’t check AI-generated code – and 38% say it's because it takes longer than reviewing code produced by colleagues

So much for ‘trust but verify’: Nearly half of software developers don’t check AI-generated code – and 38% say it's because it takes longer than reviewing code produced by colleaguesNews A concerning number of developers are failing to check AI-generated code, exposing enterprises to huge security threats

-

Microsoft is shaking up GitHub in preparation for a battle with AI coding rivals

Microsoft is shaking up GitHub in preparation for a battle with AI coding rivalsNews The tech giant is bracing itself for a looming battle in the AI coding space

-

AI could truly transform software development in 2026 – but developer teams still face big challenges with adoption, security, and productivity

AI could truly transform software development in 2026 – but developer teams still face big challenges with adoption, security, and productivityAnalysis AI adoption is expected to continue transforming software development processes, but there are big challenges ahead