The risks of open source AI models

Models such as DeepSeek R1 come with both benefits and clear security drawbacks

Open source AI models offer flexibility, accessibility and customization that allow companies to meet very specific needs. But despite the benefits, they can also pose a major risk.

Take the example of the DeepSeek R1 frontier reasoning model, which is highly functional and can compete with state-of-the-art models from OpenAI and Anthropic.

The open source AI model has been found to have “critical safety flaws”, Cisco warned in a report published shortly after the model began dominating headlines. In the firm’s testing, DeepSeek failed to block a single harmful prompt when tested against 50 random prompts taken from the HarmBench dataset, a standardized evaluation framework used for automated red teaming of large language models (LLMs).
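To make the headline figure concrete, here is a minimal sketch of how an attack success rate of this kind can be scored. It is not Cisco's tooling or data: the function name and the list of per-prompt results are hypothetical, and it assumes each prompt has already been judged as blocked or not blocked.

```python
def attack_success_rate(blocked):
    """Return the share of harmful prompts the model failed to block.

    `blocked` holds one boolean per prompt: True if the model refused,
    False if it produced the harmful output. (Hypothetical input format.)
    """
    if not blocked:
        raise ValueError("need at least one prompt result")
    failures = sum(1 for was_blocked in blocked if not was_blocked)
    return failures / len(blocked)


# Hypothetical results mirroring the reported outcome: 50 prompts, none blocked.
results = [False] * 50
print(f"Attack success rate: {attack_success_rate(results):.0%}")  # prints "Attack success rate: 100%"
```

A model that blocked half of the prompts would score 50% on the same measure.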

Meanwhile, another study found that DeepSeek may contain inherent flaws that allow it to be hijacked with jailbreaks and prompt injection attacks – which could make it incompatible with the EU AI Act.

So, what risks are posed by open source AI models and how can these be mitigated?

Limited oversight

Open source AI models such as DeepSeek R1 and Meta’s Llama help firms to innovate, but they also come with “significant risks” to safety and compliance, says Dr Farshad Badie, dean of faculty of computer science and informatics at the Berlin School of Business and Innovation. “These models may spread misinformation, reinforce biases and be misused for cyber threats,” he warns.

Part of the issue is that open source software can be modified by anyone, and it can be difficult to establish which dependencies are present within open source code. While this openness is a benefit, it’s also a risk because anyone, including adversaries, can deploy changes without review or approval, says James Bent, vice president of engineering at Virtuoso QA.
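One common precaution against unreviewed changes is to check a downloaded model file against a digest recorded when the file was last reviewed. The sketch below is an illustration only, using Python's standard library. The function names are hypothetical, and it assumes the organization keeps its own list of trusted SHA-256 digests.

```python
import hashlib
import hmac
from pathlib import Path


def sha256_of_file(path, chunk_size=1 << 20):
    """Hash a file in chunks so large model weights need not fit in memory."""
    digest = hashlib.sha256()
    with Path(path).open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_pinned_digest(path, expected_hex):
    """Return True only if the file's SHA-256 equals the pinned digest."""
    return hmac.compare_digest(sha256_of_file(path), expected_hex.lower())
```

A matching digest shows only that the file is unchanged since it was pinned. It says nothing about whether the original model was safe.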

Open source AI models can be used for numerous nefarious purposes. Among the risks, criminals could change the models to bypass controls, generate harmful content or embed backdoors for exploitation, adds James McQuiggan, security awareness advocate at KnowBe4.

One major concern is data poisoning, he says. “If training data is compromised, the model can provide biases or disinformation, making them unreliable – or possibly dangerous.”

Open source AI models can be fine-tuned to generate malicious code, automated phishing campaigns, or even to create zero-day exploits, says McQuiggan. “Without restrictions, cyber criminals can manipulate these tools to launch attacks or work to gain access to endpoints and systems.”

There’s NCSC evidence that LLMs can be used to write malware, though the exact degree to which this poses a threat has been publicly questioned by researchers. That said, malicious tools such as GhostGPT are believed to be based on open source AI. Bent points to these tools as a potential route for business email compromise.

Adding to the risk, open source AI models are readily available and can be distributed and repurposed for unintended use. “They can spread quickly to machines, making it easy for cyber criminals to leverage the sabotaged code wherever it's been downloaded and inserted into other applications,” McQuiggan says.

Yet some open source AI models pose more risk than others. Sam Rubin, SVP of consulting and threat intelligence at Unit 42, Palo Alto Networks, describes how the firm’s research found that DeepSeek is more susceptible to jailbreaking than its counterparts.

“While we've jailbroken numerous models, DeepSeek proved significantly easier to bypass. We successfully bypassed its safeguards at a significantly higher rate, largely due to the lack of essential guardrails that typically restrict the generation of malicious content.”

Indeed, the security firm’s researchers were able to bypass its “weak safeguards” to generate harmful content with “little to no specialized knowledge or expertise”, he warns.

Part of the problem is that DeepSeek lacks the guardrails found in more established models such as those created by OpenAI. The Chinese model is “a big concern” due to its “limited maturity and likely rushed to market release”, says Rubin. “While other models have undergone extensive red teaming exercises resulting in published research that provides clear insights into their security methodologies and frameworks, DeepSeek has not taken the time to do the due diligence to ensure proper guardrails were in place before going to market.”

The fact that LLMs can be susceptible to jailbreaking shows it’s not always possible to trust that they will work as intended. “They are open to being manipulated,” says Rubin. “It’s important that companies consider these vulnerabilities when building open source LLMs into business processes. We have to assume that LLM guardrails can be broken and safeguards need to be built in at the organizational level.”
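One way to read "safeguards at the organizational level" is a check that runs outside the model and does not rely on the model's own guardrails. The sketch below is a simplified illustration, not a method described by Rubin or Unit 42. The `generate` callable, the function name and the two patterns are hypothetical, and a real deployment would more likely use a dedicated content classifier than a short pattern list.

```python
import re

# Hypothetical deny-list; stands in for a proper content classifier.
BLOCKED_PATTERNS = [
    re.compile(r"\bkeylogger\b", re.IGNORECASE),
    re.compile(r"\breverse shell\b", re.IGNORECASE),
]


def guarded_generate(generate, prompt, patterns=BLOCKED_PATTERNS):
    """Call the model, then screen its output independently of its own guardrails.

    `generate` is any callable that takes a prompt string and returns text.
    """
    output = generate(prompt)
    for pattern in patterns:
        if pattern.search(output):
            # Withhold the text and record which rule fired, for audit logs.
            return {"allowed": False, "text": "", "reason": pattern.pattern}
    return {"allowed": True, "text": output, "reason": None}
```

Because the check wraps any callable, the same screening applies whichever model sits behind it, including one whose built-in safeguards have been bypassed.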

Benefits to security teams

Open source AI models can also offer benefits to security teams, such as automating threat intelligence and improving both malware detection and the work of security operations centers (SOCs).

The benefits of widespread adoption of open source AI security models could outweigh the security concerns some businesses have. Even so, leaders must question whether those benefits are worth the risk of using the models in their own business.

In some cases, experts say yes, as long as you have the correct measures in place to protect yourself. “Organizations need to consider these risks as part of their risk register or risk management programs and have proper mitigations in place to protect the organization,” McQuiggan advises.

There are ways to manage open source AI models safely. Organizations should have AI governance policies and audit processes to implement and monitor the use of open source models, says McQuiggan.

Conducting training data audits is “vital” to ensure biased or poisoned datasets do not compromise models, McQuiggan adds. “Sandboxing AI models can ensure the proper function of the code and outputs and ensure no code leverages any command control or other cybersecurity-style attacks.”

However, others think the threat posed by open source AI models is too big, at least for now. In their current state, some open source AI models are unlikely to provide enough value to outweigh the “immense security risks”, says Herrin. “We need to be wary of what’s happening and understand the geopolitical aspects at play in the global AI race where security and risk management will likely take a back seat.”

Stronger oversight, ethical safeguards and compliance-driven restrictions are needed to help mitigate the risks, says Dr Badie. “A balanced, human-centered approach – ensuring transparency, responsibility, and control – is crucial to making sure these technologies serve society rather than undermine it.”

Kate O'Flaherty is a freelance journalist with well over a decade's experience covering cyber security and privacy for publications including Wired, Forbes, the Guardian, the Observer, Infosecurity Magazine and the Times. Within cyber security and privacy, her specialist areas include critical national infrastructure security, cyber warfare, application security and regulation in the UK and the US amid increasing data collection by big tech firms such as Facebook and Google. You can follow Kate on Twitter.

-

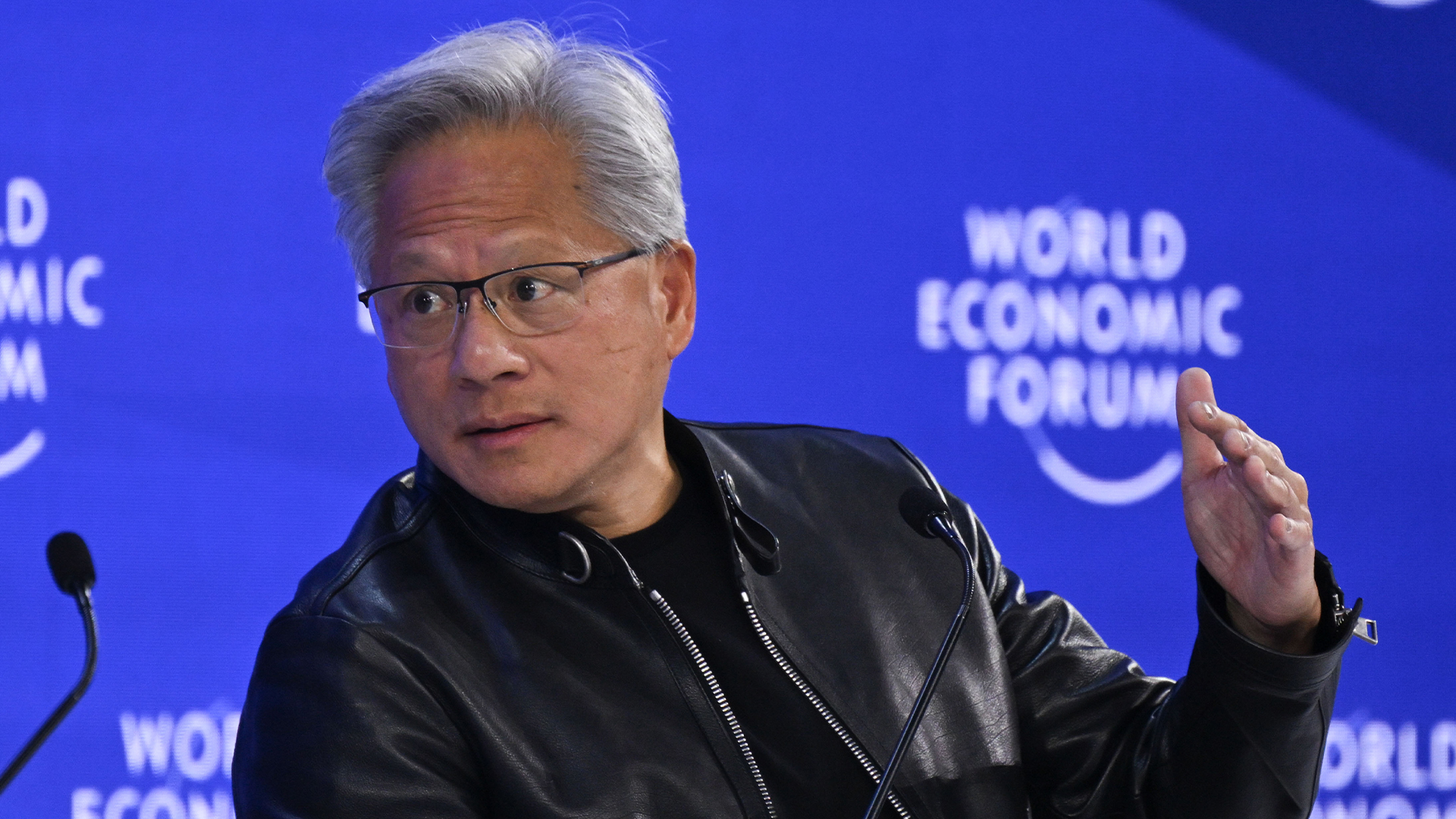

Jensen Huang says the traditional computing stack will never look the same because of AI

Jensen Huang says the traditional computing stack will never look the same because of AINews The Nvidia chief says new applications will be built “on top of ChatGPT” as the technology redefines software

-

TP-Link promotes Kieran Vineer to distribution channel director for UK&I

TP-Link promotes Kieran Vineer to distribution channel director for UK&INews The company veteran will now oversee distribution activity for TP-Link’s networking and surveillance channels across the region