What is model context protocol (MCP)?

MCP underpins many agentic AI systems – but how does it work and is it easy to use?

Large language models (LLMs), the engines of many of today’s cutting-edge AI applications, generate responses to prompts from what they learned in training, whether the task at hand is solving a logic problem or writing complex code. On their own, LLMs cannot access external databases, content repositories, or third-party applications.

Linking LLMs to external data used to be a manually intensive, case-by-case task. Model context protocol (MCP) is a standard, open source AI protocol that provides a secure environment for LLMs to connect with external databases, tools, software, and services in real-time. It functions as a kind of universal language that enables LLMs to move beyond their training data.

Anthropic, the American AI developer responsible for Claude, released MCP as an open source standard on November 25, 2024. The firm published the documentation and announced MCP in a blog post, drawing an analogy between the MCP protocol and USB Type-C, which helps any device connect to a computer.

Fintech company Block and app development platform Apollo GraphQL were listed as early adopters of MCP. Anthropic has since partnered with developer tool companies like Zed, Replit, Sourcegraph, and Codeium to incorporate MCP in their products for AI-assisted development.

Since its release, MCP has received support from across the AI ecosystem. Major AI developers such as OpenAI and Google DeepMind adopted MCP within six months of its release. More recently, Microsoft has added MCP support through Copilot Plus PC and Windows AI.

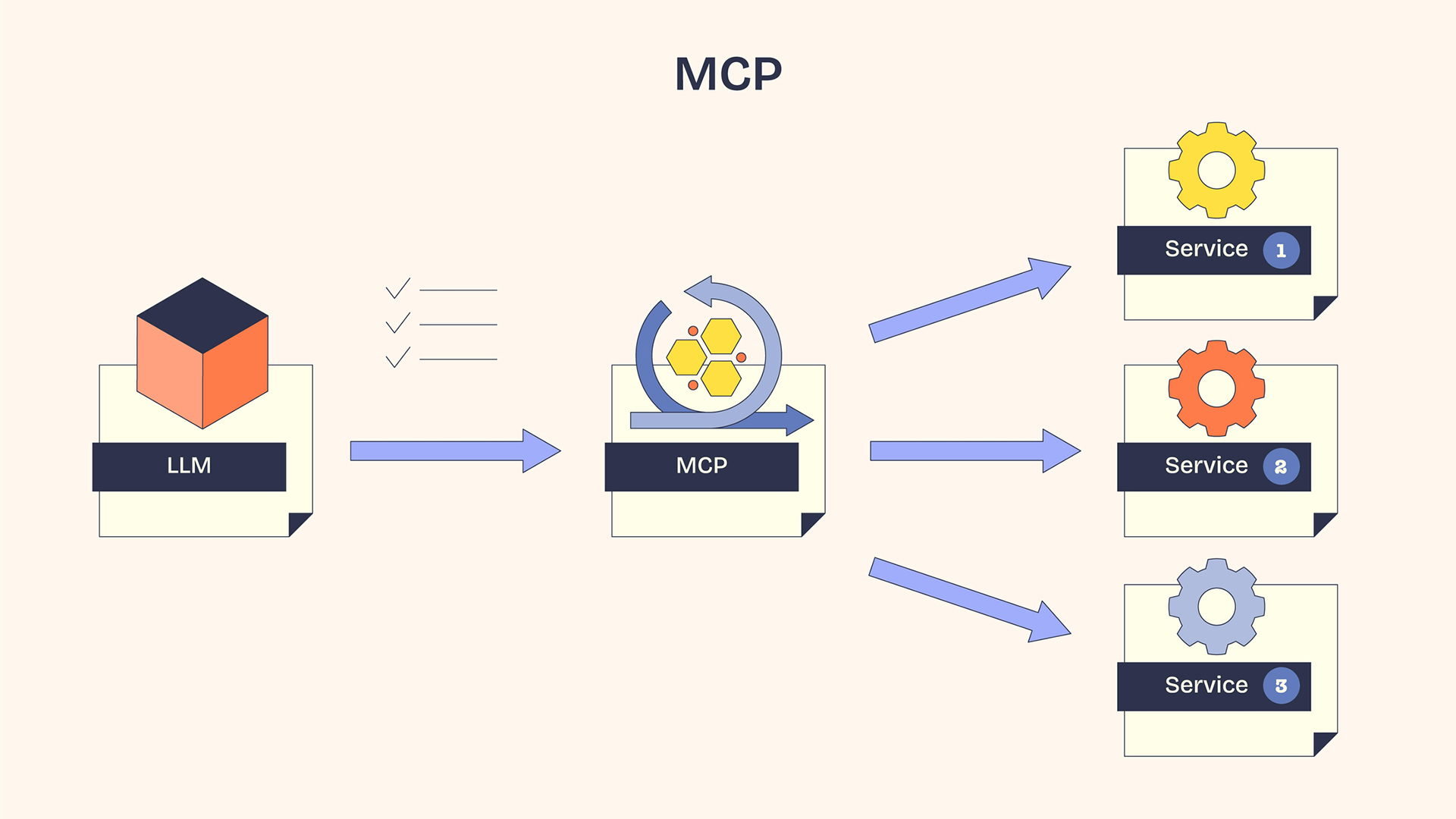

MCP architecture

MCP is an AI standard built on a client-server architecture. Clients and servers exchange messages over a transport layer, using either standard input/output (stdio) for local servers or HTTP with server-sent events (SSE) for remote ones.
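
To make the transport layer concrete, here is a minimal sketch of how a message might be framed for the stdio transport. It assumes MCP messages are JSON-RPC 2.0 objects written one per line; the helper names `encode_message` and `decode_messages` are hypothetical and not part of any official SDK.

```python
import json


def encode_message(message: dict) -> bytes:
    """Serialize one JSON-RPC message as a single newline-terminated line."""
    # json.dumps escapes newlines inside strings, so the payload never
    # contains a raw line break that would split the message in two.
    return (json.dumps(message, separators=(",", ":")) + "\n").encode("utf-8")


def decode_messages(stream: bytes) -> list:
    """Split a byte stream on newlines and parse each non-empty line."""
    lines = stream.decode("utf-8").split("\n")
    return [json.loads(line) for line in lines if line.strip()]


# A client asking a server which tools it offers.
request = {"jsonrpc": "2.0", "id": 1, "method": "tools/list"}
wire = encode_message(request)
print(wire)
print(decode_messages(wire))
```

Over HTTP the same JSON payloads would travel in request and response bodies rather than over a process's standard streams.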

MCP host

The MCP host is an AI application or an IDE that contains an LLM. It is a simple interaction point for the user.

MCP client

The MCP client, embedded in the host, converts user requests into a standardized format and sends them to an MCP server. A host can run many MCP clients to reach “N” MCP servers, with each client maintaining a dedicated 1:1 connection to a single server. Some examples of client requests include:

- Asking for data.

- Seeking a prompt template.

- Accessing a database.

- Reading a file.

- Performing specific tasks.
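
The request types above could look roughly like this on the wire. This is a sketch only: it assumes the JSON-RPC 2.0 method names `resources/read`, `prompts/get`, and `tools/call` from the MCP specification, while the URI, prompt name, tool name, and arguments are made up for illustration, as is the `make_request` helper.

```python
import itertools
import json
from typing import Optional

_ids = itertools.count(1)  # each request gets a fresh id


def make_request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request envelope for an MCP method."""
    message = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message


requests = [
    # Asking for data or reading a file: read a resource by URI (hypothetical URI).
    make_request("resources/read", {"uri": "file:///reports/q3.txt"}),
    # Seeking a prompt template (hypothetical prompt name and argument).
    make_request("prompts/get", {"name": "summarize", "arguments": {"topic": "sales"}}),
    # Accessing a database or performing a task: call a tool (hypothetical tool).
    make_request("tools/call", {"name": "query_database", "arguments": {"sql": "SELECT 1"}}),
]

for req in requests:
    print(json.dumps(req))
```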

MCP server

The MCP server is an independent service that sits in front of a database, application programming interface (API), or other resource. It listens for requests from MCP clients and responds with the requested context, data resources, tools, and capabilities. In simple words, the MCP server turns client requests into concrete actions and returns results the LLM can use.

The MCP client receives results from the server, performs translation, and passes them back to the LLM. As a result, the LLM gets access to external data or tools.
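
The round trip described above can be sketched without any SDK. The toy dispatcher below is an illustration, not the official server implementation: the `get_weather` tool and its canned reply are hypothetical, and the result shape (a `content` list of text items) follows the MCP specification's tool-result format as an assumption.

```python
def get_weather(city: str) -> str:
    """Hypothetical tool; a real server would call a service or database."""
    return f"Sunny in {city}"


TOOLS = {
    "get_weather": {
        "handler": get_weather,
        "description": "Return a canned weather report for a city.",
        "inputSchema": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    }
}


def _error(request_id, code: int, message: str) -> dict:
    return {"jsonrpc": "2.0", "id": request_id, "error": {"code": code, "message": message}}


def handle_request(request: dict) -> dict:
    """Server side: route a JSON-RPC request to the matching capability."""
    request_id = request.get("id")
    method = request.get("method")
    params = request.get("params", {})
    if method == "tools/list":
        tools = [
            {"name": name, "description": t["description"], "inputSchema": t["inputSchema"]}
            for name, t in TOOLS.items()
        ]
        return {"jsonrpc": "2.0", "id": request_id, "result": {"tools": tools}}
    if method == "tools/call":
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            return _error(request_id, -32602, "Unknown tool")
        text = tool["handler"](**params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": text}], "isError": False}
        return {"jsonrpc": "2.0", "id": request_id, "result": result}
    return _error(request_id, -32601, "Method not found")


def extract_text(response: dict) -> str:
    """Client side: pull the text content out of a tool result for the LLM."""
    items = response["result"]["content"]
    return "\n".join(item["text"] for item in items if item["type"] == "text")


call = {
    "jsonrpc": "2.0",
    "id": 7,
    "method": "tools/call",
    "params": {"name": "get_weather", "arguments": {"city": "Oslo"}},
}
print(extract_text(handle_request(call)))
```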

MCP: The unified advantage

At the beginning of widespread enterprise AI adoption, AI applications were stateless models. But AI users of 2025 demand context across conversations, while AI agents necessitate communication across applications and cloud environments.

By simplifying cross-platform integration, the MCP protocol makes AI even better at decision-making.

Unloading the weight of the N x M problem

N x M is an infamous problem in AI architecture. N is the number of AI models, and M is the number of tools. Both numbers are ever-growing. Each LLM requires a new custom integration to work with a specific third-party application, where developers manually fetch data from APIs and inject it into LLMs.

Due to the N x M problem, the number of custom integrations multiplies: every new model or tool adds a whole new batch of connectors. It is no surprise that custom integrations inflate budgets and place a significant load on engineers, who must seek permissions, debug, and maintain each connector.
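
A quick count shows the scale of the problem. With point-to-point connectors, the total is the product N x M. Under a shared protocol, the usual assumption is that each model needs one client implementation and each tool one server, giving N + M. The figures below are illustrative, not measurements.

```python
def custom_integrations(n_models: int, m_tools: int) -> int:
    """Every model needs its own connector to every tool."""
    return n_models * m_tools


def shared_protocol_integrations(n_models: int, m_tools: int) -> int:
    """Assumes one client per model and one server per tool."""
    return n_models + m_tools


for n, m in [(3, 5), (10, 50), (20, 200)]:
    print(
        f"{n} models x {m} tools: "
        f"{custom_integrations(n, m)} custom connectors vs "
        f"{shared_protocol_integrations(n, m)} protocol implementations"
    )
```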

“For years, every AI project meant building one-off connectors and custom logic to pass context around,” explains Facundo Giuliani, solutions engineering team manager at Storyblok. “MCP changes that by providing a unified standard that fits naturally into composable and MACH architectures. It brings the same modularity and reusability we already expect from modern software development into the AI layer”.

MCP is well suited to MACH architectures (Microservices, API-first, Cloud-native, and Headless), which call for quick, pluggable, and scalable systems built on third-party tools. With MCP, there is no need to build fragmented custom integrations, because it offers a unified path for data exchange through its open source, two-way client-server architecture.

Reduced engineering costs

Most AI tools and vendors provide MCP client libraries, which show all the external tools available to the application in the user interface. Developers can use existing MCP clients from these libraries to discover server capabilities, facilitate faster development times, and reduce engineering costs.

MCP also allows AI applications to connect to multiple IDEs. Enterprises save on engineering costs by eliminating the need to maintain separate software development kits (SDKs) for their own tools and custom integration with different AI applications.

LLM tool management

With the help of MCP, LLMs can move beyond basic Q&A to control complex tool chains in the AI ecosystem.

- LLMs can request additional information from clients, which is necessary to execute the request.

- MCP protocol enables MCP servers to suggest structured model calls for tools. The LLM takes the final call, whether to execute the request or ignore it.

- Once connected, an LLM can reuse the external database whenever needed.

- MCP protocol defines the system boundaries in responses returned to client requests. LLMs can only gain access to the exposed side of the data, preventing accidental access to sensitive repositories.

- LLMs can also deny impossible prompt requests from the users.

- As LLMs can fetch data from external sources or perform web searches, AI hallucinations become less frequent.
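
The point about system boundaries can be illustrated with a simple path check that a file-serving MCP server might run before answering a request. This is a sketch under assumptions: the exposed directory `/srv/exposed` and the helper `is_exposed` are hypothetical, and the check only normalizes the path text, so it does not account for symbolic links.

```python
import posixpath
from pathlib import PurePosixPath

# Hypothetical directory the server has agreed to expose.
EXPOSED_ROOTS = [PurePosixPath("/srv/exposed")]


def is_exposed(requested: str) -> bool:
    """Return True only if the normalized path sits under an exposed root."""
    # normpath collapses ".." segments, so traversal attempts are judged
    # by where they actually point rather than by how they are spelled.
    normalized = PurePosixPath(posixpath.normpath(requested))
    return any(normalized.is_relative_to(root) for root in EXPOSED_ROOTS)


print(is_exposed("/srv/exposed/docs/report.txt"))
print(is_exposed("/srv/exposed/../secrets/key.pem"))
```

A production server would also need to resolve symbolic links and authenticate the caller; the check above covers only the boundary idea.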

MCP use cases

Within less than a year of release, the MCP protocol has been widely adopted and businesses are embracing its multitude of use cases.

Multi-agent orchestration: Agentic AI is not just a typical generative AI chatbot, but an agent that can make decisions and perform real-time tasks to assist humans. Enterprises are heavily invested in deploying such AI agents to automate workloads.

“By standardizing agent-to-agent and agent-to-tool communications, we create a clean interface you can reason about, test, and govern across clouds and vendors,” says Randy Bias, VP of Strategy & Technology at Mirantis, a B2B open source cloud computing software and services company.

MCP enables enterprises to deploy multiple AI agents through the MCP pipeline on different business processes. These AI agents collectively work to execute tasks.

Cloud management: An MCP server can expose a database so that natural language requests are converted into SQL queries. Users without technical skills can then access databases and manage storage services.

Personalized assistant: MCP enables AI applications to connect agents to client devices, allowing AI agents to interact with common enterprise tools such as Notion, Slack, and Google Calendar. This powers more autonomous tasks such as ticket booking, scheduling meetings, messaging, and sharing updates.

Software development: Enterprises have already integrated AI agents into their IDE to improve code generation, debugging, and accuracy reports.

Financial operations: In enterprises, MCP can help applications to fetch and process data from ERP and CRM tools.

Creative solutions: MCP helps AI tools to integrate with web design, 3D animation, and image tools. Figma and Blender MCP servers are two examples.

Startup opportunity: New software or AI applications must build their own MCP server or adapt to existing ones so any MCP client can use them.
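
As an illustration of the cloud management use case above, the sketch below shows the kind of guarded, read-only query tool an MCP server might expose. Everything here is hypothetical: the `orders` table, the `run_read_only_query` helper, and `generated_sql`, which stands in for SQL an LLM would write from a question such as "What are total sales per region?". No model is called.

```python
import sqlite3


def run_read_only_query(conn: sqlite3.Connection, sql: str) -> list:
    """Run a single SELECT statement and reject anything else."""
    statement = sql.strip().rstrip(";")
    if not statement.lower().startswith("select") or ";" in statement:
        raise ValueError("Only single SELECT statements are allowed")
    return conn.execute(statement).fetchall()


# In-memory sample data for the demonstration.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER, region TEXT, total REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(1, "EMEA", 120.0), (2, "APAC", 80.0), (3, "EMEA", 50.0)],
)

# Stand-in for model-generated SQL.
generated_sql = "SELECT region, SUM(total) FROM orders GROUP BY region ORDER BY region"
print(run_read_only_query(conn, generated_sql))
```

The prefix check is deliberately crude. A real deployment would more likely rely on database-level read-only credentials than on string inspection.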

What are the risks of MCP?

One of the major problems in the MCP protocol is that the entire foundation is built on trust. For example, MCP servers can be called by anyone, not only LLMs.

“MCP is still a relatively young technology”, warns Conor Sherman, CISO-in-residence at cloud security firm Sysdig. Because the MCP protocol doesn’t define specific enterprise-grade authentication and authorization mechanisms, businesses need to insulate any MCP use against threats, or face serious security risks.

“The risk is that enterprises will rush to adopt MCP for its interoperability without realising that the 'payload' itself can be weaponized, carrying poisoned instructions or enabling covert exfiltration,” explains Sherman.

A recent paper by researchers at Shanghai Jiao Tong University and the Hong Kong University of Science and Technology demonstrates that attackers can embed malicious instructions into MCP servers, which LLMs read through MCP clients. The attack is known as MCP-UPD (Unintended Privacy Disclosure). When the LLM processes the malicious data, the hidden instructions can trigger access to private files or result in data theft.

Recent analysis by Backslash Security backs this up, finding approximately half of the 15,000 or more MCP servers being used at the time of publication contain dangerous misconfigurations that put them at risk from hackers.

The two main risks businesses looking to use MCP must watch out for are:

Incorrect server implementation: When MCP servers are implemented incorrectly, they may expose critical functionalities to AI applications, thus putting enterprise assets at risk.

Prompt injection: In a prompt injection attack, an LLM accepts and acts on malicious prompts written by an attacker. According to a recent analysis by Equixly, 43% of tested MCP implementations contain prompt injection flaws.

MCP functions like middleware between LLMs and third-party sources, tools, or services. Still, the protocol is in its early stages. Whether MCP becomes an enterprise standard or just another specification lost in the vastness of the web depends on how it is supported.

Venus is a freelance technology writer specializing in IT, quantum physics, and electronics, among other technical fields. She holds a degree in Electronics and Telecommunications Engineering from Mumbai University, India.

With years of experience in writing for global media brands and IT companies, she enjoys translating complex content into engaging stories. When she’s not writing about the latest IT trends, Venus can be found tracking enterprise trends or the newest processor in town.
