Nvidia thinks it’s time to start measuring data center efficiency by other metrics — is this the end of PUE?

Nvidia says data center efficiency has moved on so much that new measures are needed to track performance

Nvidia thinks it's time to embrace new ways of measuring data center efficiency amid surging energy demands and an increase in power-hungry applications at enterprises globally.

For many years now, power usage effectiveness (PUE) has been seen as one of the most important measures of whether a data center is energy efficient.

Coming up with the PUE for a data center is relatively straightforward. You take the total energy used by the facility, which covers the IT equipment itself plus everything from lighting to heating and cooling, and divide it by the energy used by the IT systems alone.
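For readers who want to see the arithmetic, here is a minimal Python sketch of that calculation. The function name and the monthly figures are hypothetical and only illustrate the ratio. In practice both energy totals would come from metering over the same period.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power usage effectiveness: total facility energy / IT equipment energy.

    The total includes the IT load itself plus overheads such as cooling,
    lighting and power conversion, so a valid PUE is never below 1.0.
    """
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    if total_facility_kwh < it_equipment_kwh:
        raise ValueError("facility total cannot be lower than the IT energy")
    return total_facility_kwh / it_equipment_kwh


# Hypothetical month: the whole site draws 1,200 MWh, of which IT uses 1,000 MWh.
print(f"PUE = {pue(1_200_000, 1_000_000):.2f}")  # PUE = 1.20
```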

In 2007, when the Green Grid first published its PUE metric, the average data center PUE stood at 2.2. Since then, PUE has been widely adopted as a key way of understanding the performance of data center infrastructure. Installations focused on driving down PUE can now get their score down to 1.2 or even less.
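Because PUE is a ratio, the gap between 2.2 and 1.2 is easier to grasp when converted into the share of a facility's energy that never reaches IT equipment. Since the IT share is 1/PUE, the overhead share is 1 - 1/PUE. The short sketch below makes that conversion; the function name is illustrative.

```python
def overhead_share(pue_value: float) -> float:
    """Fraction of total facility energy that does not reach IT equipment.

    PUE = total / IT, so IT gets 1 / PUE of the total and the rest is overhead.
    """
    return 1.0 - 1.0 / pue_value


for label, value in [("2007 average", 2.2), ("efficient site", 1.2)]:
    print(f"{label}: PUE {value} -> {overhead_share(value):.0%} overhead")
```

At a PUE of 2.2, roughly 55% of the energy goes on overheads such as cooling and power conversion; at 1.2, that falls to about 17%.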

However, Nvidia has now suggested that it may be time to move on from PUE, arguing that data centers need an upgraded measure of energy efficiency that shows their progress in running real-world applications.

“Over the last 17 years, PUE has driven the most efficient operators closer to an ideal where almost no energy is wasted on processes like power conversion and cooling,” the tech giant said.

“PUE served data centers well during the rise of cloud computing, and it will continue to be useful. But it’s insufficient in today’s generative AI era, when workloads and the systems running them have changed dramatically.”


That’s because PUE measures only the energy a data center consumes, not the useful output it produces. Nvidia likened this to measuring the amount of gas an engine uses without noticing how far the car has gone.

Nvidia argues that the computer industry has a “long and somewhat unfortunate” history of describing systems and the processors they use in terms of power, typically in watts.

But rising input power, measured in watts, doesn’t mean a system or processor is less energy efficient: it may do far more work with each unit of energy it consumes.
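The distinction Nvidia is drawing is between power (watts, a rate) and energy (joules, power multiplied by time). The sketch below uses made-up figures for two hypothetical systems to show how the one with the higher power draw can still use less energy per task.

```python
from dataclasses import dataclass


@dataclass
class System:
    name: str
    power_watts: float       # average input power while running the task
    seconds_per_task: float  # time taken to complete one unit of work

    def joules_per_task(self) -> float:
        # Energy (J) = power (W) x time (s)
        return self.power_watts * self.seconds_per_task


# Hypothetical figures for illustration only.
older = System("older system", power_watts=400, seconds_per_task=10)
newer = System("newer system", power_watts=1_000, seconds_per_task=2)

for s in (older, newer):
    print(f"{s.name}: {s.power_watts:.0f} W, {s.joules_per_task():.0f} J per task")
```

Here the newer system draws 2.5 times the power but finishes each task five times faster, so it uses half the energy per task (2,000 J versus 4,000 J).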

What’s more, the industry has a tendency to measure in abstract terms, like processor instructions or math calculations, which is why millions of instructions per second (MIPS) and floating point operations per second (FLOPS) are widely quoted. While these are useful for computer scientists, Nvidia said, users would prefer to know how much real work their systems put out.

It argues that modern data center metrics should focus on energy, measured in kilowatt-hours or joules, and on how much work is done with it.
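Kilowatt-hours and joules measure the same quantity at different scales: one kilowatt-hour is 1,000 watts sustained for 3,600 seconds, or 3.6 million joules. The sketch below expresses a work-per-energy figure both ways. The "units of work" are deliberately generic, and the function names and workload numbers are hypothetical.

```python
JOULES_PER_KWH = 3_600_000  # 1 kWh = 1,000 W x 3,600 s


def kwh_to_joules(kwh: float) -> float:
    return kwh * JOULES_PER_KWH


def work_per_kwh(units_of_work: float, energy_kwh: float) -> float:
    """Useful output per kilowatt-hour. 'units_of_work' is whatever the
    workload counts: requests served, images rendered, tokens generated."""
    return units_of_work / energy_kwh


def work_per_joule(units_of_work: float, energy_kwh: float) -> float:
    return units_of_work / kwh_to_joules(energy_kwh)


# Hypothetical job: 9 million requests served using 50 kWh.
print(f"{work_per_kwh(9_000_000, 50):,.0f} requests per kWh")     # 180,000
print(f"{work_per_joule(9_000_000, 50):.2f} requests per joule")  # 0.05
```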

Nvidia wants to shake things up

Nvidia isn’t alone in thinking it’s time for a change. It quotes Christian Belady, a data center engineer who had the original idea for PUE. With modern data centers hitting a PUE of 1.2, Belady said, the metric has “run its course”.

“It improved data center efficiency when things were bad, but two decades later, they’re better, and we need to focus on other metrics more relevant to today’s problems,” Belady said.

Nvidia also quotes Jonathan Koomey, a researcher and author on computer efficiency and sustainability, who said data center operators now need a suite of benchmarks that measure the energy implications of today’s most widely used AI workloads.

“Tokens per joule is a great example of what one element of such a suite might be,” he noted.
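Koomey does not spell out how tokens per joule would be measured. One plausible approach, sketched below under our own assumptions, is to count the tokens a system generates over a time window and divide by the energy consumed in that window, estimated by integrating periodic power readings with the trapezoidal rule. The function names, the source of the readings (server telemetry or rack power meters, for example) and the sample values are all hypothetical.

```python
from collections.abc import Sequence


def energy_joules(timestamps_s: Sequence[float], power_w: Sequence[float]) -> float:
    """Estimate energy (J) from power samples (W) taken at the given times (s),
    using the trapezoidal rule between consecutive samples."""
    if len(timestamps_s) != len(power_w) or len(power_w) < 2:
        raise ValueError("need matching sample lists with at least two points")
    total = 0.0
    for i in range(1, len(power_w)):
        dt = timestamps_s[i] - timestamps_s[i - 1]
        total += 0.5 * (power_w[i] + power_w[i - 1]) * dt
    return total


def tokens_per_joule(
    tokens: int, timestamps_s: Sequence[float], power_w: Sequence[float]
) -> float:
    return tokens / energy_joules(timestamps_s, power_w)


# Hypothetical three-second window sampled once per second.
times = [0.0, 1.0, 2.0, 3.0]
watts = [1_000.0, 1_200.0, 1_200.0, 1_000.0]
print(energy_joules(times, watts))            # 3400.0
print(tokens_per_joule(6_800, times, watts))  # 2.0
```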


Vladimir Galabov, research director for cloud and data center at tech analyst Omdia, told ITPro that while in principle he agreed that PUE is not an optimal measure of data center efficiency, he didn’t entirely agree with Nvidia about what the key unaddressed problem is.

“In my eyes our biggest problem is a culture of overprovisioning servers. Servers which sit idle either intentionally or unintentionally are a significant challenge. Another is servers which never pass 50% utilization. The reason this is such a significant problem in the industry is that idle and underutilized servers continue to consume power, in some cases close to what they would have consumed at max utilization,” he said.
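Galabov’s point about idle and underutilized servers can be illustrated with a deliberately simple model. The sketch below assumes, hypothetically, that a server’s power rises linearly from its idle draw to its maximum as utilization increases, and that useful work is proportional to utilization. Real power curves are not this tidy, and the 200 W and 400 W figures are invented for illustration.

```python
def server_power(utilization: float, idle_w: float, max_w: float) -> float:
    """Linear power model (a simplifying assumption): idle draw plus a share
    of the dynamic range proportional to utilization, from 0.0 to 1.0."""
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0.0 and 1.0")
    return idle_w + (max_w - idle_w) * utilization


def energy_per_work_vs_full_load(
    utilization: float, idle_w: float, max_w: float
) -> float:
    """Energy spent per unit of useful work, relative to running at 100%.
    Useful work is assumed to be proportional to utilization."""
    if utilization == 0.0:
        return float("inf")  # an idle server draws power but does no work
    per_work = server_power(utilization, idle_w, max_w) / utilization
    at_full_load = server_power(1.0, idle_w, max_w)
    return per_work / at_full_load


# Hypothetical server: 200 W when idle, 400 W at full load.
for u in (0.0, 0.25, 0.5, 1.0):
    watts = server_power(u, 200.0, 400.0)
    ratio = energy_per_work_vs_full_load(u, 200.0, 400.0)
    print(f"utilization {u:.0%}: {watts:.0f} W, {ratio:.1f}x energy per unit of work")
```

Under these assumptions, a server sitting idle still draws half its peak power, and one held at 50% utilization uses 1.5 times as much energy per unit of work as it would at full load.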

“I think Nvidia also underestimated the fact that PUE is a de facto standard for measuring data center efficiency because the metric is simple and easy to understand. It is well understood by stakeholders outside of the data center industry like regulators who are already using it. The power of the ecosystem will make PUE difficult to displace regardless of whether it is the most optimal or not,” he added.

Daniel Bizo, research director, intelligence, at Uptime Institute told ITPro that while PUE is a useful proxy metric for the energy efficiency of power distribution and thermal management, it does not account for the efficiency of the IT infrastructure itself — and was never meant to.

The ambition to measure IT infrastructure efficiency and work done per unit of energy isn’t new, he said, but it’s highly problematic to achieve. One problem is developing a standard benchmark suite that would give a representative picture of real-world efficiency.

“This is difficult to do and police even across similar compute architectures, such as across different server CPUs, but renders the effort pointless across vastly different architectures such as CPUs and GPUs,” he said. “Not only that the relative performance and efficiency would swing wildly depending on the type of workload, but typically CPUs run workloads that are not even suited for GPUs, so comparing their energy efficiency or work output would be meaningless (e.g. processing online transactions vs. generating tokens).”

Another major issue, Bizo added, is that even if the inherent energy efficiency of a compute platform were established, its real-world energy performance would depend on how closely the infrastructure operator can keep utilization near the efficiency optimum. For some systems that optimum is 70-80%, while others reach peak efficiency at 90-100%.
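Because the optimum varies between systems, it has to be measured rather than assumed. The sketch below takes the results of a hypothetical benchmark sweep (throughput and power at several utilization levels) and returns the level with the most work per watt. The function name and the data are invented for illustration; the sample numbers are chosen so that efficiency peaks below full utilization, as Bizo describes for some systems.

```python
from collections.abc import Sequence


def most_efficient_utilization(
    utilization: Sequence[float],
    throughput: Sequence[float],
    power_w: Sequence[float],
) -> float:
    """Return the sampled utilization level with the highest work per watt."""
    if not (len(utilization) == len(throughput) == len(power_w)) or not utilization:
        raise ValueError("need equal-length, non-empty measurement lists")
    best = max(range(len(utilization)), key=lambda i: throughput[i] / power_w[i])
    return utilization[best]


# Hypothetical sweep: throughput flattens near saturation while power keeps rising.
util = [0.5, 0.6, 0.7, 0.8, 0.9, 1.0]
ops_per_s = [500, 600, 700, 800, 860, 900]
watts = [300, 330, 360, 390, 430, 480]
print(most_efficient_utilization(util, ops_per_s, watts))  # 0.8
```

With these made-up numbers the optimum lands at 80%; another system's sweep could place it anywhere from 70% to 100%.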

“Then for useful work there is the question of the performance optimization of the application code to run on a given compute platform. With that, we arrived back where we started: even if a compute platform were more efficient on paper, the application may not extract that efficiency unless optimized for it,” he said.

“Ultimately, customers need to understand which compute platform works best for a given set of their applications, a balancing act between performance, energy and cost. Trying to make generic comparisons between vastly different platforms that have very different workload purposes has little value,” he said.

Operators that want to improve IT energy performance can do that by focusing on driving system utilization closer to optimum, identifying performance bottlenecks, selecting the right system configuration for their applications, and actively managing performance and power profiles of compute systems.

Steve Ranger is an award-winning reporter and editor who writes about technology and business. Previously he was the editorial director at ZDNET and the editor of silicon.com.

-

Nvidia just announced new supercomputers and an open AI model family for science at SC 2025

Nvidia just announced new supercomputers and an open AI model family for science at SC 2025News The chipmaker is building out its ecosystem for scientific HPC, even as it doubles down on AI factories

-

US Department of Energy’s supercomputer shopping spree continues with Solstice and Equinox

US Department of Energy’s supercomputer shopping spree continues with Solstice and EquinoxNews The new supercomputers will use Oracle and Nvidia hardware and reside at Argonne National Laboratory

-

UK to host largest European GPU cluster under £11 billion Nvidia investment plans

UK to host largest European GPU cluster under £11 billion Nvidia investment plansNews Nvidia says the UK will host Europe’s largest GPU cluster, totaling 120,000 Blackwell GPUs by the end of 2026, in a major boost for the country’s sovereign compute capacity.

-

Inside Isambard-AI: The UK’s most powerful supercomputer

Inside Isambard-AI: The UK’s most powerful supercomputerLong read Now officially inaugurated, Isambard-AI is intended to revolutionize UK innovation across all areas of scientific research

-

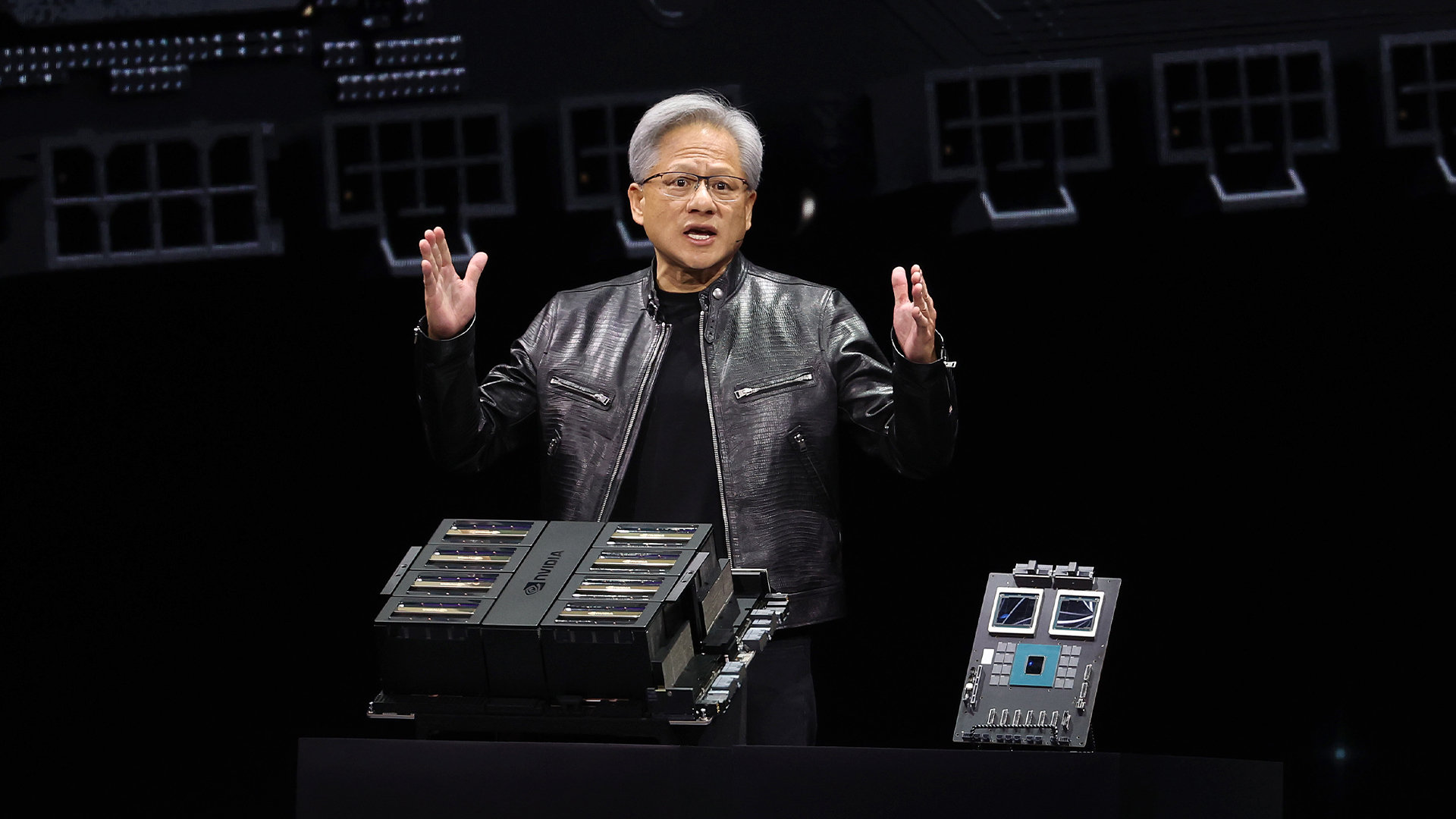

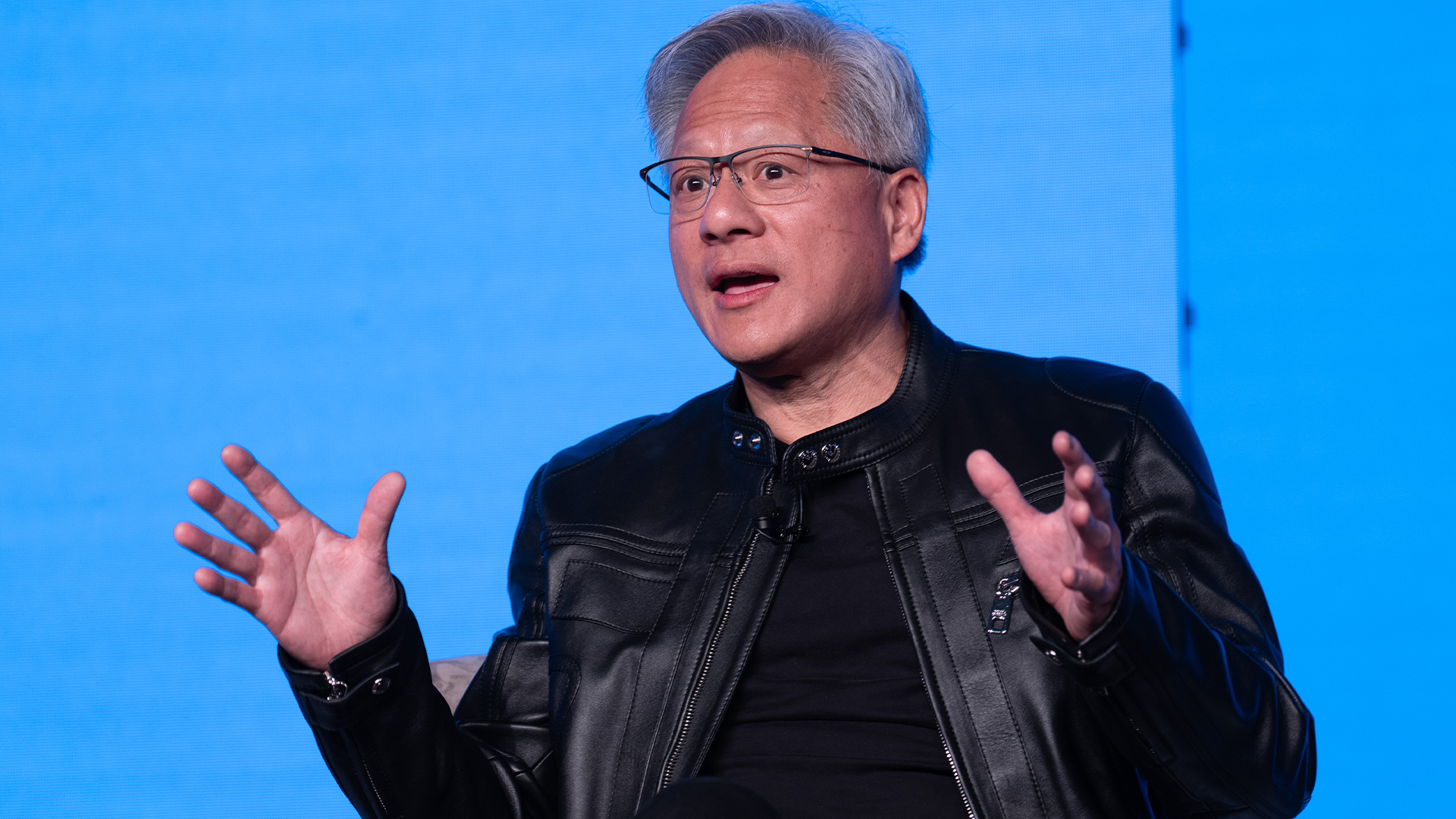

‘This is the largest AI ecosystem in the world without its own infrastructure’: Jensen Huang thinks the UK has immense AI potential – but it still has a lot of work to do

‘This is the largest AI ecosystem in the world without its own infrastructure’: Jensen Huang thinks the UK has immense AI potential – but it still has a lot of work to doNews The Nvidia chief exec described the UK as a “fantastic place for VCs to invest” but stressed hardware has to expand to reap the benefits

-

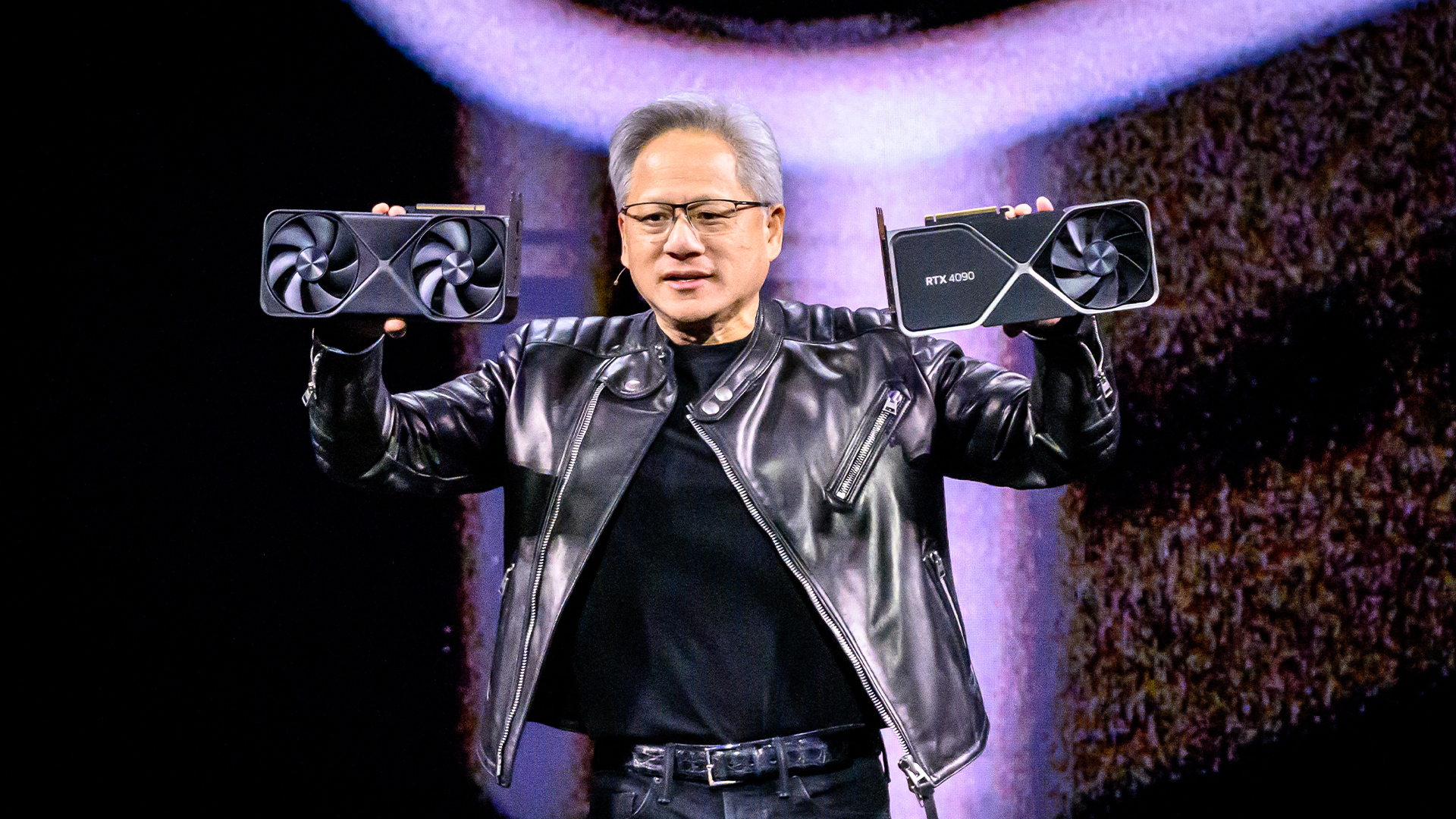

Nvidia GTC 2025: Four big announcements you need to know about

Nvidia GTC 2025: Four big announcements you need to know aboutNews Nvidia GTC 2025, the chipmaker’s annual conference, has dominated the airwaves this week – and it’s not hard to see why.

-

HPE unveils Mod Pod AI ‘data center-in-a-box’ at Nvidia GTC

HPE unveils Mod Pod AI ‘data center-in-a-box’ at Nvidia GTCNews Water-cooled containers will improve access to HPC and AI hardware, the company claimed

-

Run.ai software will be made open source in wake of Nvidia acquisition

Run.ai software will be made open source in wake of Nvidia acquisitionNews Infrastructure management tools from Run:ai will be made available across the AI ecosystem