What is a GPU?

We explain what a GPU is and what its business applications are

Max Slater-Robins

The rise of AI has brought graphics processing units (GPUs) into the spotlight. In fundamental terms, a GPU is a specialised processor designed to handle many tasks at once – particularly parallel workloads such as image rendering, simulations, and AI model training.

Many CPUs include integrated graphics, which is enough to drive the display and everyday user interface. However, for more intensive graphical tasks like video editing or graphic design, a discrete GPU is a necessary additional component.

Originally developed to accelerate graphics performance in gaming and professional visual applications, GPUs have become powerful tools for data centres, scientific computing, artificial intelligence, and other industries.

Over the past decade, and especially since OpenAI launched ChatGPT in November 2022, this shift has been accelerated by surging demand for AI workloads, including large language models (LLMs), generative AI, and inference engines.

Companies like Nvidia and AMD, once small players compared to Intel, have developed increasingly powerful GPUs to meet these needs, enabling everything from medical research to financial modelling to operate at vast scale.

Today, GPUs are the backbone of many of the world’s most advanced computing systems, powering both cloud-based AI tools and many of the top-ranked supercomputers globally. Their importance now extends far beyond consumer gaming rigs – they are critical infrastructure for the future of digital innovation.

What is a GPU and what does it do?

Simply put, the GPU is responsible for handling the computational demands of graphics-intensive functions on a computer.

Unlike central processing units, or CPUs, which are optimised for sequential task execution, GPUs are designed to process thousands of operations simultaneously. This is made possible by their large number of smaller, efficient cores which are ideal for parallel processing tasks such as matrix multiplication, image processing, and neural network calculations.
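Matrix multiplication shows why this design pays off. Every element of the result is an independent dot product, so the work splits naturally into many small tasks that can run at the same time. The Python sketch below is illustrative only: the function names are our own, and a thread pool stands in for GPU cores, so it demonstrates how the work is divided rather than how fast a real GPU is. Production code would hand this to a GPU library built on something like CUDA.

```python
from concurrent.futures import ThreadPoolExecutor

Matrix = list[list[float]]


def output_element(a: Matrix, b: Matrix, i: int, j: int) -> float:
    """Dot product of row i of `a` with column j of `b`."""
    return sum(a[i][k] * b[k][j] for k in range(len(b)))


def matmul_sequential(a: Matrix, b: Matrix) -> Matrix:
    """CPU-style: compute output elements one after another."""
    rows, cols = len(a), len(b[0])
    return [[output_element(a, b, i, j) for j in range(cols)] for i in range(rows)]


def matmul_parallel(a: Matrix, b: Matrix) -> Matrix:
    """GPU-style decomposition: one independent task per output element.

    The thread pool is only a stand-in for GPU cores; it shows the
    decomposition, not GPU-level speed.
    """
    rows, cols = len(a), len(b[0])
    tasks = [(i, j) for i in range(rows) for j in range(cols)]
    with ThreadPoolExecutor() as pool:
        # pool.map returns results in task order, i.e. row-major order
        values = list(pool.map(lambda ij: output_element(a, b, *ij), tasks))
    # Regroup the flat list of results into rows
    return [values[r * cols:(r + 1) * cols] for r in range(rows)]


a = [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]
print(matmul_sequential(a, b))  # [[19, 22], [43, 50]]
print(matmul_parallel(a, b))    # [[19, 22], [43, 50]]
```

Because no output element depends on any other, adding more cores lets more of them be computed at once, which is exactly the property GPUs are built to exploit.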

Traditionally, GPUs were best known for their role in gaming and media production, rendering complex 3D scenes and enabling real-time video playback – and ‘traditionally’ here means until as recently as a few years ago.

Graphics cards from Nvidia and AMD have long been staples of desktop setups, helping gamers and creative professionals achieve smoother frame rates, faster rendering, and higher fidelity visuals. However, the scope of GPU usage has expanded dramatically.

To take one example, in high-performance computing (HPC), GPUs now accelerate scientific simulations, climate modelling, and genomic analysis. They are the modern backbone for workloads that benefit from high throughput and massive parallelism.

In finance, they power risk modelling and high-frequency trading algorithms. In AI, they are essential for training and deploying models such as ChatGPT, Gemini, and Claude.

The shift toward general-purpose GPU computing, or GPGPU (which sadly is a mouthful), has also reshaped data centre design. Modern cloud platforms – including AWS, Microsoft Azure, and Google Cloud – offer GPU instances specifically tuned for AI and machine learning (ML) tasks.

In essence, the GPU has transformed from a graphics engine into a multipurpose compute powerhouse, changing the world in the process.

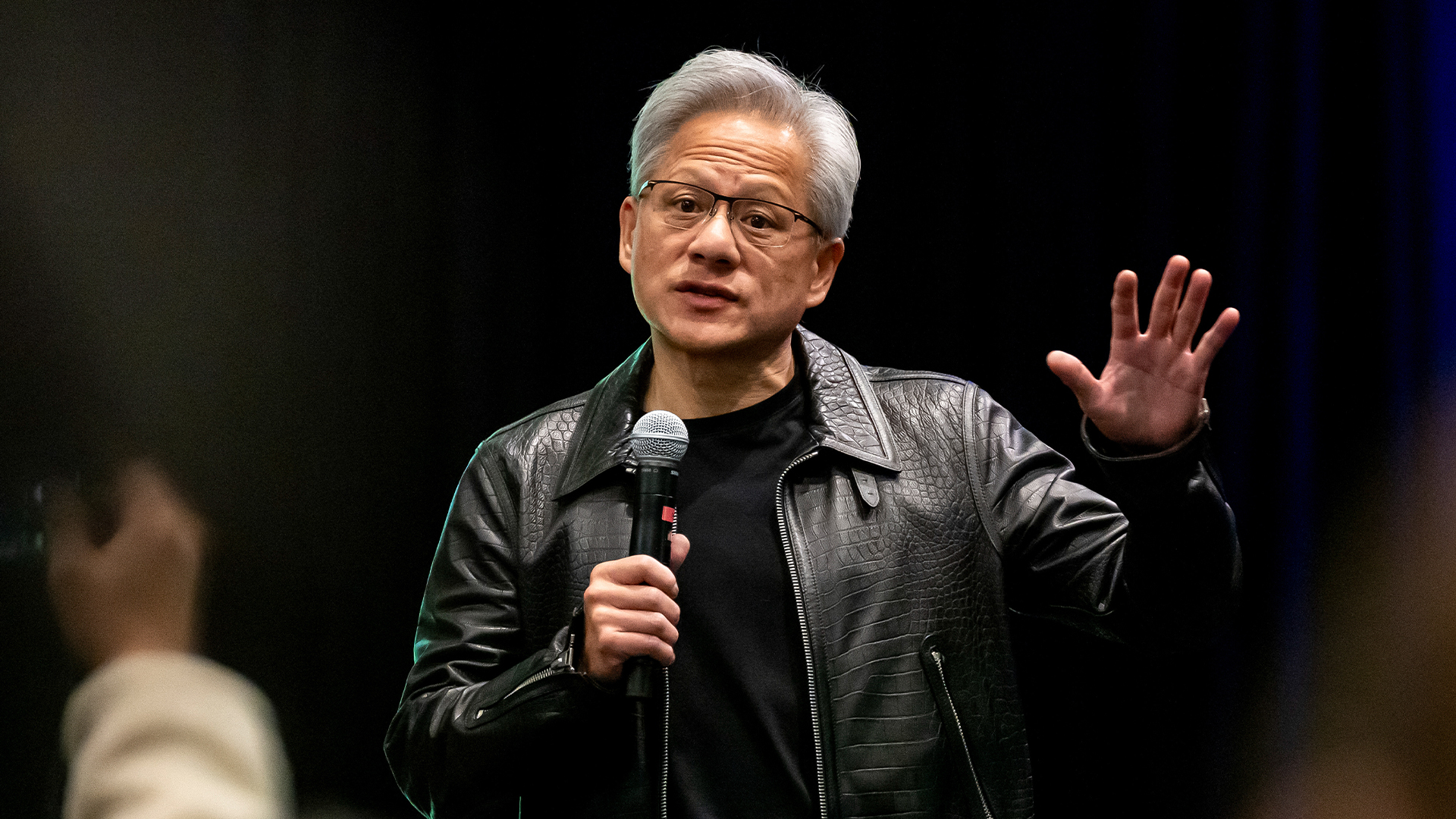

We need to talk about Nvidia

Nvidia remains the market leader in GPU development, particularly in AI and HPC, helped by its early start in both. As the dominant force in the GPU market, Nvidia has played a central role in reshaping how modern computing infrastructure is built.

Founded in 1993 and best known for its GeForce graphics cards, the company has in recent years pivoted hard toward AI, data centre acceleration, and high-performance computing.

That shift has made Nvidia one of the world’s most valuable tech firms – and placed its enterprise GPUs at the heart of large-scale AI deployments from OpenAI and many others. Its recent innovations – namely the Blackwell architecture and the H200 GPU – mark a major step forward in performance, efficiency, and scalability for modern workloads.

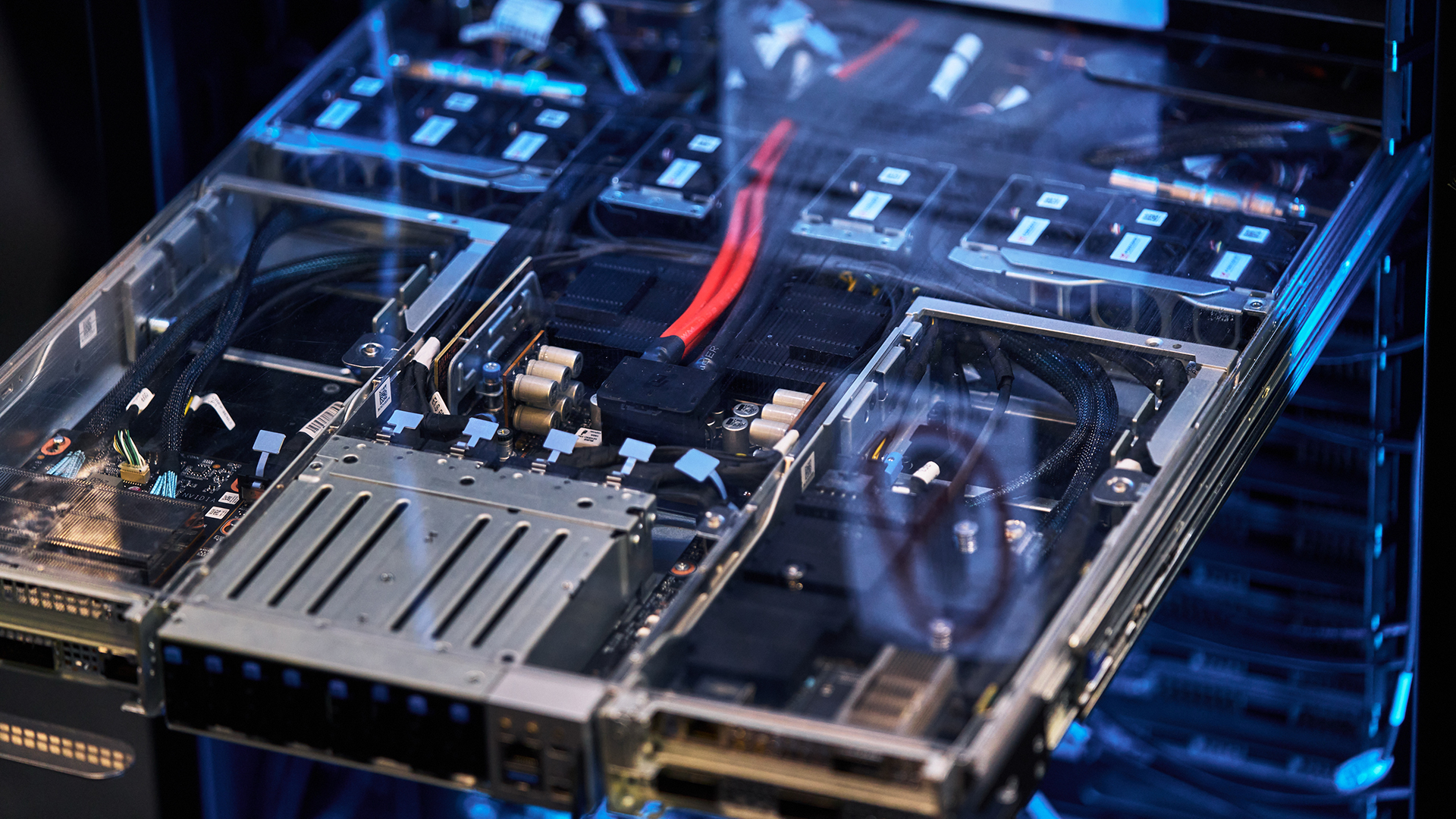

Unveiled in March 2024, the Blackwell platform is the successor to the Hopper architecture, and represents Nvidia’s most ambitious GPU design to date. At its core is the B200 GPU, a 208-billion transistor chip supporting up to 192GB of HBM3e memory and delivering 8TB/s of memory bandwidth, with its two dies joined by a 10TB/s chip-to-chip link.

These capabilities make it particularly well-suited to training and fine-tuning LLMs and generative AI tools that demand immense data throughput.

As if that wasn’t enough, Blackwell also introduced the GB200 Grace Blackwell Superchip, which pairs two B200 GPUs with Nvidia’s custom Grace CPU. These chips power Nvidia’s new GB200 NVL72 system, a rack-scale AI supercomputer featuring 72 GPUs.

Major cloud providers, including Microsoft Azure, Google Cloud, and AWS, announced Blackwell deployments, underlining the architecture’s strategic importance for public AI infrastructure.

While Blackwell is still ramping toward full production, the H200 GPU serves as Nvidia’s current-generation workhorse for AI and HPC. Based on the Hopper architecture (like the H100), the H200 features expanded memory – up to 141GB of HBM3e – and enhanced bandwidth, making it a strong fit for inference workloads and medium-scale training tasks.

Together, these GPUs reflect Nvidia’s dual-pronged approach: deliver incremental improvements now, while preparing for a longer-term leap in compute capabilities with Blackwell. And that’s why Nvidia is on top.

In 2026, Nvidia is due to release its Rubin-class GPUs, with the Nvidia Vera Rubin NVL144 CPX platform set to deliver 8 exaflops of AI compute, 1.7 petabytes per second of memory bandwidth, and 100TB of fast memory in a single rack.
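A back-of-the-envelope calculation helps explain why memory bandwidth gets top billing in announcements like this. The Python sketch below estimates how long the Vera Rubin NVL144 CPX rack would need to read its entire 100TB of fast memory once at the quoted 1.7PB/s. The helper name is our own, and the sketch assumes decimal units (1PB = 1,000TB) and that the peak figure is actually sustained, which real workloads rarely achieve, so treat the result as a lower bound.

```python
def seconds_to_read_all_memory(capacity_tb: float, bandwidth_tb_per_s: float) -> float:
    """Lower-bound time to read every byte of memory once.

    Hypothetical helper for illustration; assumes decimal units and that the
    quoted peak bandwidth is sustained for the whole read.
    """
    return capacity_tb / bandwidth_tb_per_s


# Vera Rubin NVL144 CPX figures as quoted: 100TB of fast memory, 1.7PB/s (1,700TB/s)
rubin_seconds = seconds_to_read_all_memory(100, 1_700)
print(f"Full sweep of rack memory: about {rubin_seconds * 1000:.0f} ms")  # about 59 ms
```

The same arithmetic applies to any chip or system: dividing memory capacity by bandwidth gives the floor on how long one full pass over memory takes.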

What about AMD?

Although Nvidia continues to lead the AI GPU market by a wide margin, AMD has made notable progress with its Instinct range of accelerators – particularly in the HPC space and large-scale AI workloads.

Rather than trying to match Nvidia feature-for-feature, AMD has leaned into its strengths: packaging innovation, energy efficiency, and deep integration with open-source software frameworks.

The Instinct family is now a key pillar of AMD’s data centre strategy. Its current-generation MI300 series, used in systems like the El Capitan supercomputer, was designed with memory-hungry AI models and scientific workloads in mind.

AMD has positioned these GPUs as viable alternatives for organisations seeking more control over hardware and software environments, or looking to diversify beyond Nvidia’s CUDA ecosystem.

Its latest release – the MI325X – builds on that effort, offering increased memory capacity to serve the ever-expanding demands of generative AI and inference. The chip is already available via cloud platforms like Vultr and Azure.

In 2026, AMD is expected to release the MI400, and its Helios rack built around 72 MI400 GPUs is expected to offer 1.4 petabytes per second of memory bandwidth, 2.9 exaflops of FP4 performance, and 1.4 exaflops of FP8 performance.

Tell me about supercomputing

Having started out in gaming and demanding desktop workloads, GPUs have become one of the most in-demand pieces of hardware. From cloud services to climate science, they now underpin some of the most critical computing infrastructure in the world.

Nowhere is this more visible than in supercomputing, where machines like Switzerland’s Alps are redefining what’s possible in AI and scientific research. Built on Nvidia’s Grace Hopper platform, Alps is engineered not just for raw power, but for hybrid workloads – blending traditional simulation with the demands of generative AI and LLMs.

This kind of architecture is quickly becoming the norm.

Whether through Nvidia’s Blackwell systems or AMD’s growing Instinct range, the trajectory is clear: modern computing is being rebuilt around acceleration. Power efficiency, scalability, and memory bandwidth now matter as much as clock speed – and GPUs are central to delivering on all three.

As AI continues to reshape business and research, GPUs will only grow in importance. They’re no longer just optional performance boosters – they’re strategic infrastructure, helping define the next era of computing across cloud, data centre, and edge environments.

Adam Shepherd has been a technology journalist since 2015, covering everything from cloud storage and security, to smartphones and servers. Over the course of his career, he’s seen the spread of 5G, the growing ubiquity of wireless devices, and the start of the connected revolution. He’s also been to more trade shows and technology conferences than he cares to count.

Adam is an avid follower of the latest hardware innovations, and he is never happier than when tinkering with complex network configurations, or exploring a new Linux distro. He was also previously a co-host on the ITPro Podcast, where he was often found ranting about his love of strange gadgets, his disdain for Windows Mobile, and everything in between.

You can find Adam tweeting about enterprise technology (or more often bad jokes) @AdamShepherUK.
