Nvidia just announced new supercomputers and an open AI model family for science at SC 2025

The chipmaker is building out its ecosystem for scientific HPC, even as it doubles down on AI factories


Nvidia has made a slew of supercomputing and AI factory announcements, laying out new hardware adoption and high-performance computing (HPC) plans alongside partner organizations.

Timed to coincide with the start of Supercomputing 2025 (SC25), the annual HPC event held this year in St. Louis, Nvidia unveiled new orders for HPC and supercomputer sites, alongside a series of agreements for major partners to use its network switches.

First, Nvidia announced it is partnering with Japan’s public research institute Riken on two new supercomputers powered by GB200 systems. The first of these systems will combine traditional supercomputing and AI to tackle issues in manufacturing, robotics, seismology, and quantum research.

It will be powered by 1,600 Nvidia Blackwell GPUs, connected by Quantum-X800 InfiniBand networking and built on the GB200 NVL4 platform.

The second system will combine GPUs with Riken’s hybrid quantum-HPC infrastructure. Powered by 540 Blackwell GPUs on the same compute platform and networking technology, it is intended to run quantum algorithms and hybrid computing simulations.

“Integrating the NVIDIA GB200 NVL4 accelerated computing platform with our next-generation supercomputers represents a pivotal advancement for Japan’s science infrastructure,” said Satoshi Matsuoka, director of the Riken Center for Computational Science.

“Our partnership will create one of the world’s leading unified platforms for AI, quantum and high-performance computing, allowing researchers to unlock and accelerate discoveries in fields ranging from basic sciences to industrial applications for businesses and society.”


Riken’s petascale Fugaku supercomputer was the fastest in the world between June 2020 and May 2022. It now ranks seventh in the world on the latest TOP500 list.

The two new systems announced today will act as development platforms to inform the creation of FugakuNEXT, which is due to come online at the end of 2029.

This next-generation supercomputer will combine traditional supercomputing with quantum computing, bringing together Fujitsu Monaka X CPUs and Nvidia architecture via new NVLink Fusion silicon.

Nvidia recently announced plans to build two new supercomputers for the US Department of Energy (DoE), known as Solstice and Equinox. Nvidia’s Dion Harris explained that Solstice will be powered by 100,000 Blackwell GPUs, delivering 2,000 exaflops of AI performance – “more AI flops than the entire top500 combined”.

Nvidia touts open AI models for scientific computing

Another major announcement from Nvidia is the launch of Apollo, a new family of open AI physics models aimed at helping developers run complex industrial simulations in real time.

The model family targets a wide range of scientific applications, each with real-world use cases for enterprises running on Nvidia’s AI infrastructure.

These include sector-specific simulations such as structural analysis across automotive, electronics, and aerospace, as well as electronic defect detection, mechanical design, and computational lithography.

Apollo also supports compute-intensive, scalable simulations, including climate and weather modelling, computational fluid dynamics, real-time simulation of electromagnetic signals to recreate wireless communications and optical data, and nuclear physics simulations.

Some Nvidia partners are already using Apollo to improve their processes. For example, the semiconductor giant Applied Materials – the second-largest chip equipment supplier after ASML – has used Apollo models to improve the power efficiency of its manufacturing process.

By running the models on Nvidia GPUs via the CUDA framework, the firm has enabled flow, plasma, and thermal modelling of its semiconductor process chambers, combining AI and digital twins to achieve an overall 35x acceleration across its ACE+ multiphysics simulation platform.

Northrop Grumman and Luminary Cloud have also used Apollo models to design spacecraft thruster nozzles more efficiently.

Apollo joins Nvidia’s existing open model families announced at GTC 2025, including Clara for biomedical AI, Cosmos for physical AI, GR00T for robotics, and Nemotron for agentic AI.

New adoption for Nvidia’s networking technology

The Texas Advanced Computing Center, AI cloud computing firm CoreWeave, and AI factory startup Lambda will adopt Nvidia Quantum-X Photonics in their upcoming data center infrastructure.

Nvidia has said the co-packaged optics networking switches are capable of 115Tbits/sec networking and can connect millions of GPUs, while improving application runtime by 5x and power efficiency by 3.5x.

“Nvidia Quantum-X photonics will help them realize more robust, serviceable, and energy efficient network fabrics that keep large-scale workloads running fast and efficiently,” said Dion Harris, senior director of HPC and AI Infrastructure Solutions at Nvidia.

As an example, Harris explained that new networking technologies must be paired with GPU breakthroughs to power the latest mixture-of-experts (MoE) models such as DeepSeek R1.

Nvidia’s answer is the Blackwell NVL72, in which 72 GPUs are connected all-to-all via NVLink switches with 130TB/sec of bandwidth. According to SemiAnalysis data on DeepSeek R1, the NVL72 delivered 10x better performance per dollar, performance per watt, and throughput, for an overall 10x improvement in revenue.

“You can't do this with just chip innovation by itself,” said Harris. “We achieve this through extreme co-design, with innovation and optimisation across GPUs, CPUs, memory, networking, and rack-scale architectures, as well as software.”

Harris said 80 new systems built on Nvidia hardware have been announced in the year since SC24, with 300,000 GPUs committed and the equivalent of 4,500 exaflops of AI performance planned.

Arm is also becoming a CPU partner for NVLink, alongside existing partners such as Fujitsu, Intel, and Qualcomm.

At the same time as it expands its traditional networking portfolio, Nvidia has also announced new adoption roadmaps for its NVQLink and CUDA-Q platforms, which will connect GPUs to quantum processors in the near future with a latency of less than four microseconds.

At SC25, Nvidia announced that 21 supercomputing centers around the world will adopt NVQLink, including:

- Australia’s Pawsey Supercomputing Research Centre.

- DCAI, operator of Denmark’s Gefion supercomputer.

- Germany’s Jülich Supercomputing Centre (JSC).

- Japan’s Global Research and Development Center for Business by Quantum-AI technology (G-QuAT), at the National Institute of Advanced Industrial Science and Technology (AIST).

- The Korea Institute of Science and Technology Information (KISTI).

In the US, many of the DoE’s national laboratories have also signed on, including Lawrence Berkeley National Laboratory, Los Alamos National Laboratory, and Oak Ridge National Laboratory (which currently hosts the Frontier supercomputer).

“Performing tasks like quantum error correction requires a Quantum GPU interconnect with microsecond latency and throughput in the hundreds of gigabits per second,” Harris said.

“It's also essential for a scalable interconnect platform to be open and universal, supporting all types of quantum processor builders, so that every supercomputer has a turnkey solution to bring quantum into their workflow.”


Rory Bathgate is Features and Multimedia Editor at ITPro, overseeing all in-depth content and case studies. He can also be found co-hosting the ITPro Podcast with Jane McCallion, swapping a keyboard for a microphone to discuss the latest learnings with thought leaders from across the tech sector.

In his free time, Rory enjoys photography, video editing, and good science fiction. After graduating from the University of Kent with a BA in English and American Literature, Rory undertook an MA in Eighteenth-Century Studies at King’s College London. He joined ITPro in 2022 as a graduate, following four years in student journalism. You can contact Rory at rory.bathgate@futurenet.com or on LinkedIn.

-

AWS CEO Matt Garman isn’t convinced AI spells the end of the software industry

AWS CEO Matt Garman isn’t convinced AI spells the end of the software industryNews Software stocks have taken a beating in recent weeks, but AWS CEO Matt Garman has joined Nvidia's Jensen Huang and Databricks CEO Ali Ghodsi in pouring cold water on the AI-fueled hysteria.

-

Deepfake business risks are growing

Deepfake business risks are growingIn-depth As the risk of being targeted by deepfakes increases, what should businesses be looking out for?

-

Dawn, one of the UK’s most powerful supercomputers, is about to get a huge performance boost thanks to AMD

Dawn, one of the UK’s most powerful supercomputers, is about to get a huge performance boost thanks to AMDNews The Dawn supercomputer in Cambridge will be powered with AMD MI355X accelerators

-

France is getting its first exascale supercomputer – and it's named after an early French AI pioneer

France is getting its first exascale supercomputer – and it's named after an early French AI pioneerNews The Alice Recoque system will be be France’s first, and Europe’s second, exascale supercomputer

-

US Department of Energy’s supercomputer shopping spree continues with Solstice and Equinox

US Department of Energy’s supercomputer shopping spree continues with Solstice and EquinoxNews The new supercomputers will use Oracle and Nvidia hardware and reside at Argonne National Laboratory

-

HPE's new Cray system is a pocket powerhouse

HPE's new Cray system is a pocket powerhouseNews Hewlett Packard Enterprise (HPE) has unveiled new HPC storage, liquid cooling, and supercomputing offerings ahead of SC25

-

UK to host largest European GPU cluster under £11 billion Nvidia investment plans

UK to host largest European GPU cluster under £11 billion Nvidia investment plansNews Nvidia says the UK will host Europe’s largest GPU cluster, totaling 120,000 Blackwell GPUs by the end of 2026, in a major boost for the country’s sovereign compute capacity.

-

Inside Isambard-AI: The UK’s most powerful supercomputer

Inside Isambard-AI: The UK’s most powerful supercomputerLong read Now officially inaugurated, Isambard-AI is intended to revolutionize UK innovation across all areas of scientific research

-

‘This is the largest AI ecosystem in the world without its own infrastructure’: Jensen Huang thinks the UK has immense AI potential – but it still has a lot of work to do

‘This is the largest AI ecosystem in the world without its own infrastructure’: Jensen Huang thinks the UK has immense AI potential – but it still has a lot of work to doNews The Nvidia chief exec described the UK as a “fantastic place for VCs to invest” but stressed hardware has to expand to reap the benefits

-

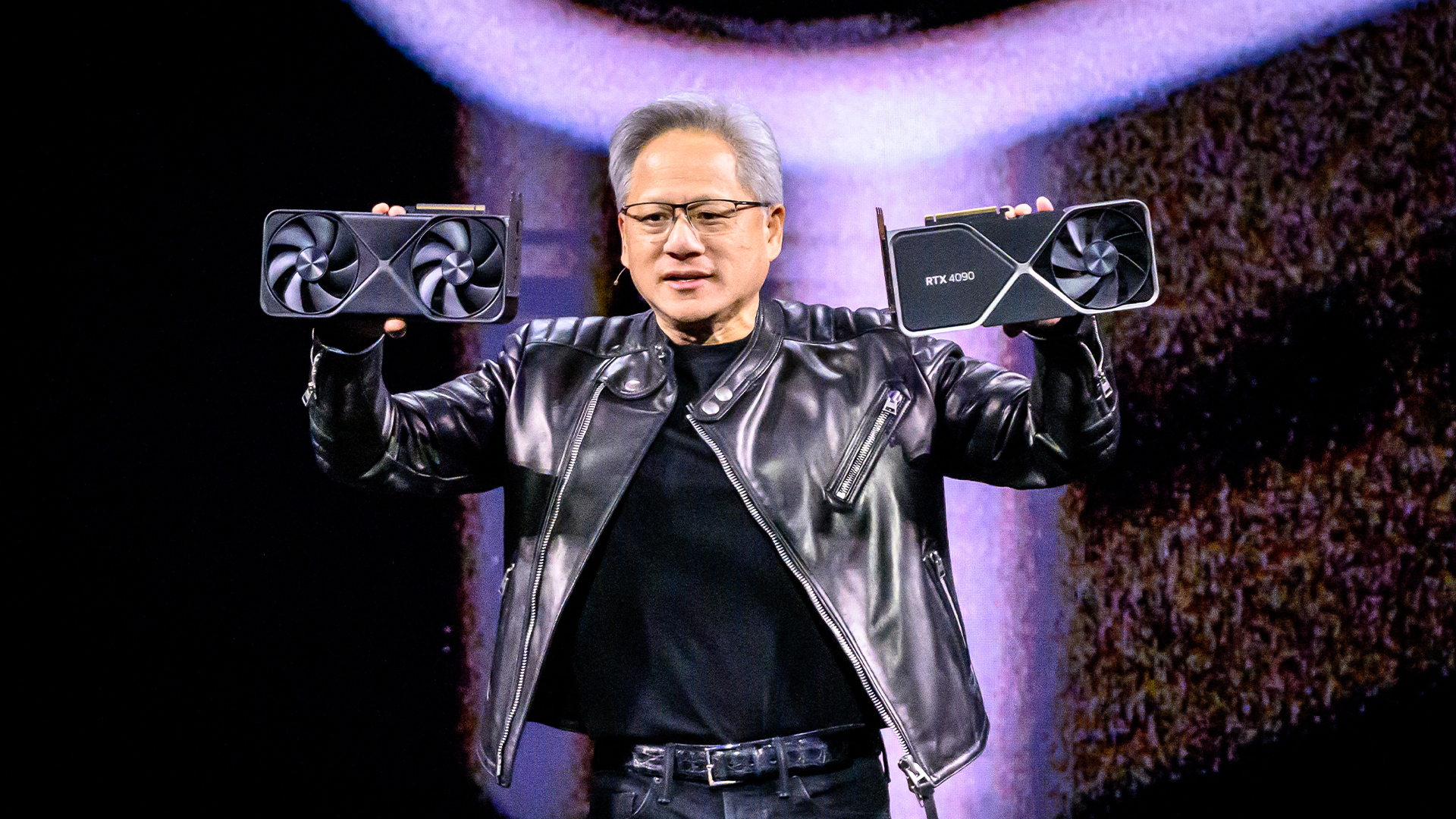

Nvidia GTC 2025: Four big announcements you need to know about

Nvidia GTC 2025: Four big announcements you need to know aboutNews Nvidia GTC 2025, the chipmaker’s annual conference, has dominated the airwaves this week – and it’s not hard to see why.