Google's new Jules coding agent is free to use for anyone – and it just got a big update to prevent bad code output

Devs using Jules will be able to leverage more autonomous code generation and completion, within certain limits

Sign up today and you will receive a free copy of our Future Focus 2025 report - the leading guidance on AI, cybersecurity and other IT challenges as per 700+ senior executives

You are now subscribed

Your newsletter sign-up was successful

Google publicly released its Jules coding agent earlier this month, and it’s already had a big update aimed at shoring up code quality.

Jules is intended as an ‘asynchronous’ coding agent, capable of working in the background alongside developers to write coding tests, fix bugs, and apply version updates across code.

Users working with the agent can delegate complex coding tasks based on project goals, marking a contrast to manually-operated vibe coding models and AI coding assistants such as GitHub Copilot or Google Code Assist.

Once Jules has access to an organization’s repositories, it clones the code contained within to a Google Cloud virtual machine (VM) and makes changes based on user prompts.

As a safety precaution, Jules does not make permanent changes to code before a human has manually approved its decisions - an issue that has caused serious problems for vibe coding users in recent weeks.

When its outputs are completed, Jules presents them as a pull request to the relevant developer, with a breakdown showing its reasoning for the changes it made and the difference between the code in its existing branch and its own proposed code.

Under the hood of the Jules coding agent

Jules is powered by Gemini 2.5 Pro, Google’s flagship LLM which is capable of ‘reasoning’ through complex problems before acting. Boasting a one million token context window, Jules is capable of using large swathes of an organization’s existing code for context.

Sign up today and you will receive a free copy of our Future Focus 2025 report - the leading guidance on AI, cybersecurity and other IT challenges as per 700+ senior executives

Google has stated that Jules runs privately by default and is not trained on the private code to which it’s exposed.

Although Jules is free to use, the entry level tier only allows users to complete 15 tasks per day and just three concurrent tasks at any given moment.

Enterprises looking to use it at scale may contact their Google Cloud sales representative to add Google AI Ultra to their Workspace plan. This provides access to Jules for 300 daily tasks, as well as 60 concurrent tasks.

Independent developers or other interested users can pay for £18.99 ($19.99) per month for AI Pro, for which they’ll get 100 daily and 15 concurrent tasks, or £234.99 ($249.99) per month for AI Ultra.

Those on paid plans also have priority access to Google’s latest model. In practice, this means all plans currently use Gemini 2.5 Pro, but in the near future subscribers will be able to use more advanced models to power their Jules outputs.

Jules comes with constructive self-criticism

AI-powered coding is becoming increasingly popular, with Google’s own internal code now standing at more than 25% AI-generated and a recent Stack Overflow study finding that 84% of software developers now use AI.

But nearly half (46%) of respondents to the same study said they don’t trust AI accuracy, while three-quarters (75.3%) said they would turn to co-workers when they don’t trust AI answers.

AI-generated code also comes with inherent risks and limitations. Almost half of the code generated with leading models contains vulnerabilities, according to recent research by Veracode, including cross-site scripting (XSS) and SQL injection flaws.

To address these concerns, Google has also released a ‘critic’ feature within Jules, which subjects all proposed changes to adversarial reviews at point of generation in an attempt to ensure the code generated by the agent is as robust and efficient as possible.

When the critic detects code that needs to be redone, it sends it back to Jules to be improved. Google gave the example of code that includes a subtle logic error being handed back to Jules with the explanation: “Output matches expected cases but fails on unseen inputs”.

Google differentiated the critic from linters, common code analysis tools that can be found in coding agents from competitors such as OpenAI’s Codex, due to its ability to judge code relative to the user’s context and intent rather than assessing code quality based on presets.

It added that in the future the critic will become an agent in its own right, with the ability to draw on tools such as an AI code interpreter or search engines to gain more context on code, as well as to trigger criticism of code generation at multiple points throughout Jules’ reasoning process.

These ambitions are due to be realized in future versions, with no announced release timeframe.

“Our goal is fewer slop PRs, better test coverage, stronger security,” Google wrote in its blog post announcing the feature.

Make sure to follow ITPro on Google News to keep tabs on all our latest news, analysis, and reviews.

MORE FROM ITPRO

- GitHub's new 'Agent Mode' feature lets AI take the reins for developers

- AI coding tools are finally delivering results for enterprises

- Everything you need to know about OpenAI’s new agent for ChatGPT

Rory Bathgate is Features and Multimedia Editor at ITPro, overseeing all in-depth content and case studies. He can also be found co-hosting the ITPro Podcast with Jane McCallion, swapping a keyboard for a microphone to discuss the latest learnings with thought leaders from across the tech sector.

In his free time, Rory enjoys photography, video editing, and good science fiction. After graduating from the University of Kent with a BA in English and American Literature, Rory undertook an MA in Eighteenth-Century Studies at King’s College London. He joined ITPro in 2022 as a graduate, following four years in student journalism. You can contact Rory at rory.bathgate@futurenet.com or on LinkedIn.

-

Microsoft Copilot bug saw AI snoop on confidential emails — after it was told not to

Microsoft Copilot bug saw AI snoop on confidential emails — after it was told not toNews The Copilot bug meant an AI summarizing tool accessed messages in the Sent and Draft folders, dodging policy rules

-

Cyber experts issue warning over new phishing kit that proxies real login pages

Cyber experts issue warning over new phishing kit that proxies real login pagesNews The Starkiller package offers monthly framework updates and documentation, meaning no technical ability is needed

-

‘AI is making us able to develop software at the speed of light’: Mistral CEO Arthur Mensch thinks 50% of SaaS solutions could be supplanted by AI

‘AI is making us able to develop software at the speed of light’: Mistral CEO Arthur Mensch thinks 50% of SaaS solutions could be supplanted by AINews Mensch’s comments come amidst rising concerns about the impact of AI on traditional software

-

Automated code reviews are coming to Google's Gemini CLI Conductor extension – here's what users need to know

Automated code reviews are coming to Google's Gemini CLI Conductor extension – here's what users need to knowNews A new feature in the Gemini CLI extension looks to improve code quality through verification

-

Claude Code creator Boris Cherny says software engineers are 'more important than ever’ as AI transforms the profession – but Anthropic CEO Dario Amodei still thinks full automation is coming

Claude Code creator Boris Cherny says software engineers are 'more important than ever’ as AI transforms the profession – but Anthropic CEO Dario Amodei still thinks full automation is comingNews There’s still plenty of room for software engineers in the age of AI, at least for now

-

Anthropic Labs chief Mike Krieger claims Claude is essentially writing itself – and it validates a bold prediction by CEO Dario Amodei

Anthropic Labs chief Mike Krieger claims Claude is essentially writing itself – and it validates a bold prediction by CEO Dario AmodeiNews Internal teams at Anthropic are supercharging production and shoring up code security with Claude, claims executive

-

AI-generated code is fast becoming the biggest enterprise security risk as teams struggle with the ‘illusion of correctness’

AI-generated code is fast becoming the biggest enterprise security risk as teams struggle with the ‘illusion of correctness’News Security teams are scrambling to catch AI-generated flaws that appear correct before disaster strikes

-

‘Not a shortcut to competence’: Anthropic researchers say AI tools are improving developer productivity – but the technology could ‘inhibit skills formation’

‘Not a shortcut to competence’: Anthropic researchers say AI tools are improving developer productivity – but the technology could ‘inhibit skills formation’News A research paper from Anthropic suggests we need to be careful deploying AI to avoid losing critical skills

-

A torrent of AI slop submissions forced an open source project to scrap its bug bounty program – maintainer claims they’re removing the “incentive for people to submit crap”

A torrent of AI slop submissions forced an open source project to scrap its bug bounty program – maintainer claims they’re removing the “incentive for people to submit crap”News Curl isn’t the only open source project inundated with AI slop submissions

-

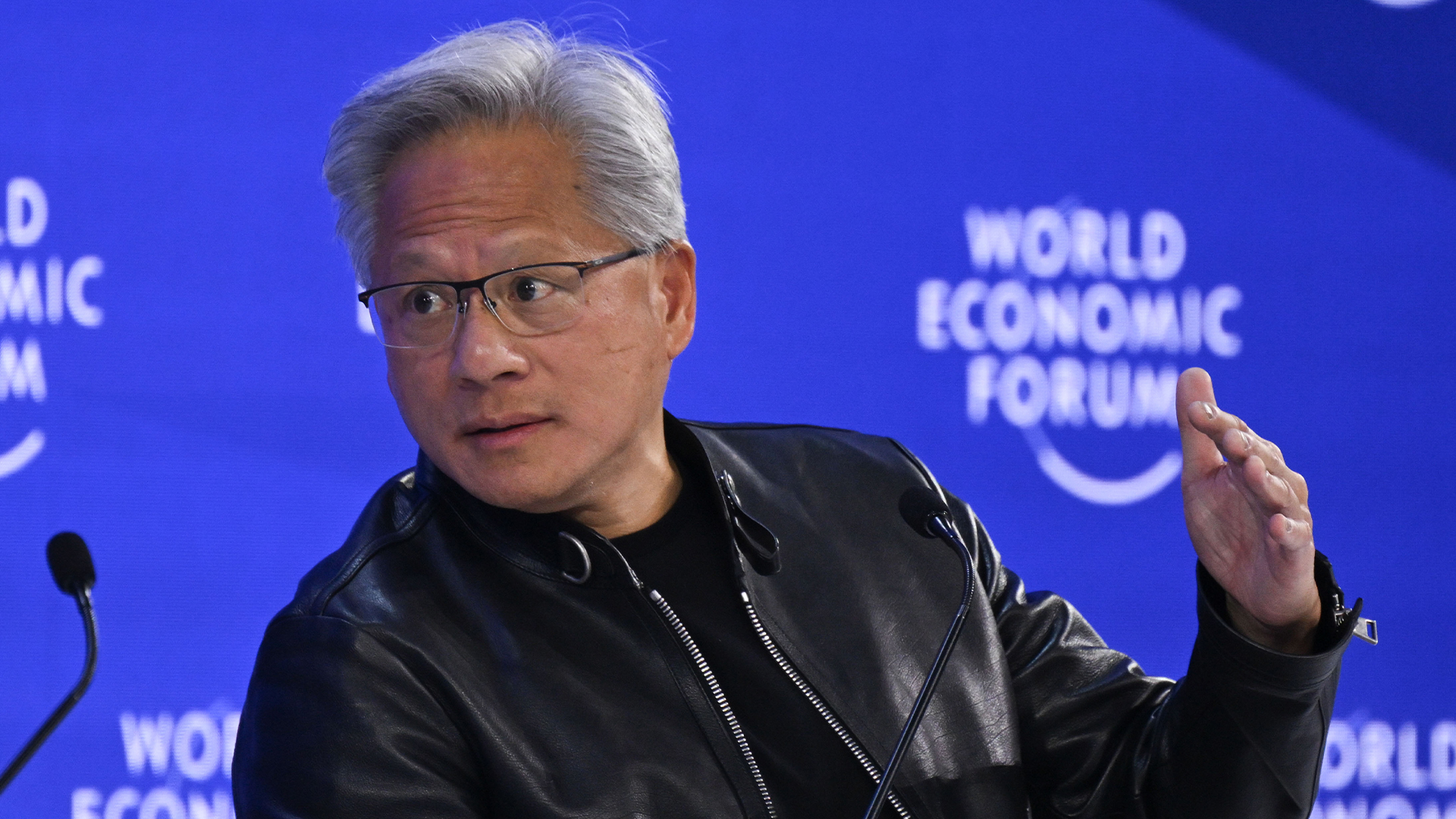

‘This is a platform shift’: Jensen Huang says the traditional computing stack will never look the same because of AI – ChatGPT and Claude will forge a new generation of applications

‘This is a platform shift’: Jensen Huang says the traditional computing stack will never look the same because of AI – ChatGPT and Claude will forge a new generation of applicationsNews The Nvidia chief says new applications will be built “on top of ChatGPT” as the technology redefines software