An open source challenger to GitHub Copilot? StarCoder2, a code generation tool backed by Nvidia, Hugging Face, and ServiceNow, is free to use and offers support for over 600 programming languages

StarCoder2 offers code generation support for over 600 programming languages, and it’s free to use

Rory Bathgate

The StarCoder code generation tool has received a massive update that could position it as a leading open source alternative to services such as GitHub Copilot.

StarCoder initially launched in May 2023 as a collaboration between Hugging Face and ServiceNow. The latest iteration, StarCoder2, now also has major industry backing in the form of Nvidia.

The code generation tool supports developers by automating code completion, similar to GitHub Copilot or Amazon CodeWhisperer. It’s also capable of summarizing existing code and generating original snippets.

StarCoder2 is available in three different model sizes, each trained by a different member of the partnership.

The smallest version is a three billion-parameter model trained by ServiceNow, with a seven billion-parameter model trained by Hugging Face.

Nvidia was responsible for the largest iteration of StarCoder2 with a 15 billion-parameter model built using its NeMo generative AI platform and trained on Nvidia’s accelerated AI infrastructure.

Each of the StarCoder2 models can work in a significantly expanded array of programming languages.

The original StarCoder tool was trained on over 80 different programming languages, whereas StarCoder2 boasts the ability to generate code in 619 languages.

StarCoder2 is underpinned by the Stack v2 dataset, the largest open code dataset suitable for LLM pretraining, according to Hugging Face. The AI company said this latest dataset is seven times larger than the original Stack v1.

Paired with new training techniques, the trio believe this will help the models understand low-resource programming languages, mathematics, and program source code discussions.

The performance of each of the new LLMs is vastly enhanced too, with the three billion-parameter StarCoder2 matching the performance of Hugging Face’s original 15 billion-parameter StarCoder model.

StarCoder2 could be a game changer for devs

Rory Bathgate is Features and Multimedia Editor at ITPro, overseeing all in-depth content and case studies. He can also be found co-hosting the ITPro Podcast with Jane McCallion, swapping a keyboard for a microphone to discuss the latest learnings with thought leaders from across the tech sector.

StarCoder2 is a huge step forward for open source AI code generation. In opening the door to competition within the open source community for the title of ‘best AI pair programmer’ and putting the heat on Meta’s Code Llama, it has ensured that developers have a future of solid, open options to look forward to.

Within the paper accompanying the launch, the team behind StarCoder2 presented evidence that the model can go toe-to-toe with Code Llama even in its largest, 34 billion-parameter size.

In MBPP, a benchmark that pits a coding model against approximately 1,000 entry-level Python programming problems, StarCoder2’s 15 billion-parameter model scored 66.2 against Code Llama 34B’s 65.4.
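For readers unfamiliar with how a benchmark of this kind arrives at a single number, the sketch below shows one plausible scoring scheme: each problem ships with test assertions, a generated solution counts as solved only if every assertion passes, and the score is the percentage of problems solved. This is an illustration under assumptions, not the evaluation harness used in the StarCoder2 paper. The article does not state the exact metric, and the four toy problems, the `passes` helper, and the `pass_rate` helper are all hypothetical.

```python
from typing import Dict, List


def passes(candidate_src: str, tests: List[str]) -> bool:
    """Return True if the candidate code runs and every test assertion holds.

    Assumption: real harnesses run generated code in a sandbox with timeouts.
    Plain exec() is used here only to keep the sketch short.
    """
    namespace: Dict[str, object] = {}
    try:
        exec(candidate_src, namespace)  # define the generated function
        for test in tests:
            exec(test, namespace)  # each test is a bare assert statement
    except Exception:
        return False
    return True


def pass_rate(problems: List[Dict[str, object]]) -> float:
    """Percentage of problems whose candidate solution passes all its tests."""
    solved = sum(passes(p["candidate"], p["tests"]) for p in problems)
    return 100.0 * solved / len(problems)


# Hypothetical stand-ins for model outputs on four entry-level problems.
problems = [
    {"candidate": "def add(a, b):\n    return a + b",
     "tests": ["assert add(2, 3) == 5"]},
    {"candidate": "def is_even(n):\n    return n % 2 == 1",  # deliberately wrong
     "tests": ["assert is_even(4)"]},
    {"candidate": "def first(xs):\n    return xs[0]",
     "tests": ["assert first([7, 8]) == 7"]},
    {"candidate": "def square(x):\n    return x * x",
     "tests": ["assert square(3) == 9"]},
]

score = pass_rate(problems)
print(f"{score:.1f}")  # 3 of 4 solved -> 75.0
```

Read this way, and assuming the reported figures are percentages of this kind, the gap between 66.2 and 65.4 on roughly 1,000 problems would amount to only a handful of problems.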

The fact that the training data for StarCoder2 is openly available through the Stack v2 will also be a relief to many organizations.

Future legal battles will be fought over who owns the data used to train AI, and any company that discovers its source code was generated using scraped proprietary data could be in for a very difficult and costly replacement process down the line.

In contrast, the openness of StarCoder2 is a crowning achievement. In the interest of crediting the developers whose code formed the basis of StarCoder2, users can enter outputs into a dataset search on Hugging Face to identify if the code the tool has produced is ‘original’ or a verbatim copy from its immense training data.

Alternatively, teams can freely search the dataset themselves.

It’s in the interest of all developers to have strong options like this on the market, as innovation and competition in the sector will only drive models to become more accurate. But the precedent StarCoder2 sets in terms of responsible AI model creation through open source may be its lasting legacy.

Solomon Klappholz is a former staff writer for ITPro and ChannelPro. He has experience writing about the technologies that facilitate industrial manufacturing, which led to him developing a particular interest in cybersecurity, IT regulation, industrial infrastructure applications, and machine learning.

- Rory BathgateFeatures and Multimedia Editor

-

HPE and Nvidia launch first EU AI factory lab in France

HPE and Nvidia launch first EU AI factory lab in FranceNews The facility will let customers test and validate their sovereign AI factories

-

Dell Technologies doubles down on AI with SC25 announcements

Dell Technologies doubles down on AI with SC25 announcementsAI Factories, networking, storage and more get an update, while the company deepens its relationship with Nvidia

-

Some of the most popular open weight AI models show ‘profound susceptibility’ to jailbreak techniques

Some of the most popular open weight AI models show ‘profound susceptibility’ to jailbreak techniquesNews Open weight AI models from Meta, OpenAI, Google, and Mistral all showed serious flaws

-

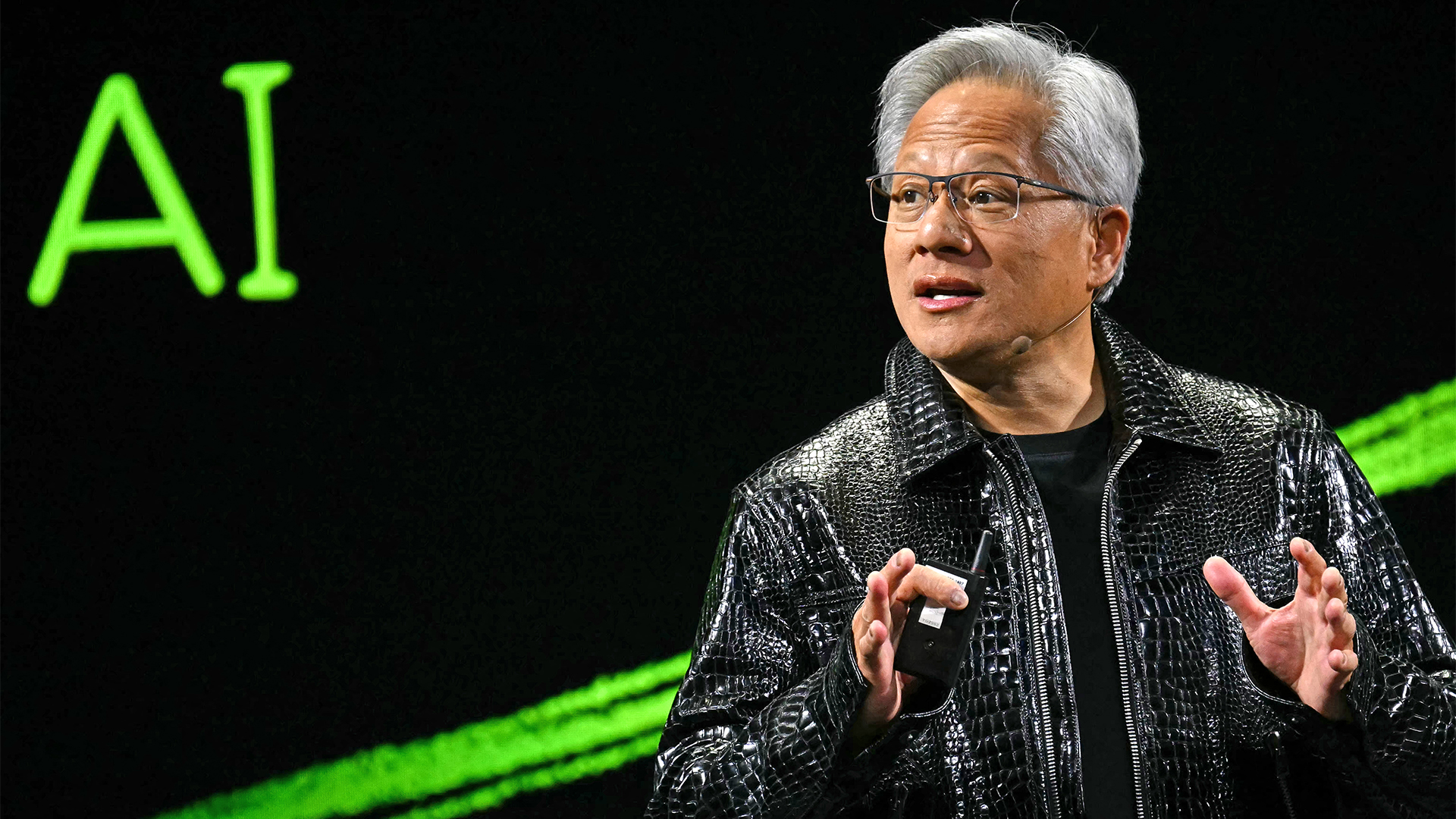

Nvidia CEO Jensen Huang says future enterprises will employ a ‘combination of humans and digital humans’ – but do people really want to work alongside agents? The answer is complicated.

Nvidia CEO Jensen Huang says future enterprises will employ a ‘combination of humans and digital humans’ – but do people really want to work alongside agents? The answer is complicated.News Enterprise workforces of the future will be made up of a "combination of humans and digital humans," according to Nvidia CEO Jensen Huang. But how will humans feel about it?

-

OpenAI signs another chip deal, this time with AMD

OpenAI signs another chip deal, this time with AMDnews AMD deal is worth billions, and follows a similar partnership with Nvidia last month

-

Why Nvidia’s $100 billion deal with OpenAI is a win-win for both companies

Why Nvidia’s $100 billion deal with OpenAI is a win-win for both companiesNews OpenAI will use Nvidia chips to build massive systems to train AI

-

Jensen Huang says 'the AI race is on' as Nvidia shrugs off market bubble concerns

Jensen Huang says 'the AI race is on' as Nvidia shrugs off market bubble concernsNews The Nvidia chief exec appears upbeat on the future of the AI market despite recent concerns

-

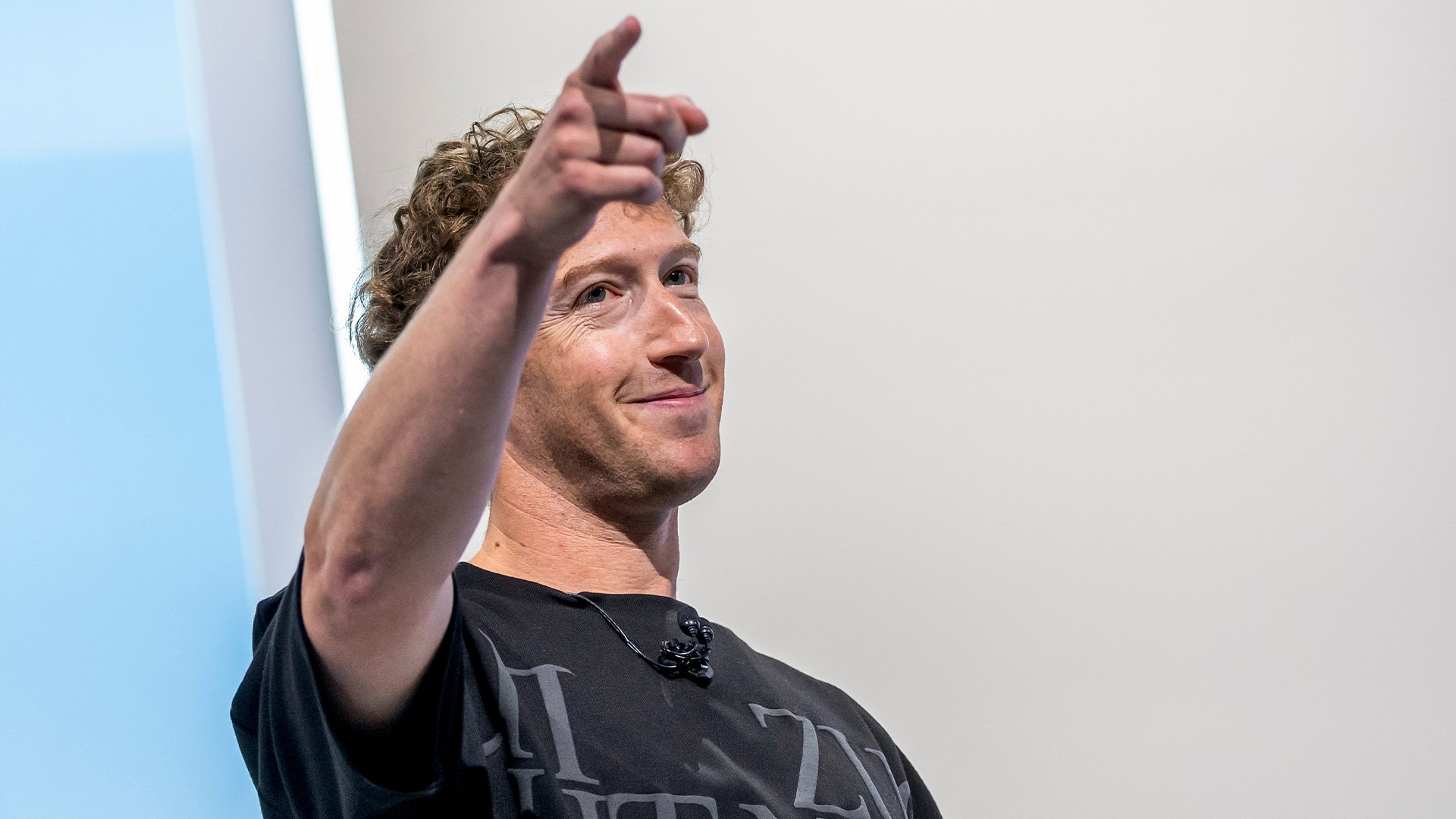

Meta’s chaotic AI strategy shows the company has ‘squandered its edge and is scrambling to keep pace’

Meta’s chaotic AI strategy shows the company has ‘squandered its edge and is scrambling to keep pace’Analysis Does Meta know where it's going with AI? Talent poaching, rabid investment, and now another rumored overhaul of its AI strategy suggests the tech giant is floundering.