Meta’s Code Llama AI coding tool just got a big performance boost

New versions of the generative AI coding tool arrive, supporting Python, C++, Java, PHP and more

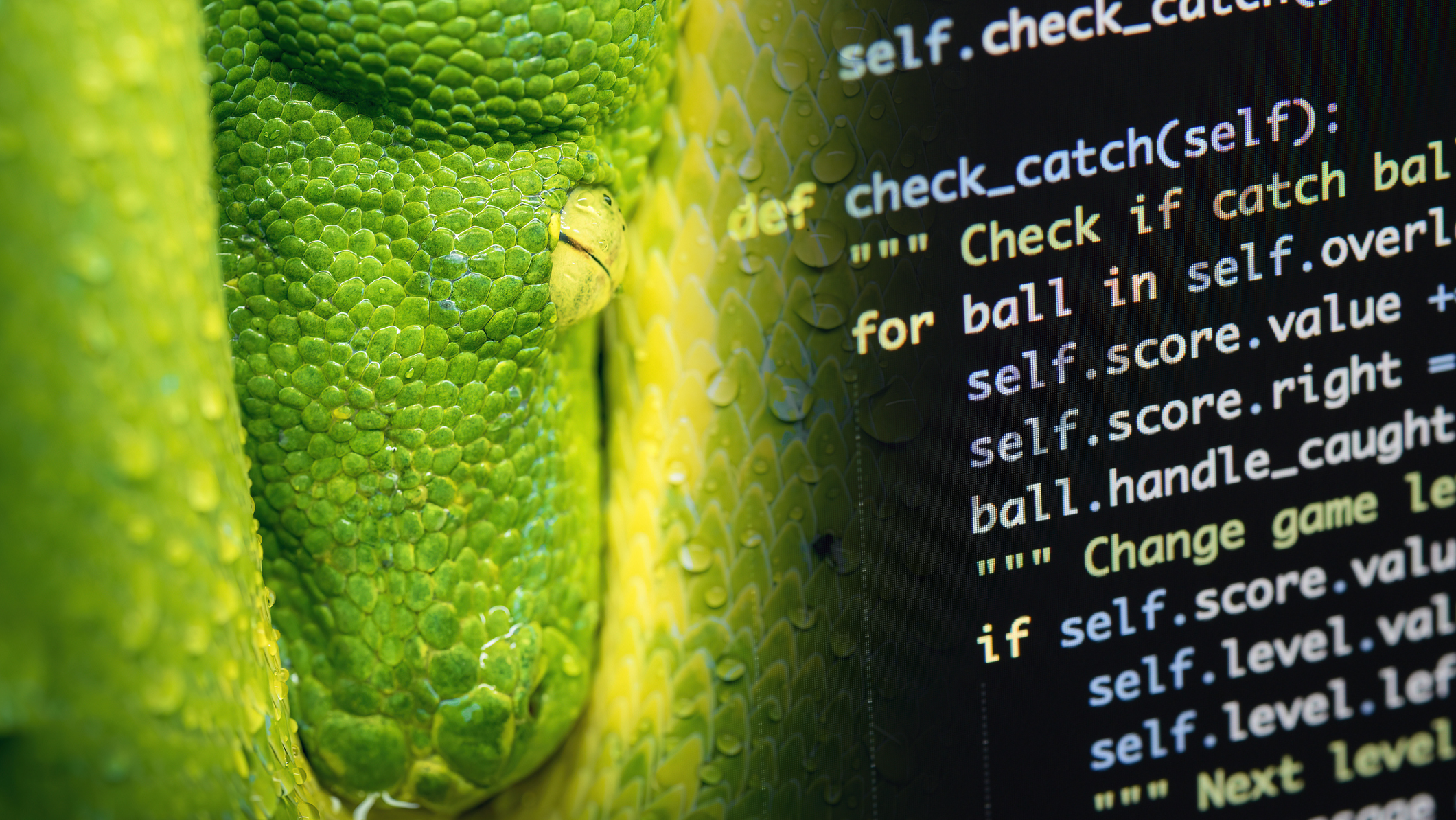

Meta has released new versions of its code-writing generative AI tool Code Llama that can help developers work more quickly and efficiently.

Code Llama is a large language model (LLM) that can use text prompts to generate code. By using generative AI tools like Code Llama, developers should be able to write better code more quickly, while these tools can also lower the barrier to entry for people who are learning to code.

Meta released the original versions of Code Llama in August last year in three sizes, with 7B, 13B, and 34B parameters. The new offerings take it up to 70B – 70 billion parameters – with Code Llama 70B being the largest and highest-performing LLM in the group so far.

Code Llama 70B is now available in three versions, all of which are open source and thus free for research and commercial use. The new versions are:

- Code Llama – 70B, the foundational code model

- Code Llama – 70B – Python, specialized for Python

- Code Llama – 70B – Instruct, which is fine-tuned for understanding natural language instructions

“Writing and editing code has emerged as one of the most important uses of AI models today,” said Meta CEO Mark Zuckerberg on Facebook, announcing the updated models. Developers can request access to the models from Meta.

Code Llama is a code-specialized version of Meta’s open source Llama 2 foundational general purpose LLM, created by training Llama 2 further on code-specific datasets. That means Code Llama can generate code, and text about code, from both code and natural language prompts. For example, you could ask it to ‘Write a function that outputs the Fibonacci sequence’.
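As a rough illustration of what that Fibonacci prompt is asking for, here is a minimal hand-written Python sketch. It is not actual Code Llama output; the function name `fibonacci` and the choice to return the first `n` terms as a list are assumptions made for the example.

```python
def fibonacci(n):
    """Return the first n Fibonacci numbers as a list, starting from 0."""
    sequence = []
    a, b = 0, 1
    for _ in range(n):
        sequence.append(a)
        # Advance the pair: the next term is the sum of the previous two.
        a, b = b, a + b
    return sequence


if __name__ == "__main__":
    print(fibonacci(10))  # [0, 1, 1, 2, 3, 5, 8, 13, 21, 34]
```

A model's real answer to the same prompt could reasonably differ, for instance by printing the terms directly or by using a generator instead of a list.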

It can also be used for code completion and debugging, and supports some of the most popular languages in use today, including Python, C++, Java, PHP, TypeScript (JavaScript), C#, and Bash.

'Code Llama – Python' is a language-specialized variation of Code Llama. Meta said that because Python is the most benchmarked language for code generation – and because Python and PyTorch play an important role in the AI community – a specialized model would be particularly useful to developers.

'Code Llama - Instruct' is a second variation of Code Llama that has been further trained on “natural language instruction” inputs paired with the expected outputs, which makes it better at understanding what humans expect from their prompts. Because this version has been fine-tuned to generate “helpful and safe” answers, Meta recommends using Code Llama - Instruct variants whenever using Code Llama for code generation.

Meta said it didn’t recommend using Code Llama or 'Code Llama - Python' to perform general natural language tasks since neither of these models is designed to follow natural language instructions.

“Code Llama is specialized for code-specific tasks and isn’t appropriate as a foundation model for other tasks,” it said.

“Programmers are already using LLMs to assist in a variety of tasks, ranging from writing new software to debugging existing code. The goal is to make developer workflows more efficient, so they can focus on the most human-centric aspects of their job, rather than repetitive tasks,” Meta said when it first launched the tools.

Not all AI models used for coding are open source, but Meta said LLMs for coding benefit from an open approach, both in terms of innovation and safety. “Publicly available, code-specific models can facilitate the development of new technologies that improve people's lives,” it said.

Meta said that in its own benchmark testing, Code Llama outperformed state-of-the-art publicly available LLMs on code tasks.

Certainly, looking at the updated benchmark data Meta has included, 'Code Llama – Instruct 70B' and 'Code Llama – Python 70B' do seem to perform very well against the rivals listed. However, the race to build the highest-performing LLM is a tough one, with each of the big players constantly iterating.

Meta’s LLMs are far from the only option for developers looking to give their code an artificial intelligence boost. Amazon offers its CodeWhisperer free for individual developers, while GitHub Copilot, powered by generative AI models developed by GitHub, OpenAI, and Microsoft, is probably the best known, and claims to make developers up to 55% more efficient at coding.

Steve Ranger is an award-winning reporter and editor who writes about technology and business. Previously he was the editorial director at ZDNET and the editor of silicon.com.
