Everything you need to know about Microsoft Copilot Studio

The new Microsoft Copilot Studio will allow customers to create their own AI assistants

Rory Bathgate

Microsoft has unveiled Copilot Studio, a new platform that enables Microsoft 365 customers to build their own artificial intelligence (AI) “copilot” assistants.

The company confirmed the platform's launch alongside a raft of other announcements at its Ignite 2023 conference on 15 November, as it continues to expand its AI offerings across its core product ranges.

Copilot Studio will enable users to “quickly build, test, and publish standalone copilots and custom GPTs”, the company said.

“Copilot Studio exposes a full end-to-end lifecycle for customizations and standalone copilots within a single pane of glass — you can build, deploy, analyze, and manage all from within the same web experience,” said Jared Spataro, head of modern work and business applications at Microsoft.

“And since it’s a software as a service (SaaS), everything you create is live instantly.”

The launch of Microsoft Copilot Studio follows OpenAI's unveiling of a similar DIY platform last week: custom GPTs, which, like Copilot Studio, let users create their own tailored AI assistants without writing code. OpenAI has said it will distribute these through a forthcoming GPT Store.

What does Microsoft Copilot Studio do?

Copilot Studio will essentially act as a central development hub where Microsoft 365 users can design, test, and launch their own AI assistants tailored to individual business needs.

Microsoft has been keen to emphasize that these will be more than just handy chatbots for enterprises: users will be able to enter natural language prompts and questions and receive detailed answers to business-related queries.

For example, IT teams can create their own standalone copilots that are “role and function specific”. Microsoft said this could take the form of an IT support copilot to help staff with day-to-day administrative tasks, or a copilot to help sales teams respond to requests for proposal (RFPs) more efficiently.

Copilot Studio will also provide what Microsoft described as a “drag and drop low-code approach” to make the process of creating custom copilots easier for users of all skill levels.

“You can use the low-code graphical interface or natural language to build your copilot — and Copilot Studio will help you iteratively refine the conversation design,” Spataro said.

“The product offers a host of features to streamline solution development, including collaborative commenting, graphical multi-authoring, and side-by-side coding views.”

Copilot Studio safety features

Safety concerns surrounding the use of generative AI tools have been a recurring theme in recent months, with enterprises worried about the potential for data leakage and the exposure of sensitive company information.

Microsoft said Copilot Studio will come with built-in governance and control features aimed at mitigating such risks.

These features will enable IT teams to “centrally monitor usage and analytics” from a single control pane, the company said. The integrated admin center will also provide complete visibility of Copilot for Microsoft 365 customizations, as well as standalone copilots developed internally, it added.

“IT has full visibility and control across the lifecycle with the built-in analytics dashboard,” Spataro said. “And admins can control maker and user access, secure data using company-specific policies, and manage environments all within the admin center.”

Copilots your way

With the release of Copilot Studio, Microsoft customers now have access to an ever-wider range of AI assistants. Joining the likes of GitHub Copilot Enterprise, Copilot Studio will be of particular interest to business users looking to use generative AI to boost their productivity.

Right now, the precise lines separating each Copilot offering are clear. But by allowing business users to produce their own ‘copilots’ for specific workloads, grounded in their own data and customized using natural language, Microsoft is giving users the ability to iterate endlessly on the base functions of the Copilot range and, eventually, to automate almost anything in their business.

There’s no doubt Microsoft has real confidence in its ability to deliver hit after hit with the Copilot lineup, and that it sees its strength as offering customers flexibility over the data, security management, and fine-grained processes of their Copilot plugins.

Rory Bathgate is Features & Multimedia Editor at ITPro, leading our in-depth content and case studies. He can also be found co-hosting the ITPro Podcast with Jane McCallion, swapping a keyboard for a microphone to discuss the latest learnings with thought leaders from across the tech sector.

Where Amazon Bedrock emphasizes the breadth of first- and third-party models available to customers, Azure’s third-party offerings clearly play second fiddle to its flagship Copilot offering.

Microsoft is betting that, when it comes down to it, customers know best how to use their own data, and that this, rather than the depth of the models used, will be the deciding factor in success.

This is reflected in Microsoft’s decision to double down on AI at the hardware level too, with the announcement of two homegrown chips.

The Microsoft Azure Maia AI Accelerator and Microsoft Azure Cobalt CPU will be integrated throughout Microsoft data centers from 2024, and will be used to improve efficiency and provide crucial backing for AI workloads amid record demand for AI-capable hardware.

The Maia 100 chip is a real feather in Microsoft's cap, having been designed specifically for training large language models (LLMs) and running inference on them. It is likely the chip code-named ‘Athena’ during development, and it will help Microsoft meet its customers' growing inference demands on a par with Google Cloud and AWS, both of which already use in-house silicon.

Going in-house will likely bring huge savings for Microsoft down the road, with demand for Azure AI inference only set to grow as each new Copilot product is released and as customers are actively encouraged to assign tasks to custom copilots and GPTs.

In a LinkedIn post, Microsoft CEO Satya Nadella said Maia was built with a more rugged cooling unit to enable more efficient performance, which will also help cut the huge energy costs that have become part and parcel of establishing AI platforms.

Ross Kelly is ITPro's News & Analysis Editor, responsible for leading the brand's news output and in-depth reporting on the latest stories from across the business technology landscape. Ross was previously a Staff Writer, during which time he developed a keen interest in cyber security, business leadership, and emerging technologies.

He graduated from Edinburgh Napier University in 2016 with a BA (Hons) in Journalism, and joined ITPro in 2022 after four years working in technology conference research.

For news pitches, you can contact Ross at ross.kelly@futurenet.com, or on Twitter and LinkedIn.

-

Cyber experts issue warning over new phishing kit that proxies real login pages

Cyber experts issue warning over new phishing kit that proxies real login pagesNews The Starkiller package offers monthly framework updates and documentation, meaning no technical ability is needed

-

Microsoft hails advances in glass data storage

Microsoft hails advances in glass data storageNews Project Silica uses lasers to encode data into borosilicate glass, where it stays stable for thousands of years

-

Satya Nadella says “our multi-model approach goes beyond choice’ as Microsoft adds Claude AI models to 365 Copilot

Satya Nadella says “our multi-model approach goes beyond choice’ as Microsoft adds Claude AI models to 365 CopilotNews Users can choose between both OpenAI and Anthropic models in Microsoft 365 Copilot

-

Nasuni announces new Microsoft 365 Copilot integration

Nasuni announces new Microsoft 365 Copilot integrationNews Nasuni’s File Data Platform now includes integration with the Microsoft Graph Connector to enhance data access for Microsoft’s AI services

-

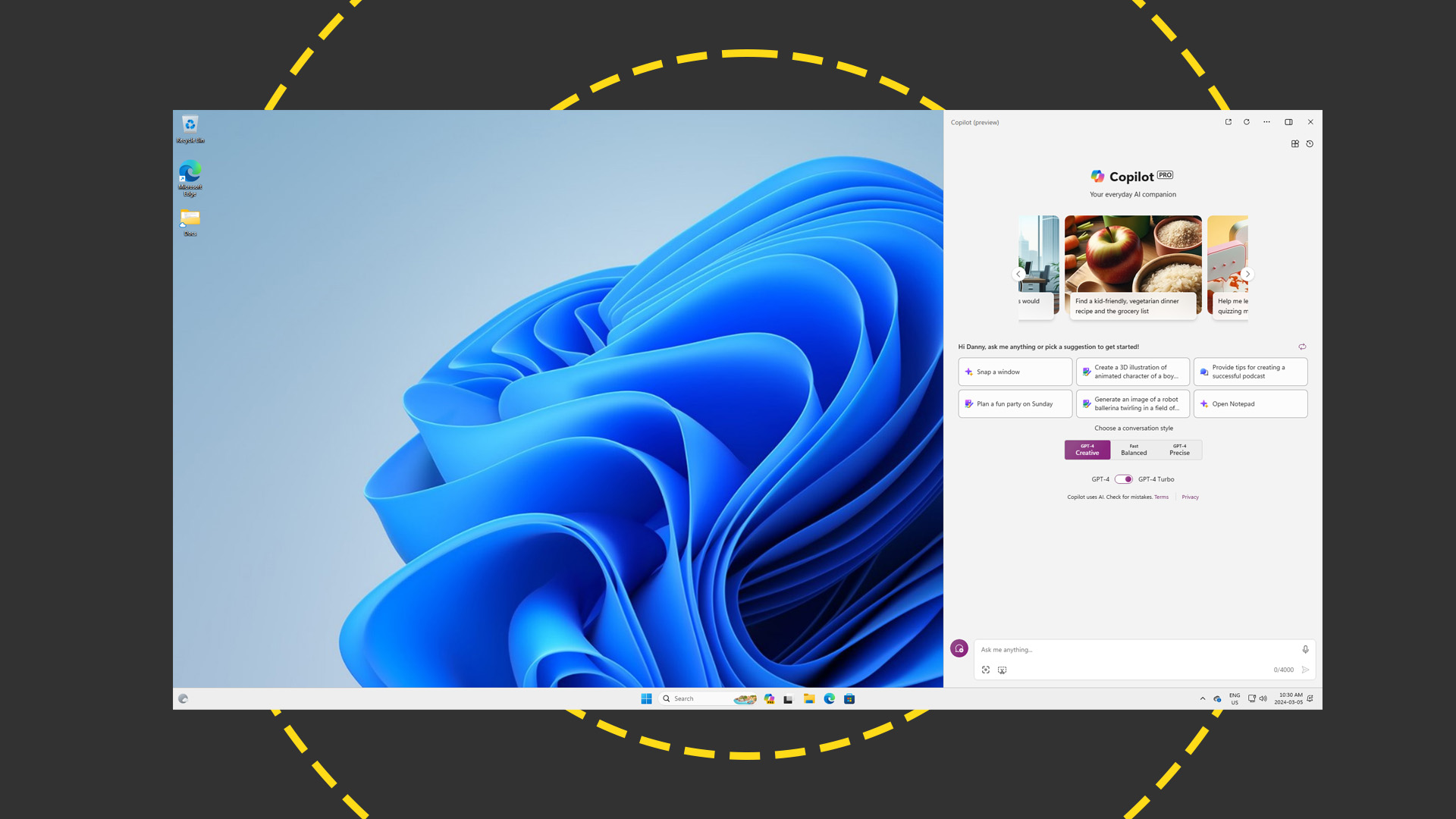

Microsoft Copilot review: AI baked into your apps

Microsoft Copilot review: AI baked into your appsReviews Microsoft already provides generative AI online – now it's putting it inside its operating systems and apps but the results are mixed

-

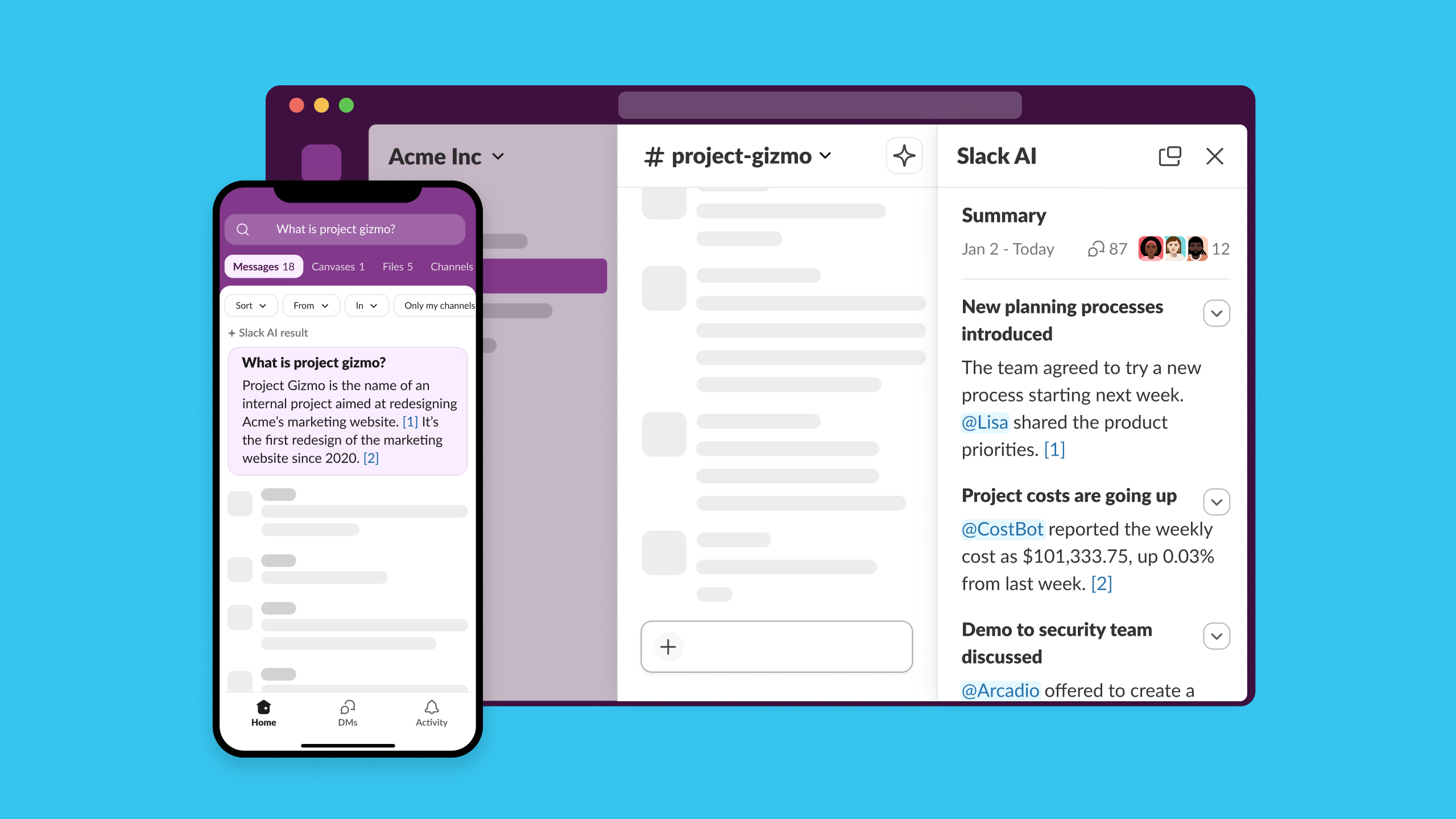

Slack AI: Everything you need to know about the platform's new features

Slack AI: Everything you need to know about the platform's new featuresNews New Slack AI features look to supercharge user productivity and collaboration as the firm targets intense competition with industry competitors

-

Duet AI vs Copilot: All the similarities and differences

Duet AI vs Copilot: All the similarities and differencesAnalysis Google has embedded generative AI across the entirety of its Workspace apps, but how does it compare to Copilot and what are its advantages?

-

Microsoft: 'People care more about AI's benefits than their job security'

Microsoft: 'People care more about AI's benefits than their job security'Microsoft’s 2023 Work Trend Index also showed workers have less focus time than ever, hindering innovation

-

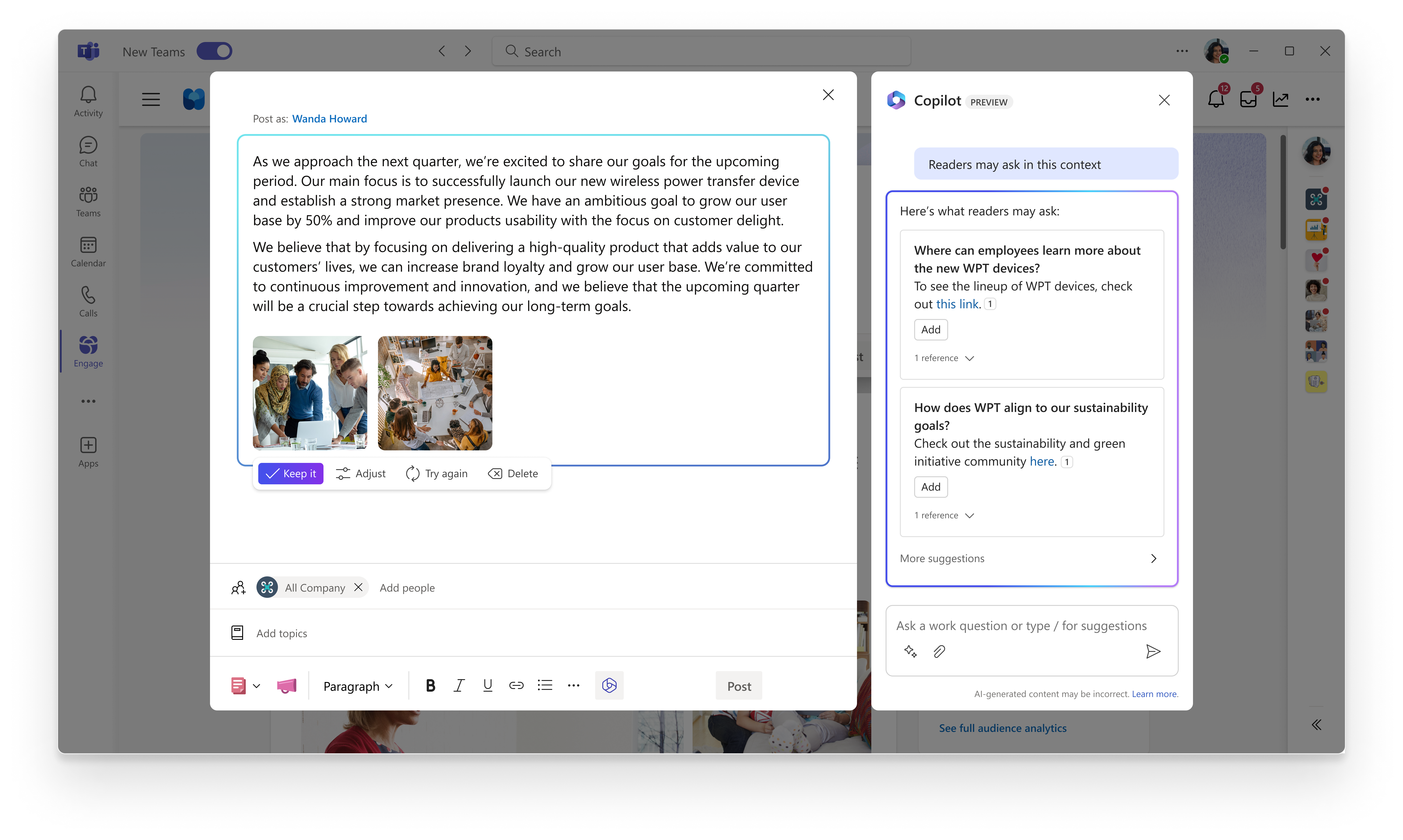

Microsoft Copilot for Viva brings AI to employee experience

Microsoft Copilot for Viva brings AI to employee experienceLeaders can make use of new analytical and generative AI tools to improve workforce engagement