Nvidia announces next-generation AI chip in preparation for trillion-parameter LLMs

The firm aims to future-proof its AI hardware lineup by providing solutions for models that don’t yet exist

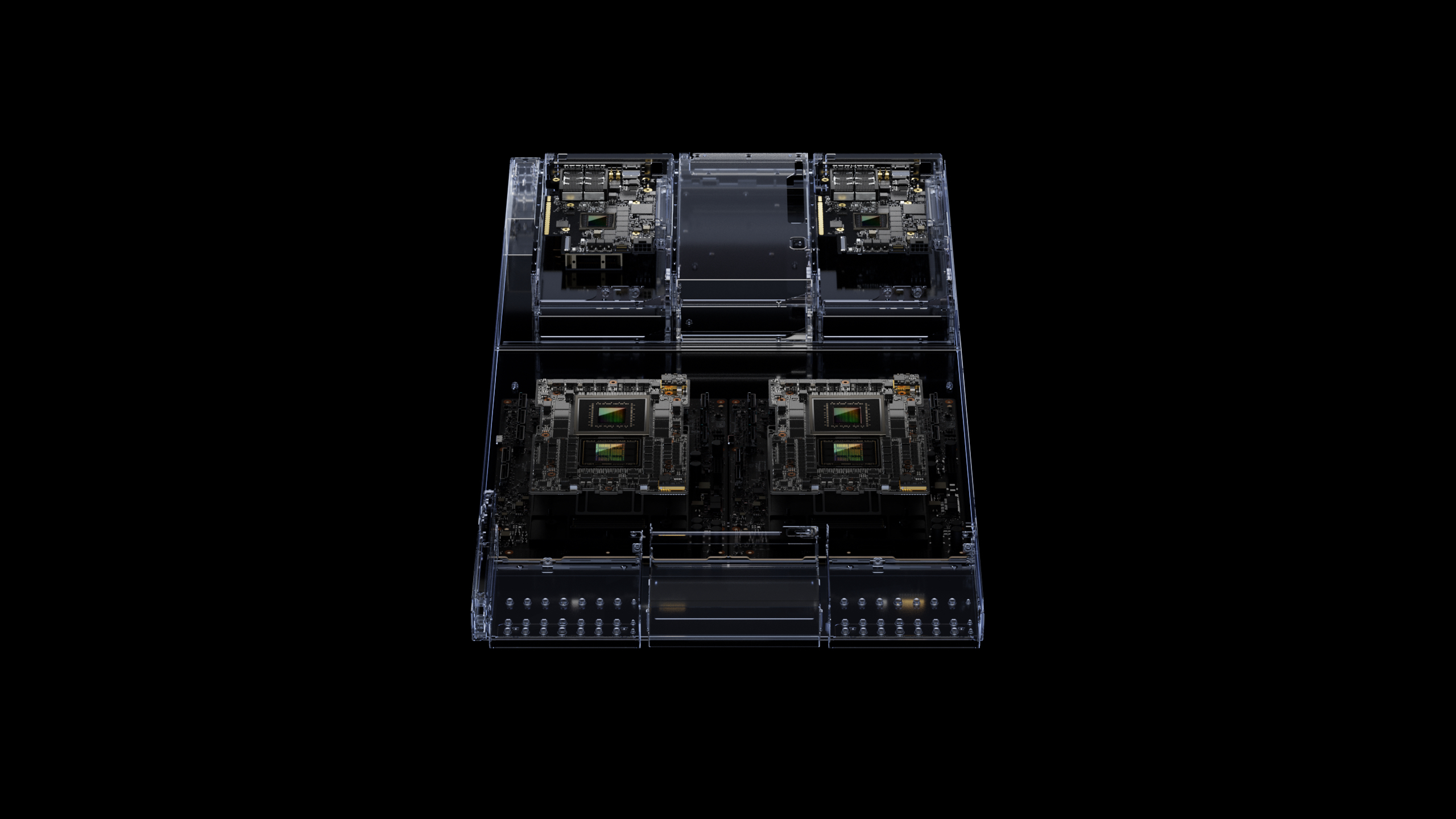

Nvidia has announced a new AI hardware platform powered by a next-generation chip, which it said will be able to handle the most complex generative AI workloads and complement high-performance servers.

The Nvidia GH200 Grace Hopper platform is built around a new chip equipped with HBM3e memory, which Nvidia said will deliver 3x the memory bandwidth of its current-generation chip.

Once available, it will be configurable to suit the needs of customers. In its dual configuration, in which two chips are paired, the hardware is capable of performing at eight petaflops, leveraging 144 Arm Neoverse cores and 282GB of HBM3e memory.

HBM3e is 50% faster than current-generation HBM3 memory, delivers a combined 10TB/sec of bandwidth in the dual configuration, and allows the platform to run models 3.5x larger than its predecessor could.

Firms seeking to train and fine-tune massive large language models (LLMs), which can be made up of hundreds of billions of parameters at present but could exceed a trillion parameters in the near future, will benefit from this increased on-chip performance.

This also means that AI models can be trained and run for inference on a more compact network, negating the need for expensive, large-scale supercomputers for contemporary AI training.
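To put those parameter counts in perspective, the rough sketch below estimates how much memory a model's weights alone would occupy and compares that with the 282GB of HBM3e in the dual configuration. It assumes 16-bit weights (two bytes per parameter) and ignores activations, optimizer state, and other overheads, so real requirements would be higher. The model sizes and function names are illustrative, not figures or tools from Nvidia.

```python
import math

# Assumption: weights are stored in 16-bit precision (2 bytes per parameter).
BYTES_PER_PARAM = 2
# From the article: HBM3e capacity of the dual-chip GH200 configuration.
DUAL_GH200_HBM3E_GB = 282


def weights_gb(num_params: int, bytes_per_param: int = BYTES_PER_PARAM) -> float:
    """Return the memory needed for the weights alone, in decimal gigabytes."""
    return num_params * bytes_per_param / 1e9


def platforms_needed(num_params: int) -> int:
    """Return how many dual-chip platforms would be needed to hold the weights."""
    return math.ceil(weights_gb(num_params) / DUAL_GH200_HBM3E_GB)


if __name__ == "__main__":
    # Hypothetical model sizes chosen purely for illustration.
    examples = [
        ("175 billion", 175_000_000_000),
        ("1 trillion", 1_000_000_000_000),
    ]
    for label, params in examples:
        print(
            f"{label} parameters: {weights_gb(params):,.0f} GB of weights, "
            f"at least {platforms_needed(params)} dual GH200 platform(s)"
        )
```

Under these assumptions, a trillion-parameter model needs about 2TB for its weights alone, which is why memory capacity and bandwidth feature so heavily in Nvidia's pitch.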

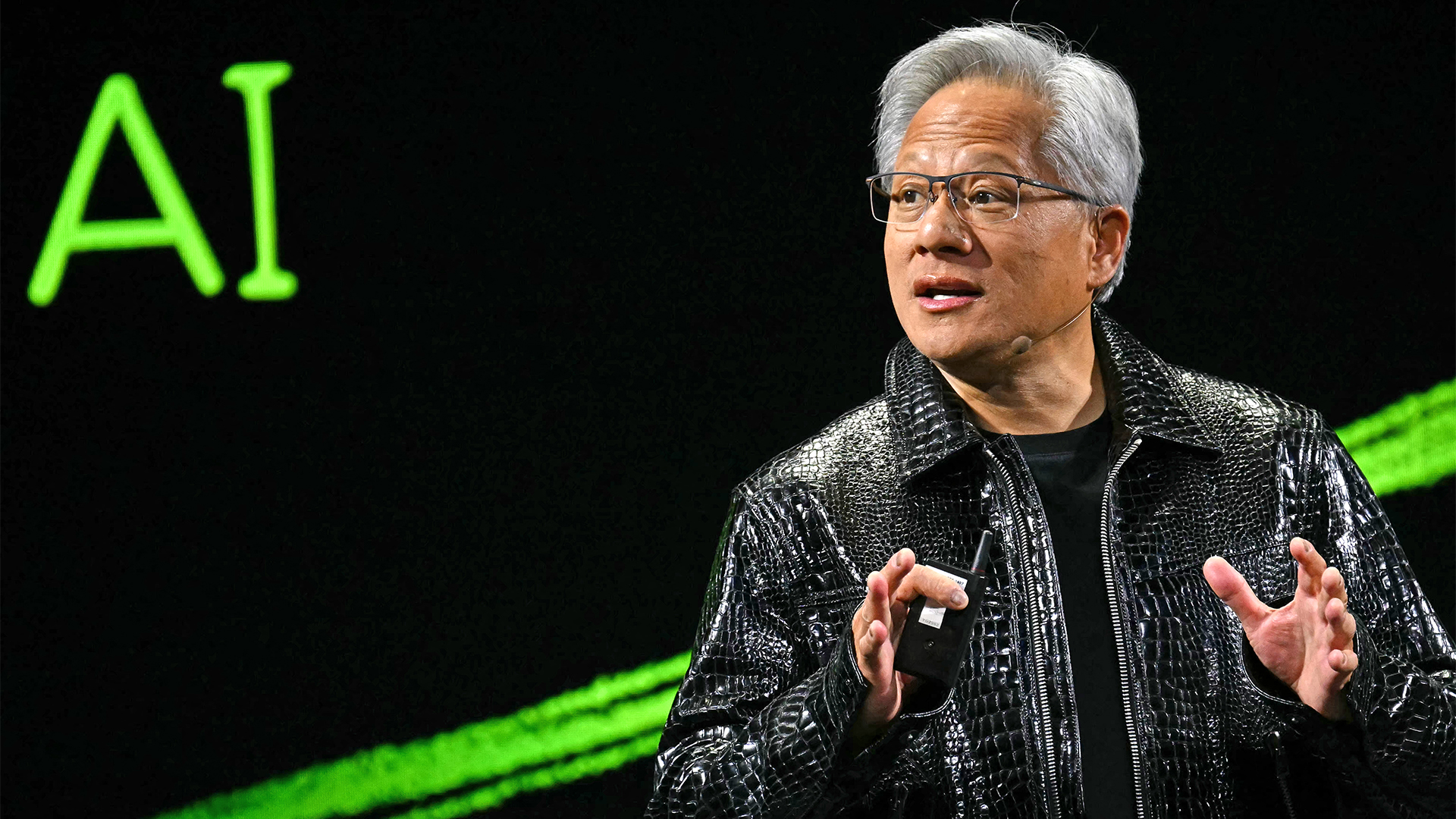

“The inference cost of large language models will drop significantly, because look how small this computer is and you could scale this out in the world's data centers because the servers are really really easy to scale out,” said Jensen Huang, founder and CEO of Nvidia, in the firm’s keynote at Siggraph 2023.


Huang has also stated the importance of delivering more powerful solutions for data centers in order to keep up with booming AI development.

“To meet surging demand for generative AI, data centers require accelerated computing platforms with specialized needs,” he said.


“The new GH200 Grace Hopper Superchip platform delivers this with exceptional memory technology and bandwidth to improve throughput, the ability to connect GPUs to aggregate performance without compromise, and a server design that can be easily deployed across the entire data center.”

First-generation GH200 Grace Hopper Superchips entered full production in May, aimed at hyperscalers and supercomputing with 900 GB/sec bandwidth. Systems derived from the new GH200 Grace Hopper platform are expected as soon as Q2 2024.

“While Nvidia’s announcement is ultimately a product refresh – it does the same thing the previous model did, but faster – it’s still early enough days for artificial intelligence that a product making AI training and inference faster, rather than easier, is vitally important,” James Sanders, principal analyst, cloud and infrastructure at CCS Insight, told ITPro.

“For years, it’s been good enough for computers to copy data in RAM dedicated to the CPU over to the GPU as needed, as the two chips did fundamentally different things in a workload. Artificial intelligence changed this, making the performance penalty for shuttling data back and forth too high.”

“As a result, high-speed coherent memory shared between the GPU and CPU is a significant performance improvement for AI workloads. Nvidia’s GH200 uses HBM3e, which is 50% faster, providing a boost to AI applications.”

Nvidia emphasized that the new platform is compatible with its MGX modular server designs, which could assist the uptake of the new hardware across a multitude of server layouts.

GH200s can also be connected via Nvidia NVLink technology to form a supercomputer configuration the firm calls the Nvidia DGX GH200.

This comprises 256 GH200 Grace Hopper Superchips, which together provide 144TB of shared memory to tackle future generative AI models such as trillion-parameter LLMs and huge deep learning algorithms.
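As a quick sanity check on those figures, the sketch below divides the 144TB of shared memory evenly across the 256 superchips. The even split and the choice between binary (1TB = 1,024GB) and decimal (1TB = 1,000GB) units are assumptions made for illustration, not details stated in the announcement.

```python
# Figures quoted in the article.
SUPERCHIPS = 256
SHARED_MEMORY_TB = 144


def memory_per_superchip_gb(total_tb: float, chips: int, gb_per_tb: int) -> float:
    """Return each chip's share of memory in GB, assuming an even split."""
    return total_tb * gb_per_tb / chips


if __name__ == "__main__":
    # Assumption: the unit convention is not stated, so both are shown.
    binary = memory_per_superchip_gb(SHARED_MEMORY_TB, SUPERCHIPS, 1024)
    decimal = memory_per_superchip_gb(SHARED_MEMORY_TB, SUPERCHIPS, 1000)
    print(f"Per superchip (1TB = 1,024GB): {binary:.1f} GB")
    print(f"Per superchip (1TB = 1,000GB): {decimal:.1f} GB")
```

Either way, each superchip contributes several hundred gigabytes to the pool, far more than the HBM attached to the GPU alone, which suggests the total also counts the CPU-side memory.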

In recent years Nvidia has cemented its place as a provider of choice for AI hardware and infrastructure.

Leaning on its expertise as a world-renowned designer of graphics processing units (GPUs), the firm has released a lineup of chips that were purpose-built for high-performance workloads such as data analytics and training machine learning (ML) and generative AI models.

Nvidia AI architectures aimed at HPC and data centers include Grace, Hopper, Ada Lovelace, and BlueField, and span the gamut of central processing units (CPUs), GPUs, and data processing units (DPUs).

Its Spectrum-X Ethernet platform also aims to boost the efficiency of Ethernet networks within data centers, to improve the speed of processing data and running AI workloads in the cloud.

Hopper chips include the H100, which formed the backbone of the supercomputer Microsoft built to train OpenAI’s GPT-4 and has been used for cloud computing by Amazon Web Services (AWS), Google Cloud, and Microsoft Azure.

A number of large tech firms have also partnered with Nvidia to provide their customers with a platform for custom-built generative AI solutions.

In July, Dell announced a collaboration with Nvidia on generative AI, which will make use of Nvidia’s NeMo end-to-end AI framework to provide customers with pre-built, industry-specific models that can be deployed on a business’s own premises.

Snowflake and Nvidia have also announced a partnership, which will see Nvidia NeMo used to help organizations build generative AI solutions that interface with their proprietary data stored in Snowflake’s Data Cloud.

Rory Bathgate is Features and Multimedia Editor at ITPro, overseeing all in-depth content and case studies. He can also be found co-hosting the ITPro Podcast with Jane McCallion, swapping a keyboard for a microphone to discuss the latest learnings with thought leaders from across the tech sector.

In his free time, Rory enjoys photography, video editing, and good science fiction. After graduating from the University of Kent with a BA in English and American Literature, Rory undertook an MA in Eighteenth-Century Studies at King’s College London. He joined ITPro in 2022 as a graduate, following four years in student journalism. You can contact Rory at rory.bathgate@futurenet.com or on LinkedIn.
