OpenAI's Sam Altman: Hallucinations are part of the “magic” of generative AI

The OpenAI chief said there is value to be gleaned from hallucinations

AI hallucinations are a fundamental part of the “magic” of systems such as ChatGPT which users have come to enjoy, according to OpenAI CEO Sam Altman.

Altman’s comments came during a fireside chat with Marc Benioff, CEO of Salesforce, at Dreamforce 2023 in San Francisco, in which the pair discussed the current state of generative AI and Altman’s future plans.

When asked by Benioff about how OpenAI is approaching the technical challenges posed by hallucinations, Altman said there is value to be gleaned from them.

“One of the sort of non-obvious things is that a lot of value from these systems is heavily related to the fact that they do hallucinate,” he told Benioff. “If you want to look something up in a database, we already have good stuff for that.

“But the fact that these AI systems can come up with new ideas and be creative, that’s a lot of the power. Now, you want them to be creative when you want, and factual when you want. That’s what we’re working on.”

Altman went on to claim that restricting platforms to generating content only when they’re absolutely sure would be “naive” and run counter to the fundamental nature of the systems in question.

“If you just do the naive thing and say ‘never say anything that you’re not 100% sure about’, you can get them all to do that. But it won’t have the magic that people like so much.”

The topic of hallucinations, whereby an AI asserts or frames incorrect information as factually correct, has been a recurring talking point over the last year amid the surge in generative AI tools globally.

It’s a pressing topic given the propensity for some systems to frame false information as fact at a time when misinformation remains a contentious and highly sensitive subject.

The issue of hallucinations has even led to OpenAI being sued in the last year, with a US radio host launching a defamation lawsuit against the tech giant after ChatGPT falsely claimed that he had embezzled funds.

Similarly, Google was left red-faced during its highly publicized launch event for Bard when the chatbot produced an incorrect answer to a question posed to it.

Google shrugged the incident off as an example of why testing - or the quality of testing - is a critical factor in the development and learning process for generative AI models.

Industry stakeholders and critics alike have raised repeated concerns about AI hallucinations of late, with Marc Benioff describing the term as a buzzword for “lies” during a keynote speech at the annual conference.

“I don’t call them hallucinations, I call them lies,” he told attendees.

OpenAI is by no means disregarding the severity of the situation, however. In June, the firm published details of a new training process that it said will improve the accuracy and transparency of AI models.

The company used “process supervision” techniques to train a model for solving mathematical problems, it explained in a blog post at the time. This method rewards systems for each individual accurate step taken while generating an answer to a query.

OpenAI said the technique will help train models that produce fewer confidently incorrect answers.
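As a rough illustration of the idea of rewarding each step rather than only the final answer, the sketch below contrasts the two reward schemes on a toy example. It is not OpenAI's implementation: the function names, the 0/1 reward values, and the four-step solution are all hypothetical and chosen only to make the difference visible.

```python
from typing import List


def outcome_reward(final_correct: bool) -> List[float]:
    """Outcome-style feedback: a single reward based only on the final answer."""
    return [1.0 if final_correct else 0.0]


def process_reward(step_correct: List[bool]) -> List[float]:
    """Process-style feedback: one reward for every individual reasoning step."""
    return [1.0 if ok else 0.0 for ok in step_correct]


# Hypothetical four-step solution to a math problem in which step 3 is wrong,
# so the final answer is wrong as well.
steps = [True, True, False, True]

print(outcome_reward(final_correct=False))  # one signal for the whole answer
print(process_reward(steps))                # one signal per step
```

In this toy case the outcome-style signal only says the answer was wrong, while the per-step signal also shows where the reasoning went astray.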

Ross Kelly is ITPro's News & Analysis Editor, responsible for leading the brand's news output and in-depth reporting on the latest stories from across the business technology landscape. Ross was previously a Staff Writer, during which time he developed a keen interest in cyber security, business leadership, and emerging technologies.

He graduated from Edinburgh Napier University in 2016 with a BA (Hons) in Journalism, and joined ITPro in 2022 after four years working in technology conference research.

For news pitches, you can contact Ross at ross.kelly@futurenet.com, or on Twitter and LinkedIn.

-

Pulsant unveils high-density data center in Milton Keynes

Pulsant unveils high-density data center in Milton KeynesNews The company is touting ultra-low latency, international connectivity, and UK sovereign compute power to tempt customers out of London

-

Anthropic Labs chief claims 'Claude is now writing Claude'

Anthropic Labs chief claims 'Claude is now writing Claude'News Internal teams at Anthropic are supercharging production and shoring up code security with Claude, claims executive

-

OpenAI's Codex app is now available on macOS – and it’s free for some ChatGPT users for a limited time

OpenAI's Codex app is now available on macOS – and it’s free for some ChatGPT users for a limited timeNews OpenAI has rolled out the macOS app to help developers make more use of Codex in their work

-

Amazon’s rumored OpenAI investment points to a “lack of confidence” in Nova model range

Amazon’s rumored OpenAI investment points to a “lack of confidence” in Nova model rangeNews The hyperscaler is among a number of firms targeting investment in the company

-

OpenAI admits 'losing access to GPT‑4o will feel frustrating' for users – the company is pushing ahead with retirement plans anway

OpenAI admits 'losing access to GPT‑4o will feel frustrating' for users – the company is pushing ahead with retirement plans anwayNews OpenAI has confirmed plans to retire its popular GPT-4o model in February, citing increased uptake of its newer GPT-5 model range.

-

‘In the model race, it still trails’: Meta’s huge AI spending plans show it’s struggling to keep pace with OpenAI and Google – Mark Zuckerberg thinks the launch of agents that ‘really work’ will be the key

‘In the model race, it still trails’: Meta’s huge AI spending plans show it’s struggling to keep pace with OpenAI and Google – Mark Zuckerberg thinks the launch of agents that ‘really work’ will be the keyNews Meta CEO Mark Zuckerberg promises new models this year "will be good" as the tech giant looks to catch up in the AI race

-

DeepSeek rocked Silicon Valley in January 2025 – one year on it looks set to shake things up again with a powerful new model release

DeepSeek rocked Silicon Valley in January 2025 – one year on it looks set to shake things up again with a powerful new model releaseAnalysis The Chinese AI company sent Silicon Valley into meltdown last year and it could rock the boat again with an upcoming model

-

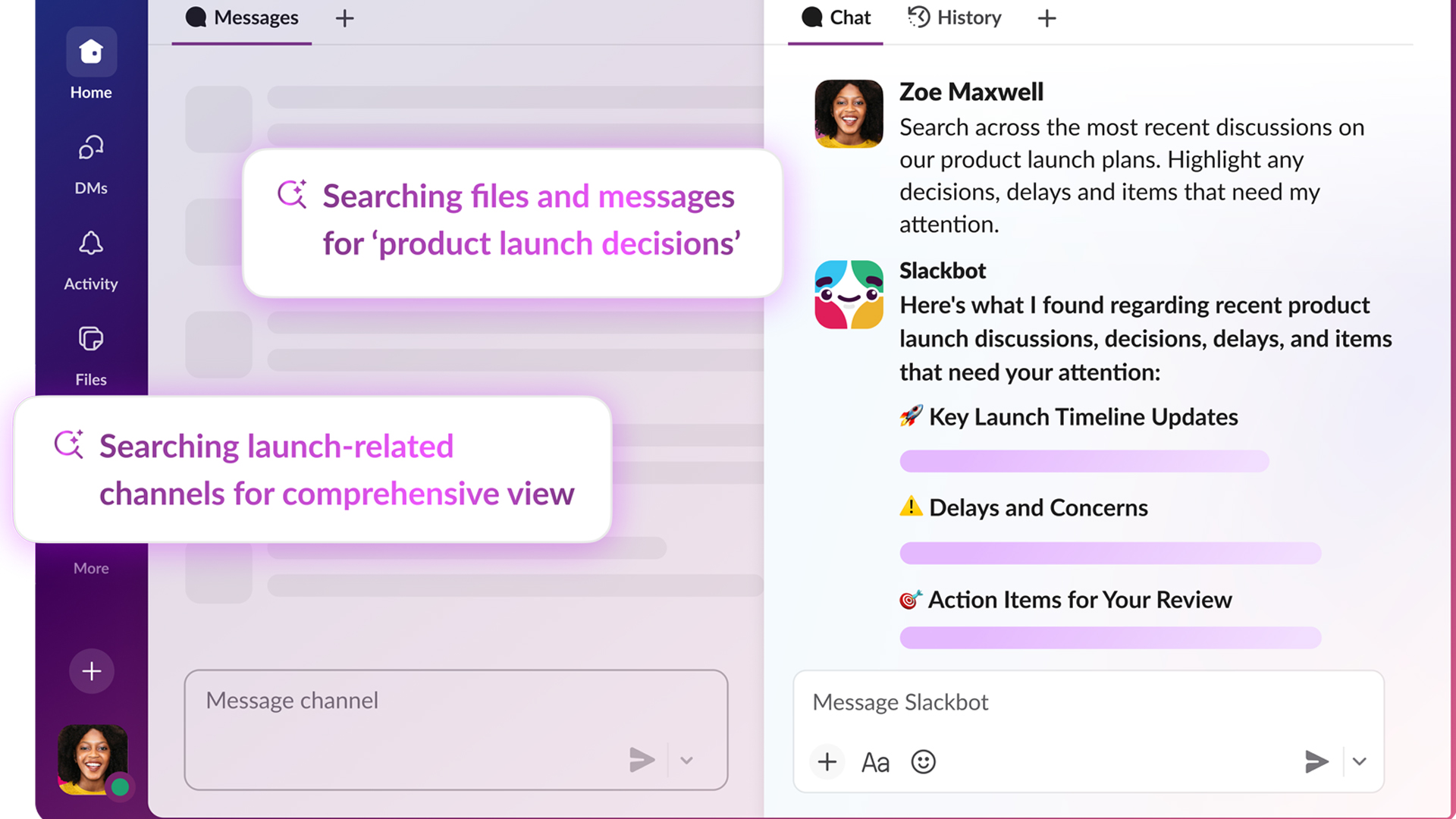

The AI-enabled Slackbot is now generally available – Salesforce says it could save more than a day’s work per week

The AI-enabled Slackbot is now generally available – Salesforce says it could save more than a day’s work per weekNews With an entirely overhauled model behind the chatbot, users can summarize channels and ask for highly personalized information

-

OpenAI says prompt injection attacks are a serious threat for AI browsers – and it’s a problem that’s ‘unlikely to ever be fully solved'

OpenAI says prompt injection attacks are a serious threat for AI browsers – and it’s a problem that’s ‘unlikely to ever be fully solved'News OpenAI details efforts to protect ChatGPT Atlas against prompt injection attacks

-

OpenAI says GPT-5.2-Codex is its ‘most advanced agentic coding model yet’ – here’s what developers and cyber teams can expect

OpenAI says GPT-5.2-Codex is its ‘most advanced agentic coding model yet’ – here’s what developers and cyber teams can expectNews GPT-5.2 Codex is available immediately for paid ChatGPT users and API access will be rolled out in “coming weeks”