Snowflake unveils Arctic, an enterprise-grade LLM

Snowflake claims it is the most open model available, and teases that this is just the beginning

Data cloud specialist Snowflake has launched Arctic, which it claims is the most open enterprise-grade large language model (LLM) available.

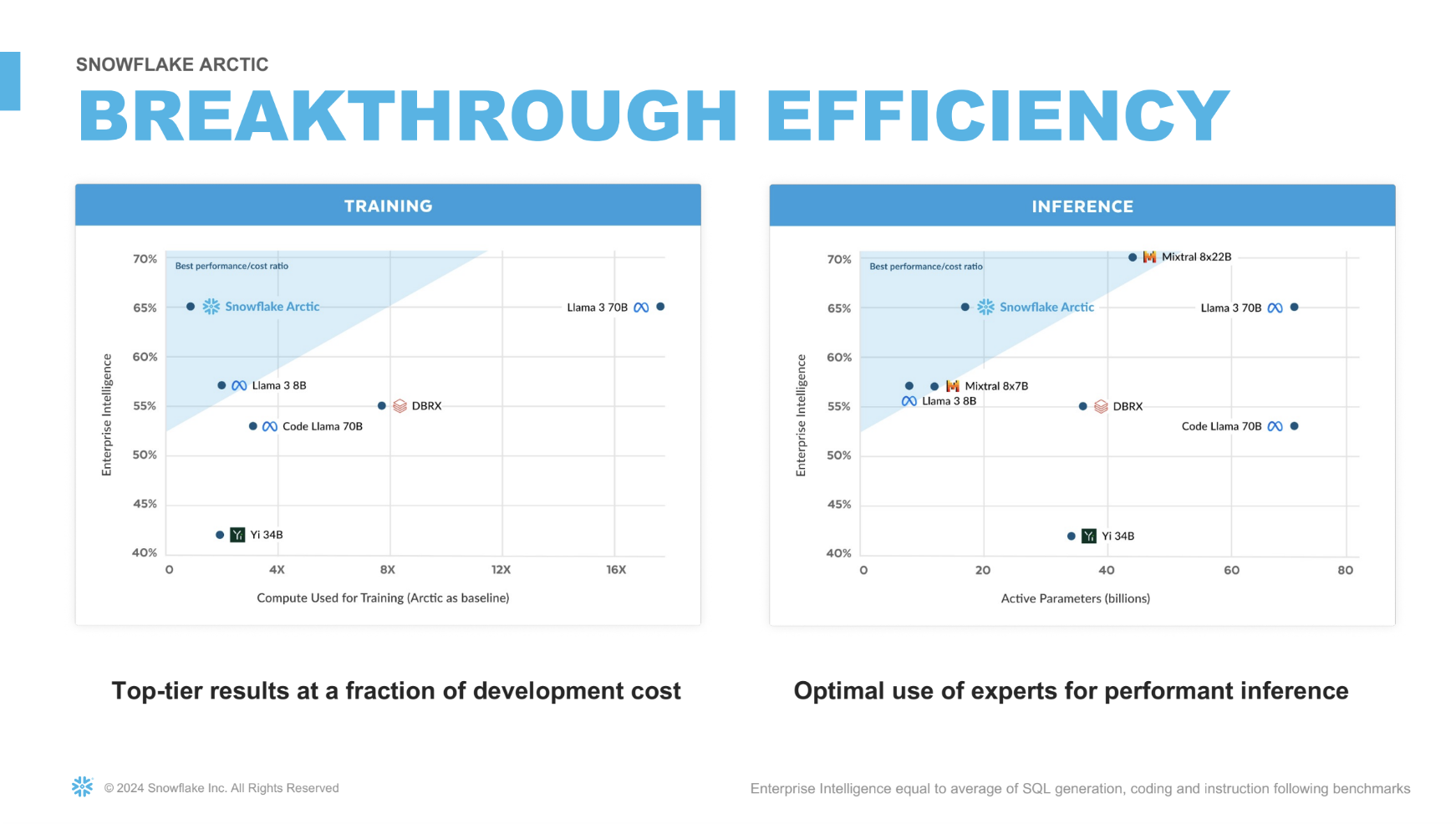

What’s more, the company claims Arctic delivers extremely high levels of intelligence in a cost- and resource-efficient way. Indeed, the AI research team that built the LLM did so in less than three months, while spending around one-eighth of the training costs normally associated with similar models.

Snowflake hopes customers can benefit from similar cost and time efficiencies.

“There’s no AI strategy without a data strategy. And good data is the fuel for AI. We think Snowflake is the most important enterprise AI company on the planet because we are the data foundation. We think the house of AI is going to be built on top of the data foundation that we are creating,” said Snowflake CEO Sridhar Ramaswamy.

“Snowflake’s mission as a company has always been to eliminate complexity so our customers can focus on the future of their business and stay ahead. We think that simplicity is the ultimate sophistication. We’ve been leaders in this space of data now for many years and we are bringing that same mentality to AI.”

Citing his experience at Google, Ramaswamy said that search came to be dominated by a single player because it came along and created something better than what was already out there. But, he claimed, “AI is going the other way. It is rapidly decentralizing. I think this is a good thing for all of us. What this means in practice is that simply creating or using someone else’s foundation model is not going to be a value differentiator. It never was.”

“It’s the product that matters, not just the ability. Very quickly at Snowflake we realized that models are nice, they let people do things they have not been able to do before. But what people want are end-to-end products.”


Snowflake isn't jumping on the bandwagon

Ramaswamy was quick to dismiss suggestions that the launch is merely a positioning exercise, and instead promised that more innovation of this kind, arriving at an accelerating pace, is on the horizon.

“This is not a positioning exercise. I genuinely think of AI as a transformational technology. I know people are sick of hearing that from tech leaders, but I think of this as a technology that is perhaps even more impactful than mobile. And we all know how much impact that had on humanity,” he said.

“AI is an important overlay layer that accelerates the value our customers are going to get from their data investments. That’s the big deal. A natural consequence of this is people are going to realize they can do so much more with Snowflake and AI and data.

“That comes from a relentless focus on what our customers want and where they are going. It would have been easy for us to just say that we will wait for some open source model and just use it. Instead, we are making a foundational investment because we think these investments are going to unlock more value for our customers.”


Many current AI products hallucinate, and their creators often aren’t transparent about how often they fail, which just isn’t acceptable for enterprises, according to Ramaswamy.

“We think the future of AI should be easy, efficient, but most of all trusted and reliable. That’s where we come in,” he said.

“Snowflake Arctic is a huge milestone for AI innovation and a big step forward for enterprise-grade open LLMs. It is a big moment for Snowflake… The pace of innovation and how quickly we are bringing this to market is truly, truly remarkable even by the lightning-quick standards of AI.

“I think that this is going to be the foundation that lets us, Snowflake, and our customers build enterprise-grade products and actually begin to realize the promise and value of AI.”

The company has close to 10,000 customers, including the likes of AT&T, Capital One, Disney, and Fox, which use its existing platform to “run their business and get ahead”, according to Ramaswamy.

Three USPs

Baris Gultekin, Snowflake's Head of AI, added that Arctic has three key differentiators:

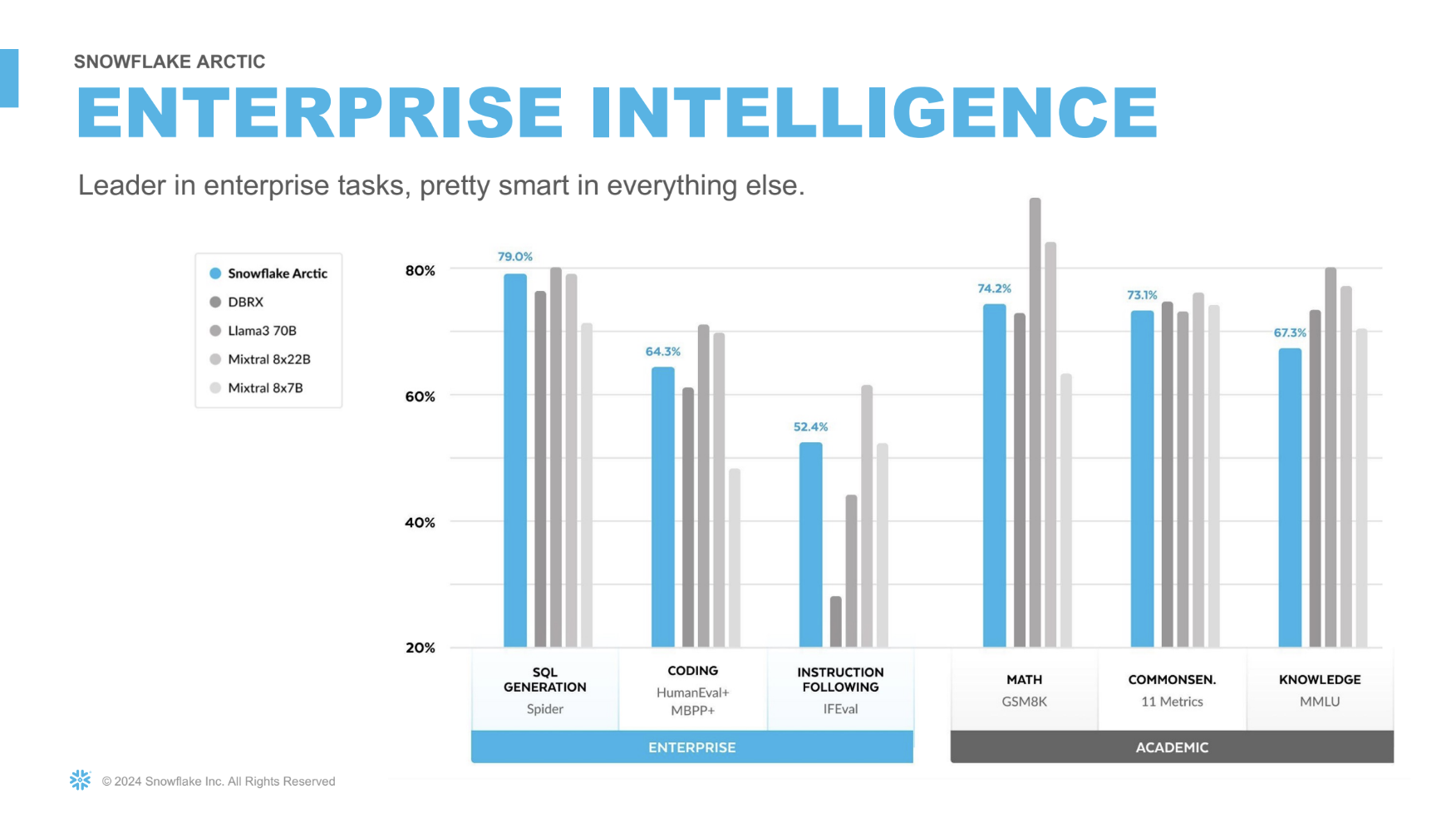

During a press conference earlier this week, Snowflake demoed Arctic and talked up its benefits in more detail. The firm said that the LLM is able to marry high-quality results with great efficiency, activating only 17 billion of its 480 billion parameters at any one time.

Snowflake claims this means Arctic activates roughly half as many parameters as Databricks' DBRX, and 75 percent fewer than Meta's Llama 3 70B, during inference or training.
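To put these efficiency figures in context, the short Python sketch below works through the arithmetic. Arctic's 17 billion active and 480 billion total parameters come from Snowflake's announcement; the DBRX figures (36 billion active of 132 billion total) and the treatment of Llama 3 70B as a dense model, where all 70 billion parameters are active, are publicly reported figures added here as assumptions rather than numbers from Snowflake's demo. The helper names are purely illustrative.

```python
# Back-of-the-envelope comparison of active parameters per token, in billions.
# Arctic: figures from Snowflake's announcement.
# DBRX and Llama 3 70B: publicly reported figures, added as assumptions.
MODELS = {
    "Arctic": {"total": 480, "active": 17},
    "DBRX": {"total": 132, "active": 36},
    "Llama 3 70B": {"total": 70, "active": 70},  # dense model: every parameter is active
}


def active_share(name: str) -> float:
    """Fraction of a model's total parameters that are used at any one time."""
    m = MODELS[name]
    return m["active"] / m["total"]


def reduction_vs(baseline: str, model: str = "Arctic") -> float:
    """Percentage fewer active parameters `model` uses compared with `baseline`."""
    return 100 * (1 - MODELS[model]["active"] / MODELS[baseline]["active"])


if __name__ == "__main__":
    print(f"Arctic active share: {active_share('Arctic'):.1%}")
    for base in ("DBRX", "Llama 3 70B"):
        print(f"Arctic vs {base}: {reduction_vs(base):.0f}% fewer active parameters")
```

Run as a script, it reports that Arctic uses about 3.5 percent of its parameters at a time, and roughly 53 percent and 76 percent fewer active parameters than DBRX and Llama 3 70B respectively, broadly in line with Snowflake's "half" and "75 percent" claims.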

“We’re actively working with our partners and community to further optimize this,” Gultekin added.

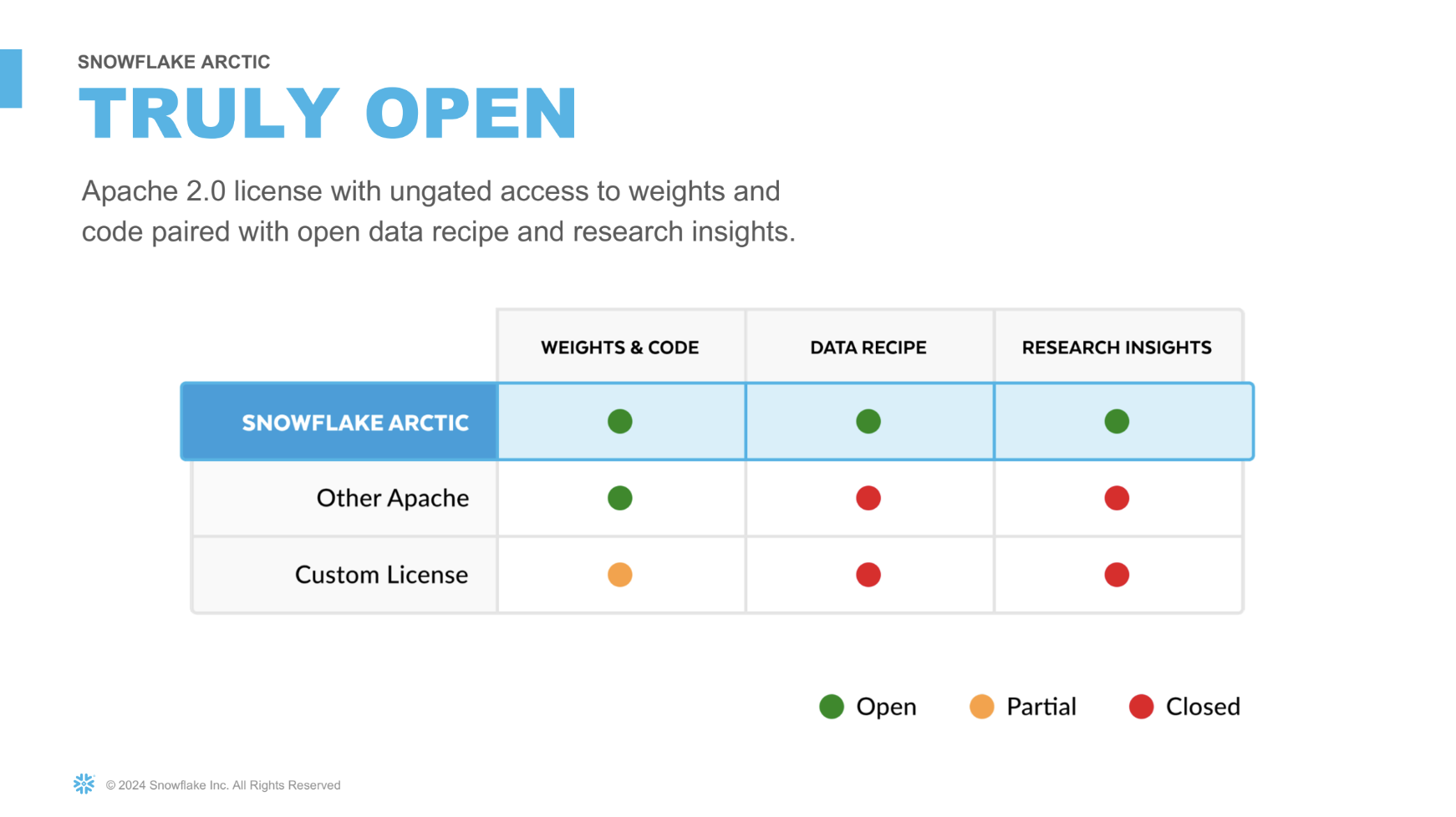

“Arctic is truly open… With this release we’re not just opening the model weights, we’re also sharing our research insights through comprehensive research cookbooks. The cookbook is designed to expedite the learning process for anyone looking into world-class MoE (mixture-of-experts) models. And it offers high-level insights as well as granular technical detail to craft LLMs like Arctic so that anyone can build their desired intelligence efficiently and economically.”

“To recap, Snowflake is setting a new baseline for how quickly and efficiently state-of-the-art open source models can be trained.”

More to come

Ramaswamy also teased that this launch is just the start of something bigger, and that there will be more to come at the company’s conference in San Francisco in early June.

“I am a very driven and impatient person. I believe in seizing opportunities. So the pace of innovation in what we do in AI will continue. [As will] the pace of innovation in other things we do… So there is a lot coming. We have this and many more launch announcements and customer testimonials coming up at our summit.

“We should think of this more as business as usual. If you look at the pace of stuff that is happening in AI, anyone that wants to be an A player here better figure out how to move quickly,” he said.

He continued: “It’s fun, it’s exhilarating and sometimes exhausting, but it’s all good. I think this is a time of rapid progress and I feel fortunate to be alive at the center of this.”

Maggie has been a journalist since 1999, starting her career as an editorial assistant on then-weekly magazine Computing, before working her way up to senior reporter level. In 2006, just weeks before ITPro was launched, Maggie joined Dennis Publishing as a reporter. Having worked her way up to editor of ITPro, she was appointed group editor of CloudPro and ITPro in April 2012. In 2016 she became editorial director and took responsibility for ChannelPro.

Her areas of particular interest, aside from cloud, include management and C-level issues, the business value of technology, green and environmental issues and careers to name but a few.
