How the National Library of Sweden harnessed AI to unlock centuries of language data

From Viking-age manuscripts to 1970s broadcasts, AI is helping to digitize more than 18 million items key to Sweden’s history


The National Library of Sweden – Kungliga biblioteket – is responsible for collecting and preserving the nation’s printed and electronic historic materials, as well as making them accessible to the public and researchers.

Housing more than 18 million items, including books, newspapers, magazines, maps, photographs, and audio recordings, its collections go back more than a thousand years.

To make these collections more accessible to researchers and members of the public, the library turned to artificial intelligence (AI) as part of a broader modernization strategy.

Tapping into Sweden’s past

While AI is often discussed in a more forward-thinking framework, many organizations are wielding AI to gain insights into our past – including the National Library of Sweden. The library's collections are vast and diverse, and constantly growing. One of its biggest challenges has been managing the sheer volume of material it maintains.

“The oldest manuscripts we have are from about the Viking age,” says Love Börjeson, director of KBLab, the Kungliga biblioteket’s data lab. “We also have very large Icelandic collections and very large Latin collections.”

The library receives millions of new items each year, and it was struggling to keep up. A second challenge was making its collections more discoverable, as the sheer depth of the material made it difficult for researchers to search through.

Börjeson has led KBLab since 2019 and has been AI Sweden's data and infrastructure lead for applied language technology since 2021. He works with large-scale AI models in high-performance computing (HPC) environments and has an educational background in computational social science at Stanford.

Automating the tasks involved in managing its collections, such as cataloging, accessioning, and preservation, was a priority, as was improving the discoverability of those collections. Having embraced AI, the library also had to keep up with the latest research and developments in the field to ensure it was using the most current tools and techniques.


Embracing heavy-duty computing and LLMs

The library embarked on a modernization process that involved implementing a multi-layered computational infrastructure. This comprised new laptops, workstations, servers, and supercomputers.

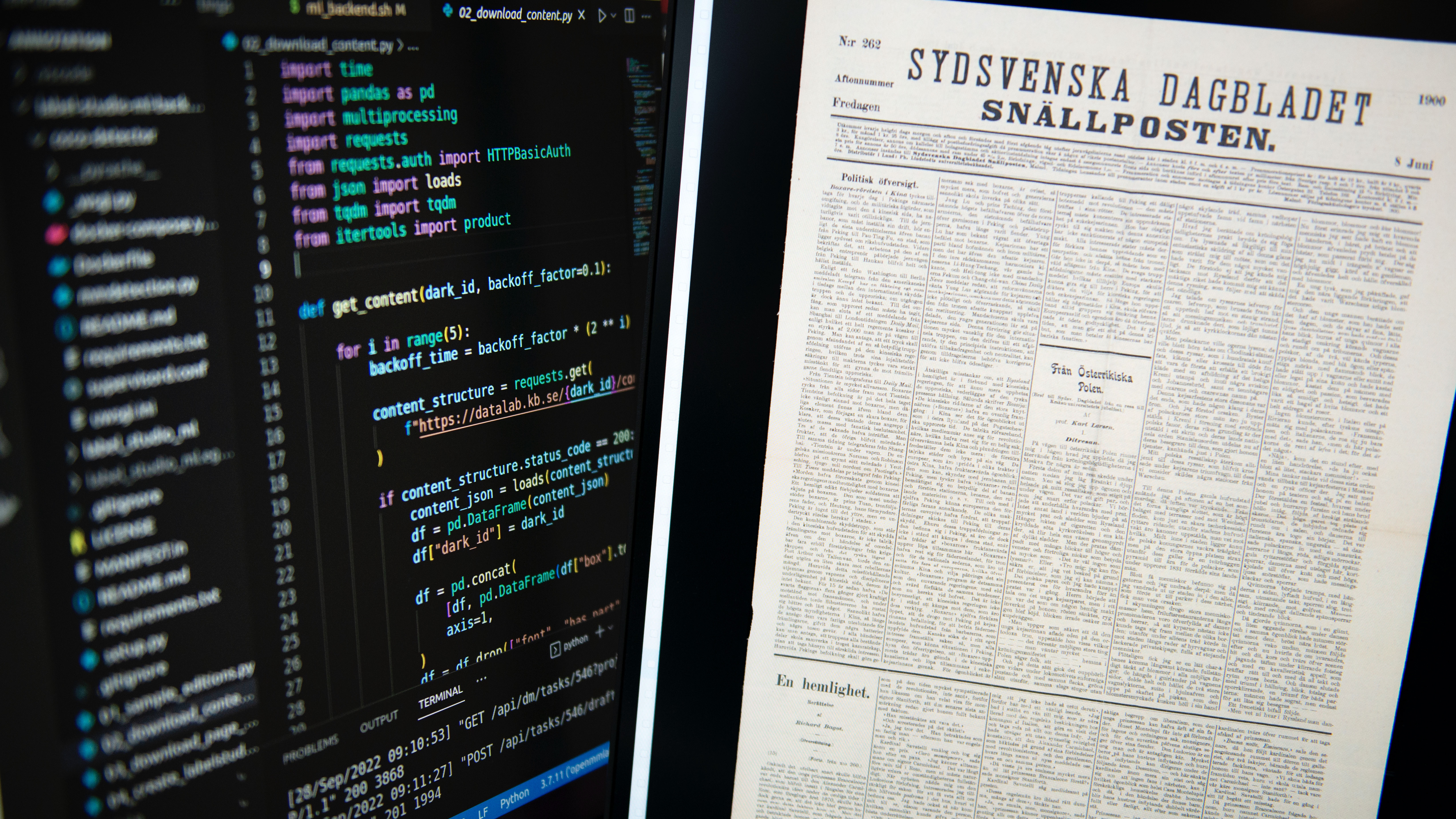

The National Library of Sweden has been digitizing newspapers from the 17th century onwards, as well as radio and TV broadcasts from 1979 and electronic legal deposits since 2005.

It has also been working to unlock centuries of language data. It began by training a transformer model in 2019 but quickly realized it needed a heavier-duty system.

The library installed two Nvidia DGX systems, acquired from Swedish provider AddPro, in 2020 and 2021 for on-premises AI development. These prepared it for even larger training runs on GPU-based supercomputers in the EU.

The library uses Nvidia NeMo Megatron, a PyTorch framework for training large language models (LLMs), as well as AI tools that transcribe audio to text. Researchers can use these tools to search for specific radio broadcasts.

Cutting-edge AI is remaking history

Historians, archaeologists, musicians, and data scientists are deploying AI to re-envision historical moments. Like so many tales from the evolution of modern computing, success with AI is grounded in the values of collaboration, opportunity, and experimentation.

The team is also developing text generation models and hopes to use AI to process videos and generate automatic descriptions. The library has also partnered with the University of Gothenburg to develop downstream apps for linguistic research using the lab's models.

One of the library's most significant achievements is an AI-based system called Swedish Language Models (SweLL), which was deployed to tackle the challenge of digitizing and unlocking centuries of language data. SweLL uses machine learning algorithms to analyze Swedish texts, learn language patterns and syntax, and transcribe handwritten documents into digital text. The system also corrects spelling errors and automatically tags and categorizes texts by topics, time period, and author.

SweLL has made Swedish language data more accessible, allowing researchers and learners to run quick, accurate searches and analyze language patterns and trends. Additionally, the library has used its Nvidia DGX systems to develop more than 24 open source transformer models, available through Hugging Face, which researchers can use to create specialized datasets for quantitative research.

Heavy AI investment has paid off

The library is digitizing a significant volume of historical text data to make it more accessible in the years to come. LLMs have made it easier for non-researchers, like journalists, to interact with the data too.

“They can ask questions like ‘what are the sentiments in newspaper headlines during the conflict in Ukraine?’,” says Börjeson. “It was sad, then positive last fall, then negative again. You can ask that kind of quantitative question without actually accessing the data.”


Overcoming the legal and technical obstacles in data processing can be time-consuming, but it is feasible. LLMs do pose a risk of producing false information when data is moved, a risk that can be minimized by keeping computational resources close to the data.

Börjeson is pleased with the institution’s decision to invest heavily in local computing resources, which paid off in the short term rather than taking years. “It’s enabled our data scientists to be more proficient when training AI models,” he says, adding that the returns have been significant and rapid.

The investment has significantly reduced the time and cost required to process data, which was previously time-consuming and resource-intensive. AI has also paved the way for new discoveries, identifying previously unknown language patterns and leading to new insights into the history and culture of Sweden.

Unexpectedly, the project has also benefited other organizations, including regional libraries, private companies, and the Swedish government. Examples range from fine-tuning the position of a bus stop in northern Sweden to reducing the bureaucratic workload for courts and police officers.

Rene Millman is a freelance writer and broadcaster who covers cybersecurity, AI, IoT, and the cloud. He also works as a contributing analyst at GigaOm and has previously worked as an analyst for Gartner covering the infrastructure market. He has made numerous television appearances to give his views and expertise on technology trends and companies that affect and shape our lives. You can follow Rene Millman on Twitter.
