Global AI agreement “impossible” and companies should avoid tech-specific policies, experts warn

A technology-agnostic approach rooted in mitigations against bias is one route to safer AI systems, while leaders wait for legislation to meet their concerns

Global legislation on AI is unlikely to reach a point of consensus in the near future, according to industry experts, who urged organizations to focus on the relationship between AI and workers rather than trying to account for specific approaches to the technology.

Discussing the future potential for AI in a talk held at Dell Technologies World 2024, experts concurred that AI development is currently outpacing the public sector's ability to keep up with safety controls.

The panel included Lisa Ling, journalist and broadcaster; Rana el Kaliouby, co-founder of AI firm Affectiva; Paul Calleja, director of research computing at the University of Cambridge; and John Roese, global CTO at Dell Technologies.

On the point of AI regulation, Calleja said that a global governing body is “kind of impossible”, noting that policy occurring on a national level is a more direct route in the short-to-medium term.

Acknowledging the EU AI Act as a “good start,” el Kaliouby added that, in its current form, the legislation will be unable to develop in lock-step with AI innovation. In the long term, she contended, this will prevent it from being effective.

“It's going to take two years to be implemented and with the pace of change it's going to become obsolete fairly quickly unless it’s kept up to date,” she explained, adding that disagreement over the risks AI poses continues to stymie any government’s response.

“I used to serve on the World Economic Forum’s Global Council for AI and Robotics and it was multi-stakeholder from all over the world. It was interesting, we could not agree on what is a good use of AI and what is not, so I think it’s hard.”


The panel acknowledged that events such as the Seoul AI Summit are important for global communication on AI, but ultimately unlikely to result in meaningful controls.

This is because generative AI is breaking the mold for technological development, the panel said, given the multitude of advances that have already been made in the field.

“We have very little chance for our current legacy approach of regulatory and standard setting to be successful,” Roese said.

Roese added that while the development time for previous technologies gave governments three to ten years to develop scientific consensus and hold summits on regulatory approaches, regulation is already lagging the leading edge of AI by years.

“This is moving so fast and if we really want to create a high-end regulatory ethics framework, one of the mistakes we must avoid is just trying to make it specific to the technology,” said Roese.

“Let's define the relationship between people and machines, let’s define the high-order things and put our governance structure around that.”

Roese cited the example of retrieval-augmented generation (RAG), in which LLM outputs are grounded in unstructured data to improve their relevance, as proof of how fast AI is moving.

“Let's make the assumption that the underlying technology of today will not even be relevant in three years, maybe two years, maybe one,” he said.

Tackling AI risks demands a diverse response

The panel covered some of the largest risks that AI poses, including its potential to embed algorithmic biases and the danger of AI-powered cyber attacks.

Leading AI companies, including the likes of Amazon, Google, Meta, and Microsoft, have promised to halt development if models become dangerous. The agreement was made at the Seoul AI Summit 2024, the follow-up to the AI Safety Summit held at Bletchley Park in 2023.

“Everybody in this room is a thought leader, or a business leader, an AI innovator, or a tech innovator. We all have a responsibility to not just wait for regulation to happen but to do the right thing for our communities, organizations, and teams,” said el Kaliouby.

She cited examples of use cases for AI that Affectiva has actively avoided supporting, such as the use of AI for autonomous weapons or for surveillance.

el Kaliouby added that the firm had been approached by an intelligence agency seeking to use AI for lie detection. It rejected the proposal, she said, because this is an avenue with limited regulatory protections in place and one that holds significant potential for discrimination.


Prior to the talk, Roese told ITPro that technical issues aren’t the biggest barrier to AI adoption but instead that a general lack of clear brand identity and vision for AI deployment in the boardroom is holding businesses back.

Approaching AI with this clarity of mind is key to tackling bias, said el Kaliouby, noting that new AI firms can use the pressures of their product launch as an excuse to delay bias mitigation indefinitely.

“It’s very easy to say ‘we’re a small startup, we need to shift products so who cares about bias for now, we’ll fix it later’. I think you have to build it from day one into the system and these are the kinds of startups I’m looking for, who are really thinking thoughtfully about bias and misinformation.”

For added focus, el Kaliouby said, she tied the bonus plan of her firm’s entire executive team to the successful implementation of levers within its machine learning pipeline to ensure bias was being mitigated.

Despite the doubts over a global approach, the panel was united in acknowledging that certain AI risks will have to be addressed through national policy. Calleja noted that leaders will need to ensure that AI is “not for the few, for the many and that these tools are used to drive equality not make inequality”.

Rory Bathgate is Features and Multimedia Editor at ITPro, overseeing all in-depth content and case studies. He can also be found co-hosting the ITPro Podcast with Jane McCallion, swapping a keyboard for a microphone to discuss the latest learnings with thought leaders from across the tech sector.

In his free time, Rory enjoys photography, video editing, and good science fiction. After graduating from the University of Kent with a BA in English and American Literature, Rory undertook an MA in Eighteenth-Century Studies at King’s College London. He joined ITPro in 2022 as a graduate, following four years in student journalism. You can contact Rory at rory.bathgate@futurenet.com or on LinkedIn.

-

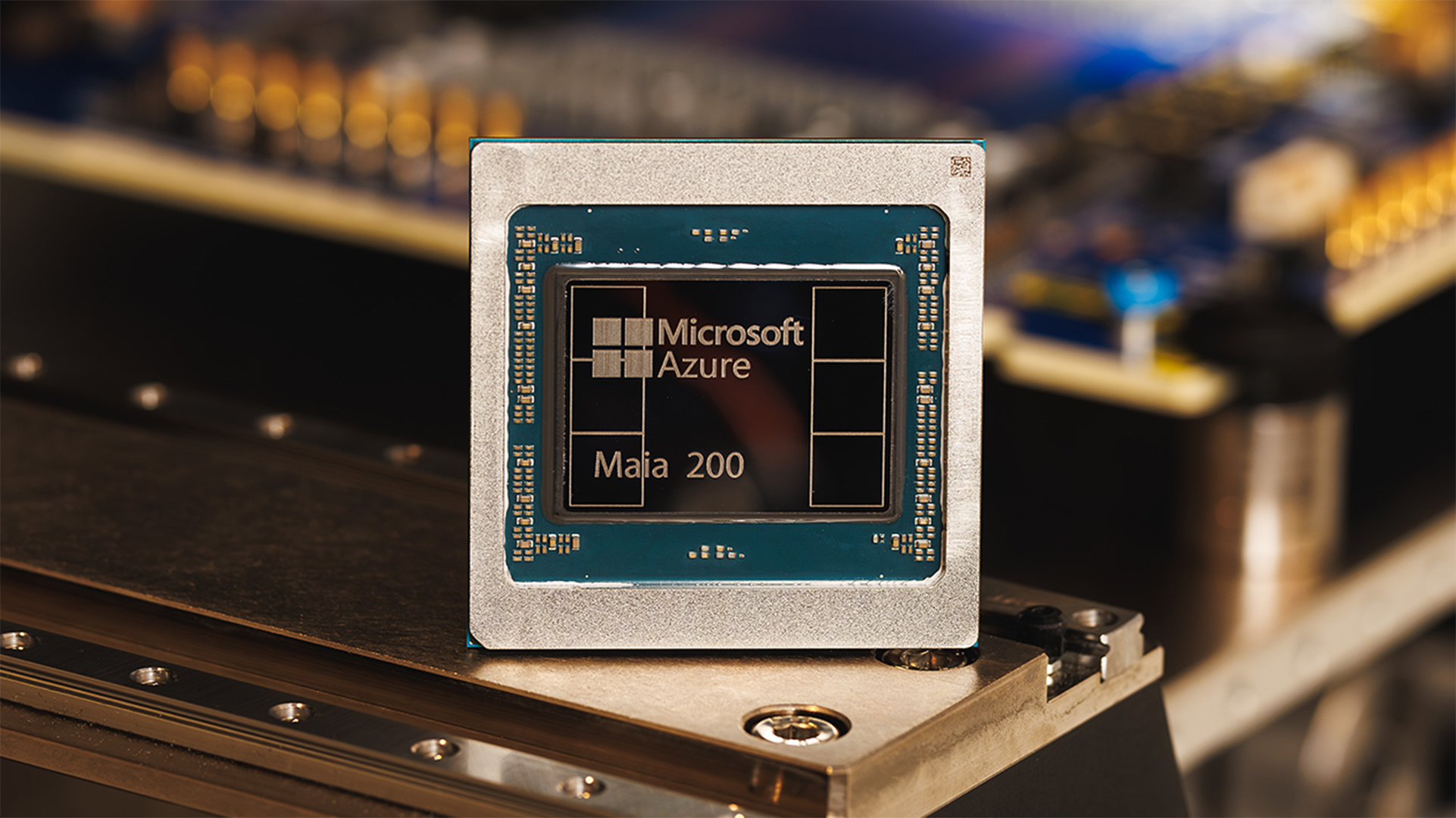

Microsoft unveils Maia 200 accelerator, claiming better performance per dollar than Amazon and Google

Microsoft unveils Maia 200 accelerator, claiming better performance per dollar than Amazon and GoogleNews The launch of Microsoft’s second-generation silicon solidifies its mission to scale AI workloads and directly control more of its infrastructure

-

Infosys expands Swiss footprint with new Zurich office

Infosys expands Swiss footprint with new Zurich officeNews The firm has relocated its Swiss headquarters to support partners delivering AI-led digital transformation

-

Computacenter enters the fray against Broadcom in Tesco's VMware lawsuit

Computacenter enters the fray against Broadcom in Tesco's VMware lawsuitNews The IT reseller has added its own claim against Broadcom in VMware case brought by Tesco

-

Dell Technologies Global Partner Summit 2025 – all the news and updates live from Las Vegas

Dell Technologies Global Partner Summit 2025 – all the news and updates live from Las VegasKeep up to date with all the news and announcements from the annual Dell Technologies Global Partner Summit in Las Vegas

-

Who is John Roese?

Who is John Roese?Dell's CTO and Chief AI Officer John Roese brings pragmatism to AI

-

Meta layoffs hit staff at WhatsApp, Instagram, and Reality Labs divisions

Meta layoffs hit staff at WhatsApp, Instagram, and Reality Labs divisionsNews The 'year of efficiency' for Mark Zuckerberg continues as Meta layoffs affect staff in key business units

-

Business execs just said the quiet part out loud on RTO mandates — A quarter admit forcing staff back into the office was meant to make them quit

Business execs just said the quiet part out loud on RTO mandates — A quarter admit forcing staff back into the office was meant to make them quitNews Companies know staff don't want to go back to the office, and that may be part of their plan with RTO mandates

-

Amazon workers aren’t happy with the company’s controversial RTO scheme – and they’re making their voices heard

Amazon workers aren’t happy with the company’s controversial RTO scheme – and they’re making their voices heardNews An internal staff survey at Amazon shows many workers are unhappy about the prospect of a full return to the office

-

Predicts 2024: Sustainability reshapes IT sourcing and procurement

Predicts 2024: Sustainability reshapes IT sourcing and procurementwhitepaper Take the following actions to realize environmental sustainability

-

Advance sustainability and energy efficiency in the era of GenAI

Advance sustainability and energy efficiency in the era of GenAIwhitepaper Take a future-ready approach with Dell Technologies and Intel