AI, hallucinations and Foo Fighters at Dreamforce 2023

Firms lax on generative AI ‘trust’ risk face a reckoning as Salesforce bids to separate itself from the pack


Dreamforce 2023 was, by all accounts, a resounding success, giving Salesforce an opportunity to showcase its razor-sharp focus on generative AI and its ethical AI goals before the more than 40,000 attendees who descended on San Francisco.

Tech firms typically mark events like Dreamforce with an A-list performance, and this year Foo Fighters rocked the Chase Center on the penultimate night of an overwhelming week.

Often enough, these acts have nothing to do with the event – Salt-N-Pepa played the Databricks Data+AI Summit 2023, for example – but at Dreamforce, the choice was oddly appropriate.

During WWII, Allied pilots reported seeing floating orbs – dubbed ‘foo fighters’ – flying beside their planes while on bombing raids over occupied Europe.


This phenomenon captivated people the world over, with some claiming the orbs were otherworldly entities keeping tabs on a warring human race. Others, however, suggested they could have been hallucinations arising from combat-related stress. Flak cannons and marauding Messerschmitt fighter planes tend to have that effect.

The end of WWII heralded the beginning of a new era and a period of rapid technological advancement. Much like then, we find ourselves at the precipice of another tectonic shift. Generative AI is the term on everyone’s lips, with the emergence of ChatGPT prompting a huge wave of hype and optimism.

But, much like the Allied pilots who reported those elusive foo fighters lingering ominously on the horizon, firms operating in this space are encountering new specters in the form of regulatory scrutiny, customer hesitancy, and AI’s own dangerous hallucinations.


AI hallucinations: Magic or lies?

During his keynote, Salesforce chief executive Marc Benioff outlined the company’s ambitious plans to capitalize on the AI era.

For many, generative AI represents the technological promise humanity has been striving to achieve for decades – relieving workers from monotony and opening up more time to pursue meaningful work.

But that’s the sunlit-uplands view. Behind the glamorous veneer of generative AI lie lingering concerns over data privacy, workforce upheaval, and hallucination-induced calamity.

Benioff fired a broadside at large language model (LLM) companies during his opening keynote, suggesting that their hunger for data, with little regard for ethics or safety, may leave enterprises at risk of exploitation. Benioff and others singled out the lack of safeguards as a key inhibitor to long-term success and customer adoption.

“They call them hallucinations,” he said. “I call them lies.”

Benioff’s bold remark came just hours before a fireside chat with OpenAI CEO Sam Altman, in which he probed the controversial exec on this very issue. Altman’s relaxed views on AI hallucinations are unlikely to fill users, enterprises, and regulators with confidence.

To him, hallucinations are part of what gives generative AI its “magic”, a view that puts him at odds with Benioff and Salesforce as a whole. In this new generative AI era, according to the tech giant, trust and confidence are critical.

Doubling down on trust and transparency

We heard ‘trust’ a lot throughout Dreamforce 2023, with Salesforce keen to emphasize its responsible approach to AI development at every turn.

The firm has a “laser sharp focus” on ethical, responsible, and trusted AI development and use, said its head of ethical AI, Kathy Baxter – and it’s a focus the company has maintained for several years.

She stressed the need to respect human rights and the data the company has been entrusted with, adding that it never uses customers’ data to train models without explicit consent.

“All of the models we’re building … [use] consented data and so customers can feel confident in it,” she told reporters. “We’re grounding these models in our customers' data as well so they can feel confident that it’s going to be accurate.”

Salesforce has clearly been using ‘trust’ to differentiate itself from industry counterparts. In 2019, the company published its trusted AI principles, Baxter noted, which have “guided the creation and implementation of AI ever since”.

It also created a dedicated ethical and humane use officer role to steer the responsible use of AI, and it prides itself on having created the industry’s first chief trust officer role to support this.

But that’s not all. Clara Shih, CEO of Salesforce AI, repeatedly emphasized the importance of ethical and trusted AI use, suggesting it’s a key talking point among customers. Internal research shows that while 76% of customers trust companies to make “honest claims” about AI products and services, just 57% trust them to use AI ethically.

“What we’re hearing from customers is always around trust,” she said. “How do I make sure from a data security, governance perspective, and from an ethics and responsibility perspective, that we have all the guardrails in place?”

An AI future without ‘foo fighters’

This sharp privacy focus could stand Salesforce in good stead with customers and lawmakers alike on both sides of the Atlantic amid a period of heightened regulatory scrutiny. The EU’s tough stance on generative AI, for instance, may worry industry stakeholders, who see it as detrimental to innovation.

In this context, Salesforce is strategically positioning itself to become, in the eyes of regulators, the gold standard for generative AI “done right”.

“We’re just at the beginning of that [generative AI] breakthrough,” Benioff told reporters last week – and he’s not wrong. It’s clear he, and Salesforce, see the coming months and years as a period of significant opportunity, and a chance to pull away from the competition.

The firm started 2023 in a precarious position, having laid off thousands of workers. But its performance in recent months has been impressive, fueled in large part by interest in generative AI. Then, as Dreamforce 2023 drew to a close, the firm announced plans to hire more than 3,000 staff across its engineering, sales, and data cloud segments.

Salesforce has a clear-cut vision for AI, one in which it aims to strip away the issues that might undermine the technology's path to maturity, including dangerous hallucinations.

With responsible development at its core, the tech giant is framing itself as a trusted player in the space to differentiate itself from competitors, many of which have found themselves embroiled in controversy.

Ross Kelly is ITPro's News & Analysis Editor, responsible for leading the brand's news output and in-depth reporting on the latest stories from across the business technology landscape. Ross was previously a Staff Writer, during which time he developed a keen interest in cyber security, business leadership, and emerging technologies.

He graduated from Edinburgh Napier University in 2016 with a BA (Hons) in Journalism, and joined ITPro in 2022 after four years working in technology conference research.

For news pitches, you can contact Ross at ross.kelly@futurenet.com, or on Twitter and LinkedIn.

-

Security expert warns Salt Typhoon is becoming 'more dangerous'

Security expert warns Salt Typhoon is becoming 'more dangerous'News The Chinese state-backed hacking group has waged successful espionage campaigns against an array of organizations across Norway.

-

HPE ProLiant Compute DL345 Gen12 review

HPE ProLiant Compute DL345 Gen12 reviewReviews The big EPYC core count and massive memory capacity make this affordable single-socket rack server ideal for a wide range of enterprise workloads

-

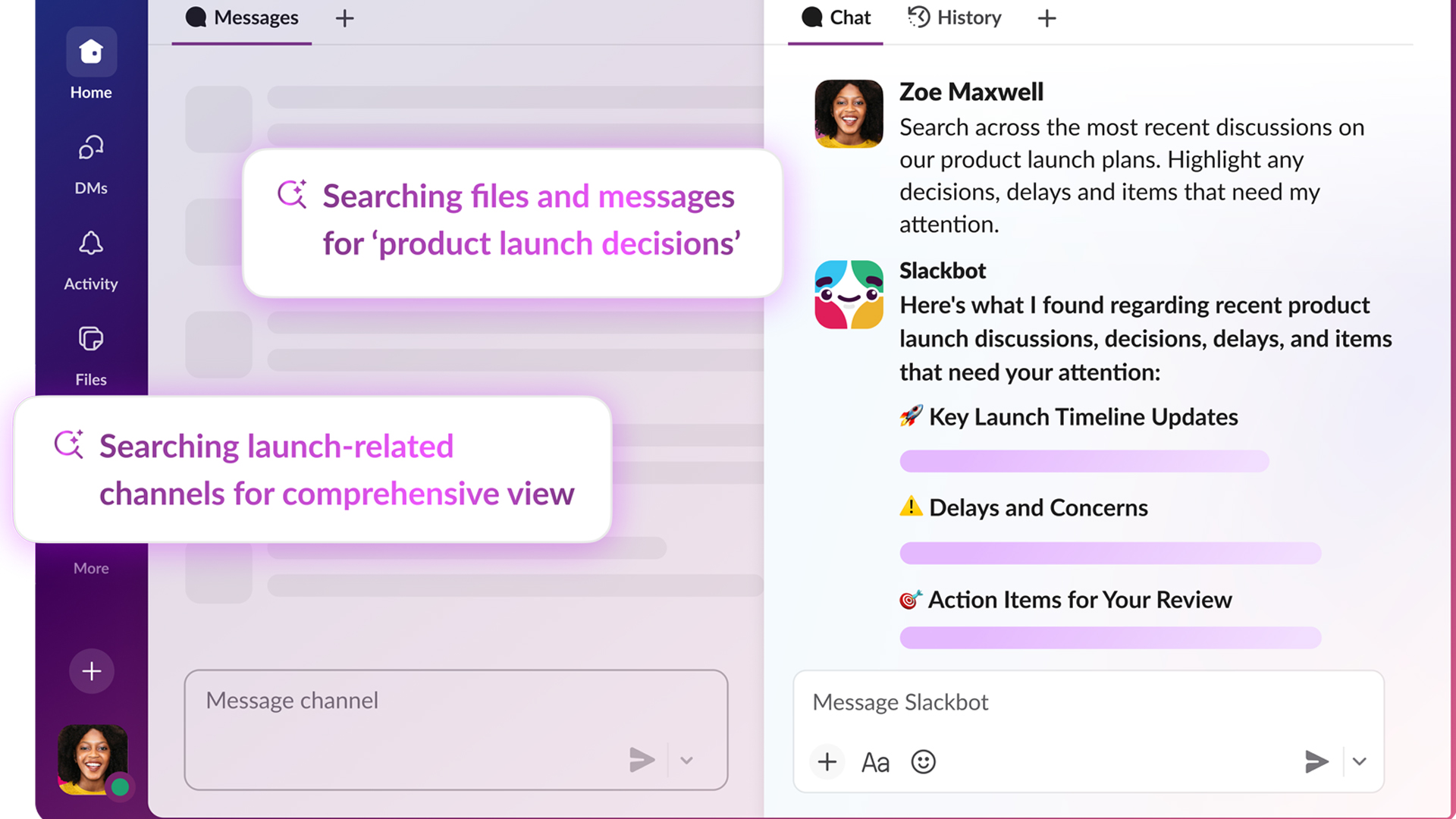

The AI-enabled Slackbot is now generally available – Salesforce says it could save more than a day’s work per week

The AI-enabled Slackbot is now generally available – Salesforce says it could save more than a day’s work per weekNews With an entirely overhauled model behind the chatbot, users can summarize channels and ask for highly personalized information

-

'It's slop': OpenAI co-founder Andrej Karpathy pours cold water on agentic AI hype – so your jobs are safe, at least for now

'It's slop': OpenAI co-founder Andrej Karpathy pours cold water on agentic AI hype – so your jobs are safe, at least for nowNews Despite the hype surrounding agentic AI, OpenAI co-founder Andrej Karpathy isn't convinced and says there's still a long way to go until the tech delivers real benefits.

-

‘I don't think anyone is farther in the enterprise’: Marc Benioff is bullish on Salesforce’s agentic AI lead – and Agentforce 360 will help it stay top of the perch

‘I don't think anyone is farther in the enterprise’: Marc Benioff is bullish on Salesforce’s agentic AI lead – and Agentforce 360 will help it stay top of the perchNews Salesforce is leaning on bringing smart agents to customer data to make its platform the easiest option for enterprises

-

Dreamforce 2025 live: All the latest updates from San Francisco

Dreamforce 2025 live: All the latest updates from San FranciscoNews We're live on the ground in San Francisco for Dreamforce 2025 – keep tabs on all of our rolling coverage from the annual Salesforce conference.

-

Salesforce just launched a new catch-all platform to build enterprise AI agents

Salesforce just launched a new catch-all platform to build enterprise AI agentsNews Businesses will be able to build agents within Slack and manage them with natural language

-

Salesforce CEO Marc Benioff says the company has cut 4,000 customer support staff for AI agents so far

Salesforce CEO Marc Benioff says the company has cut 4,000 customer support staff for AI agents so farNews The jury may still be out on whether generative AI is going to cause widespread job losses, but the impact of the technology is already being felt at Salesforce.

-

‘Humans must remain at the center of the story’: Marc Benioff isn’t convinced about the threat of AI job losses – and Salesforce’s adoption journey might just prove his point

‘Humans must remain at the center of the story’: Marc Benioff isn’t convinced about the threat of AI job losses – and Salesforce’s adoption journey might just prove his pointNews Marc Benioff thinks fears over widespread AI job losses may be overblown and that Salesforce's own approach to the technology shows adoption can be achieved without huge cuts.

-

Salesforce wants technicians and tradespeople to take AI agents on the road with them

Salesforce wants technicians and tradespeople to take AI agents on the road with themNews Salesforce wants to equip technicians and tradespeople with agentic AI tools to help cut down on cumbersome administrative tasks.