Meta ditching its responsible AI team doesn't bode well

In dropping its responsible AI team, Meta highlights its innovation-at-all-costs mentality

With Meta reportedly breaking up its Responsible AI (RAI) team, the tech giant has become the latest in a growing list of firms to dance dangerously with AI safety concerns.

Members of the RAI team will be distributed among other areas of Meta, including its generative AI product team, with others set to focus on AI infrastructure projects, The Information reported last week.

A Meta spokesperson told Reuters that the move is intended to "bring the staff closer to the development of core products and technologies".

This does, to a degree, make sense. Embedding those responsible for ethical AI within specific teams could offer alternative voices within the development process that will consider potential harms.

However, the move means Meta’s responsible AI team, which was tasked with fine-tuning the firm’s AI training practices, has been all but gutted. Earlier this year, the division underwent a restructuring which left it as a “shell of a team”, according to Business Insider.

The team was hamstrung from the get-go, reports suggest, with the publication noting that it had “little autonomy” and was bogged down by excessive bureaucratic red tape.

A restructuring and redistribution of staff from this team would raise eyebrows at the best of times, but given the intense discussions over AI safety in recent months, Meta’s decision seems perplexing to say the least.

Concerns over AI safety and ethical development have been growing in intensity amidst claims that generative AI could have an adverse impact on society.

AI-related job losses, the use of generative AI tools for nefarious purposes such as disinformation and cyber attacks, and the potential for discriminatory bias have all been flagged as lingering concerns.

Lawmakers and regulators on both sides of the Atlantic have been vocal on the topic in a bid to get ahead of the curve. The European Union (EU), for example, has taken an aggressive position on AI regulation with the EU AI Act.

The US government has also been pushing heavily for AI safeguards in recent weeks, with President Biden signing an executive order aimed specifically at forcing companies to establish AI safety rules.

This confluence of external pressure has prompted big tech firms to act in anticipation of pending legislation, suggesting that they are willing to bow to pressure and avoid heightened regulatory scrutiny.

In July, for example, a host of major players in the AI space, including Anthropic, Google, Microsoft, and OpenAI, launched a coalition aimed specifically at fine-tuning AI safety standards.

Yet despite this, Meta appears intent on completely swerving safety concerns as it looks to double down on AI development, disregarding its “pillars of responsible AI” which include transparency, safety, privacy, and accountability.

Jon Carvill, senior director of communications for AI at Meta, told The Information that the company will still “prioritize and invest in safe and responsible AI development” despite the decision.

Team members redistributed throughout the business will “continue to support relevant cross-Meta efforts on responsible AI development and use”.

While these comments appear to be aimed at alleviating concerns over AI safety, they are unlikely to put minds at ease in the long term, and merely serve to highlight the fact that Meta is disregarding safety in its bid to keep up with industry competitors.

Playing catch up

Meta’s sharpened focus on generative AI development appears to be the key reasoning behind gutting its responsibility team.

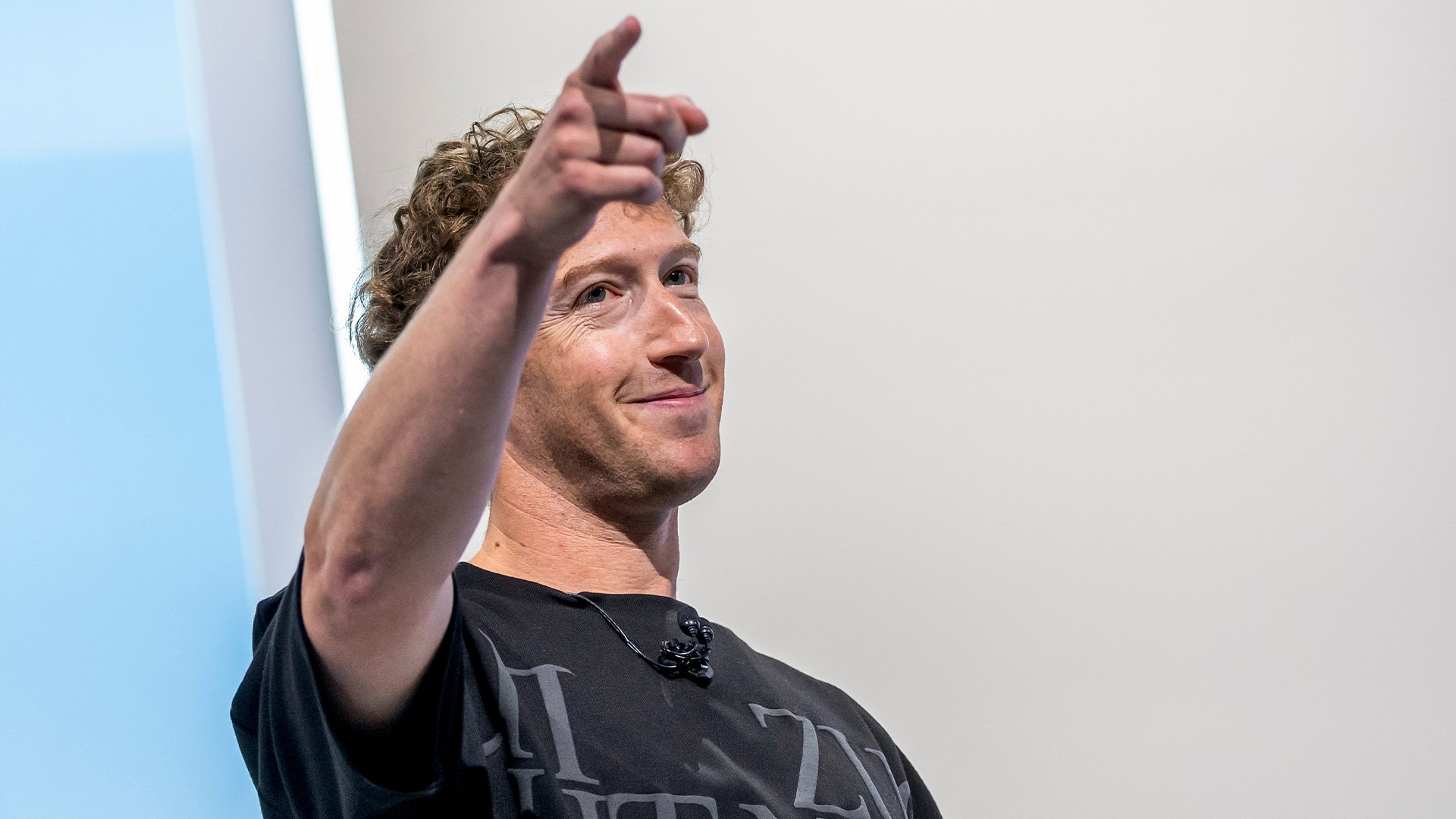

While Microsoft, Google, and other big tech names were going all in on generative AI, Meta was left playing catch-up, prompting CEO Mark Zuckerberg to scrap the company’s metaverse pipe dream and pivot to the new-found focus.

Stiff competition in the AI space has forced the firm to pour money, resources, and staff into its generative AI push, which so far has delivered positive results.

Earlier this year, Meta released its own large language model (LLM), dubbed LLaMA. The model, available in sizes ranging from 7 billion to 65 billion parameters, was its first major foray into the space, and was followed by the more powerful Llama 2 and Code Llama, Meta’s own equivalent of GitHub Copilot.

Meta isn’t alone in cutting resources for responsible AI development. X (formerly Twitter) cut staff responsible for ethical AI development following Elon Musk’s takeover in November last year, right around the time the generative AI ‘boom’ ignited with the launch of ChatGPT.

Microsoft, too, cut staff in its Ethics and Society team, one of the key divisions that led research on responsible AI at the tech giant.

Meta is no stranger to criticism and has found itself in repeated battles with regulators on both sides of the Atlantic on topics such as data privacy in recent years, racking up astronomical fines in the process.

This latest move from the tech giant should have alarm bells ringing. Disregarding its own internal AI safety teams in a bid to drive innovation at all costs isn’t the best look for the company, and may create long-term headaches.

Ross Kelly is ITPro's News & Analysis Editor, responsible for leading the brand's news output and in-depth reporting on the latest stories from across the business technology landscape. Ross was previously a Staff Writer, during which time he developed a keen interest in cyber security, business leadership, and emerging technologies.

He graduated from Edinburgh Napier University in 2016 with a BA (Hons) in Journalism, and joined ITPro in 2022 after four years working in technology conference research.

For news pitches, you can contact Ross at ross.kelly@futurenet.com, or on Twitter and LinkedIn.
