Hyperautomation is overhyped

Organisations buying into fully automated systems because people are ‘too expensive’ will end up paying extra for the human touch

Automation may be a key tool in any company’s arsenal, but the promise of end-to-end automation, or hyperautomation, with no human intervention is an unachievable distraction for tech teams.

It seems only a few years since businesses began incorporating artificial intelligence (AI) and machine learning into their processes, yet these technologies are now in widespread use. While they’re undeniably valuable, and a properly thought-out strategy can be extremely beneficial, some companies are stretching too fast and too far in pursuit of an unfocused form of hyperautomation.

“Digital leaders have been surprised by the complexity of automation”, Bev White, CEO of Nash Squared, tells IT Pro. Despite this, a recent Nash Squared report reveals digital leaders are, on average, planning to automate nearly one in five jobs (18% of the workforce) within the next five years. White adds: “With today’s unsustainable salary demands, talent shortages and increased operational costs, it seems the business case for digital labour is getting stronger by the day.”

For menial jobs like document processing, which increasingly contribute to worker unhappiness, automation has a clear role to play. If executed properly, customers won’t know whether a behind-the-scenes process has been done at all, let alone whether it was done by a human or by AI. Indeed, companies continue to invest in automating the back office.

What’s really being promised is a seamless customer experience (CX), and this is absolutely something every business should strive for. Businesses that buy into the hype, though, would do well to take a step back and assess whether their automation goals really fit their needs. On the whole, the industry-cultivated idea of ‘end-to-end’ automated ecosystems is doomed to fail, because complete automation of services is not only impractical but also fundamentally unnecessary.

Chasing the best customer experience

The industry perspective on automation has been skewed by its development phase, argues Bernhard Schaffrik, principal analyst at Forrester. Now that systems are beginning to mature, he says, the endless use cases simply aren’t there.

“I think the challenge is that automation promises were so easily harvested, during the times where you were experimenting with an automation technology for the first time,” he tells IT Pro. “The appetite grew, understandably, but many missed out on sitting down together and defining an automation strategy, or an operating model. I'm talking to many senior IT decision makers, and they are just driven by removing humans from the equation because humans are too expensive.”

Schaffrik maintains that without a clear strategy, short-term overreliance on automation won’t help businesses, and will hold firms back from properly delivering on CX. He calls for automation to be implemented only where it’s urgently needed, and only alongside careful consideration of where the “human touch” is preferable.

“Customer and employee experience are the key to transformation rather than an 'all-in' pursuit of end-to-end automation, and looking at tasks in this way will help businesses focus their efforts on using automation where it will give them the most gain,” states Dermot O'Connell, senior vice president of services, EMEA at Dell.

Even when it comes to back-end tasks that don’t affect the customer experience, automation can be more effective when used in tandem with human workers rather than in place of them. Just as humans can benefit from automated tools to speed up their work, automated systems can become more ‘intelligent’ through observation of human co-workers. Extremely complex or critical tasks can also be deferred to human workers, to ensure quality and to save time on the development and deployment of the automated processes.

“Previous generation thinking about automation was that it was either zero or one: either the work was done manually, or these robots would completely take over that work,” says Ronen Lamdan, CEO of intelligent automation firm Laiye. “The reality of it is, when you apply AI, you never get to the 100%. The winning combination is actually empowering these human workers with digital capabilities, with AI capabilities, to take over the mundane parts of their work, but still retain people to service customers, to handle edge cases, to do the more cognitive intelligent thinking.”

The hidden, human cost of automation

In September, NetSuite made a number of automation announcements at SuiteWorld, its annual conference. Powered by AI and machine learning, the new features introduce ways to automate tasks such as invoicing, planning work schedules, and tracking employee metrics. The promise of end-to-end automation makes sense within the context of the ERP giant’s full suite, and speaks to a goal of providing customers with seamless integration between services. Nobody wants to pay for a suite only to discover the quality-of-life features are all siloed into narrow use cases.

But the reality is that there will always be a need, or at least an ability, to escalate decisions to management level. Even in NetSuite’s keynote presentation, the company could only advertise reduced manual checks for invoicing, acknowledging that the machine learning system has to escalate anything it is uncertain about to avoid costly mistakes.

In some cases, automated systems can cause more trouble than they’re worth. Business leaders are already running into implementation challenges, particularly where human labour is still required to shore up automated systems.

A prime example of this is social media algorithms, sold to the public as self-sustaining, automated content systems that require only occasional, minimal human oversight. One of the main benefits often touted by firms is their ability to curb illegal and harmful content pushed onto users’ feeds. The likes of Instagram and TikTok now boast systems to prevent such content from ever being seen by others, or to delete content that slips through the cracks.

But this process is far from automatic. Every algorithm that recognises harmful content has been ‘trained’ by humans, whose manual moderation of banned content requires them to view the most disturbing posts. The churn rate in such roles is sky-high, far above the industry average, and is driven by the intense and upsetting nature of the work.

There’s no easy route to successful automation

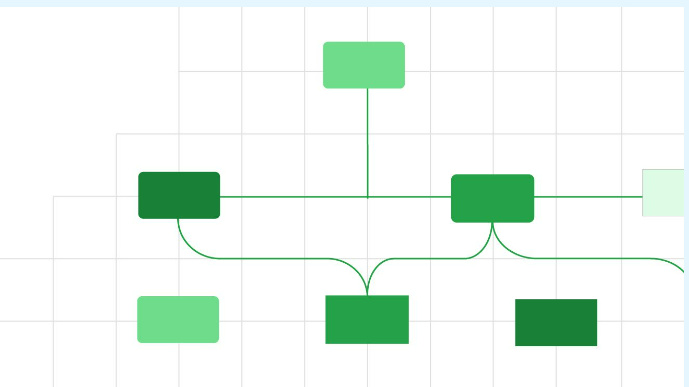

White also notes that complexity is currently one of the top three obstacles to implementing automation. “What may have at first seemed like a simple process to automate, can quite quickly turn out to be a spaghetti of logic branches – which will also require some level of manual oversight even when fully implemented.”

This complexity is particularly acute for firms that have not done enough testing or strategising before implementation. If a system has only ever been trained in a vacuum, hard-coded with functions rather than learning them on the fly from active employees, its use cases could be outdated by the time it’s properly installed. It could even be detrimental. Similarly, if a system has been designed with a ‘one size fits all’ approach to end-to-end automation, companies could find themselves rushing to rip it out down the line. Waterstones’ recent botched AI stock system affair is a testament to just this.

This framing is key to understanding the problem that overhyping hyperautomation has created. It’s not a ‘one size fits all’ framework for increasing efficiency, nor a silver bullet for a sector facing a skills shortage. There’s no doubt hyperautomation in action can achieve powerful results, but only when factors such as employee satisfaction are taken into account.

By the same token, the human labour saved by implementing automated systems can lead to quality improvements for workers and customers alike. Proper integration of automation alongside workers can be a win-win, improving satisfaction and productivity levels. But the lure of end-to-end automation should never override questions about its practicality, nor be used to hide human effort behind the scenes.

Rory Bathgate is Features and Multimedia Editor at ITPro, overseeing all in-depth content and case studies. He can also be found co-hosting the ITPro Podcast with Jane McCallion, swapping a keyboard for a microphone to discuss the latest learnings with thought leaders from across the tech sector.

In his free time, Rory enjoys photography, video editing, and good science fiction. After graduating from the University of Kent with a BA in English and American Literature, Rory undertook an MA in Eighteenth-Century Studies at King’s College London. He joined ITPro in 2022 as a graduate, following four years in student journalism. You can contact Rory at rory.bathgate@futurenet.com or on LinkedIn.
