‘Frontier models are still unable to solve the majority of tasks’: AI might not replace software engineers just yet – OpenAI researchers found leading models and coding tools still lag behind humans on basic tasks

Large language models struggle to identify root causes or provide comprehensive solutions

AI might not replace software engineers just yet as new research from OpenAI reveals ongoing weaknesses in the technology.

Having created a benchmark dubbed ‘SWE-Lancer’ to evaluate AI’s effectiveness at completing software engineering and managerial tasks, researchers concluded that the technology is lacking.

“We evaluate model performance and find that frontier models are still unable to solve the majority of tasks,” researchers said.

Researchers found that, while AI excels in certain areas, it is limited in others. For example, AI agents are skilled at localizing problems but poor at working out the root cause.

While they can pinpoint the location of an issue quickly and use search capabilities to access necessary repositories faster than humans can, they have a limited understanding of how an issue spans multiple components and files.

This frequently leads to solutions that are incorrect or insufficiently comprehensive, and agents can often fail by not finding the right file or location to edit.

In a comparison of two OpenAI models, o1 and GPT-4o, and Anthropic’s Claude 3.5 Sonnet, researchers found that all three failed to fully solve one particular user interface (UI) problem.

While o1 solved the basic issue, it missed a range of others, and GPT-4o failed to solve even the initial problem. Sonnet was quick to identify the root cause of the issue and fix the bug, but the solution was not comprehensive and did not pass the researchers’ end-to-end tests.

All told, researchers said that while AI coding tools have the capacity to make software engineering more productive, users need to be wary of the potential flaws in AI-generated code.

Are AI coding tools more trouble than they’re worth?

While businesses are ramping up the use of AI coding tools, there have been plenty of warning signs to make firms stop and consider whether the tools are worth it.

Research from Harness earlier this year found that many developers are becoming increasingly bogged down with manual tasks and code remediation due to the increased use of AI coding tools.

The study noted that these tools may offer huge benefits to software engineers, but experts say they are still littered with weaknesses and lack some of the capabilities of human engineers.

“While these tools can boost efficiency, in their current state they often result in a surge of errors, security vulnerabilities, and downstream manual work that burdens developers,” Sheila Flavell, COO of FDM Group, told ITPro.

The risk of vulnerabilities and malicious code being introduced into organizations is also significantly higher when AI coding tools are used, according to Shobhit Gautam, security solutions architect at HackerOne.

“AI-generated code is not guaranteed to follow security guidelines and best practices as defined by the organization standards. As the code is generated from LLMs, there is a possibility that third-party components may be used in the code and go unnoticed,” Gautam told ITPro.

“Aside from the risk of copyright infringement, the code hasn’t been through the company’s validation testing and peer reviews, potentially resulting in unchecked vulnerabilities,” Gautam added.

An overreliance on AI coding tools may also be eroding the skills of human programmers, with research from education platform O’Reilly finding that interest in traditional programming languages is in decline.

Similarly, a post from tech blogger and programmer Namanyay Goel sparked debate on this topic recently when Goel claimed junior developers lack coding skills owing to a heightened use of automated AI tooling.

How can businesses use these tools effectively?

Despite concerns, there are clear signs AI coding tools are delivering value for both software engineers and enterprises. GitHub research from last year revealed AI coding tools have helped engineers deliver more secure software, write better quality code, and adopt new languages.

With this in mind, firms need to prioritize certain processes to deliver success with AI tools. Flavell said businesses need to put upskilling front and center, as well as improving code reviews and quality assurance.

“It is essential that organizations create and implement governance processes to manage the use of AI generated code,” Gautam added.

“When it comes to coding, AI tools and human input will all play their part. Organizations gain the best of both worlds when they integrate these two together. Human Intelligence is essential to tailor coding to specific requirements, and AI can help experts increase their efficiency.”

George Fitzmaurice is a former Staff Writer at ITPro and ChannelPro, with a particular interest in AI regulation, data legislation, and market development. After graduating from the University of Oxford with a degree in English Language and Literature, he undertook an internship at the New Statesman before starting at ITPro. Outside of the office, George is both an aspiring musician and an avid reader.

-

Hackers are using LLMs to generate malicious JavaScript in real time

Hackers are using LLMs to generate malicious JavaScript in real timeNews Defenders advised to use runtime behavioral analysis to detect and block malicious activity at the point of execution, directly within the browser

-

Developers in India are "catching up fast" on AI-generated coding

Developers in India are "catching up fast" on AI-generated codingNews Developers in the United States are leading the world in AI coding practices, at least for now

-

A torrent of AI slop submissions forced an open source project to scrap its bug bounty program – maintainer claims they’re removing the “incentive for people to submit crap”

A torrent of AI slop submissions forced an open source project to scrap its bug bounty program – maintainer claims they’re removing the “incentive for people to submit crap”News Curl isn’t the only open source project inundated with AI slop submissions

-

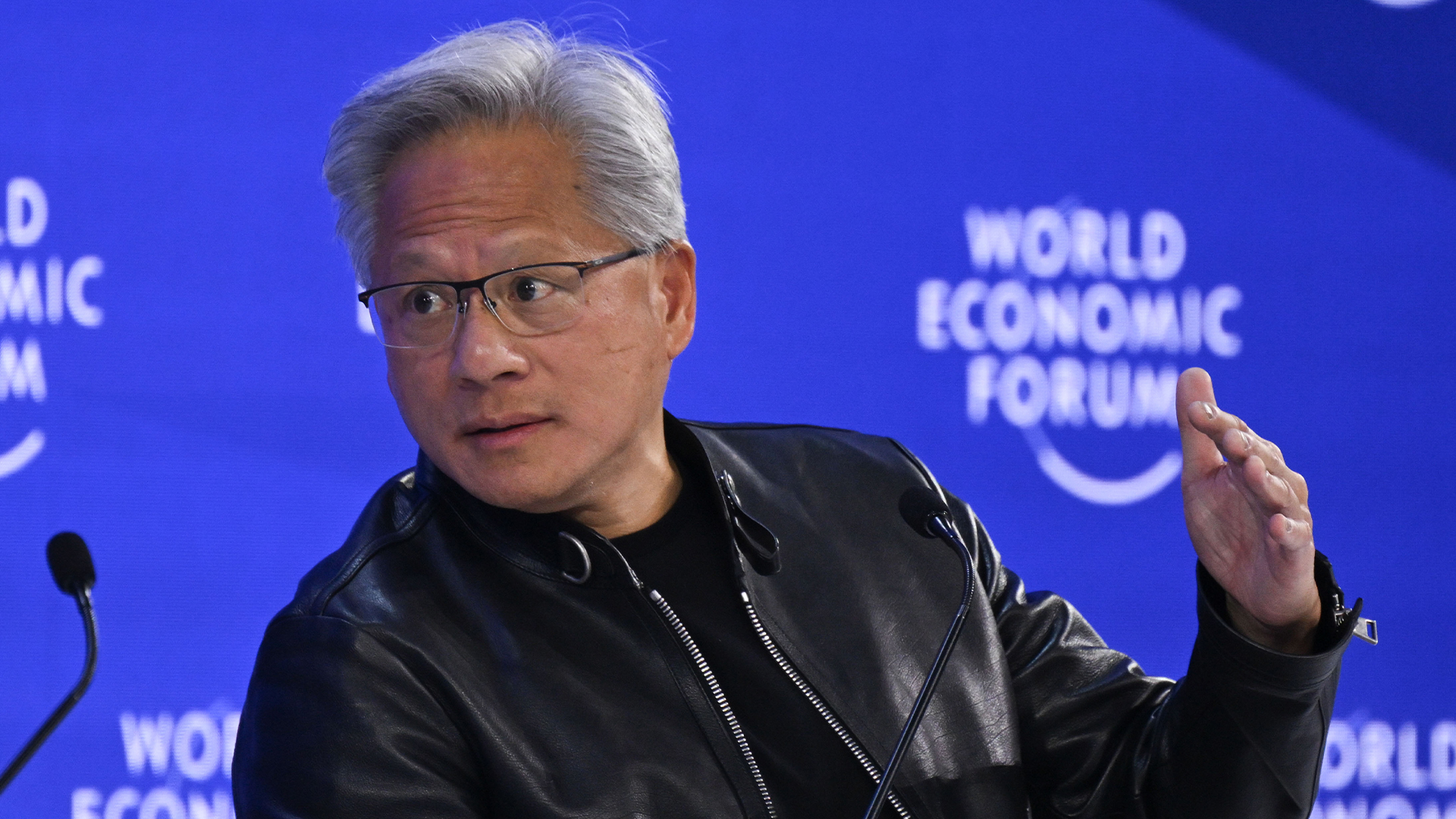

‘This is a platform shift’: Jensen Huang says the traditional computing stack will never look the same because of AI – ChatGPT and Claude will forge a new generation of applications

‘This is a platform shift’: Jensen Huang says the traditional computing stack will never look the same because of AI – ChatGPT and Claude will forge a new generation of applicationsNews The Nvidia chief says new applications will be built “on top of ChatGPT” as the technology redefines software

-

UK government launches industry 'ambassadors' scheme to champion software security improvements

UK government launches industry 'ambassadors' scheme to champion software security improvementsNews The Software Security Ambassadors scheme aims to boost software supply chains by helping organizations implement the Software Security Code of Practice.

-

So much for ‘trust but verify’: Nearly half of software developers don’t check AI-generated code – and 38% say it's because it takes longer than reviewing code produced by colleagues

So much for ‘trust but verify’: Nearly half of software developers don’t check AI-generated code – and 38% say it's because it takes longer than reviewing code produced by colleaguesNews A concerning number of developers are failing to check AI-generated code, exposing enterprises to huge security threats

-

Microsoft is shaking up GitHub in preparation for a battle with AI coding rivals

Microsoft is shaking up GitHub in preparation for a battle with AI coding rivalsNews The tech giant is bracing itself for a looming battle in the AI coding space

-

AI could truly transform software development in 2026 – but developer teams still face big challenges with adoption, security, and productivity

AI could truly transform software development in 2026 – but developer teams still face big challenges with adoption, security, and productivityAnalysis AI adoption is expected to continue transforming software development processes, but there are big challenges ahead

-

AI is creating more software flaws – and they're getting worse

AI is creating more software flaws – and they're getting worseNews A CodeRabbit study compared pull requests with AI and without, finding AI is fast but highly error prone

-

AI doesn’t mean your developers are obsolete — if anything you’re probably going to need bigger teams

AI doesn’t mean your developers are obsolete — if anything you’re probably going to need bigger teamsAnalysis Software developers may be forgiven for worrying about their jobs in 2025, but the end result of AI adoption will probably be larger teams, not an onslaught of job cuts.